1. Introduction

Questions are used to elicit information. But questions are also used to convey information. For example, if Harry wants to tell Rosa that John owns a Mercedes, he might use the following question:

(1) Did you know that John owns a Mercedes?

In uttering (1), Harry presupposes that John owns a Mercedes, and he intends to convey the content of this presupposition to Rosa. If Rosa has no information to the contrary, she will accommodate the presupposition and come to believe that John owns a Mercedes. In this way, utterances of questions and other non-declarative sentences are commonly used to convey information.

Now, suppose that Harry knows that John does not own a Mercedes. Would he then be lying to Rosa by uttering (1)? More generally, is it possible to lie by asking a question? The current debate on how lying should be defined suggests a negative answer to these questions. The most detailed treatment of the matter is to be found in several recent works by Andreas Stokke (cf. Stokke 2016; 2017; 2018). In these works, Stokke argues that questions cannot be lies:

[N]on-declarative utterances, such as questions and orders, can be misleading, even though they cannot be lies, since they do not say things. (Stokke 2018: 80)

The aim of this paper is to show that this stance is in conflict with how ordinary speakers judge examples of insincere questions.

The main focus of the paper is on judgements of ordinary speakers about utterances of questions (i.e., interrogative sentences) with believed-false presuppositions, such as Harry’s utterance of (1).1 With the help of three experiments, we show that ordinary speakers judge such utterances to be lies. These judgements are robust and remain so when the participants are given the possibility of classifying the utterances as misleading or deceiving without being a lie. The judgements contrast with judgements participants give about cases of misleading or deceptive behaviour. Further, they pattern with judgements participants make about corresponding declarative lies. These three experiments thus provide strong evidence for a folk view of lying that allows for the possibility of lying with questions, and in particular with questions carrying believed-false presuppositions.

In a fourth experiment, we then broaden the scope and consider other kinds of non-declarative insincerity. This experiment indicates that utterances of imperative, exclamative or optative sentences carrying believed-false presuppositions are also judged to be lies by ordinary speakers. The possibility of lying with non-declaratives is thus not confined to questions.

2. Lying, Misleading and Non-Declaratives

2.1. Lying and Misleading

To begin with, let us introduce the distinction between lying and misleading. Almost all theorists in the debate on how to define lying agree that lying is not the only form of linguistic insincerity and that many utterances are intentionally misleading without being lies (see, e.g., Carson 2006; Fallis 2009; Saul 2012: Ch. 1; Stokke 2016; 2018; Mahon 2016; for an opposing view, see Meibauer 2014). Consider the following example, which is discussed, for example, by Saul (2012: 70):

A dying woman asks the doctor whether her son is well. The doctor saw him yesterday, when he was well, but knows that he was later killed in an accident.

Version A:

(2) Doctor: Your son is fine.

Version B:

(3) Doctor: I saw your son yesterday and he was fine.

There is a widespread consensus that the two versions of the example are importantly different. By uttering (2), the doctor lies to the dying woman, whereas her utterance of (3) is misleading without being a lie.

There are several reasons why theorists take the distinction between lying and misleading to be important. To begin with, they assume that ordinary language speakers accept such a distinction, as is evident in the following passage by Saul:

The distinction between lying and merely misleading is an immensely natural one. It is clearly not a mere philosophers’ distinction, unfamiliar to ordinary life and of dubious significance. It is a distinction that ordinary speakers draw extremely readily, and generally care about, and a distinction recognised and accorded great significance in some areas of the law. (Saul 2012: vii)

Furthermore, many theorists hold that there are at least some cases in which it is worse to lie than to convey the same message by a misleading utterance. For example, it is widely held that, in the example of the dying woman, it is worse for the doctor to lie by uttering (2) than to mislead by uttering (3) (see, e.g., Adler 1997; Strudler 2010; Timmermann & Viebahn 2021; for opposing views see Williams 2002 and Saul 2012).

Given the importance that theorists place on the distinction between lying and misleading, it is unsurprising that recent definitions of lying are designed not to count putative cases of misleading as lies. Stokke (2016: 96; 2018: 31), for example, defends the following definition of lying, which is based on the notion of what is said:

Stokke’s definition of lying

A lies to B if and only if there is a proposition p such that:

(L1) A says that p to B, and

(L2) A proposes to make it common ground that p, and

(L3) A believes that p is false.

The second and third clauses of this definition capture the natural view that, in order to lie, speakers have to put forward something they believe to be false. The first clause makes sure that merely misleading utterances, such as (3), are not counted as lies. While by uttering (2), the doctor does say, according to Stokke’s narrow notion of what is said, that the dying woman’s son is fine, she does not do so by uttering (3).2 Rather, the doctor’s utterance of (3) merely conversationally implicates that the dying woman’s son is fine (cf. Grice 1975). The underlying idea of Stokke’s definition is thus that an utterance cannot be a lie if it merely conversationally implicates something the speaker believes to be false. Several recently proposed definitions of lying are similar in this respect: they require lies to be said, adopt a narrow construal of what is said and thus rule out the possibility of lying with conversational implicatures.3
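The three clauses can be written compactly as a single biconditional. The following is only a sketch in our own notation: the predicate names $\mathrm{Say}$, $\mathrm{Propose}$, $\mathrm{CG}$ and $\mathrm{Bel}$ are illustrative assumptions and are not taken from Stokke's text.

```latex
\mathrm{Lie}(A, B) \iff \exists p \, \bigl[\,
  \underbrace{\mathrm{Say}(A, B, p)}_{\text{(L1)}}
  \;\wedge\;
  \underbrace{\mathrm{Propose}\bigl(A, \mathrm{CG}(p)\bigr)}_{\text{(L2)}}
  \;\wedge\;
  \underbrace{\mathrm{Bel}_A(\neg p)}_{\text{(L3)}}
\,\bigr]
```

On this reading, the doctor's utterance of (2) satisfies all three conjuncts for $p$ = "the son is fine". Her utterance of (3) fails (L1) for that $p$, because the proposition is only implicated, and fails (L3) for the proposition that is actually said, because she believes it to be true.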

2.2. Non-Declarative Insincerity

Insincerity is not confined to declarative utterances. Utterances of questions, imperatives and exclamatives can be insincere, too. Here are two ways in which an utterance of a non-declarative sentence might be insincere:

On the one hand, speakers might utter non-declarative sentences and thereby presuppose and intend to convey that they have a certain conscious attitude, which in fact they do not have.4 For example, A might ask B whether B’s family is well, thereby presupposing and intending to convey to B that she wants to know the answer to her question. If A in fact does not want to know the answer to her question, her utterance is insincere. This kind of non-declarative insincerity might be called non-declarative attitudinal insincerity.

On the other hand, an utterance of a non-declarative sentence might be insincere because it carries a non-attitudinal presupposition the speaker intends to convey but believes to be false. This is what happens in the initial example of Harry and Rosa (“Did you know that John owns a Mercedes?”). Such cases are similar to the previous ones in that the speakers are insincere with respect to the attitude expressed by the kind of non-declarative sentence they utter (e.g., they do not want to know the answer to their question). However, the primary aim of such utterances is to convey disinformation to the hearer that does not concern their conscious attitudes (i.e., that is not about themselves). In uttering the question “Did you know that John owns a Mercedes?”, Harry’s primary aim is to convey disinformation about John, and not to make Rosa believe that Harry is interested in what Rosa knows. We will refer to such insincerity as non-declarative disinformative insincerity.5

Can either of these forms of non-declarative insincerity amount to lying? In particular, can insincere questions be lies? In a series of illuminating papers and a recent monograph, Stokke argues that these questions should be answered in the negative (see Stokke 2014 on non-declarative attitudinal insincerity and Stokke 2016: 115–19; 2017; 2018: 109–15 on non-declarative disinformative insincerity). Stokke provides two arguments for his view, which can be summed up as follows.

The first argument is based on an often-mentioned contrast between asserting and presupposing: Stokke holds that speakers cannot assert something by presupposing it, and he accepts the standard view that all lies are assertions (cf. Stokke 2017: 128). Taken together, these views imply that presuppositions cannot be used to lie. This in turn implies that a believed-false presupposition carried by a non-declarative sentence cannot be used to lie.

The second argument takes as its starting point Stokke’s view that presuppositions do not belong to what is said (cf. Stokke 2018: 123–24). According to Stokke’s definition, lying requires saying. But with insincere non-declarative utterances (of the kinds discussed above), speakers merely presuppose, but do not say, something they believe to be false. Hence, their utterances can be misleading, but they cannot be lies.

In addition to these arguments, Stokke occasionally appeals to intuitions. For instance, Stokke (2017: 132) discusses an example featuring a believed-false presupposition and argues that it should not be counted as a lie because “the verdict that [it] is a lie is not obviously evinced by judgements on the case”. And he holds that this contrasts with other cases, which will be counted as a lie “by any competent judge” (2017: 132).6

While non-declarative insincerity is not often explicitly discussed in the current debate on how to define lying, Stokke’s view fits well with what other theorists have said on the matter. For one thing, Stokke is not the only one to defend a definition of lying that is based on the notion of what is said, as we mentioned above: similar definitions have been proposed, for example, by Fallis (2009: 34), Carson (2010: 37), Saul (2012: 17) and García-Carpintero (2018). If Stokke is right that presuppositions do not belong to what is said, then these definitions also rule out the possibility of lying with non-declaratives (carrying believed-false presuppositions).7 Furthermore, theorists in the debate have remarked that it is impossible to lie with questions in general. For example, Stuart Green voices this opinion in the following passage:

Because they have no truth value, questions, commands, statements of opinion, greetings, apologies, christenings, and so forth are not capable of being lies, although they can certainly be misleading. (Green 2001: 163–64)

Such remarks have gone largely unchallenged, and, in line with this, non-declarative sentences are hardly ever mentioned as examples of lies.

Opposing views have been voiced on occasion: Leonard (1959), Meibauer (2014: 140) and Viebahn (2020) have argued that it is possible to lie with questions. However, Leonard and Meibauer accept a broad view of lying that does not contrast with misleading; their positions are thus not in direct opposition to Stokke’s (who accepts a narrow view of lying that does contrast with misleading). Viebahn argues that questions (and more generally utterances carrying believed-false presuppositions) can be lies even on a narrow view of lying.8

3. Lying with Non-Declaratives and the Folk View of Lying

3.1. The Relevance of the Folk View of Lying

Given that it is contested whether it is possible to lie with questions, one might ask what ordinary speakers think about the matter. After all, theorists proposing definitions of lying aim for a definition that fits with how ordinary speakers employ the term ‘lying’. This aim is explicitly acknowledged, for example, by Carson (2006: 301), Fallis (2009: 32) and Saul (2012: vii), and it is attested by Arico and Fallis in the following passage:

[The] participants in this debate are not disagreeing about the best way to define a highly technical notion or theoretical construct specific to debates among philosophers and other academics…; nor is each theorist merely laying out his own idiosyncratic conception of lying. (Arico & Fallis 2013: 794)

Even though Stokke does not explicitly address the matter, he seems to adopt a similar approach. Above, we noted that Stokke (2017: 132) appeals to what “any competent judge” would say in arguing against the lie-status of an example featuring a believed-false presupposition. Moreover, in defending the view that lying requires believed falsity, rather than actual falsity, Stokke (2018: 33) invokes empirical data on folk intuitions, and he conjectures that “most people, upon reflection, agree that the mere fact that what was said is true does not imply that no lie was told”. This suggests that Stokke, too, sees a role for views of ordinary speakers in assessing definitions of lying.9

What is more, an approach that respects the views of ordinary speakers seems to make good sense, as Arico and Fallis (2013: 794–95) argue. Firstly, lying is an everyday phenomenon. Ordinary speakers produce and are confronted with lies on a daily basis. Thus, there is good reason to believe that they have robust and reliable intuitions about lying and misleading. Secondly, an important application of definitions of lying is in ethical debates about the matter (see Section 2.1). But in such debates, philosophers aim to uncover ethical properties of lying as it is ordinarily understood, and not of a technical notion introduced by theorists in the definitional debate.

The view that the folk view of lying deserves to be empirically investigated has gained recognition in recent years, and there is a growing number of studies on the matter.10 However, there are still questions about the folk view of lying that are under-examined. One of those under-examined questions is whether ordinary speakers allow for the possibility of lying with non-declarative sentences. To our knowledge, there are no empirical studies on this matter, and so our paper is an attempt to begin to fill this gap.11

3.2. Experiments and Predictions

In the following, we will present four experiments designed to test whether the ordinary concept of lying allows for lies told with utterances of non-declarative sentences. For the first three experiments, we have narrowed the focus in two ways: firstly, by focussing on questions (interrogative sentences) as a subclass of non-declaratives; and secondly, by concentrating on questions featuring the second kind of insincerity discussed above, namely non-declarative disinformative insincerity. For ease of reference, we will label such questions disinformative questions. The fourth experiment then addresses non-declaratives of various kinds: not only utterances of questions, but also utterances of imperative, exclamative and optative sentences carrying believed-false presuppositions.12

There are several reasons why we chose questions as the starting point and main focus of the investigation. To begin with, questions form a central class of non-declaratives. If it is possible to lie with questions, it is clearly possible to lie with non-declaratives. Secondly, questions are commonly used to convey information through presuppositions they carry. One reason for this is communicative economy: it simply takes less effort to use (1) than to achieve the same effect with two sentences (e.g., “John owns a Mercedes. Did you know that?”).13 Thirdly, questions are promising candidates to count as lies. Note how similar (1) and (4) are with respect to their communicative upshot (in the situation described at the beginning of this paper, in which the speaker intends to convey that John owns a Mercedes, which he believes to be false):

(1) Did you know that John owns a Mercedes?

(4) John owns a Mercedes.

Given this similarity, it would indeed be surprising if ordinary speakers classified (4) as a lie but (1) as a non-lie.

This final point goes against what Stokke has said about disinformative questions. If his definition maps ordinary usage adequately, we should expect the following judgements about cases involving disinformative questions:

Prediction 1: Ordinary speakers do not consider disinformative questions as lies, but rather as deceptive or misleading.

If ordinary speakers are presented with utterances such as (1), and if they are given the option of classifying Harry’s question as lie, as misleading or as deceiving, they should be willing to call it misleading and deceiving, but they should be unwilling to call it a lie.14

Prediction 2: Verdicts about disinformative questions pattern with clear examples of misleading and deceiving.

There are certain cases in the literature that are seen as uncontroversial and clear examples of misleading (without lying) or deceiving (without lying). Among these cases are misleading utterances such as (3), but also examples in which the deception has nothing to do with the content of an utterance, such as the use of a fake accent or dressing up. Stokke’s definition predicts that ordinary speakers’ verdicts about insincere questions should pattern with such clear examples of misleading or deceiving (and not with clear examples of lies).

Prediction 3: While ordinary speakers judge disinformative questions to be non-lies, they judge the declarative counterparts of such questions to be lies.

For each of the disinformative questions tested, there is a declarative counterpart that has (almost) the same communicative upshot. For example, (1) has (4) as its declarative counterpart. Stokke’s definition predicts that these declarative counterparts are judged to be lies, while the original disinformative questions are judged to be non-lies.

We conducted three experiments to test these predictions. Experiment 1 tests whether ordinary speakers consider disinformative questions as lies or rather as misleading and deceiving. The results of this experiment suggest that ordinary speakers do in fact consider such questions as instances of lying, contrary to Prediction 1.

One possible explanation of the results of Experiment 1 is that ordinary speakers have an undifferentiated concept of lying and simply call every form of deceptive behaviour or misleading a lie. To evaluate the plausibility of this explanation and to empirically test Prediction 2, we conducted Experiment 2. This experiment pits disinformative questions against clear cases of misleading and deceiving. Its results show that ordinary speakers do not have an undifferentiated concept of lying. Rather, ordinary speakers do distinguish deceiving and misleading from lying, and they group the previously tested questions with clear lies, and not with clear cases of deceiving and misleading.

We then conducted a third experiment to compare verdicts about disinformative questions with verdicts about their direct declarative counterparts. This allows us to compare verdicts about declarative and non-declarative insincerity, while holding all other factors of the tested cases constant. The results of Experiment 3 speak against Prediction 3: ordinary speakers classify both disinformative questions and their declarative counterparts as lies, with the more prototypical declarative lies receiving only slightly higher lie ratings than the insincere questions.

Having found strong evidence that ordinary speakers consider disinformative questions to be lies, we decided to conduct a fourth experiment to test judgements about cases involving other kinds of non-declarative sentences. As mentioned, the communicative upshot of (1) and (4) is very similar (in the situation described at the beginning of the paper), which is why we expected both to be judged as lies:

(1) Did you know that John owns a Mercedes?

(4) John owns a Mercedes.

For the same reason, we expected ordinary speakers to judge utterances of imperative, exclamative and optative counterparts of (1), such as (5), (6) and (7), to be lies (in the same situation):

(5) Keep in mind that John owns a Mercedes!

(6) What a nice Mercedes John owns!

(7) If only John would let me use the Mercedes he owns!

The results of the first three experiments thus led to the following prediction:

Prediction 4: Ordinary speakers consider utterances of disinformative non-declaratives (including questions, imperatives, exclamatives and optatives) as lies.

If ordinary speakers are presented with utterances of non-declaratives carrying a presupposition the speaker believes to be false but intends to convey, such as (1), (5), (6) or (7), they should be willing to classify them as lies, even if they are given the option of classifying them as misleading or as deceiving.

Our fourth experiment tested this prediction and compared judgements about cases involving the four different kinds of non-declarative sentences just mentioned. The results of Experiment 4 confirm Prediction 4: ordinary speakers classify disinformative non-declaratives of all four kinds (questions, imperatives, exclamatives and optatives) as lies. The folk concept of lying thus allows for non-declarative lies beyond questions.

4. Experiment 1: Disinformative Questions as Lies

The first experiment examines whether people consider disinformative questions to be lies. To this end, we presented participants with eight test and two control cases. To make sure that the results are robust across measures, we used two different kinds of response options (binary versus 7-point Likert item). We also included a condition in which we not only asked whether the agent was lying, but also whether she misled or deceived the addressee. This way, we are able to distinguish whether participants only judge agents to be lying for lack of a more fitting response option, or whether they genuinely consider an utterance as an instance of lying.

4.1. Participants

In this and the following experiments, participants were recruited on Prolific Academic (Palan & Schitter 2017) and completed an online survey implemented in Unipark. All participants were required to be at least 18 years old and native English speakers (this also holds for all following experiments). A total of 512 participants started the survey, and the data of 471 were included in the analysis (41 were excluded for not finishing the experiment or failing a simple attention check). Mean age was 27.6 years; 36.5% were male and 63.5% female. Participants received £0.50 for an estimated 5 minutes of their time (£6/h).

4.2. Design, Procedure and Materials

We implemented a 2 (response options: Binary vs. 7-point Likert item) × 2 (questions asked: Lie Only vs. Lie Deceive Mislead) × 10 (scenario: Exam, Crowd, Drunk, Mercedes, Homeless, Claire’s Party, Fight, Baby, Paul’s Party, Meeting) mixed design, with response options and questions asked as between-subject factors, and scenario tested within-subjects. Participants were randomly assigned to one of the four between-subject conditions.

Participants first read general instructions to familiarise them with the task and the response formats. They were then presented with the ten scenarios in randomised order. On the final page, participants were asked about their gender, age, and were also presented with a simple transitivity task (“If Peter is taller than Alex, and Alex is taller than Max, who is the smallest among them?”).
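The assignment logic of this mixed design can be illustrated with a short sketch. It is not the Unipark implementation used in the study: the function name, the dictionary keys and the seed are hypothetical, and simple random draws stand in for whatever balancing the survey software applied.

```python
import random

# Between-subject factors (2 x 2) and the within-subject scenario factor (10 levels)
RESPONSE_OPTIONS = ["Binary", "Likert"]
QUESTIONS_ASKED = ["Lie Only", "Lie Deceive Mislead"]
SCENARIOS = [
    "Exam", "Crowd", "Drunk", "Mercedes", "Homeless",
    "Claire's Party", "Fight", "Baby", "Paul's Party", "Meeting",
]


def assign_participant(rng):
    """Draw one between-subject cell and a randomised scenario order (hypothetical helper)."""
    return {
        "response_options": rng.choice(RESPONSE_OPTIONS),
        "questions_asked": rng.choice(QUESTIONS_ASKED),
        # Every participant sees all ten scenarios, each in a fresh random order
        "scenario_order": rng.sample(SCENARIOS, k=len(SCENARIOS)),
    }


rng = random.Random(2024)  # hypothetical seed, only for reproducibility of the sketch
session = assign_participant(rng)
print(session)
```

Each call yields one of the four between-subject cells together with a permutation of the ten scenarios, which mirrors the structure described above.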

In the Lie Only conditions participants were only asked “Did [name of agent] lie to [name of addressee]” in each of the ten scenarios. In contrast, in Lie Deceive Mislead they were additionally asked both “Did [name of agent] mislead [name of addressee]” and “Did [name of agent] deceive [name of addressee]” for each case. All three questions were presented on the same page in random order. In the Binary conditions, the response options were “Yes”/“No”, and in the Likert-item conditions, the 7-point response scale ranged from “Definitely did not” to “Definitely did” (with no labels for the response options between these endpoints).

The eight test vignettes all included an agent who intended to deceive the addressee by posing a question to her. This question included a presupposition that the agent believed to be false and that was also actually false. Moreover, the addressee always ended up with the false belief the agent intended to cause in the addressee. As control cases, we also included a vignette featuring a clear lie (phrased as a declarative sentence) and a vignette in which the agent both uttered a true declarative sentence and was fully sincere. Here are the wordings of three vignettes (one test case and the two control cases). All other vignettes can be found in the Appendix:

Exam (test case, disinformative question):

Anna and Billy are classmates. Billy has missed a few days of school because he was ill. Anna wants Billy to think they have a maths exam tomorrow, although there in fact is no such exam. She texts him: “Have you revised for the maths exam tomorrow?” Billy comes to believe that there will be a maths exam tomorrow.

Paul’s Party (control, clear lie):

Dennis is going to Paul’s party tonight. He has a long day of work ahead of him before that, but he is very excited and can’t wait to get there. Dennis’s annoying friend Rebecca comes up to him and starts talking about the party. Dennis is fairly sure that Rebecca won’t go unless she thinks he’s going, too. Rebecca asks Dennis: “Are you going to Paul’s party?” Dennis replies: “No, I’m not going to Paul’s party.” Rebecca comes to believe that Dennis is not going to Paul’s party.15

Meeting (control, clear non-lie):

Zach and Sarah are on the investigative unit of a newspaper. Sarah has just returned from an illness and asks Zach when the next team meeting will be, as team meetings for the investigative unit are never shared by email. Zach knows that the meeting is scheduled for 3pm on Thursday and replies: “The meeting is at 3pm on Thursday.” Sarah comes to believe that the meeting is at 3pm on Thursday.

4.3. Software, Packages, and Data Availability

The statistical analyses in this and all following experiments were conducted using R (R Core Team 2019) and RStudio (RStudio Team 2016). The following packages were used for data transformation and analysis throughout the paper: effsize (Torchiano 2020), reshape2 (Wickham 2007), ez (Lawrence 2016), pastecs (Grosjean & Ibanez 2018), multcomp (Hothorn, Bretz, & Westfall 2008), tidyr (Wickham & Henry 2019). All figures were also created with R and RStudio, using the following packages: ggplot2 (Wickham 2016), showtext (Qiu 2020), colorspace (Zeileis, Hornik, & Murrell 2009), ggpubr (Kassambara 2019). Raw data for all experiments and code for all statistical analyses can be found at https://osf.io/npwbt/.

4.4. Results

Ratings on the Likert-scale conditions were counted as indicating lies when the mean lie rating for a vignette across subjects was significantly above the midpoint of 4 (and as indicating non-lies otherwise). Answers in the binary condition were counted as indicating lies when the distribution of Yes- and No-answers differed significantly from chance (with more Yes- than No-answers), and as indicating non-lies otherwise.
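The decision rule for the binary condition can be sketched as follows. This is a Python illustration, not the authors' R code; the function names are hypothetical, and the 5% significance threshold is an assumption, since the text does not state the alpha level. The example counts (110 Yes, 13 No) are those reported for Exam in the Lie Only condition.

```python
import math


def chi_square_vs_chance(n_yes, n_no):
    """Goodness-of-fit test of Yes/No counts against a 50/50 split (df = 1)."""
    expected = (n_yes + n_no) / 2
    chi2 = ((n_yes - expected) ** 2 + (n_no - expected) ** 2) / expected
    # For df = 1 the chi-square upper-tail probability equals erfc(sqrt(chi2 / 2))
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


def counts_as_lie(n_yes, n_no, alpha=0.05):
    """Apply the rule: significant departure from chance with more Yes than No answers."""
    _, p = chi_square_vs_chance(n_yes, n_no)
    return p < alpha and n_yes > n_no


chi2, p = chi_square_vs_chance(110, 13)
print(round(chi2, 2), p, counts_as_lie(110, 13))
```

With these counts the statistic comes out at about 76.50, which agrees with the value reported for that cell. For the Meeting control (10 Yes, 113 No), the distribution also differs from chance, but in the opposite direction, so the rule classifies it as a non-lie.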

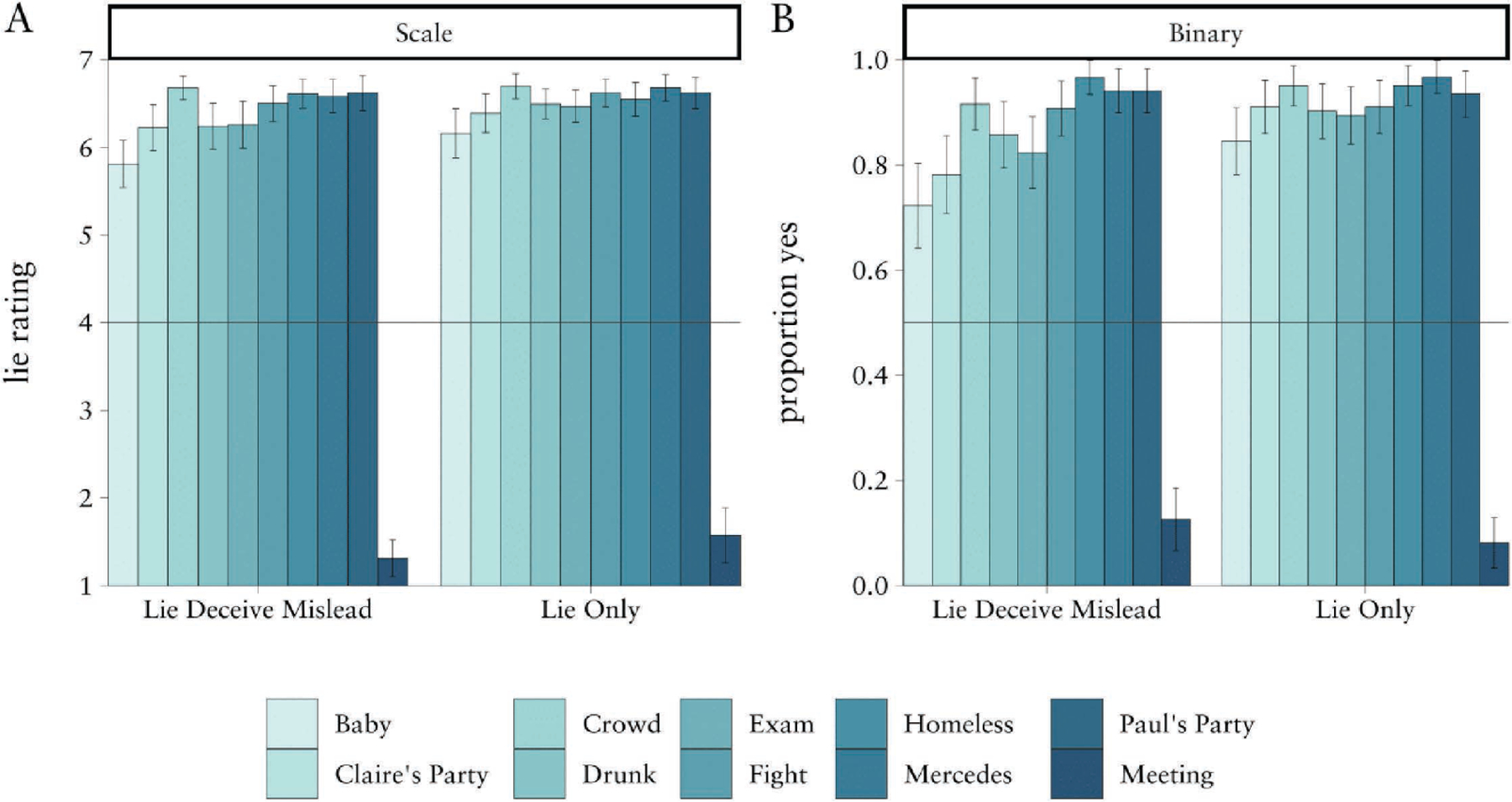

To foreshadow the overall pattern of results, which are summarised in Figure 1: Irrespective of question format and largely irrespective of the presence of additional questions about deceiving and misleading, participants judged that the agent in all eight test cases lied by asking a disinformative question, thereby challenging Prediction 1, according to which such questions should be judged as misleading or deceiving, but not as lies. We proceed to a detailed discussion of our findings.

Figure 1: Panel A shows the mean agreement to the Lie-statement per question condition (Lie Deceive Mislead vs. Lie Only) and vignette for the Likert-item condition (N = 229). Panel B shows the proportion of participants who agreed to the Lie-statement in the Binary-condition (N = 242). Error bars indicate 95% confidence intervals.

The detailed numbers for the two Binary conditions, in which participants could answer with “Yes” or “No” to the question of whether the agent lied, can be found in Table 1. In all eight test scenarios the question asked by the agent was considered as an instance of lying by the majority of participants (all p’s <.001 for chi-square tests against 50%). Moreover, the lie ratings for six out of the eight test cases did not differ significantly between the Lie Only and Lie Deceive Mislead condition (and when the p-values are adjusted for multiple comparisons using Bonferroni correction, none of the eight comparisons is significant). Thus, for the Binary scale, providing people with three instead of just one question and thereby providing the opportunity to indicate that a question is deceiving and misleading, but not a lie, did not change their judgements about whether the agent lied by asking a disinformative question.
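The between-condition comparison and the Bonferroni adjustment can be illustrated with a small sketch. It assumes a 2 × 2 chi-square test with Yates' continuity correction. The text does not name the exact test, but the corrected statistic reproduces the reported values for Exam and Claire's Party, so the assumption seems reasonable. Function names are hypothetical.

```python
import math


def chi_square_2x2_yates(yes_a, no_a, yes_b, no_b):
    """Chi-square test of independence for a 2 x 2 table with continuity correction."""
    n = yes_a + no_a + yes_b + no_b
    row_a, row_b = yes_a + no_a, yes_b + no_b
    col_yes, col_no = yes_a + yes_b, no_a + no_b
    # Continuity correction, floored at zero so tiny differences yield a statistic of 0
    diff = max(0.0, abs(yes_a * no_b - no_a * yes_b) - n / 2)
    chi2 = n * diff ** 2 / (row_a * row_b * col_yes * col_no)
    p = math.erfc(math.sqrt(chi2 / 2))  # upper tail of chi-square with df = 1
    return chi2, p


def bonferroni(p, n_tests):
    """Bonferroni-adjusted p-value, capped at 1."""
    return min(1.0, p * n_tests)


# Counts: Lie Only (Yes, No) followed by Lie Deceive Mislead (Yes, No)
chi2_exam, p_exam = chi_square_2x2_yates(110, 13, 98, 21)
chi2_claire, p_claire = chi_square_2x2_yates(112, 11, 93, 26)
p_claire_adj = bonferroni(p_claire, 8)  # eight test cases are compared
print(round(chi2_exam, 2), round(chi2_claire, 2), round(p_claire_adj, 3))
```

Under these assumptions, Claire's Party yields the largest unadjusted difference, yet its p-value no longer falls below .05 once it is multiplied by the eight comparisons, which is the pattern described above.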

Table 1: Lie judgements in the binary conditions and the comparison of the Lie Only (only the lie-question was asked) and the Lie Deceive Mislead (lie-, deceive-, and mislead-questions were asked) condition. The reported p-values are not adjusted for multiple comparisons.

| Scenario | Condition | n Yes | n No | % Yes | χ² (df = 1), p: Yes vs. No | χ² (df = 1), p: between conditions |
|---|---|---|---|---|---|---|
| Exam | Lie Only | 110 | 13 | 89.4 | 76.50, <.001 | 1.96, n.s. |
| | Lie Deceive Mislead | 98 | 21 | 82.4 | 49.82, <.001 | |
| Crowd | Lie Only | 117 | 6 | 95.1 | 100.17, <.001 | 0.71, n.s. |
| | Lie Deceive Mislead | 109 | 10 | 91.6 | 82.36, <.001 | |
| Drunk | Lie Only | 111 | 12 | 90.2 | 79.68, <.001 | 0.79, n.s. |
| | Lie Deceive Mislead | 102 | 17 | 85.7 | 60.71, <.001 | |
| Mercedes | Lie Only | 119 | 4 | 96.7 | 107.52, <.001 | 0.45, n.s. |
| | Lie Deceive Mislead | 112 | 7 | 94.1 | 92.65, <.001 | |
| Homeless | Lie Only | 117 | 6 | 95.1 | 100.17, <.001 | 0.07, n.s. |
| | Lie Deceive Mislead | 115 | 4 | 96.6 | 103.54, <.001 | |
| Claire’s Party | Lie Only | 112 | 11 | 91.1 | 82.94, <.001 | 6.81, .009 |
| | Lie Deceive Mislead | 93 | 26 | 78.2 | 37.72, <.001 | |
| Fight | Lie Only | 112 | 11 | 91.1 | 82.94, <.001 | 0.00, n.s. |
| | Lie Deceive Mislead | 108 | 11 | 90.8 | 79.07, <.001 | |
| Baby | Lie Only | 104 | 19 | 84.6 | 58.74, <.001 | 4.71, .03 |
| | Lie Deceive Mislead | 86 | 33 | 72.3 | 23.61, <.001 | |
| Paul’s Party (Control) | Lie Only | 115 | 8 | 93.5 | 93.08, <.001 | 0.00, n.s. |
| | Lie Deceive Mislead | 112 | 7 | 94.1 | 92.65, <.001 | |
| Meeting (Control) | Lie Only | 10 | 113 | 8.1 | 86.25, <.001 | 0.87, n.s. |
| | Lie Deceive Mislead | 15 | 104 | 12.6 | 66.56, <.001 | |

Do we find any differences between one and three questions when we use a 7-point Likert item instead of a binary scale? In all eight test scenarios, the question was considered to be an instance of lying by the majority of participants (see Table 2, all p’s <.001 for one-sample t-test against the midpoint of the scale, 4). Moreover, the lie ratings did not differ significantly between the Lie Only and Lie Deceive Mislead condition for any of the eight test cases. All test cases were also judged as clearly deceiving and misleading.
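The Likert-item statistics can be approximated from the published summary values. The sketch below is a Python illustration rather than the authors' R analysis. It assumes a one-sample t-test against the midpoint with Cohen's d computed as the mean difference divided by the standard deviation, and Welch's unequal-variance t-test for the comparison between conditions. The inputs are the rounded Exam values (Lie Only: M = 6.47, SD = 0.99, n = 113; Lie Deceive Mislead: M = 6.26, SD = 1.45, n = 116), so small deviations from the reported statistics are to be expected.

```python
import math


def one_sample_t(mean, sd, n, midpoint=4.0):
    """One-sample t statistic against the scale midpoint, with df and Cohen's d."""
    t = (mean - midpoint) / (sd / math.sqrt(n))
    d = (mean - midpoint) / sd
    return t, n - 1, d


def welch_t(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    var_a, var_b = sd_a ** 2 / n_a, sd_b ** 2 / n_b
    t = (mean_a - mean_b) / math.sqrt(var_a + var_b)
    df = (var_a + var_b) ** 2 / (var_a ** 2 / (n_a - 1) + var_b ** 2 / (n_b - 1))
    return t, df


t_mid, df_mid, d_mid = one_sample_t(6.47, 0.99, 113)
t_between, df_between = welch_t(6.47, 0.99, 113, 6.26, 1.45, 116)
print(round(t_mid, 2), df_mid, round(d_mid, 2))
print(round(t_between, 2), round(df_between, 1))
```

The fractional degrees of freedom reported for the between-condition tests are what one would expect from a Welch test, which is why that variant is assumed here.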

Table 2: Lie judgements in the Likert-item conditions (t-tests against scale midpoint) and the comparison of the Lie Only (L; only the lie-question was asked) and the Lie Deceive Mislead (LDM; lie-, deceive-, and mislead-questions were asked) conditions. The reported p-values are not adjusted for multiple comparisons.

| Scenario | Condition | M (SD) | t (df) and p, t-test against midpoint | d | t (df) and p, t-test between conditions |
|---|---|---|---|---|---|
| Exam | L | 6.47 (0.99) | 26.47 (112), <.001 | 2.50 | 1.28 (203.62), 0.20 |
| | LDM | 6.26 (1.45) | 16.76 (115), <.001 | 1.56 | |
| Crowd | L | 6.70 (0.77) | 37.44 (112), <.001 | 3.52 | 0.18 (226.2), 0.86 |
| | LDM | 6.68 (0.74) | 38.95 (115), <.001 | 3.62 | |
| Drunk | L | 6.50 (0.93) | 28.62 (112), <.001 | 2.69 | 1.60 (197.8), 0.11 |
| | LDM | 6.24 (1.43) | 16.88 (115), <.001 | 1.57 | |
| Mercedes | L | 6.68 (0.80) | 35.42 (112), <.001 | 3.33 | 0.78 (216.1), 0.44 |
| | LDM | 6.59 (1.04) | 26.81 (115), <.001 | 2.49 | |
| Homeless | L | 6.55 (1.04) | 26.18 (112), <.001 | 2.46 | −0.50 (220.37), 0.62 |
| | LDM | 6.61 (0.89) | 31.53 (115), <.001 | 2.93 | |
| Claire’s Party | L | 6.39 (1.17) | 21.74 (112), <.001 | 2.05 | 0.96 (220.51), 0.34 |
| | LDM | 6.22 (1.43) | 16.79 (115), <.001 | 1.56 | |
| Fight | L | 6.62 (0.85) | 32.82 (112), <.001 | 3.09 | 0.91 (214.52), 0.36 |
| | LDM | 6.50 (1.12) | 24.15 (115), <.001 | 2.24 | |
| Baby | L | 6.16 (1.51) | 15.21 (112), <.001 | 1.43 | 1.77 (226.34), 0.08 |
| | LDM | 5.81 (1.47) | 13.28 (115), <.001 | 1.23 | |
| Paul’s Party (Control) | L | 6.62 (0.96) | 29.09 (112), <.001 | 2.74 | −0.01 (224.83), 0.99 |
| | LDM | 6.62 (1.08) | 26.02 (115), <.001 | 2.42 | |
| Meeting (Control) | L | 1.58 (1.67) | −15.40 (112), <.001 | −1.45 | 1.40 (196.72), 0.16 |
| | LDM | 1.31 (1.14) | −25.46 (115), <.001 | −2.36 | |
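The midpoint tests in this table can be approximately re-derived from the reported means and standard deviations alone. The following is a minimal sketch, not the authors' analysis script: the function name `one_sample_from_summary` is hypothetical, and the group size is assumed to be df + 1 (113 for the Lie Only condition). Because the inputs are rounded to two decimals, the output matches the tabled values only approximately.

```python
import math

def one_sample_from_summary(mean, sd, n, midpoint=4.0):
    """Return (t, d) for a one-sample t-test against `midpoint`,
    computed from summary statistics only (hypothetical helper)."""
    diff = mean - midpoint
    t = diff / (sd / math.sqrt(n))  # t = (M - midpoint) / (SD / sqrt(n))
    d = diff / sd                   # Cohen's d for a one-sample design
    return t, d

# Exam, Lie Only condition: M = 6.47, SD = 0.99, df = 112, so n = 113 (assumed).
t, d = one_sample_from_summary(6.47, 0.99, 113)
print(f"t = {t:.2f}, d = {d:.2f}")
```

Small deviations from the reported t = 26.47 and d = 2.50 are expected, since the published means and standard deviations are themselves rounded.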

4.5. Discussion

Does the concept of lying as it is held by ordinary speakers include disinformative questions? The aim of Experiment 1 was to answer that question and, in doing so, to test the prediction of Stokke’s definition of lying, according to which utterances featuring disinformative questions cannot be lies. The results demonstrate that ordinary speakers do count such questions as lies. Across all eight test cases, participants judged that the speaker lied to the addressee when uttering a disinformative question. These results hold for both the 7-point rating scale and the binary scale. Importantly, giving people the possibility to classify the utterance as a case of misleading or deceiving did not significantly reduce lie ratings in the majority of cases, and a clear majority judged the utterance to be a lie in every test case.

5. Experiment 2: Disinformative Questions Compared to False Implicatures and Deceptive Behaviour

The findings of the first experiment suggest that ordinary speakers consider disinformative questions to be lies. A potential explanation for this finding holds that ordinary speakers have an undifferentiated concept of lying and that they hence do not distinguish between lying on the one hand, and misleading utterances or deceptive behaviour on the other. If this turns out to be correct, the folk concept of lying would be much broader than the philosophical concept of lying (as outlined in Section 2). An alternative explanation holds that the folk concept is not much broader than the philosophical concept. Laypeople do distinguish between lying and other forms of deception, but they nonetheless consider disinformative questions to be lies.

Experiment 2 aims to decide between these two explanations by adding utterances and behaviours that philosophers consider to be uncontroversial cases of deceiving (without lying) or misleading (without lying). If the first explanation is correct, we should expect that people do not differentiate between cases involving disinformative questions and cases usually considered as clear examples of deceiving and misleading. Both should be clearly judged as lies. If, however, people only clearly judge disinformative questions to be lies and differentiate them from examples considered as clear cases of deceiving and misleading, the first explanation is unlikely, and we have found additional evidence for the claim that the ordinary concept of lying does allow for the possibility of lying with disinformative questions – without being overly broad.

5.1. Participants

Participants were again recruited using Prolific Academic (Palan & Schitter 2017), completed an online survey implemented in Unipark and were required to be at least 18 years old, native English speakers and to not have participated in our first experiment. 243 participants started the survey and the data of 214 were included in the analysis (27 were excluded for not finishing the experiment or failing an attention check in the form of a simple transitivity task). Mean age was 35.5 years, 34% were male and 66% female. Participants received £0.50 for an estimated 5 minutes of their time (£6/h).

5.2. Design, Procedure and Materials

Participants were randomly assigned to one of two conditions in a 2 (questions asked: Lie Only vs. Lie Deceive Mislead) * 14 (scenario: False implicature (Therapist, Car, Politician, Trip) vs. Deceptive behaviour (Neighbour, Football, Accent, Actor) vs. Questions (Crowd, Mercedes, Homeless, Fight) vs. Control (Paul’s Party, Meeting)) mixed design. The question factor was manipulated between-subjects, while the scenario factor was manipulated within-subjects.

Participants first read general instructions to familiarise them with the task and the response options. They were then presented with the fourteen scenarios in randomised order. On the final page, participants were asked about their gender and age and were presented with a simple attention check. In the Lie Only condition, participants were again asked only “Did [name of agent] lie to [name of addressee]?”, whereas in the Lie Deceive Mislead condition they were additionally asked both “Did [name of agent] mislead [name of addressee]?” and “Did [name of agent] deceive [name of addressee]?”, in randomised order. The response options were “Yes” and “No”.

The twelve test vignettes all included an agent who intended to deceive the addressee by his/her utterance or behaviour. The addressee always ended up with the false belief the agent intended to cause in the addressee. The twelve test vignettes consisted of four cases usually considered as misleading without lying through False implicatures (taken from the literature, slightly adapted for the study), four Deceptive behaviours that theorists do not consider as lying (in some cases taken or adapted from the literature), and four Disinformative questions that each included a believed-false (and actually false) presupposition (these cases were taken from the previous experiment). Additionally, participants were presented with the same control cases (Paul’s Party, Meeting) as in Experiment 1. Here are examples for all test categories (see appendix for full set of vignettes):

False implicatures

Therapist:

A physical therapist is treating a woman who is not in touch with her son anymore. The woman knows that her son is seeing the same therapist and asks the therapist whether her son is alright. The therapist saw the son yesterday (at which point he was fine), but knows that shortly after their meeting he was hit by a truck and killed. The therapist doesn’t think it is the right moment for the woman to find out about her son’s death and so, for now, wants her to think that her son is fine. In response to the woman’s question whether her son is alright he says: “I saw him yesterday and he was happy and healthy.” The woman comes to believe that her son is fine.16

Deceptive behaviour

Neighbour:

Marc is annoyed by his neighbour Robert who rings Marc’s doorbell almost every day to borrow all kinds of things. Marc wants to make Robert believe that he is leaving on a journey so that Robert will leave him alone for a few days. Realizing that Robert is observing him through the window, Marc packs several bags, calls a taxi and makes sure that Robert sees him leaving with the taxi. Robert comes to believe that Marc is leaving on a journey and leaves him alone for a few days.17

Disinformative question

Homeless:

A homeless person approaches a stranger to ask him for money. Although he has no children, he asks the stranger: “Could you spare one pound for my ill son?” The stranger comes to believe that the homeless person has an ill son and gives him some change.

5.3. Results

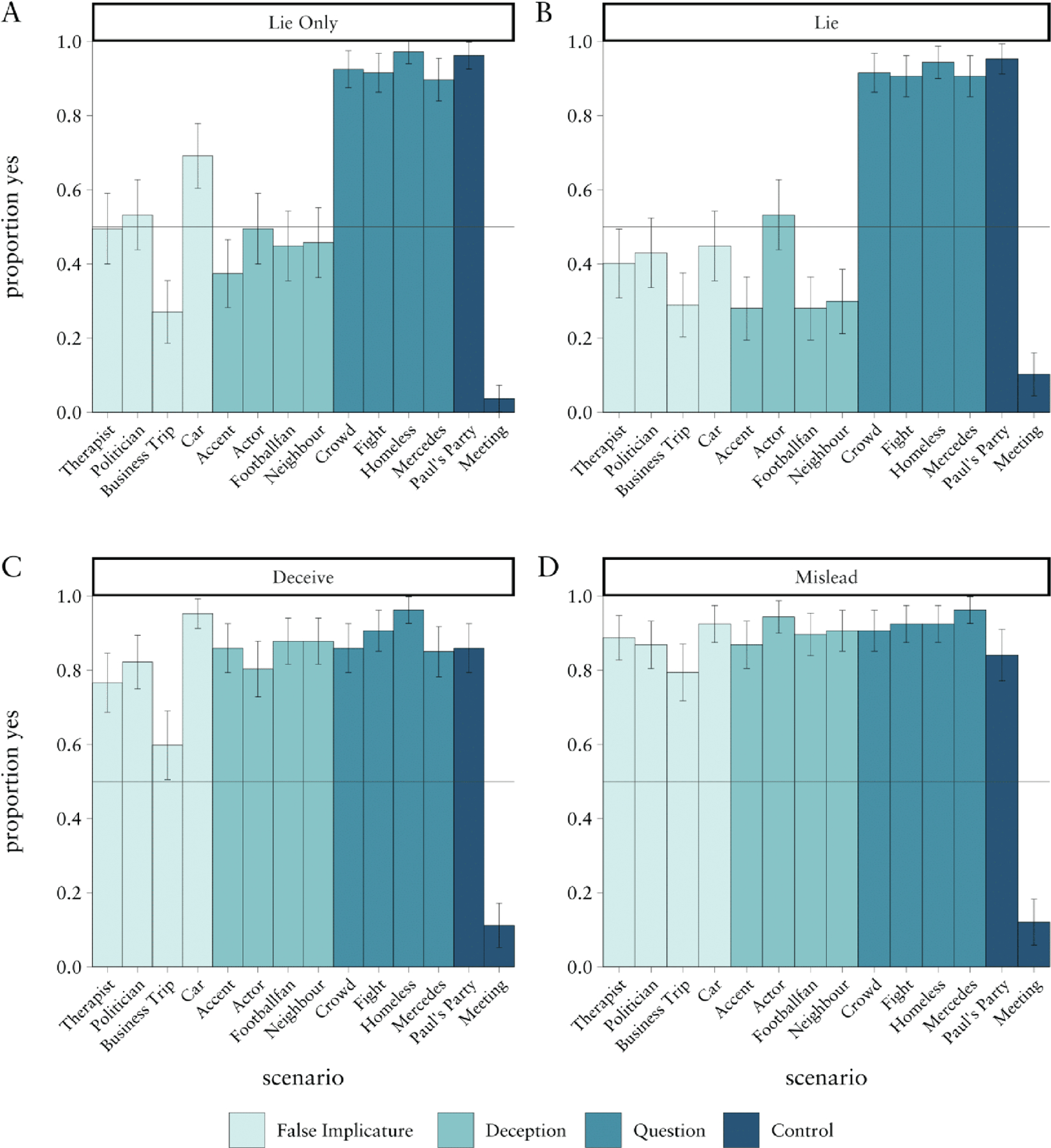

The results per scenario are summarised in Table 3 (lie ratings only) and Figure 3 (all ratings). To put it briefly, the disinformative questions from Experiment 1 were again clearly judged as lies (and, in the Lie Deceive Mislead condition, also as deceiving and misleading). Again, it did not make a difference for lie judgements whether participants were only asked about lying or also about deceiving and misleading.

Figure 3: Proportions of participants judging that the agent lied as a function of scenario for the condition in which only the lie-question was asked (Lie Only; panel A) and percentages of affirmative answers to the lie-, deceive-, and mislead-questions in the condition in which judgements about deceiving and misleading were assessed as well (panels B, C, and D). Error bars indicate 95% confidence intervals.

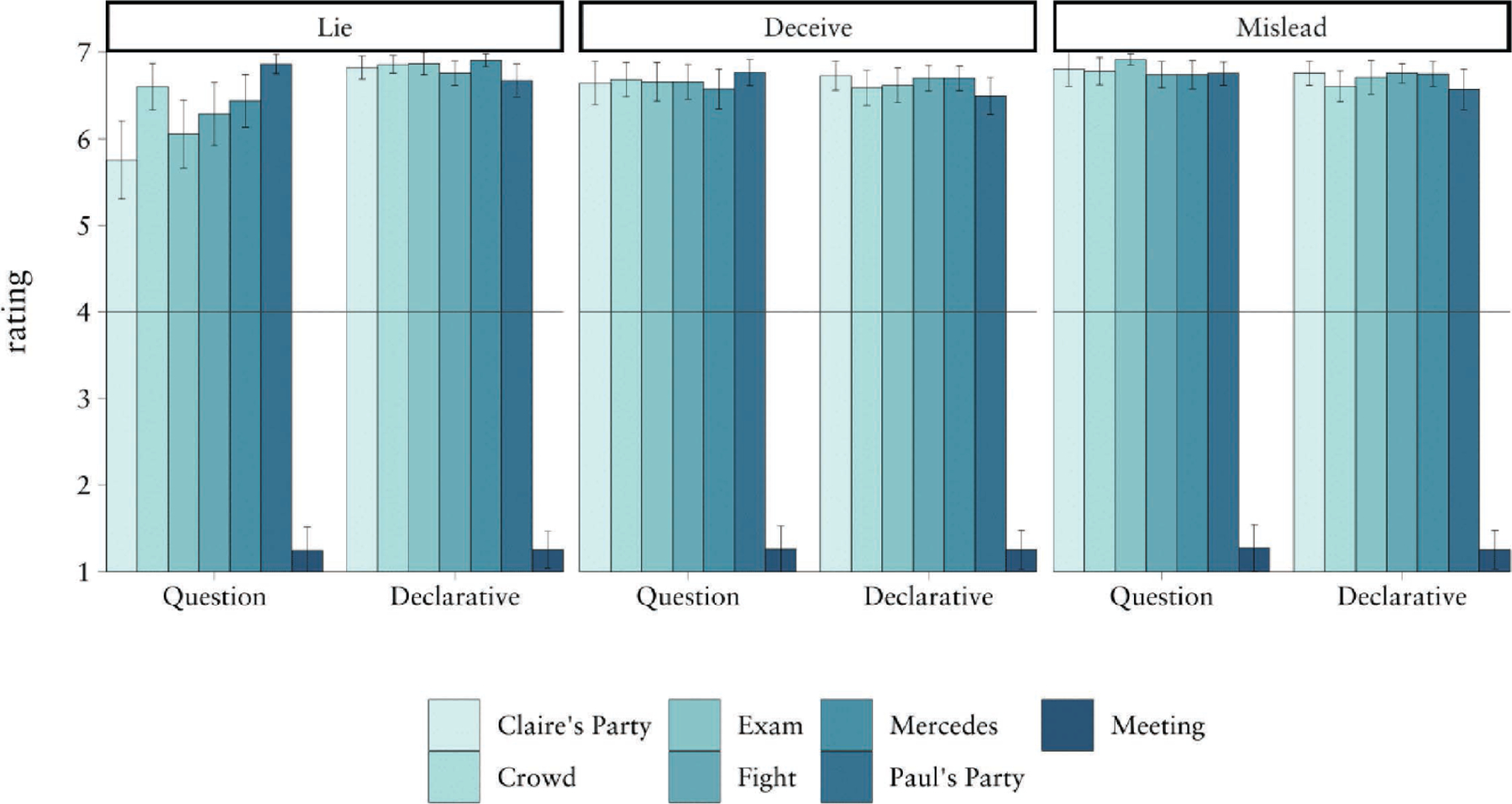

Figure 4 (Experiment 3): Mean agreement with the claims that the agent lied to, misled or deceived the addressee per condition (Question vs. Declarative) and vignette. Error bars indicate 95% confidence intervals. Note that the control vignettes (Paul’s Party, Meeting) were phrased as declaratives in both conditions.

Table 3: Lie judgements for the fourteen cases in the Lie Only (L; only the lie-question was asked) and the Lie Deceive Mislead (LDM; lie-, deceive-, and mislead-questions were asked) conditions. Reported p-values are not adjusted for multiple comparisons.

| Scenario | Condition | n Yes | n No | % Yes | χ2 and p, Yes vs. No | χ2 and p, L vs. LDM |
|---|---|---|---|---|---|---|
| Therapist | L | 53 | 54 | 49.5 | 0.01, n.s. | 1.53, n.s. |
| | LDM | 43 | 64 | 40.2 | 4.12, <.05 | |
| Car | L | 74 | 33 | 69.2 | 15.71, <.001 | 11.92, <.001 |
| | LDM | 48 | 59 | 44.9 | 1.13, n.s. | |
| Politician | L | 57 | 50 | 53.3 | 0.46, n.s. | 1.87, n.s. |
| | LDM | 46 | 61 | 43.0 | 2.10, n.s. | |
| Business Trip | L | 29 | 78 | 27.1 | 22.44, <.001 | 0.02, n.s. |
| | LDM | 31 | 76 | 29.0 | 18.93, <.001 | |
| Neighbour | L | 49 | 58 | 45.8 | 0.76, n.s. | 5.09, .02 |
| | LDM | 32 | 75 | 29.9 | 17.28, <.001 | |
| Football Fan | L | 48 | 59 | 44.9 | 1.13, n.s. | 5.83, .02 |
| | LDM | 30 | 77 | 28.0 | 20.65, <.001 | |
| Accent | L | 40 | 67 | 37.4 | 6.81, .009 | 1.72, n.s. |
| | LDM | 30 | 77 | 28.0 | 20.65, <.001 | |
| Actor | L | 53 | 54 | 49.5 | 0.01, n.s. | 0.17, n.s. |
| | LDM | 57 | 50 | 53.3 | 0.46, n.s. | |
| Crowd | L | 99 | 8 | 92.5 | 77.39, <.001 | 0.00, n.s. |
| | LDM | 98 | 9 | 91.6 | 74.03, <.001 | |
| Mercedes | L | 96 | 11 | 89.7 | 67.52, <.001 | 0.00, n.s. |
| | LDM | 97 | 10 | 90.7 | 70.74, <.001 | |
| Homeless | L | 104 | 3 | 97.2 | 95.34, <.001 | 0.46, n.s. |
| | LDM | 101 | 6 | 94.4 | 84.35, <.001 | |
| Fight | L | 98 | 9 | 91.6 | 74.03, <.001 | 0.00, n.s. |
| | LDM | 97 | 10 | 90.7 | 70.74, <.001 | |
| Paul’s Party | L | 103 | 4 | 96.3 | 91.60, <.001 | 0.00, n.s. |
| | LDM | 102 | 5 | 95.3 | 87.94, <.001 | |
| Meeting | L | 4 | 103 | 3.7 | 91.60, <.001 | 2.58, n.s. |
| | LDM | 11 | 96 | 10.3 | 67.52, <.001 | |
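The between-condition comparisons (L vs. LDM) in this table are 2 × 2 comparisons of Yes/No counts. The reported values appear consistent with a chi-squared test using Yates' continuity correction; this is an inference from the numbers, not something the text states. A minimal sketch with a hypothetical helper name:

```python
def chi2_yates_2x2(a, b, c, d):
    """Yates-corrected chi-squared statistic for the 2x2 table
    [[a, b], [c, d]] (hypothetical helper, df = 1)."""
    n = a + b + c + d
    # Continuity correction: shrink |ad - bc| by n/2, but never below zero.
    corrected = max(abs(a * d - b * c) - n / 2, 0)
    return n * corrected ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))

# Car scenario: L = 74 Yes / 33 No, LDM = 48 Yes / 59 No.
print(round(chi2_yates_2x2(74, 33, 48, 59), 2))
# Crowd scenario: L = 99 Yes / 8 No, LDM = 98 Yes / 9 No.
print(round(chi2_yates_2x2(99, 8, 98, 9), 2))
```

Under this assumption the Car comparison comes out close to the tabled 11.92, and the Crowd comparison collapses to 0.00 because the correction absorbs the entire one-response difference.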

Deceptive behaviours, on the other hand, were mostly not considered to be lies, and even less so when they could also be judged as instances of deceiving and misleading (which they were). For false implicatures, responses were roughly split (and the proportion of lie judgements also tended to be lower when additional options were available), indicating that participants were unsure or undecided about whether these cases should be counted as lies. However, they were clearly judged as instances of deceiving and misleading.

Thus, it seems that people distinguish disinformative questions from cases that are generally held to be instances of deceiving and misleading, but not lies. In addition to the detailed tests per scenario in Table 3, we also calculated the mean numbers of “yes” responses to the lie question within the categories Questions, False implicatures, and Deceptive behaviours in the Lie Only condition.18

Chi-squared tests for the mean number of “Yes”-responses per category (across vignettes) confirmed that questions as a group were judged as lies more often than would be expected by chance, i.e., 50% (99.25 out of 107, χ² = 78.25, df = 1, p < .001), but the same was not true for false implicatures (53.25 out of 107, χ² = .002, df = 1, p = .96) or deceptive behaviours (47.5 out of 107, χ² = 1.35, df = 1, p = .25). Thus, even when no other response option than “lie” was available to participants, they hesitated to apply this label to false implicatures and deceptive behaviours.
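The category-level statistics above can be reproduced with a one-degree-of-freedom goodness-of-fit test against an even Yes/No split. The sketch below uses hypothetical function names and only the standard library; for df = 1 the p-value follows from the identity p = erfc(√(χ²/2)).

```python
import math

def chi2_gof_even_split(n_yes, n_total):
    """Chi-squared goodness-of-fit statistic against a 50/50 split
    (hypothetical helper; n_yes may be a mean count across vignettes)."""
    expected = n_total / 2
    n_no = n_total - n_yes
    return ((n_yes - expected) ** 2 + (n_no - expected) ** 2) / expected

def p_value_df1(chi2):
    """Upper-tail p-value of a chi-squared statistic with df = 1."""
    return math.erfc(math.sqrt(chi2 / 2))

for label, mean_yes in [("Questions", 99.25),
                        ("False implicatures", 53.25),
                        ("Deceptive behaviours", 47.5)]:
    stat = chi2_gof_even_split(mean_yes, 107)
    print(f"{label}: chi2 = {stat:.3f}, p = {p_value_df1(stat):.3f}")
```

The inputs are the mean numbers of “Yes” responses per category in the Lie Only condition, each out of 107 participants.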

5.4. Discussion

The results of Experiment 2 provide additional insights into how to interpret the results of Experiment 1. Experiment 2 demonstrates that ordinary speakers do not have an undifferentiated concept of lying that applies to all instances of misleading utterances or deceptive behaviours. For both misleading with false implicatures and deceptive behaviour, participants judged the agent to be misleading and deceptive, but the pattern of results was not clear-cut for the lie-question. In contrast, for questions with false presuppositions, participants also agreed that the agent was misleading and deceptive, but a clear majority additionally judged that the agent lied. As a consequence, we can reject the aforementioned explanation of our results in Experiment 1, as well as Prediction 2, according to which ordinary speakers do not differentiate between disinformative questions and cases usually considered as misleading or deceiving.

6. Experiment 3: Questions vs. Declaratives

The results of our first two experiments strongly indicate that disinformative questions are considered to be lies. In the third experiment, we tested whether participants differentiate between such non-declarative instances of lying and declarative cases of lying. One might suspect that non-declarative instances of lying are genuine cases of lying, yet less prototypical than standard, declarative cases. If this is so, we should expect higher lie ratings for declarative cases than for non-declarative cases.

We used five previously tested instances of disinformative questions and created a declarative counterpart for each of them. For instance, while the agent in the question version of the Mercedes vignette asks “Did you know that John owns a Mercedes?”, he utters “John owns a Mercedes.” in the declarative version of this scenario. According to Stokke’s definition, speakers should only judge the agent to have lied in the condition in which she utters a declarative sentence (Prediction 3). Based on the findings of Experiments 1 and 2, however, we expected that the corresponding disinformative questions would be seen as lies as well.

6.1. Participants

Participants were again recruited using Prolific Academic, completed an online survey implemented in Unipark and were required to be at least 18 years old, native English speakers and to not have participated in our previous experiments. 242 participants started the survey and the data of 180 were included in the analysis (62 were excluded for not finishing the experiment, failing an attention check in the form of a simple transitivity task or failing a manipulation check that assessed whether the utterances in the vignettes were phrased as declaratives or questions). Mean age was 34.6 years, 31% were male and 69% female. Participants received £0.50 for an estimated 5 minutes of their time (£6/h).

6.2. Design, Procedure and Materials

Participants were randomly assigned to one of two conditions in a 2 (sentence: Question vs. Declarative; between) * 7 (scenario: Exam, Crowd, Mercedes, Claire’s Party, Fight, Paul’s Party, Meeting; within) mixed design.

Participants first read general instructions to familiarise them with the task and the response options. They were then presented with the seven scenarios in randomised order. For each scenario, participants were asked three questions: “Did [agent] lie to [addressee]?”, “Did [agent] mislead [addressee]?”, and “Did [agent] deceive [addressee]?”. A 7-point Likert item, ranging from “Definitely did not” to “Definitely did”, was used so that, in contrast to the binary format, differences in the degree to which participants considered an utterance to be a lie could be detected.

On the final page, participants were asked about demographics and presented with an attention check and a manipulation check (“In the majority of cases that I saw in the experiment, one person deceived another by using a [Question/Declarative sentence]”).

Again, all test vignettes (taken or adapted from Experiment 1) included an agent who intended to deceive the addressee by her utterance, and the addressee always ended up with the false belief the agent intended to cause in the addressee. Here is the wording of the Exam-vignette in its declarative form (see the Appendix for the other declarative versions of the vignettes; for the question versions of the vignettes (taken from Experiment 1) and the two control cases, Paul’s Party and Meeting, see Section 4 and also the Appendix):

Exam (Declarative):

Anna and Billy are classmates. Billy has missed a few days of school because he was ill. Anna wants Billy to think they have a maths exam tomorrow, although there in fact is no such exam. She texts him: “There will be a maths exam tomorrow.” Billy comes to believe that there will be a maths exam tomorrow.

6.3. Results

The results are summarised in Figure 4, Table 4 and Table 5. All test cases and the clear-lie control case (Paul’s Party) were judged as lying (see Table 4 and Table 5 for details). A mixed ANOVA on lie ratings for the test cases revealed a main effect of sentence (F(1, 178) = 24.95, p < .001, partial η² = .123), a main effect of scenario (F(4, 712) = 5.78, p < .001, partial η² = .031) and a significant two-way interaction (F(4, 712) = 6.67, p < .001, partial η² = .036). To break down this interaction, we conducted pairwise t-tests between the question version and the declarative version of each vignette, as well as t-tests against the scale midpoint for each single case (see Table 4 for descriptive statistics and Table 5 for results of the t-tests). Agreement ratings for the claim that the agent lied to the addressee were significantly higher for the declarative version than for the question version in four of the five test vignettes (see Table 5 for test statistics). However, the difference between the two versions was one of degree only (all utterances were clearly judged to be lies), and only for the Claire’s Party vignette was the difference between question and declarative greater than one point on the 7-point Likert item, reaching what is considered a large effect size (d = 0.8). Agreement with the claims about deceiving and misleading in the test cases was generally high and did not differ significantly either between the sentence-type conditions or between vignettes.
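The effect sizes reported with the three F-tests (.123, .031 and .036) are consistent with partial eta squared, which can be recovered from an F-value and its degrees of freedom via the standard identity η²p = F · df_effect / (F · df_effect + df_error). A minimal sketch, with a hypothetical function name, that checks this reading against the reported values:

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic
    and its degrees of freedom (hypothetical helper)."""
    return f_value * df_effect / (f_value * df_effect + df_error)

# F-values and degrees of freedom as reported for Experiment 3.
reported = {
    "sentence":    (24.95, 1, 178),
    "scenario":    (5.78, 4, 712),
    "interaction": (6.67, 4, 712),
}
for effect, (f_value, df1, df2) in reported.items():
    print(effect, round(partial_eta_squared(f_value, df1, df2), 3))
```

By common rules of thumb, a value of about .12 for the sentence factor is a medium-sized effect, while the scenario and interaction effects are small.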

Table 4: Means and standard deviations for agreement with the claims that the agent lied to, deceived or misled the addressee, per vignette and condition.

| Scenario | Condition | Lie, M (SD) | Deceive, M (SD) | Mislead, M (SD) |
|---|---|---|---|---|
| Exam | Question | 6.05 (1.68) | 6.66 (0.95) | 6.92 (0.28) |
| | Declarative | 6.87 (0.67) | 6.62 (1.04) | 6.71 (1.03) |
| Crowd | Question | 6.60 (1.15) | 6.68 (0.85) | 6.78 (0.67) |
| | Declarative | 6.86 (0.54) | 6.59 (1.05) | 6.61 (0.94) |
| Mercedes | Question | 6.44 (1.31) | 6.58 (1.00) | 6.74 (0.71) |
| | Declarative | 6.91 (0.38) | 6.70 (0.74) | 6.75 (0.74) |
| Claire’s Party | Question | 5.75 (1.93) | 6.64 (1.07) | 6.81 (0.86) |
| | Declarative | 6.82 (0.70) | 6.73 (0.87) | 6.76 (0.71) |
| Fight | Question | 6.29 (1.56) | 6.66 (0.87) | 6.74 (0.65) |
| | Declarative | 6.76 (0.74) | 6.70 (0.78) | 6.76 (0.58) |
| Paul’s Party (Control, always Declarative) | Question | 6.86 (0.48) | 6.77 (0.66) | 6.75 (0.57) |
| | Declarative | 6.67 (1.00) | 6.50 (1.12) | 6.57 (1.24) |
| Meeting (Control, always Declarative) | Question | 1.25 (1.14) | 1.26 (1.14) | 1.27 (1.16) |
| | Declarative | 1.25 (1.13) | 1.25 (1.16) | 1.25 (1.16) |

Table 5: Results of two-sided t-tests against scale midpoint (4) for each vignette in each condition and of pairwise Welch t-tests for the declarative versus the question version (Q vs. D) of each vignette. Reported p-values are not adjusted for multiple comparisons.

| Scenario | Condition | df (midpoint) | t (midpoint) | p (midpoint) | d (midpoint) | df (Q vs. D) | t (Q vs. D) | p (Q vs. D) | d (Q vs. D) |
|---|---|---|---|---|---|---|---|---|---|
| Exam | Question | 72 | 10.44 | <.001 | 1.22 | 87.92 | 3.93 | <.001 | 0.68 |
| | Declarative | 106 | 44.03 | <.001 | 4.26 | | | | |
| Crowd | Question | 72 | 19.31 | <.001 | 2.26 | 93.78 | 1.78 | .08 | n.s. |
| | Declarative | 106 | 54.79 | <.001 | 5.30 | | | | |
| Mercedes | Question | 72 | 15.88 | <.001 | 1.86 | 80.17 | 2.97 | .004 | 0.53 |
| | Declarative | 106 | 79.75 | <.001 | 7.71 | | | | |
| Claire’s Party | Question | 72 | 7.77 | <.001 | 0.91 | 84.99 | 4.54 | <.001 | 0.80 |
| | Declarative | 106 | 41.83 | <.001 | 4.04 | | | | |
| Fight | Question | 72 | 12.54 | <.001 | 1.47 | 94.19 | 2.40 | .02 | 0.41 |
| | Declarative | 106 | 38.66 | <.001 | 3.74 | | | | |
| Paul’s Party (Control, always Declarative) | Question | 72 | 50.90 | <.001 | 5.96 | | | | |
| | Declarative | 106 | 27.71 | <.001 | 2.68 | | | | |
| Meeting (Control, always Declarative) | Question | 72 | −20.64 | <.001 | −2.42 | | | | |
| | Declarative | 106 | −25.26 | <.001 | −2.44 | | | | |
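The question-versus-declarative comparisons are Welch t-tests, which do not assume equal variances and therefore yield fractional degrees of freedom. The sketch below recomputes one of them from the summary statistics reported for Experiment 3. It is an illustration, not the authors' analysis: the function name is hypothetical, and group sizes are assumed to be df + 1 from the midpoint tests (73 in the Question condition, 107 in the Declarative condition).

```python
import math

def welch_from_summary(m1, sd1, n1, m2, sd2, n2):
    """Welch t statistic and Welch-Satterthwaite degrees of freedom
    from group means, SDs and sizes (hypothetical helper)."""
    v1 = sd1 ** 2 / n1  # squared standard error, group 1
    v2 = sd2 ** 2 / n2  # squared standard error, group 2
    t = (m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df

# Exam vignette, lie ratings: Declarative 6.87 (0.67), Question 6.05 (1.68).
t, df = welch_from_summary(6.87, 0.67, 107, 6.05, 1.68, 73)
print(f"t = {t:.2f}, df = {df:.2f}")
```

The result lands close to the reported t = 3.93 with df = 87.92; exact agreement is not expected because the published means and standard deviations are rounded to two decimals.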

6.4. Discussion

Experiment 3 shows that judgements about disinformative questions are similar to judgements about their declarative counterparts. While the declarative utterances received slightly higher lie-ratings than the questions, both were considered as clear instances of lying.

7. Experiment 4: Questions and Other Kinds of Non-Declaratives

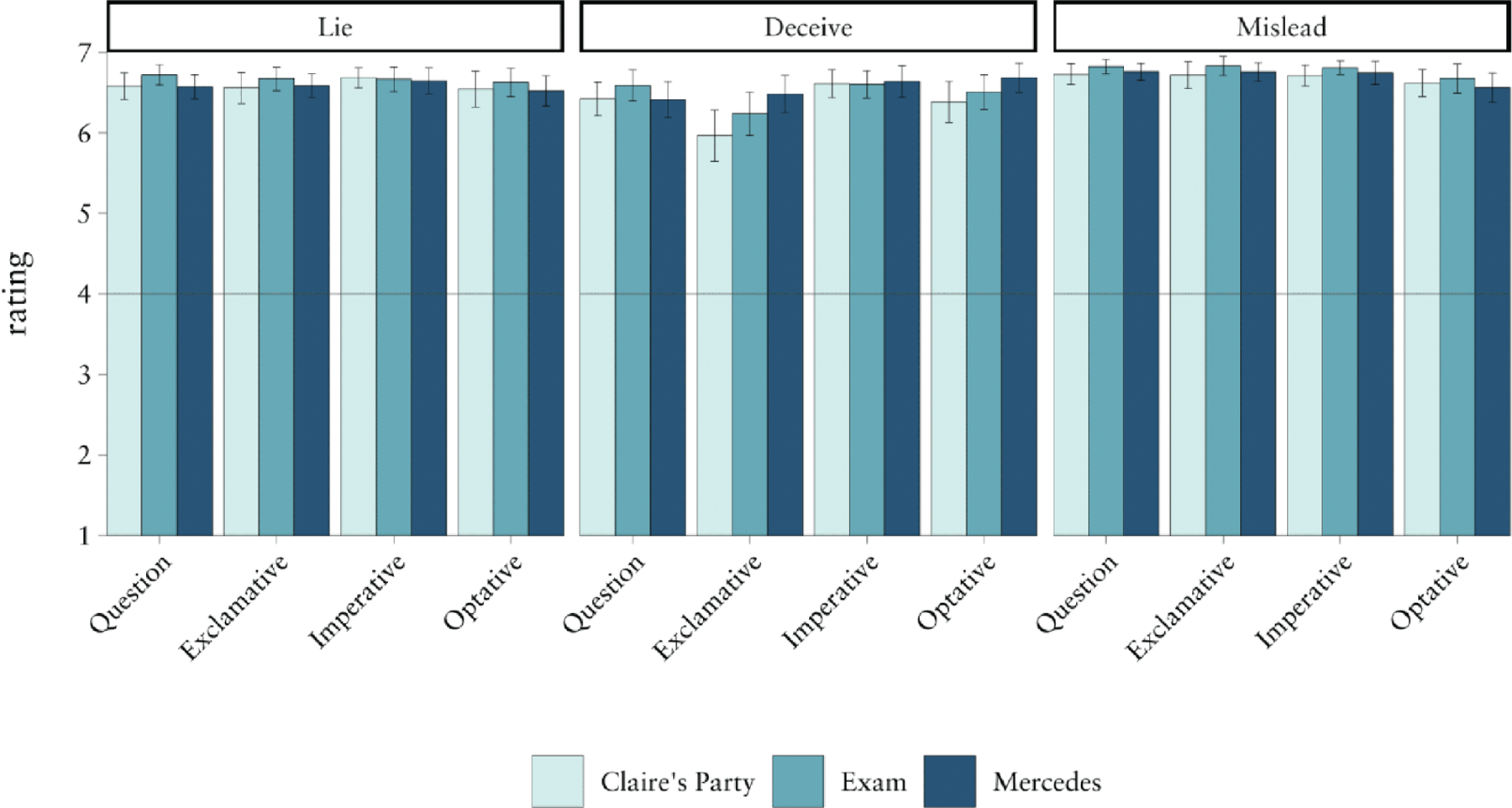

The first three experiments indicate that ordinary speakers judge disinformative questions to be lies. Given the similarity in communicative upshot between disinformative questions and other kinds of disinformative non-declaratives, we expected disinformative non-declaratives in general to be counted as lies (Prediction 4). The fourth experiment tests this prediction and compares judgements about disinformative questions with judgements about disinformative imperatives, exclamatives and optatives.19

We used three previously tested instances of disinformative questions and created imperative, exclamative and optative counterparts for them, in which only the speaker’s utterance is changed. For example, while Harry utters (1) in the question-version of Mercedes, he utters (5), (6) and (7), respectively, in the other non-declarative versions of this vignette:

(1) Did you know that John owns a Mercedes?

(5) Keep in mind that John owns a Mercedes!

(6) What a nice Mercedes John owns!

(7) If only John would let me use the Mercedes he owns!

Each of these utterances carries the presupposition that John owns a Mercedes, which Harry believes to be false but intends to convey. We expected participants to judge Harry to be lying in all four versions of the examples.

7.1. Participants

Participants were again recruited using Prolific Academic, completed an online survey implemented in Unipark and were required to be at least 18 years old, native English speakers and to not have participated in our previous experiments. 446 participants started the survey and the data of 430 were included in the analysis (16 were excluded for not finishing the experiment or failing an attention check in the form of a simple transitivity task). Mean age was 34.4 years, 37% were male, 61% female and 1% non-binary. Participants received £0.45 for an estimated 4 minutes of their time (£6.75/h).

7.2. Design, Procedure and Materials

Participants were randomly assigned to one of four conditions in a 4 (sentence type: Questions vs. Imperatives vs. Exclamatives vs. Optatives; between) * 3 (scenario: Exam, Mercedes, Claire’s Party; within) mixed design. The procedure was the same as in the previous experiments. The three vignettes were only slightly adjusted to match the respective sentence type. Each participant was asked whether the speaker lied to, misled or deceived the addressee. The complete material can be found in the appendix.

7.3. Results

The results are summarised in Figure 5. The ratings for the mislead- and deceive-questions were consistently close to the ceiling. Participants rated all twelve combinations of sentence type and vignette as clear lies (none of the confidence intervals comes close to including the midpoint of the scale). For the lie ratings, we found a significant main effect of sentence type (F(3, 426) = 3.12, p = .026, partial η² = .022), a significant main effect of vignette (F(2, 852) = 7.78, p < .001, partial η² = .018), as well as a significant interaction of sentence type and vignette (F(6, 852) = 3.21, p = .004, partial η² = .022). Post-hoc Tukey contrasts revealed that, averaged across the three vignettes, lie ratings for questions were significantly lower than those for exclamatives (p < .001), imperatives (p = .007) and optatives (p = .038). The other comparisons were not statistically significant.

7.4. Discussion

The results clearly indicate that ordinary speakers not only judge disinformative questions to be lies, but also their counterparts featuring imperative, exclamative or optative sentences. Overall, the folk view of lying thus allows for lying with believed-false presuppositions, regardless of sentence type.

8. General Discussion

The aim of our experiments was to test judgements of ordinary speakers about certain insincere utterances of non-declarative sentences. Our focus was on disinformative questions, that is, questions carrying a presupposition (concerning something other than the speaker’s attitudes) which the speaker believes to be false but intends to convey. Andreas Stokke has argued for a definition of lying according to which such questions cannot be lies. If Stokke’s definition of lying is paired with the assumption that definitions of lying should track the ordinary usage of the term ‘lying’, it yields the following predictions about the judgements of ordinary speakers:

Prediction 1: Ordinary speakers do not consider disinformative questions as lies, but rather as deceptive or misleading.

Prediction 2: Verdicts about disinformative questions pattern with clear examples of misleading and deceiving.

Prediction 3: While ordinary speakers judge disinformative questions to be non-lies, they judge the declarative counterparts of such questions to be lies.

Our first three experiments challenge these predictions. Experiment 1 demonstrates that participants consider disinformative questions to be lies. This effect occurred for eight different scenarios, and it remained robust regardless of whether we provided participants with binary answer options or with a 7-point rating scale. Providing participants with the opportunity to judge the speaker’s utterance as misleading and deceiving (in addition to lying) did not eliminate this effect; in the majority of cases it made no difference at all. This demonstrates that participants’ willingness to call disinformative questions lies was not an artefact of a lack of alternative options, such as a missing opportunity to classify the agent’s utterance as misleading or deceiving.

Experiment 2 responds to a possible objection against the significance of the aforementioned results, according to which ordinary speakers have an overly inclusive concept of lying that applies to all instances of misleading or deceptive behaviours. To test this explanation, we presented another group of participants with twelve test vignettes. Four vignettes included disinformative questions, four included false implicatures, and four included forms of deceptive behaviour. Theorists hold that in the stories including false implicatures or deceptive behaviour the speakers deceive or mislead, but do not lie. Our results indicate that ordinary speakers also draw this distinction. In contrast to disinformative questions, false implicatures and deceptive behaviours were not consistently evaluated as lies (in fact, deceptive behaviours tended not to be evaluated as lies, while false implicatures were a mixed bag). This is evidence that ordinary speakers do distinguish between deceptive behaviour and misleading, on the one hand, and lying, on the other. It speaks against the alternative explanation of the results in Experiment 1 according to which laypeople have an overly broad concept of lying. The results also challenge Prediction 2, according to which verdicts about insincere questions pattern with clear examples of misleading and deceiving.

In Experiment 3, we examined whether disinformative questions are considered lies to the same extent as their declarative counterparts. Our results show that ordinary speakers are slightly less willing to call disinformative questions lies than they are to call the corresponding declarative utterances lies. Declarative lies may thus be considered to be more prototypical lies. But the results nonetheless confirm that both disinformative questions and their declarative counterparts are judged to be lies, contrary to Prediction 3.

The results of the first three experiments raise the question of whether ordinary speakers judge other kinds of disinformative non-declaratives (carrying believed-false presuppositions the speakers intend to convey) to be lies. We expected that they do (Prediction 4), and this expectation was confirmed by Experiment 4. Just like disinformative questions, disinformative imperatives, exclamatives and optatives are judged to be lies.

The results of the experiments speak against Stokke’s definition of lying, insofar as it is meant to track the ordinary usage of the term ‘lying’. If Stokke is right that presuppositions do not belong to what is said, the results also present a problem for the currently popular approach of defining lying in terms of what is said, and thus for the definitions put forward by Fallis (2009: 34), Carson (2010: 37), Saul (2012: 17) and García-Carpintero (2018). The experiments thus motivate the search for alternative views: either says-based definitions of lying that are combined with a notion of what is said that includes presuppositions, or definitions that do not tie lying to what is said.

According to the first of these two approaches, Stokke is right about tying lying to what is said, but wrong to exclude presuppositions from what is said. A broader notion of what is said that does include presuppositions would indeed allow says-based definitions to count the disinformative non-declaratives of this paper as lies. But there are several open questions and challenges for such an approach. To begin with, there is the question of which presuppositions to include in what is said. As mentioned above (in Footnote 5), the presuppositions in the examples of this paper are regarded as lexically triggered semantic presuppositions. How about pragmatic presuppositions? Can they be used to lie, too? Adherents of the current approach would have to take a stand on this matter. Then, there are challenges for definitions based on an extended notion of what is said. On the one hand, such definitions might over-reach. At least at first view, it is not clear that every utterance featuring a believed-false semantic presupposition (that is proposed as an update to the common ground) is a lie. For instance, it is not clear that weaker constative speech-acts (such as conjectures or predictions) carrying believed-false semantic presuppositions should count as lies (see Viebahn 2020: §4 for discussion of this matter). On the other hand, there are areas in which the extended says-based approach might still be too narrow. In particular, Fallis (2014) and Viebahn (2017) have argued that such definitions fail to make room for lies that are told while speaking non-literally, although there is a good case to be made for the possibility of non-literal lies. So while the experiments do not rule out definitions of lying based on the notion of what is said, they do indicate that there is work left to do for adherents of such definitions.

How about definitions that decouple lying from what is said? One such definition has been proposed by Jörg Meibauer (2005: 1382; 2011: 285; 2014: 125; 2018: 195), who argues that speakers can lie by asserting or by conversationally implicating something they believe to be false. This definition leads to a very inclusive view of lying that admits not only the possibility of lying with non-declaratives carrying believed-false presuppositions (see Meibauer 2014: 137–40; 2018), but with conversational implicatures in general. At first sight, there seems to be a slight tension between such a very inclusive definition of lying and the results of our second experiment, in which participants seemed to distinguish between non-declarative lies and utterances with believed-false implicatures. However, further experiments would be required to find out whether and to what extent Meibauer’s approach fits with the folk view of lying (for initial findings relevant to this question, see Wiegmann & Willemsen 2017; Weissman & Terkourafi 2019; and Reins & Wiegmann 2021).

A second definition of lying that eschews the notion of what is said and allows for non-declarative lies is offered by Viebahn (2017; 2020; 2021). On this view, speakers have to commit themselves to something they believe to be false in order to lie. This commitment-based approach allows for non-declarative lies, while counting at least some cases involving believed-false implicatures (such as standard cases of misleading) as non-lies. The definition is thus broader than says-based definitions, while being narrower than Meibauer’s inclusive definition. It seems to fit with the results in Reins and Wiegmann (2021), in which lie-judgements for a range of different utterance types pattern with commitment-judgements.

Finally, our experiments suggest a broader investigation of judgements about non-declarative insincerity. We have only investigated judgements about cases of disinformative non-declarative insincerity. It would certainly be interesting to find out how ordinary speakers classify utterances of non-declaratives featuring only attitudinal insincerity (as introduced in Section 2.2). For example, are they willing to call a question a lie simply because the speaker is not interested in the answer? Such judgements would also be of interest because they feature pragmatic (rather than semantic) presuppositions. They would thus offer some insight into the broader question of whether ordinary speakers hold that it is possible to lie with pragmatic presuppositions.

Acknowledgements

We would like to thank audiences in Göttingen, Hatfield, London and Zurich, two anonymous referees and an editor of this journal for very helpful comments and suggestions. Alex Wiegmann’s work on this paper was supported by a grant of the Deutsche Forschungsgemeinschaft for the Emmy Noether Independent Junior Research Group Experimental Philosophy and the Method of Cases: Theoretical Foundations, Responses, and Alternatives (EXTRA), project number 391304769. He also acknowledges support by the Leibniz Association through funding for the Leibniz ScienceCampus Primate Cognition.

Appendix

1. Remaining vignettes from Experiment 1

Crowd

Laura and Barbara are making their way through a densely-packed crowd of people. They are carrying heavy and bulky bags and have to take care not to hit anyone with their bags. Laura is walking behind Barbara and sees that Barbara successfully avoids bumping into anyone with her bag, but Laura wants Barbara to think otherwise. After they have made it through the crowd, Laura asks Barbara: “Did you notice how you hit an old woman with your bag?” Barbara comes to believe that she hit an old woman with her bag.

Drunk

Sam and Harry spend the evening at a bar together. Harry gets very drunk, and on the next day he has no recollection of what happened in the later parts of the evening. Sam wants to trick Harry into thinking that he gave Harry an envelope, which Harry then misplaced. In fact, Sam did not give Harry an envelope. Sam asks Harry: “Have you still got the envelope I gave you last night?” Harry comes to believe that Sam gave him an envelope the previous night.

Mercedes

Harry wants Rosa to think that his friend John is wealthy. In fact, John is not wealthy and does not own a car. Harry asks Rosa: “Did you know that John owns a Mercedes?” Rosa comes to believe that John owns a Mercedes.

Homeless

A homeless person approaches a stranger to ask him for money. Although he has no children, he asks the stranger: “Could you spare one pound for my ill son?” The stranger comes to believe that the homeless person has an ill son and gives him some change.

Claire’s Party

Mike and Alex have a common acquaintance, Claire, whom they both want to get to know better. Mike wants Alex to think that Claire is hosting a party, and that she hasn’t invited Alex. Claire is in fact not hosting a party, as Mike is well aware. Mike asks Alex: “Are you going to Claire’s party on Saturday?” Alex comes to believe that Claire is hosting a party on Saturday.

Fight

Toby wants Mary to think badly of Simon, whom he dislikes. Toby knows that Simon was ill yesterday and stayed at home all day. He asks Mary: “Have you heard that Simon got into a fight at the pub yesterday?” Mary comes to believe that Simon got into a fight at the pub yesterday.

Baby (see Stokke 2018: 121)

Marion and Mick are colleagues of John, whose wife has recently given birth to a baby boy. Marion has seen the baby and knows it is a boy. She knows that Mick hasn’t seen the baby and wants to trick him into thinking that John’s baby is a girl. Marion says to Mick: “John’s baby is lovely. Have you seen her yet?” Mick comes to believe that John’s baby is a girl.

2. Remaining vignettes from Experiment 2

Cases of misleading with false implicatures

Used car (Carson 2006: 284–85)

Brian is trying to sell his used car to a prospective buyer. The prospective buyer asks: “Does the car overheat?” Last year, Brian drove the car across the Mojave desert on a very hot day and had no problems. However, since then, the car has developed problems that frequently cause it to overheat, as Brian knows very well. Brian wants to convince the prospective buyer that the car doesn’t overheat and responds: “Last year I drove this car across the Mojave desert on a very hot day and had no problems.” The prospective buyer comes to believe that the car doesn’t overheat.

Improper relationship (see Saul 2012: vii)

A politician has been accused of having an affair with his assistant. In an interview, a journalist asks the politician: “Did you have an improper relationship with your assistant?” The politician did have an affair with his assistant, but at the time of the interview the affair is over. The politician wants the journalist to come to believe that he never had an improper relationship with his assistant and replies: “There is no improper relationship between me and my assistant.” The journalist comes to believe that the politician never had an improper relationship with his assistant.

Business trip (see Adler 1997: 447)

Dominic and Lisa are meeting for a coffee. Lisa was expecting Dominic to bring along his girlfriend Amanda and is slightly surprised to see Dominic on his own. She asks Dominic: “Is everything going ok with you and Amanda?” Dominic has just split up with Amanda, but does not want to talk about it and wants Lisa to think that his relationship with Amanda is fine. As it happens, Dominic knows that Amanda is on a business trip about which she didn’t know until the previous day. He replies: “Amanda is on a business trip about which she was notified only yesterday.” Lisa thinks that everything is going ok with Dominic and Amanda.

Deceptive behaviour

Football fan

Dennis wants to go to a party that the local football club is organising for its fans. He isn’t interested in football and isn’t a fan of the local football club, but he knows that the organisers will check at the door and only let in fans of the club. He buys a jersey of the club and dresses in suitable colours in order to make the organisers at the door think that he is a fan. When he arrives at the party, the organisers see his outfit and indeed come to think that he is a fan of the club, so they let him enter.

Accent

Peter sells crêpes from his stall in London. He addresses customers in a strong French accent to get them to believe that he is from France. In fact, Peter is not from France and has never lived there. When Jim passes by, Peter again puts on a French accent and says: “Would you like to try one of my delicious crêpes?” Jim comes to believe that Peter is from France and buys a crêpe.

Actor (see Fallis 2015: 84)

At a party, Zach tries to make Carl, a new acquaintance, believe that he is a professional actor. In fact, Zach is not an actor and works as a school teacher. He takes on a theatrical pose and quotes Shakespeare: “To be, or not to be: that is the question.” Carl comes to believe that Zach is a professional actor.

3. Remaining vignettes from Experiment 3

Crowd (Declarative):

Laura and Barbara are making their way through a densely-packed crowd of people. They are carrying heavy and bulky bags and have to take care not to hit anyone with their bags. Laura is walking behind Barbara and sees that Barbara successfully avoids bumping into anyone with her bag, but Laura wants Barbara to think otherwise. After they have made it through the crowd, Laura says to Barbara: “You hit an old woman with your bag.” Barbara comes to believe that she hit an old woman with her bag.

Fight (Declarative):

Toby wants Mary to think badly of Simon, whom he dislikes. Toby knows that Simon was ill yesterday and stayed at home all day. He says to Mary: “Simon got into a fight at the pub yesterday.” Mary comes to believe that Simon got into a fight at the pub yesterday.

Mercedes (Declarative):

Harry wants Rosa to think that his friend John is wealthy. In fact, John is not wealthy and does not own a car. When Harry and Rosa are talking about John, Harry says: “John owns a Mercedes.” Rosa comes to believe that John owns a Mercedes.

Exam (Declarative):

Anna and Billy are classmates. Billy has missed a few days of school because he was ill. Anna wants Billy to think they have a maths exam tomorrow, although there in fact is no such exam. She texts him: “There will be a maths exam tomorrow.” Billy comes to believe that there will be a maths exam tomorrow.

Claire’s Party (Declarative):

Mike and Alex have a common acquaintance, Claire, whom they both want to get to know better. Mike wants Alex to think that Claire is hosting a party, and that she hasn’t invited Alex. Claire is in fact not hosting a party, as Mike is well aware. When he meets Alex, Mike says: “Claire is hosting a party on Saturday.” Alex comes to believe that Claire is hosting a party on Saturday.

4. Remaining vignettes from Experiment 4

Exam

Exam (test case, disinformative question):

Anna and Billy are classmates. Billy has missed a few days of school because he was ill. Anna wants Billy to think they have a maths exam tomorrow, although there in fact is no such exam. She texts him: “Have you revised for the maths exam tomorrow?” Billy comes to believe that there will be a maths exam tomorrow.

Exam (Imperative):

Anna and Billy are classmates. Billy has missed a few days of school because he was ill. Anna wants Billy to think they have a maths exam tomorrow, although there in fact is no such exam. She texts him: “Don’t forget to prepare for the maths exam tomorrow!” Billy comes to believe that there will be a maths exam tomorrow.

Exam (Exclamative):

Anna and Billy are classmates. Billy has missed a few days of school because he was ill. Anna wants Billy to think they have a maths exam tomorrow, although there in fact is no such exam. She texts him: “How I’m dreading the maths exam tomorrow!” Billy comes to believe that there will be a maths exam tomorrow.

Exam (Optative):

Anna and Billy are classmates. Billy has missed a few days of school because he was ill. Anna wants Billy to think they have a maths exam tomorrow, although there in fact is no such exam. She texts him: “I hope you’re preparing for the maths exam tomorrow!” Billy comes to believe that there will be a maths exam tomorrow.

Mercedes

Mercedes (Question/interrogative):

Harry wants Rosa to think that his friend John is wealthy. In fact, John is not wealthy and does not own a car. Harry asks Rosa: “Did you know that John owns a Mercedes?” Rosa comes to believe that John owns a Mercedes.

Mercedes (Imperative):

Harry wants Rosa to think that his friend John is wealthy. In fact, John is not wealthy and does not own a car. When Harry and Rosa are talking about John, Harry says: “Keep in mind that John owns a Mercedes!” Rosa comes to believe that John owns a Mercedes.

Mercedes (Exclamative):

Harry wants Rosa to think that his friend John is wealthy. In fact, John is not wealthy and does not own a car. When Harry and Rosa are talking about John, Harry says: “What a nice Mercedes John owns!” Rosa comes to believe that John owns a Mercedes.

Mercedes (Optative):

Harry wants Rosa to think that his friend John is wealthy. In fact, John is not wealthy and does not own a car. When Harry and Rosa are talking about John, Harry says: “If only John would let me use the Mercedes he owns!” Rosa comes to believe that John owns a Mercedes.

Claire’s Party

Claire’s Party (Question/interrogative):

Mike and Alex have a common acquaintance, Claire, whom they both want to get to know better. Mike wants Alex to think that Claire is hosting a party, and that she hasn’t invited Alex. Claire is in fact not hosting a party, as Mike is well aware. Mike asks Alex: “Are you going to Claire’s party on Saturday?” Alex comes to believe that Claire is hosting a party on Saturday.

Claire’s Party (Imperative):

Mike and Alex have a common acquaintance, Claire, whom they both want to get to know better. Mike wants Alex to think that Claire is hosting a party, and that she hasn’t invited Alex. Claire is in fact not hosting a party, as Mike is well aware. When he meets Alex, Mike says: “Don’t be sad that Claire hasn’t invited you to her party on Saturday!” Alex comes to believe that Claire is hosting a party on Saturday.