The ringtone blaring from the next table—“It’s raining tacos”—seemed to last minutes, as did your excruciating auditory experience. It isn’t just that the relative durations of these two events (the ringtone and your experience of it) appear to match: the ringtone consists in a progression of notes and your auditory experience seemingly unfolds through similar successive phases.

To suggest as much is a mistake, according to an influential account of temporal experiences (experiences, that is, of the temporal features of perceived events, such as their duration, order, succession, etc.). Perceptual experiences have a representational content. Consider the contents of thoughts or beliefs: a belief that the French Revolution ended in 1814 needn’t last twenty-five years. Contentful psychological states more generally don’t usually instantiate the temporal properties they represent, and the same goes for perceptual experiences:

[T]he experience of A followed by B is backward-looking. That is, it occurs with the experience of B, all in one go, but it represents the temporally extended period of A’s preceding B. (Tye 2003: 88)

[. . .] experiences do not themselves have temporal structure that mirrors the temporal structure represented in their content. [. . .] for example, a temporal order experience does not involve first experiencing one event and then experiencing a second event [. . .]; it involves experiencing both events at the same time, even though they are experienced as happening at different times. (Lee 2014b: 1)1

The view has received various labels: I’ll use “retentionalism”, as it seems to have become rather well entrenched by now.2

At the heart of retentionalism, then, lies the denial of any essential isomorphy or similarity between (a) the temporal properties of the events we perceive and (b) the temporal properties of our experiences of those events. That is, if perceived events—like a ringtone—are (ia) extended in time with (iia) a given duration, composed of (iiia) distinct temporal parts—the succession of notes in the ringtone—unfolding in (iva) a given order, retentionalists deny that an experience of the ringtone has to be (ib) extended with (iib) roughly the same duration as the ringtone, or that (iiib) the experience is to be segmented into successive temporal parts—corresponding to those of the ringtone—(ivb) ordered in the same way.3

In contrast, some theories of temporal experience acknowledge or even exploit various temporal isomorphies of this sort. Some merely insist there are temporal similarities between (a) and (b) simply as a matter of phenomenological observation. Some go a little further, advancing different kinds of dependence relations between (a) events and (b) experiences thereof to explain why a given isomorphy holds.4 But there is a range of theories putting some relations of temporal isomorphy to work in order to account for the distinctive phenomenology of temporal experiences, say, or how temporal experiences present or represent events and their temporal properties, with different types of explanations put forward to that effect.5 In its negative strand, then, retentionalism rejects any such temporal isomorphy as required by an explanation of how we experience the temporal properties of perceived events, since retentionalists typically deny such isomorphies hold in the first place.

The central feature of perceptual experiences, the positive strand in retentionalism goes, is their representational content: it’s possible, retentionalists insist, to represent an extended event with a certain duration and temporal structure without the experience itself being extended or structured in time (no successive temporal parts, no order)—indeed, it’s in principle possible for the instantiation of such temporal contents in consciousness to be strictly instantaneous, on such a view.

The aim of this paper is to question one crucial assumption behind the retentionalist view. The focus won’t be—not directly, at least—on the temporal properties of experiences per se, or any temporal isomorphy between experiences and the events they represent. Instead, the paper is concerned with some of the temporal properties of the neural processes responsible for the experiences in question and their content. Here’s why: suppose the ringtone lasts about 3 seconds, starting with a Re, ending with a Sol. Retentionalism has it that both notes, separated by a little less than 3 seconds, can figure in the same temporally extended content, which becomes conscious “all at once”, as it were. This means that both notes are consciously heard at the same time (while being represented as successive). The implication, then, must be that neural processes behind your consciously hearing the first Re take longer than the processing underpinning your hearing the last Sol: about 3 seconds longer, that is. Hence, retentionalism must presuppose that the neural processes behind our conscious sensory experiences can be partially delayed in the following manner:

partially delayed processing (pdp): if some perceived event x occurring at t-n and some later event y occurring at t-n* can both figure in the content of a conscious perceptual experience e occurring at t, then the neural processing NPx prompted by x at t-n and which results in experience e at t begins earlier—and lasts longer—than the underlying neural processing NPy of y from t-n* to t.

That is, the neural processes behind the perceptual representation of an earlier stimulus in some temporally extended content are delayed relative to the processing of a later stimulus figuring in the very same (token) content—conversely, the latter processing is “compressed” in time relative to the former.6
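The timing relation that (pdp) encodes can be made explicit with a short worked calculation. This is only an illustrative sketch: the symbols d_x and d_y for the durations of NPx and NPy, and the 0.1 s figure for the later note, are assumptions introduced here rather than values given in the text.

```latex
\begin{align*}
d_x &= t - (t - n) = n, \qquad d_y = t - (t - n^{*}) = n^{*},\\
d_x - d_y &= n - n^{*} = (t - n^{*}) - (t - n) \approx 3\ \text{s}
  \quad \text{(the interval between the Re and the Sol)},\\
d_y &= 0.1\ \text{s (assumed)} \;\Longrightarrow\; d_x \approx 3.1\ \text{s}.
\end{align*}
```

On this reading, the difference in processing duration simply equals the temporal separation between the two stimuli, whatever the absolute latencies turn out to be.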

There are reasons to doubt such an assumption holds, however. The argument proceeds in two steps. First, I review certain empirically documented cases where the assumption of partially delayed processing (pdp) appears to be false: not only does the assumption lead to some implausible consequences, but it delivers the wrong results in the cases under consideration (§2).7 The second step aims to generalize the argument beyond the specific cases reviewed in §2: if partially delayed processing (pdp) is false, then a somewhat different temporal constraint must govern how neural processing of perceptual stimuli unfolds in time, and we can ask what the most minimal but plausible deviation from partially delayed processing might amount to (§3). Unsurprisingly, the problem is that such a temporal constraint undermines the central retentionalist claim about the temporal ontology of experiences. As a result, the retentionalist denial of temporal isomorphy between experiences and perceived events is jeopardized too, since the latter is grounded in the retentionalist proposal about the temporal ontology of experience. What’s more interesting, on the other hand, is that the resulting picture of temporal experiences remains perfectly compatible with the main considerations retentionalists advance to motivate their view.

1. Retentionalism

Three theses characterize the core of retentionalism—two concern the representational content of perceptual experiences, the third their temporal ontology:

Retentionalism

R1: extended temporal contents: the representational content of a perceptual experience can represent (a) a temporally extended event, (b) some of its distinct temporal parts, and (c) temporal features of, as well as temporal relations between, its temporal parts.

R2: backward-looking contents: such perceptual contents (d) do not represent all temporal parts of an extended event as simultaneous, but represent (e) earlier temporal parts of the event represented as occurring before later temporal parts.

R3: unextended experiences: a conscious perceptual experience with such an extended content needn’t be temporally extended.

We do perceive the temporal features (duration, order, succession, etc.) of perceived events, the view assumes, and theses R1 to R3 aim to offer an account of such experiences and their temporal content. The first thesis specifies the sort of temporal information conveyed in experience: it’s not just that experiences represent events which are in fact temporally extended, but they are perceived as such, at least to the extent that distinct temporal parts of perceived events are represented at distinct temporal locations—that much is required for the perceptual representation of temporal relations between distinct events.8

The second thesis adds a claim about the directionality with which such temporal information is presented. In principle, R1 is compatible with the suggestion that extended contents could, like expectations or predictions, be future-directed.9 One rationale behind R2 is the idea that experiences and their representational contents causally depend upon the events they represent, and hence must succeed what they represent, if only by a short amount of time (Tye 2003: 88).

These first two claims, it’s important to note, pertain to the contents of perceptual experiences exclusively: the retentionalist view distinguishes itself from a more traditional “memory account”, according to which memory plays a crucial role in explaining how we can become aware of the temporal features of perceived events (e.g., Reid 1855; LePoidevin 2007):

[. . .] the Atomist can reject Husserl’s idea that temporal experience involves a special kind of “retentional” memory experience [. . .]. Atomic temporal experiences might involve just one kind of conscious perceptual experience, not differentiated between “retention” and “perception”. Moreover, an atomic temporal experience need not involve “retention” even in the weak sense that it involves retaining contents from immediately past experiences: a temporally extended content could include—perhaps exclusively—information about events that were not presented in any previous experiences. (Lee 2014a: 6)

Lee isn’t denying, of course, that we have various sorts of memories of very recent experiences—or that such memories accompany later perceptual experiences:10 only that they fulfil any explanatory function in the retentionalist account. This “no-dependence-on-memory” desideratum upon temporally extended perceptual contents can be phrased thus:

non-mnemonic temporal content: if a conscious experience e occurring at some time t represents an event y succeeding some event x, (i) e depends on sensory stimulation by x (at t-n) and y (at t-n*), neurally processed so that (ii) both x at t-n and y at t-n* can figure in the content of a conscious perceptual experience for the first time at t, and (iii) neither the representation of x nor that of y in e “retain the content” of an earlier conscious experience of x (at t-n) or y (at t-n*).

Why insist on such a requirement? One reason simply goes back, it seems to me, to the central retentionalist idea that temporal experiences can be accounted for solely in terms of their temporally extended contents. For instance, Lee’s argument for retentionalism—the “trace integration argument” (Lee 2014a: 13–16) to be discussed briefly in §3.4—rests on the undeniable observation that perceptual systems are equipped with mechanisms (such as visual motion detectors in MT) which process information from successive stages of a stimulus. This suggests there are mechanisms in the visual cortex or earlier which are perfectly apt to deliver the sort of temporally extended contents retentionalists posit: the retentionalist explanation, then, has no need for additional mnemonic processes to “retain [. . .] contents from immediately past experiences” (Lee 2014a: 6). This explains why, as Lee says above, retentionalism doesn’t have to factor such contents into different components, one perceptual, the other mnemonic.11

What conception of memory does the desideratum presuppose—what kinds of memories does it aim to rule out? Lee’s formulation clearly states that what’s being rejected as explanatorily irrelevant is any kind of memory “even in the weak sense that it involves retaining contents from immediately past experiences” (Lee 2014a: 6). This seemingly functionalist characterisation involves three features: the kind of memory at issue consists in (i) some content-involving mechanism, which operates (ii) on the content of conscious perceptual experiences that have just occurred (its inputs), and (iii) serves to “retain” them in consciousness (outputs). Presumably, “retaining” means that the content of a conscious experience remains conscious even once the experience has ended, or at least that it can make its way back into consciousness immediately after the initial experience.12 Presumably, it’s also possible that the content thus retained via memory isn’t exactly identical to the content of the initial experience: it might largely overlap the original perceptual content, if the mnemonic content in question partially fades or degrades. Clearly, different kinds of memories might fall under the scope of this characterisation, including iconic memories, episodic memories of experiences just passed, as well as the sort of “retentions” Husserl (1991) posited.13 An advantage of such a characterisation, then, is that it promises to capture a shared trait of different kinds of memories, while ignoring their differences.14

The third thesis, R3, concerns experiences themselves—qua psychological events or states—and their temporal ontology. Again, the underlying thought appears to be that, insofar as the phenomenology and content of our sensory awareness of temporally extended events can be fully accounted for via the sort of temporally extended perceptual contents specified in R1 and R2, there’s no reason to expect experiences with such contents to be extended in time, let alone mirror the temporal structure of the events they represent. It is this third thesis, then, which grounds the negative strand in retentionalism regarding temporal isomorphies—and thereby distinctively targets extensionalist approaches for whom temporal experiences must be temporally extended in order to present or represent the temporal features of perceived events.15

R3’s formulation is often qualified along two dimensions: one temporal, the other modal. Temporally, first: perceptual experiences are in fact strictly unextended in time, one version of retentionalism has it:

temporally restricted R3: perceptual experiences of temporally extended events are instantaneous.

This is implied by another standard motivation for retentionalism:16

The Principle of Simultaneous Awareness (PSA): to be experienced as unified, contents must be presented simultaneously to a single momentary awareness (Dainton 2017: §3, p. 31).

Depending on what a “single momentary awareness” amounts to (specifically, whether such awareness is taken to occupy a single point, as it often seems to), PSA leads directly to a temporally restricted version of retentionalism.

Yet it’s not uncommon to grant that the relevant experiences do in fact have a little duration nonetheless:

[. . .] experiences, although they are not experiential processes, need not be instantaneous. An atomic experience might be a property instantiation enjoyed most fundamentally by a subject as they are over a short interval of time. Related to this, an atomic experience may be realized by an extended physical process (as I would argue all experiences are—see below). What makes this coherent is that the proper temporal parts of the realizing process need not themselves realize any experiences; in particular, they might be simply too short-lived. (Lee 2014a: 4)

This leads to a slightly weaker construal of R3:

temporally relaxed R3: perceptual experiences of temporally extended events have a relatively brief duration.

The other dimension along which R3 can vary is whether distinct events (or temporal parts of events) figuring in temporally extended contents must be experienced at the same time. That is,

modally restricted R3: perceptual experiences of temporally extended events have a content which necessarily represents successive events simultaneously—though not as simultaneous.

This version voices the main idea behind PSA, going back to Kant (1790/1980: 133), as well as Geach:

Even if we accepted the view . . . that a judgement is a complex of Ideas, we could hardly suppose that in a thought the Ideas occur successively, as the words do in a sentence; it seems reasonable to say that unless the whole complex content is grasped all together—unless the Ideas . . . are all simultaneously present—the thought or judgement just does not exist at all. (Geach 1957: 104) 17

Some retentionalists, on the other hand, opt for a slightly weaker proposal:

modally relaxed R3: perceptual experiences of temporally extended events have a content which can represent successive events simultaneously—though not as simultaneous.18

This weaker version often serves to motivate the retentionalist denial that the delivery of temporal information in conscious experience has to be temporally extended and structured. It needn’t be, the point goes:

Granted, I experience the red flash as being before the green one. But it need not be true that my experience or awareness of the red flash is before my experience or awareness of the green one. If I utter the sentence

The green flash is after the red flash,

I represent the red flash as being before the green one; but my representation of the red flash is not before my representation of the green flash. In general, represented order has no obvious link with the order of representations. Why suppose that there is such a link for experiential representations? (Tye 2003: 90)19

Note that the modally relaxed version of R3 might be construed differently, depending on how its modality (“can”) is interpreted. Indeed, it’s not entirely clear how the possibility in question should be understood. Perhaps, it’s not even alethic, but epistemic: something like (m1) “it is consistent with phenomenological data—or the nature of representation in general—that our temporal experiences are not in fact temporally extended or structured”. On the other hand, if the modality is genuinely alethic, the modal claim at issue is meant to be stronger, I take it, than a mere logical or metaphysical possibility—in the sense, say, that even if our temporal experiences are actually extended and structured temporally, we find (m2) some possible worlds containing experiences in some subjects (be they alien, or humans with a different psychological or neurophysiological make-up), which are not. Perhaps, the modality is (m3) nomological, or at least (m4) restricted to close worlds where human subjects are psychological and neurophysiological duplicates of actual humans.

These different interpretations, we’ll see (§3.2), make a difference to whether the modally relaxed version of thesis R3 is undermined by the considerations in the next sections. On the other hand, the modally restricted version of R3 clearly appears to be falsified by such empirical considerations, as do the temporally restricted and temporally relaxed versions. This means that the negative strand in retentionalism is under threat: the retentionalist rejection of isomorphies between the temporal properties of experiences and those of the events experienced hinges on R3, which relies on the assumption of partially delayed processing (pdp). If, however, the latter isn’t empirically viable, this undercuts the retentionalist denial of temporal isomorphies.

2. Colour-Motion Visual Asynchrony

Now to the argument: I begin by reviewing one experiment which sheds some light on the timeframe in which stimuli and their different features are processed, and how this affects perceptual consciousness. I’ll then explore its significance for the retentionalist view (§2.4).

2.1. Perceptual Latencies

The causal process whereby the occurrence of a stimulus in a subject’s environment leads to a conscious experience of that stimulus takes time. Psychologists divide that interval into three rough periods:20

external latency: the time it takes for a physical signal produced by the distal stimulus to reach the sensory receptors of a perceiving subject.

neural latency: the time it takes for the physical signals produced by a subject’s sensory receptors following proximal stimulation to “ascend the neural processing hierarchy”, including different brain areas responsible for processing different features of the stimulus (Holcombe 2009; 2015).

online latency: the time between completion of neural processing of a stimulus in central cortical areas and the occurrence of a conscious experience of that stimulus.21

There is evidence that different features of a stimulus can be processed at different speeds, and so incur differential neural latencies. For instance, differences in contrast intensity, or in chromatic brightness, can affect the speed at which dedicated neurons respond to such features: the more intense the stimulus, and the brighter the contrast, the faster the neuronal response. This can be observed early on in the lateral geniculate nucleus (LGN) of macaque monkeys, in their primary visual cortex, even in later stages of processing such as the superior temporal sulcus.22 There’s also evidence that colour can be processed faster than motion.23

2.2. Differential Latencies in Vision

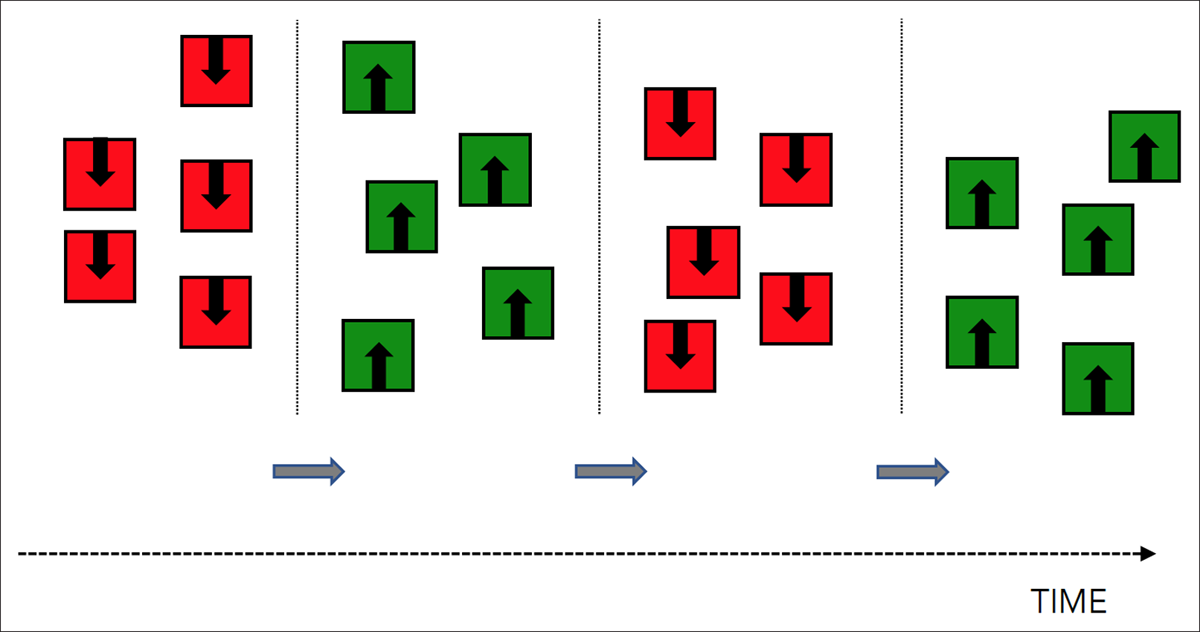

In an influential experiment by Moutoussis and Zeki (1997a), the display consisted of squares moving up and down at an alternation rate of 1–2 Hz. As they moved, their colour also changed from green to red at the same alternation rate: all squares simultaneously had the same colour and changed colour simultaneously, likewise with their direction of motion.24

Figure 1: Illustration of two oscillations (in-phase trial) in Moutoussis and Zeki’s (1997a) experiment.

The experiment included two series of trials: one where the period of oscillation for each feature took 565 ms, the other with oscillations at 706 ms (Moutoussis & Zeki 1997a; Bedell et al. 2003; 2006). In some trials, changes in motion direction and colour were in phase (i.e., the switch in both colour and motion was simultaneous), while in other trials they were not (the chromatic change occurred either midway through the motion, or so as to allow one colour to dominate through motion in one direction), and each combination was presented four times in mixed order (see Moutoussis & Zeki 1997a: 394, figure 2), with each trial consisting of ten repetitions. Subjects had to perform a pairing (or matching) task: that is, report the colour of the squares for a specified direction of motion, and vice versa.25

Moutoussis and Zeki found that, for the squares to appear with a uniform colour when moving in one direction, the change in the direction of motion must in fact occur about 80 to 140 ms before the change in colour.26 In other words, subjects systematically paired the direction of motion with the wrong colour:

Color-motion pairs perceived to occur simultaneously are not simultaneously present in the real world. By changing both the color and the direction of motion of objects rapidly and continuously and asking subjects to report which color-motion pairs were perceived as coexisting, it was found that motion was paired to the color present on the computer screen roughly 100 ms later (Moutoussis & Zeki, 1997a, 1997b). If there were no perception-time difference between color and motion, then the perceptual experience of the subjects should follow the reality occurring on the screen. These results thus suggest that the color of an object is perceived 100 ms before its direction of motion, a phenomenon we have called perceptual asynchrony and that we have attributed to the different processing-times necessary for the two functionally specialized systems to “finish” their corresponding jobs. These systems are thus not only processing but also perceptual systems, creating specific visual percepts in their own time and independently from one another. (Moutoussis 2014: 202–3)

The results vary somewhat as a function of the following factors:

angle of direction: if the direction of motion changes by less than 180 degrees (say the object’s trajectory shifts by 45 degrees only), motion needs to precede colour only by 80 ms.27

relative luminance: decreasing chromatic luminance reduced the effect by 25 ms, and decreasing the luminance of the motion reduced it by 40 ms.28

task: the effect is observed when subjects are asked to report which colour they see when the object is moving, say, upwards (pairing/matching task). Some studies (Nishida & Johnston 2002; 2010; Bedell et al. 2003; Clifford et al. 2003) suggest that the effect disappears almost entirely (reduced to about 6 ms) when subjects are tasked instead with reporting which change (colour or direction of motion) occurs first (temporal order judgment).29

These results demonstrate that, owing to the differential neural latencies with which different features of a stimulus are processed, what are in fact non-simultaneous features (motion preceding colour) of non-simultaneous presentations of a stimulus (or of successive stimuli) appear to occur simultaneously in experience. This, we’ll now see, has various implications for online latencies—the interval between the completion of neural processing for a representation of a given feature and when that feature becomes conscious.

2.3. Two Models

According to the online model,30 a stimulus feature becomes conscious immediately after its processing by a dedicated neural mechanism is completed.31 Hence, differences in neural latencies between colour and motion result in the colour and motion of one stimulus (at a time) becoming conscious at different times, which serves to explain visual asynchrony. Because the direction of motion of an upward green stimulus takes longer to process (about 100 ms more, say), such a feature becomes conscious at the same time as the colour (red) of the subsequent downward stimulus, which is processed at greater speed. As a result, subjects report seeing a red square moving upward, followed by a green square moving downward.32
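The pairing behaviour the online model predicts can be illustrated with a small simulation. This is a minimal sketch rather than anything drawn from Moutoussis and Zeki’s own analysis: the function names are hypothetical, and the absolute latencies (50 ms for colour, 150 ms for motion) are assumed for illustration, with only their 100 ms difference and the 565 ms oscillation period taken from the text.

```python
# Illustrative sketch of the online model (not the authors' own analysis).
# Assumed, hypothetical values: absolute latencies of 50 ms (colour) and
# 150 ms (motion); only their 100 ms difference is taken from the text.

def phase(t_ms, half_period_ms):
    """Return 0 during the first half of each oscillation, 1 during the second."""
    return int(t_ms // half_period_ms) % 2


def conscious_pair(t_ms, half_period_ms, colour_latency_ms,
                   motion_latency_ms, motion_lead_ms=0.0):
    """Colour and direction that are conscious together at time t_ms.

    On the online model each feature becomes conscious as soon as its own
    processing ends, so what is seen at t_ms is what was on screen one
    latency earlier. motion_lead_ms shifts the on-screen direction change
    ahead of the colour change (0 reproduces an in-phase trial).
    """
    colour = ("green", "red")[phase(t_ms - colour_latency_ms, half_period_ms)]
    direction = ("up", "down")[
        phase(t_ms - motion_latency_ms + motion_lead_ms, half_period_ms)
    ]
    return colour, direction


def mixed_fraction(half_period_ms, colour_latency_ms,
                   motion_latency_ms, motion_lead_ms=0.0):
    """Fraction of one oscillation in which a direction is seen with the 'wrong' colour."""
    uniform = {("green", "up"), ("red", "down")}
    start_ms = 1000  # skip start-up so every latency-shifted time is positive
    n_samples = int(2 * half_period_ms)  # one full oscillation, 1 ms steps
    mixed = sum(
        conscious_pair(t, half_period_ms, colour_latency_ms,
                       motion_latency_ms, motion_lead_ms) not in uniform
        for t in range(start_ms, start_ms + n_samples)
    )
    return mixed / n_samples


if __name__ == "__main__":
    half_period = 565 / 2  # the 565 ms oscillation period reported above
    print(mixed_fraction(half_period, 50, 150))       # in-phase trial
    print(mixed_fraction(half_period, 50, 150, 100))  # motion change leads by 100 ms
```

Under these assumptions, an in-phase trial yields mismatched colour-direction pairs for roughly a third of each oscillation, whereas letting the on-screen change in direction lead the change in colour by the latency difference removes the mismatch entirely, in line with the finding that motion must change about 100 ms before colour for the squares to look uniformly coloured in each direction.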

In contrast, Eagleman’s “delayed perception” model has it that the last stages of processing leading to the generation of a conscious experience involve postdictive mechanisms, to the effect that the visual system “wait[s] for the slowest information to arrive” before “going live”; Eagleman suggests a delay of about 80 ms (2010: 223).33 On this model, online latencies might outstrip neural latencies in the sense that the visual system generates a conscious representation only after all the synchronous features of a stimulus have been processed. But if the brain waits for the slower feature to be processed before generating a conscious experience of both, why expect any visual asynchrony at the conscious level, on this model? The timeframe is key: with an 80 ms delay, the most recent information about the direction of motion will still be missing if its processing is completed only, say, 100 ms after chromatic information is processed. Hence the asynchrony: when neural processing of the colour (green) of the upward moving square is completed, the direction of its motion is still being processed, even after 80 ms, given a 100 ms neural latency. This is why, the suggestion must go, its colour (green) ends up being paired, inaccurately, with the direction of motion of the previous downward-moving red stimulus.34

An additional possible difference between the two models concerns feature-binding in conscious experience. For the online model, different types of features (e.g., colour and motion) are processed separately and independently—as Moutoussis insists in the last sentence quoted earlier. And since, on this model, such features will become conscious as soon as their neural processing is completed, this means that the colour of a stimulus will “make it into” consciousness slightly before its direction of motion does, if the latter is processed more slowly. In other words, the online model suggests, cases of visual asynchrony provide some evidence that there is no feature-binding of the different features of a stimulus in conscious experience (following Zeki 1993). That is, there is strictly no single experience representing, say, a red square moving upward, on this version of the online model. Instead, an experience of redness and a distinct experience of upward motion occur more or less at the same time, which explains why subjects report them as simultaneous when engaged in a pairing task.

It might seem natural, on the other hand, to view the delay model as operating under the assumption that the different features of a stimulus are bound together in conscious experience: indeed, the point of the 80 ms online delay could be precisely to allow for feature-binding, whereby colour and motion, which are processed separately and at different speeds, are then bound together in a conscious representation of both features during the online delay. Subjects thus enjoy a visual illusion of a red square moving upward, which explains the pairing judgments subjects are tasked to report.35

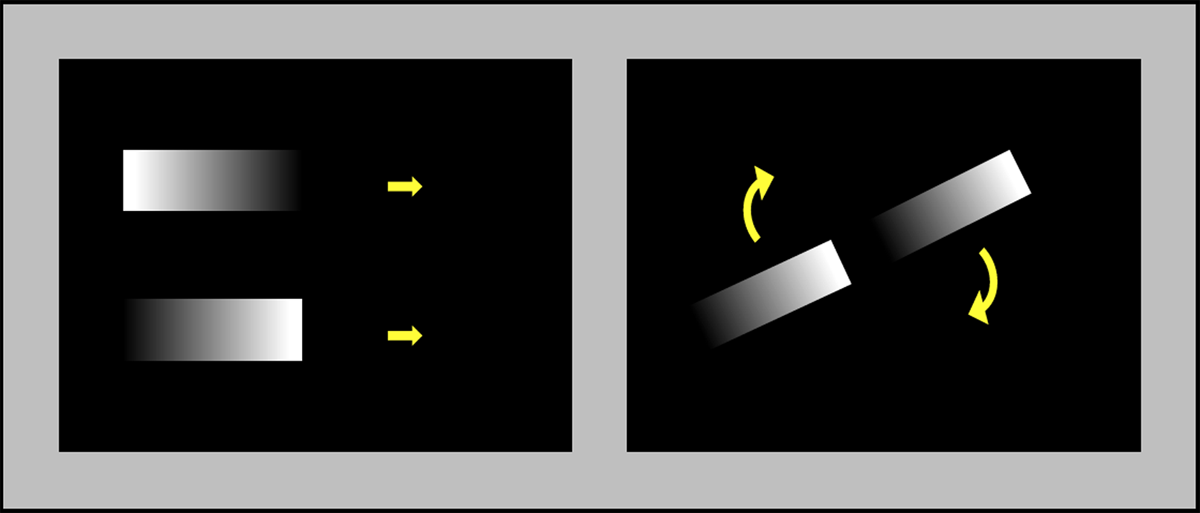

The little evidence there is to adjudicate between these models doesn’t seem to support the delayed perception model, however.36 Consider the illustration on the left of figure 2:37

The rectangles are moving from left to right and back in synchrony—that is, in the same direction, at the same speed, occupying the same relative location at the same time. Whereas the top rectangle is brighter on its left side, the bottom rectangle has a brighter right side. If brighter stimuli are processed faster, one should expect the brighter side of each stimulus to be processed more rapidly than their darker side. So, were the online model to be correct, the reasoning goes, one should expect that, when both rectangles are moving to the right, the apparent length of the top rectangle should seem to contract somewhat while that of the bottom rectangle should seem to expand (Eagleman 2010: 221–23; Holcombe 2015: 825). The thought appears to be that, since the brighter side of each rectangle is processed faster than the other, it will become conscious earlier, if the online model is true. Thus, as it moves towards the right, the left-hand side of the top rectangle will become conscious before the right-hand side, and will retain this temporal advantage throughout its rightward motion. When the right-hand side becomes conscious a little later, it will appear at a location which, owing to its online delay, is closer to the location occupied by the faster processed left-hand side: closer, that is, than it in fact is, hence the apparent contraction. Similarly with the bottom rectangle: its brighter right-hand side will become conscious first, while the left-hand side is delayed and appearing at a location further apart from that of the faster processed right-hand side, thereby producing an apparent dilation of the stimulus when moving rightward.
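The reasoning in this paragraph can be put as a small worked equation. It is a sketch under simplifying assumptions not found in the text: a constant rightward speed v, a true length ℓ, fixed latencies τ_b < τ_d for the brighter and darker sides respectively, and edge positions x_L and x_R. On the online model, an edge seen at time t appears where it was one latency earlier.

```latex
\begin{align*}
x_L(s) &= x_0 + v s, \qquad x_R(s) = x_0 + \ell + v s,\\
\hat{\ell}_{\text{top}} &= x_R(t - \tau_d) - x_L(t - \tau_b)
  = \ell - v(\tau_d - \tau_b) < \ell
  \quad \text{(left side brighter: apparent contraction)},\\
\hat{\ell}_{\text{bottom}} &= x_R(t - \tau_b) - x_L(t - \tau_d)
  = \ell + v(\tau_d - \tau_b) > \ell
  \quad \text{(right side brighter: apparent dilation)}.
\end{align*}
```

The size of the predicted distortion is thus the product of the speed and the latency difference. If both edges instead became conscious with one common latency, the correction term v(τ_d − τ_b) would vanish and no change in apparent length would be expected.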

Were such an illusion to be observed, advocates of both models seem to agree, it would rule in favour of the online model (Eagleman 2010: 221–26; Holcombe 2015: 825). Though the brighter side may be neurally processed faster than the other, the reasoning seems to go, the different neural latencies at play may still fall within an 80 ms online delay, for instance. Hence, according to the delayed perception model, both sides of the rectangle could be fully processed before becoming jointly conscious following the 80 ms delay. That’s why there’s no reason to think, on the delay model, that one side of the rectangle becomes conscious earlier than the other, and so no reason to expect any apparent contraction or expansion of the stimulus as a result.38

Yet it appears that the sort of illusory experience the online model predicts does in fact occur. While Eagleman (2010: 226) has conceded this much, he has tried to explain the illusion away as an artefact of using a neutral density filter to reduce contrast over parts of the screen. However, White et al. (2008) report having produced the illusion without a density filter: in their case, a rotating Hess effect, similar to the illustration on the right of figure 2, where the rectangles (or rather lines) appear to curve. This suggests that different parts of the figure with different degrees of illumination reach consciousness at different times, as the online model predicts.39

2.4. Significance for Retentionalism

How does the evidence from visual asynchrony bear on the retentionalist view? At first sight, it might seem as though the online model is inimical to retentionalism: if stimuli become conscious very soon after being neurally processed, one might naturally expect that two successive events (e.g., two notes in a ringtone, or two successive presentations of the same red object) would become conscious successively, as soon as each is neurally processed. Conversely, the delayed perception model might look like a natural fit for retentionalism: an 80 ms delay could help explain how two successive events can nevertheless become simultaneously conscious in a temporally extended content, as retentionalism requires. If so, any evidence supporting the online model against the delay model (as in §2.3) ought to sound like bad news for retentionalism.

The situation is a little more complicated, though—and, in fact, worse for retentionalism. For even if the evidence had favoured the delay model rather than the online model, the former isn’t really such a natural fit for retentionalism after all. In fact, evidence from visual asynchrony appears to undermine retentionalism in at least two respects, even when the “delayed perception” model is taken for granted.

To see this, it’s important to keep two commitments of retentionalism clearly in sight. First, the “no-dependence-on-memory” desideratum for temporally extended perceptual contents:

non-mnemonic temporal content: if a conscious experience e occurring at some time t represents an event y succeeding some event x, (i) e depends on sensory stimulation by x (at t-n) and y (at t-n*), neurally processed so that (ii) both x at t-n and y at t-n* can figure in the content of a conscious perceptual experience for the first time at t, and (iii) neither the representation of x nor that of y in e “retain the content” of an earlier conscious experience of x (at t-n) or y (at t-n*).

Second, clauses (i) and (ii) in this desideratum have the following implication for the neural processing underpinning temporally extended perceptual contents:

partially delayed processing (pdp): if some perceived event x occurring at t-n and some later event y occurring at t-n* can both figure in the content of a conscious perceptual experience e occurring at t, then the neural processing NPx prompted by x at t-n and which results in experience e at t begins earlier—and lasts longer—than the underlying neural processing NPy of y from t-n* to t.

To repeat, retentionalism requires that non-simultaneous events represented at the same time in the same experience must have different neural or online latencies.

Yet, evidence from visual asynchrony suggests there are cases where this isn’t so. For the evidence surveyed in §2.2 indicates, not just that (a) there don’t seem to be any neural or online delays of the sort retentionalism needs, but that (b) for those neural latencies which do impact conscious experience, the resulting experiences lack a temporally extended content.

A brief summary of the evidence for (a) includes:

(i) no delay: even with different synchronous features of a stimulus, when one feature (motion and its direction) has a longer processing latency than the other (colour), the brain doesn’t appear to wait until the more slowly processed stimulus feature (motion) has been fully processed. Rather, each feature becomes conscious in its own time, with colour reaching consciousness before the direction of motion. This holds for the online model, as well as with the 80 ms delay posited by the delayed perception model—the differential online latency between colour and motion is simply much shorter (about 20 ms rather than 100 ms) on the latter.

(ii) constant processing: if a colour of a given intensity is processed at a certain speed, it is processed roughly at that same speed through successive presentations of the stimulus (the same goes for the slower processing of direction of motion). This explains how the effect of Moutoussis and Zeki’s experiment occurs through successive presentations of the display in a given trial (consisting of 10 repetitions): the upward direction of motion of an earlier presentation is wrongly paired with the red colour of a later presentation, followed by the downward motion of yet another earlier presentation being paired (wrongly again) with the green colour of a later presentation, and so on. There is no indication, that is, that processing speeds or online delays somehow become shorter over successive presentations, as one would expect if partially delayed processing (pdp) were true.

As for (b):

(iii) no extended content: in a pairing task, the direction of motion of an earlier stimulus (x at t-n) is reported as synchronous with the colour of a faster-processed later stimulus (y at t-n*): though the motion of x at t-n and the colour of y at t-n* seem to become conscious more or less at the same time (t), both features are judged (wrongly) to occur simultaneously.

(iv) delay not enough for extended content: if the brain did wait an extra 80 ms before binding in consciousness the more slowly processed direction of motion of x at t-n with the more quickly processed colour of y at t-n* (as the delay model predicts), such delay wouldn’t suffice to guarantee that the resulting experience is representing two successive events or their features as such: the point of the delay model is to (1) explain how colour and motion are bound into a conscious representation of a simultaneous event (e.g., an upward-moving red square), where (2) the posited delay (80 ms) is short enough to explain how information about the direction of motion (e.g., the red square is in fact moving downward) arrives too late to be factored in such a conscious representation. Even on the delay model, that is, processing isn’t so delayed as to allow that different synchronous features of the same stimulus (rather than successive ones) are represented in the same conscious experience—let alone that successive stimuli could be processed to become conscious simultaneously.

It isn’t just that the evidence from visual asynchrony fails to line up with the main claims and commitments of retentionalism. The assumption of partially delayed processing (pdp), which the retentionalist postulation of temporally extended perceptual contents demands, seems inconsistent with what happens in cases of visual asynchrony.

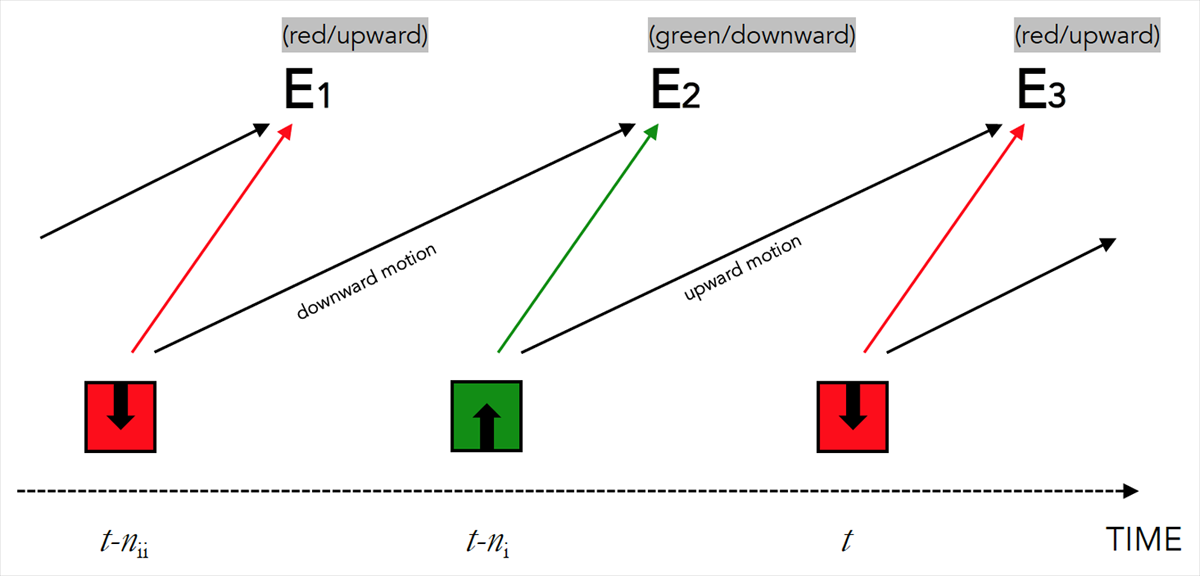

Consider figure 3, to begin with, which depicts the sort of experiences subjects appear to have in cases of visual asynchrony—with the distal stimuli at the bottom, the contents of the relevant experiences at the top (highlighted in grey), and the different processing latencies marked by the orientation and length of the different arrows in-between:40

Note first that no model or explanation of visual asynchrony posits or needs to posit an experience at t (or thereafter) which represents both events x (stimulus presentation at t-nii) and y (stimulus presentation at t-ni), let alone represents them as succeeding one another. The main difference between the online and delay models is how long after a stimulus is neurally processed the conscious experiences e1, e2, e3 occur: 80 ms or much less.

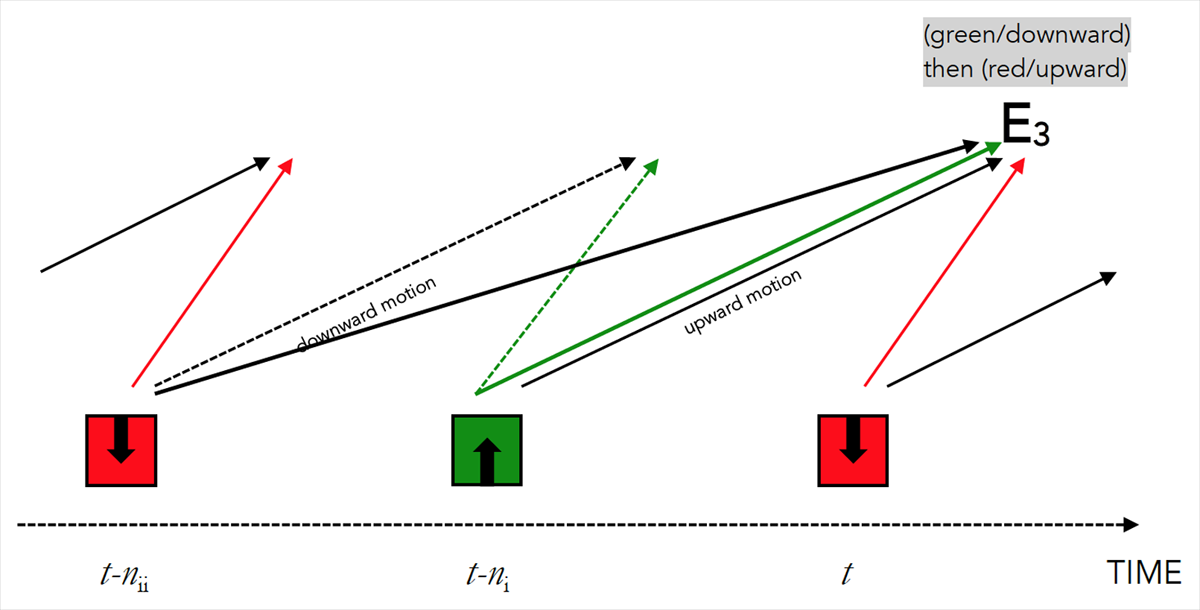

But why exactly couldn’t the experiences depicted in figure 3 have temporally extended contents instead? Can’t a retentionalist simply re-describe the situation as in figure 4: the subject’s experience right after t (e3) has a temporally extended content representing the stimulus’ changes from t-nii to t—that is, the downward motion of a green square, followed by the upward motion of a red square? Figure 4, that is, illustrates the kind of partially delayed processing (pdp) retentionalism would need to assume in this case:

There are three problems with this picture. First, for experience e3 to represent both x (the stimulus at t-nii) and y (the stimulus at t-ni) in a temporally extended content, online latencies would have to be significantly longer than what the evidence suggests. The online latency for the stimulus’ downward motion at t-nii would have to be significantly longer than the online latency for the stimulus’ upward motion at t-ni in order for both to become conscious simultaneously after t (similarly with online latencies for the stimulus’ green colour at t-ni and its red colour at t). The problem isn’t just that there’s no evidence that this is how online latencies unfold. The problem is that the evidence from visual asynchrony shows that such partially delayed processing (pdp) for the same type of features is in fact rather unlikely: processing of the direction of motion remains stable in such conditions, with a constant latency across successive presentations or repetitions. Otherwise, the asynchrony effect might not hold across the ten repetitions which constitute a given trial in Moutoussis and Zeki’s experiment.

What’s more, the online delays implied by such a suggestion should strike one as implausibly long. Suppose the delay in question encompasses processing of just two successive presentations of the stimulus (e.g., at t-nii and t-ni), even at the rapid alternation rates used in cases of visual asynchrony: from 250 ms between changes (in the version of Nishida & Johnston 2002) up to 565 ms or even 700 ms (for Moutoussis & Zeki’s 1997a version). Assume it takes only about 80 ms at the earliest for the second presentation of the stimulus at t-ni to become conscious (that is, assume a rather short neural latency with no added online latency, if only for the sake of argument): this version of retentionalism would still require processing delays for the first presentation of the stimulus at t-nii ranging from 330 ms up to 780 ms, in order for an experience like e3 in figure 4 to have an extended content representing just two successive presentations of a stimulus at rapid alternations.41
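The arithmetic behind these figures can be made explicit with a short sketch. It assumes, as the text does for the sake of argument, an 80 ms latency for the later presentation. The function name `required_latency_ms` and the dictionary labels are illustrative additions, not part of the cited studies.

```python
# Illustrative sketch only: the function name and structure are assumptions
# introduced here; the numbers are those quoted in the text.

def required_latency_ms(alternation_ms: int, later_latency_ms: int = 80) -> int:
    """Latency the earlier presentation would need in order to become
    conscious at the same moment as the presentation that follows it.

    The earlier stimulus occurs `alternation_ms` before the later one, so its
    processing must last that much longer than the later stimulus's latency.
    """
    return alternation_ms + later_latency_ms


# Intervals between changes mentioned in the text (ms).
alternation_intervals = {
    "Nishida & Johnston 2002": 250,
    "Moutoussis & Zeki 1997a (lower figure)": 565,
    "Moutoussis & Zeki 1997a (upper figure)": 700,
}

required = {
    label: required_latency_ms(ms) for label, ms in alternation_intervals.items()
}

for label, latency in required.items():
    print(f"{label}: earlier presentation needs about {latency} ms")
```

The shortest and longest intervals yield the 330 ms and 780 ms bounds cited above.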

Second, what about experience e2 (right after t-ni) which precedes experience e3 (see figure 3)? There’s no reason why retentionalists should deny that an experience occurs at that time. But if the contents of e2 and e3 are both temporally extended (as in figure 4) by virtue of partially delayed processing (pdp), the content of e3 representing the succession [green downward moving square followed by red upward moving square] from t-nii to t is likely to partially overlap that of e2, since both have [green downward moving square] from the stimulus between t-nii and t-ni as part of their content. The retentionalist view would then need to explain the status of that part of e3’s content it shares with e2’s. For if such content rests on neural processing already deployed for the same representation of the stimulus in an earlier experience (e2), doesn’t it violate the “no-dependence-on-memory” desideratum on temporally extended contents? That is, isn’t the shared content [green downward moving square] from t-nii to t-ni in fact mnemonically “retained” from e2 to e3—and if not, why not?

Third, and more importantly, it becomes unclear why there should be any visual asynchrony in the first place. For if partially delayed processing (pdp) is true, it remains mysterious why, in experience e3 (figure 4), the upward motion of y at t-ni should end up being bound with the red colour of a later presentation of the stimulus at t, rather than being (accurately) bound with its own colour (green) at t-ni—ditto for the stimulus’ features at t-nii. On both the online and delay models, what accounts for the asynchrony is the fact that there isn’t enough time to complete neural processing of both synchronous features (i.e., colour and direction of motion at t-ni) before one (colour) becomes conscious, owing to the longer neural latency of the other (motion direction). But given the longer neural or online latencies retentionalists need to posit in pdp, if the brain has all this additional time to produce a conscious experience of successive red and green moving squares, doesn’t it thereby have ample time to fully process and accurately bind the synchronous colour and direction of motion of a given synchronous presentation? There’s no reason to expect that cases of visual asynchrony arise, if partially delayed processing (pdp) is assumed, and no mechanism to explain why they nevertheless do.

Here, retentionalism faces the same difficulty the delayed perception model ran into: the latter, we saw, implies that illusions like the Hess illusion ought not to occur. The retentionalist has to posit even longer online delays, since such delays must encompass the completion of neural processing for several successive presentations or repetitions of the changing stimulus—whereas the 80 ms delay of the delayed perception model was meant to explain just one synchronous presentation of the stimulus at a time, not several. And if there’s reason to rule out online delays of 80 ms, why think that longer online delays are somehow likelier?

The difficulties surveyed thus far arise in a specific set of conditions, where the alternations of the changing stimuli from green to red and moving up and down proceed at the rate of 1–2 Hz. It’s in those conditions that evidence of visual asynchrony has surfaced. In the next section, I attempt to generalize the problem.

3. The Causal Argument

If partially delayed processing is false (at least in some cases), the question is what to replace it with—what might be the most minimal deviation from partially delayed processing (pdp) nevertheless compatible with evidence from visual asynchrony? To zero in on such a replacement, it may help to start with three related general assumptions about the mechanisms underpinning our perceptual systems: (a) perception of a stimulus essentially involves a causal chain from the occurrence of the distal stimulus in the subject’s environment to a conscious experience of that stimulus, via proximal stimulation of the relevant sensory receptors and subsequent processing mechanisms, all the way to various dedicated cortical areas. Consequently, (b) such processes take time: there’s a temporal gap, even if a very brief one, between the occurrence of a worldly event and its presentation or representation in conscious experience.42 This temporal gap is (c) relatively constant, insofar as the part of the causal chain beginning with the proximal stimulus (stimulation of a sensory organ) is concerned: even if not perfectly constant, there are constraints on the speed of processing throughout the perceptual system, to the effect that there’s at least a lower and upper bound on how long it takes a stimulus to be processed and become conscious.

The constancy hypothesis, (c), is problematic for the retentionalist view, since it contradicts the assumption of partially delayed processing (pdp) retentionalism relies upon. The difficulty concerns what retentionalism says about the temporal ontology of experience—thesis R3: namely, that experiences with temporally extended contents aren’t, or needn’t be, temporally extended or structured. Call this the “causal argument” against the retentionalist view. The argument should seem unsurprising: its interest lies mostly in exactly which retentionalist commitment(s) it undermines, which assumptions it leaves standing, and how retentionalists might try resisting it. Before outlining the argument, the constancy hypothesis needs some refinement.

3.1. Constant Processing

Is the temporal gap from proximal sensory stimulation to conscious experience really constant? After all, evidence from visual asynchrony reveals that the processing of different features of a stimulus (e.g., motion, orientation) is delayed by about 100 ms in comparison to others (e.g., brightness, colour).

Properly contextualized, the assumption that the temporal gap is constant remains consistent with such evidence. First, relative to those features (bright contrasts, more intense colours) that are neurally processed faster, there’s no reason to think that the interval between proximal sensory stimulation and a resulting conscious experience doesn’t stay constant through successive presentations. Ditto with those features processed somewhat less rapidly (motion and its direction, orientation, etc.). Second, though there is evidence that neural and online latencies vary between different sensory modalities,43 the constancy hypothesis can be restricted to a specific modality. More importantly, the constancy hypothesis isn’t at all a claim to the effect that different features, in different sensory modalities, can be neurally processed more or less at the same time. It’s a claim, rather, about the relative speed and duration of neural processing for successive presentations of the same stimulus’ features through the same sensory channels—it’s in this sense that processing latencies are supposed to remain relatively constant over time.

Accordingly, different perceptible features within a sensory modality can be grouped in terms of the interval it takes for them to be neurally processed and reach consciousness. For each such group, one can obtain a different specific version of the following principle, the causal constancy assumption:

causal constancy assumption (cca): for a given sensory modality, and for some properties F, G, H, . . . of a stimulus x, perceived through that modality (and for some similar experimental settings w1, w2, w3, . . .), the processing interval λ from proximal sensory stimulation caused by x to the onset of a conscious perceptual experience of F, G, or H, . . . is constant (across situations w1, w2, w3, . . .).

Reference to “similar experimental settings w1, w2, w3” merely serves to emphasise that it is not enough to characterize such causal/temporal assumptions regarding neural processing solely in terms of the features being processed: the conditions in which they are perceived (e.g., whether adaptation can take place, what angular information about motion is presented, at what rate the features alternate, what stimuli they are preceded by, etc.) can make a difference too.44

Note also what talk of “constancy” amounts to in this context: it’s not just that the temporal gap λ after which a conscious experience of a given feature F occurs takes (i) no less than λ across successive proximal stimulations caused by F, but also that it takes (ii) not much more than λ in normal circumstances. Indeed, evidence from visual asynchrony doesn’t just support a conclusion about how long a conscious experience is delayed (or not) after proximal stimulation, owing to the pace of neural processing. It provides information about the maximal relative length of such a delay. For instance, in Moutoussis and Zeki’s (1997a) experiment, the evidence suggests it always takes longer for the visual system to process information about the direction of motion than about colour. But this delay (between 80 and 140 ms) isn’t just a lower bound: neural processing of the direction of motion is always behind the neural processing for colour by more or less the same amount. Significantly, this holds for successive presentations of the same stimulus throughout a given trial (in this case, ten repetitions, in the same conditions).

This means that λ needn’t be a strict or precise value: it can be a limited range. There is room for some variation between 80 and 140 ms, both across different trials carried out in different conditions, and through successive repetitions within a given trial. The latter, for instance, would allow that λ compresses somewhat over several successive presentations of the same stimulus with the same stimulus features presented in the same conditions. For example, processing of a green surface could well accelerate over the course of successive repetitions—thus, λ could be a little longer for neural processing at the onset of a stimulus compared to its offset. Such compression, however, still requires some minimum delay (λ’s lower bound) in the processing of each successive presentation of the relevant stimulus feature.45 As we’ll see, this is all the argument below really needs—provided, that is, that processing of a later presentation of the same stimulus feature isn’t so compressed as to become conscious simultaneously with an earlier one (in which case, pdp would hold, and processing wouldn’t be constant at all, even in this moderate sense).
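The proviso can be put as a simple inequality: a later presentation becomes conscious after an earlier one so long as the latency shrinks by less than the interval separating the two presentations. The sketch below checks this under assumptions made purely for illustration: that λ ranges over 80 to 140 ms, and that presentations are 250 ms apart (the fastest alternation mentioned earlier). The function names are hypothetical.

```python
# Illustrative sketch: the names, and the pairing of these particular numbers,
# are assumptions made here, not results reported in the cited experiments.

def onset_ms(stimulus_time_ms: float, latency_ms: float) -> float:
    """Time at which a presentation becomes conscious: stimulus time + latency."""
    return stimulus_time_ms + latency_ms


def stays_successive(
    gap_ms: float, earlier_latency_ms: float, later_latency_ms: float
) -> bool:
    """True if the later presentation becomes conscious strictly after the
    earlier one.

    Equivalent to: earlier_latency_ms - later_latency_ms < gap_ms.
    """
    earlier_onset = onset_ms(0.0, earlier_latency_ms)
    later_onset = onset_ms(gap_ms, later_latency_ms)
    return later_onset > earlier_onset


LAMBDA_MAX_MS = 140.0  # upper bound of the range mentioned in the text
LAMBDA_MIN_MS = 80.0   # lower bound of the range mentioned in the text
GAP_MS = 250.0         # assumed interval between successive presentations

# Worst case for constancy: latency drops from the maximum to the minimum.
max_compression = LAMBDA_MAX_MS - LAMBDA_MIN_MS
print(max_compression)                                         # 60.0
print(stays_successive(GAP_MS, LAMBDA_MAX_MS, LAMBDA_MIN_MS))  # True
```

On these assumptions, simultaneous onset would need the latency to drop by the full 250 ms, which is far more than the 60 ms of compression the range allows.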

Finally, note that cca doesn’t presuppose the online or delay model. In particular, cca can still be true if the delayed perception model is preferred: for even if the production of a conscious experience is delayed by about 80 ms to allow for the slower processing of other simultaneously presented features, 80 ms serves as a maximum too.

3.2. The Argument

Imagine seeing a red fire-truck cross your visual field at great speed—in a few seconds, it is gone. Bright red and seen in broad daylight, the fire-truck’s colour is likely to be neurally processed relatively quickly. Its motion (not just the fact it is moving, but its trajectory through the scene) is the sort of temporally extended event one can perceive, according to retentionalism, via a temporally extended content. The example can be schematically described as follows:

(i) at t1, the red fire-truck is located at l1.

(ii) at t2, the red fire-truck is located at l2.

(iii) at t3, the red fire-truck is located at l3.

(iv) at t4, the red fire-truck is located at l4.

(v) at t5, the red fire-truck is located at l5.

Suppose that each time—t1, t2, t3, t4, and t5—is separated from the next by about 200 ms, so that the interval from t1 to t5 lasts about 800 ms. Suppose also that the perceptual conditions from t1 to t5 (lighting, your visual attention focused on the truck, etc.) remain perfectly stable.

Next, apply cca:

causal constancy assumption (cca): for a given sensory modality, and for some properties F, G, H, . . . of a stimulus x, perceived through that modality (and for some similar experimental settings w1, w2, w3, . . .), the processing interval λ from proximal sensory stimulation caused by x to the onset of a conscious perceptual experience of F, G, or H, . . . is constant (across situations w1, w2, w3, . . .).

It’s quite possible that different instances of cca, with different values for λ, need to apply: one for the colour of the fire-truck, another for its motion and its direction, or for its relative location, if these features are neurally processed at different speeds. But since the colour of the fire-truck can be processed fairly rapidly, and is seen in the same perceptual conditions throughout, application of cca to the situation ((i)–(v)) delivers the following:46

(1) at t1+λ, S has a conscious visual experience of the fire-truck’s redness at l1.

(2) at t2+λ, S has a conscious visual experience of the fire-truck’s redness at l2.

(3) at t3+λ, S has a conscious visual experience of the fire-truck’s redness at l3.

(4) at t4+λ, S has a conscious visual experience of the fire-truck’s redness at l4.

(5) at t5+λ, S has a conscious visual experience of the fire-truck’s redness at l5.

This description of S’s conscious experience through the relevant interval—(1) to (5)—can be summarized as follows:

(6) from t1+λ to t5+λ, S has a succession of different conscious visual experiences, each with a different representational content: each represents the fire-truck’s redness at a different location.
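The step from (i)–(v) to (1)–(6) can be traced in a few lines. In the sketch below, only the 200 ms spacing comes from the example; the 100 ms value for λ is a placeholder assumption, and the variable names are introduced here for illustration.

```python
# Illustrative sketch: the latency value is a placeholder assumption; only the
# 200 ms spacing between t1 ... t5 comes from the example in the text.

STEP_MS = 200      # spacing between t1 ... t5 in the example
LATENCY_MS = 100   # hypothetical value of λ for colour

# (time of stimulus in ms, location label) for t1 ... t5, with t1 set to 0.
stimulus_events = [(i * STEP_MS, f"l{i + 1}") for i in range(5)]

# cca: each presentation becomes conscious a constant λ after it occurs.
experiences = [(t + LATENCY_MS, f"red at {loc}") for t, loc in stimulus_events]

for onset, content in experiences:
    print(f"{onset} ms: {content}")

onsets = [onset for onset, _ in experiences]
contents = [content for _, content in experiences]
```

Whatever constant value is chosen for λ, the onsets stay 200 ms apart and each experience has a different content, which is what (6) states.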

Obviously, this carries serious implications for the different interpretations of thesis R3, the retentionalist claim about the temporal ontology of experience.

First, (6) clashes with the temporally restricted version of claim R3—that perceptual experiences with temporally extended contents are instantaneous. Since neural processing takes time, and since the time it takes for a given feature F (colour) to be neurally processed in the same conditions is relatively constant, it takes no less and no more than λ for successive presentations of such a feature through t1 to t5 to be processed. In which case, successive presentations lead, via λ in cca, to a succession of different experiences. Even if neural processing of feature F did shorten progressively over the interval from t1 to t5 (becoming faster at t5 + λ than it was at t1 + λ), the conclusion would still hold. For even a relatively inconstant and narrowing temporal gap λ* would suffice to guarantee that an experience of the fire-truck’s colour from t1 to t5 occupies an interval from t1+λ* to t5+λ* and varies in content across that interval.

The modally restricted version of claim R3—that the temporally extended content of a perceptual experience must be such that everything represented is represented simultaneously—is falsified too, for the same reason. Passage of a bright red fire-truck in front of you from t1 to t5 entails, via cca, that conscious experiences of the successive temporal parts of the passing truck—that is, the fire-truck at t1, and then at t2, and then at t3, etc.—are consciously experienced after roughly the same interval (λ). Hence, an experience of the truck’s redness at l1 will become conscious at t1+λ, followed at t2+λ by an experience of the truck’s redness at l2, and so on.

As a result, the temporally relaxed version of retentionalism—that a perceptual experience with a temporally extended content can have a brief extension but still lack temporal structure and distinct temporal parts—is also contradicted by (6). An experience of the fire-truck from t1+λ to t5+λ will have different successive temporal parts with distinct contents (representing the truck’s redness at different locations).

Finally, whether the modally relaxed version of R3—that an experience can represent the successive temporal parts of a temporally extended event simultaneously—is incompatible with (6), depends on how the modality expressed by the relevant “can” is interpreted. If it’s meant to express a “mere possibility”—for example, that there could have been some creatures with unextended or unstructured experiences, as in (m2)—then there’s no inconsistency between (6) and the modally relaxed version of R3. The fact that our experiences do not actually represent the successive temporal parts of extended events simultaneously doesn’t rule out that the experiences of other possible creatures—including possible humans with different kinds of brains—could have. Of course, such a possibility is rather uninformative in this context: so construed, the modally relaxed version of R3 may be true, yet tells us hardly anything about our actual experiences. If, on the other hand, the possibility in question is meant to be restricted to creatures like us, with the kinds of brain we actually have, in the conditions in which our actual brains usually operate—as in (m3) or (m4)—then it looks as though such a possibility is ruled out by (6): cca is grounded in evidence about our actual neural processes, and implies that the perceived successive temporal parts of a moving fire-truck are not neurally processed so as to appear simultaneously in consciousness.47

In short, every substantive interpretation of the third retentionalist thesis (R3) is undermined by cca, the alternative to partially delayed processing (pdp).

3.3. Response #1: Re-Description Strategies

As I said, the argument just articulated is hardly surprising. The interesting question is: is there room for retentionalists to resist it—without, that is, merely rejecting cca by digging their heels in about pdp? A retentionalist could insist that most experiences in (1) to (5) above ought to be ascribed, at the very least, a temporally extended content. And indeed, nothing in the description from (1) to (5) above precludes the retentionalist from offering a more detailed description of the relevant experiences and their respective contents. For instance,

(1) at t1+λ, S has a conscious visual experience of the fire-truck’s redness at l1.

(2*) at t2+λ, S has a conscious visual experience of the fire-truck’s redness at l1 & at l2.

(3*) at t3+λ, S has a conscious visual experience of the fire-truck’s redness at l2 & at l3.

(4*) at t4+λ, S has a conscious visual experience of the fire-truck’s redness at l3 & at l4.

(5*) at t5+λ, S has a conscious visual experience of the fire-truck’s redness at l4 & at l5.

This isn’t the only way in which to sketch a proposal of this sort. Perhaps, some such contents are more extended than others: perhaps, the experience at t5+λ represents the whole sequence from t1 to t5, say.

No matter how the details are worked out, the suggestion risks running afoul of the retentionalist’s “no-dependence-on-memory” desideratum on temporally extended perceptual contents. For instance, the experience at t3+λ represents some of the same events (i.e., the truck being red at l2) already represented in the experience at t2+λ. Hence, the content of the later experience (3*) appears to “retain” part of the content of the preceding one (2*) in consciousness (in the sense specified by Lee—§1).48
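The overlap at issue can be displayed by listing what each re-described experience shares with its predecessor. The sketch below models contents as sets of location labels, a simplification introduced here for the purpose of the example; the variable names are likewise hypothetical.

```python
# Illustrative sketch: contents are modelled as sets of location labels,
# a simplification introduced here for the purpose of the example.

redescribed_contents = {
    "(1)": {"l1"},
    "(2*)": {"l1", "l2"},
    "(3*)": {"l2", "l3"},
    "(4*)": {"l3", "l4"},
    "(5*)": {"l4", "l5"},
}

labels = list(redescribed_contents)

# For each pair of consecutive experiences, collect what the later one shares
# with the earlier one.
shared = {
    (earlier, later): redescribed_contents[earlier] & redescribed_contents[later]
    for earlier, later in zip(labels, labels[1:])
}

for (earlier, later), overlap in shared.items():
    print(f"{later} shares {sorted(overlap)} with {earlier}")
```

Every experience after the first shares exactly one location with its predecessor, and it is this shared part whose non-mnemonic status the retentionalist would need to explain.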

Worse, what the retentionalist ends up with, in any case, is still an extended experience temporally structured by way of its distinct temporal parts—contra R3—owing to the distinct extended contents in the succession from (1) to (5*). What this shows, interestingly, is that claims about the temporally extended contents of perceptual experiences—that is, theses R1 and R2 in the retentionalist view—can be severed from thesis R3 about the temporal ontology of experience, once partially delayed processing (pdp) is replaced by something like cca. This serves to highlight the rather crucial role pdp plays, even if usually left unarticulated, in tying together the different strands of the retentionalist view.

3.4. Response #2: Integrated Processing of Temporal Features

Another facet of the causal argument worth noting: cca does not clash in any way with the more compelling considerations advanced to motivate the retentionalist view. Hence, it seems such considerations are impotent in blocking the argument in §3.2.

For example, one of the most interesting and promising attempts in this regard is Geoffrey Lee’s (2014a; 2014b) appeal to Reichardt detectors (2014a: 14): a simple model of a processing mechanism to explain motion perception, composed of one detector tracking edges at one location in the visual field and another detector tracking edges at another location. The first detector will be triggered by a moving stimulus first, followed by the second detector when the stimulus reaches the area it is sensitive to. But the information processed by the first detector must somehow be delayed until the second detector has been stimulated and its output processed, so that both pieces of information can be “integrated” in order to represent motion:

Suppose we have temporal information at the periphery that is contained in a temporally extended pattern of receptor stimulation, say on the retina. In order for the representation of this information to causally impact post-perceptual processing, each relevant temporal part of the initial extended stimulation has to leave a trace in the brain. If you consider the process leading up to, say, a verbal report of the information, you can see that each of these traces will have to be simultaneously present before the report is made: the alternative is that traces from certain parts of the stimulus no longer exist, and therefore can have no causal impact on the report. Furthermore, the traces will have to be integrated in the right way for simultaneous representation of each temporal stage of the input to be able to control later processes in an appropriate way: if each relevant stage of the input leaves a trace but the traces are in completely different neural populations that aren’t functionally integrated, the information is not present in a useful form; it is not “explicit” in the relevant sense. For example, you can see in the motion detection example that if the representation of motion is going to have an appropriate later effect, the triggering of the first detector has to cause a trace that is integrated with the trace from the triggering of the second detector. Models of temporal computation implicitly assume that setting up such traces and then simultaneously integrating them is the task that the brain has to perform. (Lee 2014a: 14–15)49

Grant Lee’s very plausible rationale about the need for informational integration, as well as the rest of his argument. What does this show à propos cca? Very little, I think.

True, if temporal features such as motion and duration are represented in conscious sensory experiences, the neural processing of such features must involve integration of locational information (e.g., that x is at l1 at t1, that x is F at l1 at t1, etc.) from successive stages of a moving or extended stimulus. And such integration will undoubtedly eventuate in some processing delay of the earlier stages. But notice: this is a point about neural latencies, that is, about the necessary processing requirements for the representation of certain types of features (indeed, it can serve to explain why motion and its direction incur longer neural latencies than, say, colour). It is not, in and of itself, a point about online latencies—about how and when our brains generate conscious experiences, which is what cca is concerned with.

Thus, integration of locational information from successive presentations of a moving stimulus for the purpose of representing its motion will entail a processing delay. Yet, during the delay in question, the very same locational information (e.g., that x is at l1 at t1, or that x is F at l1 at t1) can continue to be processed further in the neural hierarchy. This means that, in principle, such locational information could well become conscious even before its integration for motion representation has taken place. Motion representation, after all, is just one processing function: it needn’t signify the end of processing for a given stimulus feature involved in motion processing.
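The point can be illustrated with a minimal sketch of a delay-and-correlate (Reichardt-style) detector. This is a toy discrete-time version, not Lee’s own formulation: the function name, the `delay` parameter, and the sample signals are all assumptions introduced for illustration. It shows that the motion signal only becomes available once the second receptor has fired, whereas the first receptor’s locational signal is already present at an earlier step.

```python
def reichardt_response(left, right, delay):
    """Toy opponent delay-and-correlate detector (illustrative assumption).

    left, right: receptor activations sampled at successive time steps.
    delay: number of steps each input is held back before being compared
           with the current activation of the other receptor.
    A positive value suggests left-to-right motion, a negative one the reverse.
    """
    out = []
    for t in range(len(left)):
        left_delayed = left[t - delay] if t >= delay else 0.0
        right_delayed = right[t - delay] if t >= delay else 0.0
        out.append(left_delayed * right[t] - right_delayed * left[t])
    return out


# A stimulus crosses the left receptor at step 1 and the right receptor at step 3.
left = [0, 1, 0, 0, 0]
right = [0, 0, 0, 1, 0]
response = reichardt_response(left, right, delay=2)

# Step at which locational information first appears, versus the motion signal.
first_location_step = left.index(1)
first_motion_step = next(i for i, r in enumerate(response) if r != 0)
```

In this toy run the locational signal is present at step 1, while the motion signal appears only at step 3. Nothing in the model prevents the step-1 signal from being passed on to further processing in the meantime.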

Processing models such as Reichardt detectors are thus perfectly at home with cca, and with the evidence from visual asynchrony. The different latencies required by each apply to the production of outputs of different types, such as (i) a neural representation of feature F (e.g., motion) at some level of processing, and (ii) a conscious experience of feature G (e.g., location or colour). To represent x’s motion, the relevant detectors must indeed integrate information from x’s location l1 at t1 with information from x’s location l2 at t2, and so wait, by neural delay λM, for the latter. As a result, the neural latency λM is likely longer than the neural latency λC which characterizes the processing of x’s colour at t1. But if the neural latency λC remains constant through processing of x’s colour at t1, and then at t2, and t3, etc., so can the corresponding interval in cca, which sets the onset of conscious colour experience first at t1 plus that interval, then at t2 plus that interval, at t3 plus that interval, etc. The interval in cca, recall, covers both the neural latency λC and the online latency (if any) for conscious experience of colour.

In fact, cca applies to λM just as well: if it takes λM to neurally process x’s motion from t1 to t2, it also takes λM to process x’s motion from t2 to t3, and then again from t3 to t4, etc. Hence, the cca interval for motion, which determines the onset of the relevant motion experience in consciousness, can very well remain constant when added to t1, t2, t3, etc. This holds, again, whether that interval exceeds the neural latency λM or equals it. In which case, successive presentations of a moving stimulus will give rise to successive conscious experiences with different contents, by cca: the kind of delayed processing at play in Reichardt detectors isn’t enough to rescue the retentionalist thesis R3.
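The arithmetic behind this contrast can be made explicit in a short sketch. All numerical values below are made up for illustration and are not drawn from the evidence discussed. The sketch reads cca as adding one constant interval to each stimulus time, and reads partially delayed processing (pdp) as using different intervals chosen so that the onsets coincide.

```python
def onsets(stimulus_times, latencies):
    """Onset of each conscious experience: stimulus time plus its latency."""
    return [t + lam for t, lam in zip(stimulus_times, latencies)]


stimulus_times = [0, 40, 80]  # t1, t2, t3 in ms (made-up values)

# Constant interval, as cca is read here: every presentation takes equally long.
cca_colour = onsets(stimulus_times, [100, 100, 100])
cca_motion = onsets(stimulus_times, [130, 130, 130])

# Partially delayed processing (pdp): earlier stimuli are held back longer,
# so that all of them reach consciousness at the same time.
pdp = onsets(stimulus_times, [120, 80, 40])


def gaps(times):
    """Intervals between consecutive onsets."""
    return [later - earlier for earlier, later in zip(times, times[1:])]
```

With a constant interval, the 40 ms gaps between stimuli are preserved between the onsets of the corresponding experiences, whether the interval is the shorter one assumed for colour or the longer one assumed for motion. Only the pdp latencies collapse the three onsets into one.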

4. Conclusion

Even if, in principle, unextended perceptual experiences could represent temporally extended events, there are reasons for thinking that, in fact, our conscious experiences are somehow “spread out” in time and structured accordingly. Such a conclusion derives from an eminently plausible assumption about the causal structure of our perceptual systems: it takes time for sensory information to be processed through a hierarchy of different neural areas. Such an interval is likely to vary somewhat, yet retain a lower and upper bound.

This explains why the retentionalist assumption of partially delayed processing (pdp), according to which successive stimuli could be processed at such different speeds as to enter consciousness simultaneously, is compromised by the evidence from visual asynchrony (§2.4). It’s a problem for retentionalism because, as we have seen, the retentionalist thesis about the temporal ontology of experience, R3, must presuppose pdp.

On the other hand, a plausible alternative to partially delayed processing—cca, which is compatible with visual asynchrony—can serve to mount a direct objection against R3 and its different interpretations (§3.2). Given cca, it’s not true that experiences with temporally extended contents are (1) instantaneous (temporally restricted version), that (2) such experiences must represent the successive temporal parts of an extended event simultaneously (modally restricted version), or that (3) slightly extended but very brief—and temporally unstructured—experiences do so (temporally relaxed version). And since cca is anchored in empirical considerations about our actual neurophysiological processes (including the evidence from visual asynchrony), it isn’t in fact (4) possible in any relevant sense for the experiences of our neural duplicates to be unextended while carrying temporally extended contents (modally relaxed version, interpreted along the lines of m4).

Accordingly, as this paper has aimed to suggest, the retentionalist thesis about the temporal ontology of experience (R3) ought to be discarded on empirical grounds. Such a conclusion leaves the other retentionalist theses about temporally extended contents, R1 and R2, intact. Yet it implies that the mere availability of such extended contents isn’t, in and of itself, enough to support a conclusion like R3, at least not without partially delayed processing (§3.3). In this light, it’s hardly surprising that considerations advanced to support the postulation of such extended contents (that is, the existence of Reichardt detectors, perfectly suited to deliver such contents) make little difference to the truth of the third retentionalist thesis (§3.4).

More importantly, if R3 and the assumption of partially delayed processing it rests upon are false, this leaves room for some isomorphy or similarity between the temporal properties of experiences and those of the events perceived, after all. What kind of temporal isomorphy? Does it mean that extensionalist views (on which temporal experiences must be temporally extended) are thereby vindicated?

Not quite. Combined with cca, the toy example in §3.2 suggests at most that successive stimuli (or successive presentations of the same stimulus) and their different features reach consciousness successively. There is, in other words, some temporal ordering with which one consciously perceives the successive temporal parts of perceived events, at least. But then, as the evidence from visual asynchrony shows, synchronous features of the same stimulus may not, in fact, be consciously perceived simultaneously either. Not much more, however, can be inferred from the evidence reviewed in this paper.

In which case, these results do not yet warrant the inference that our temporal experiences must be extended in time and temporally structured, as extensionalists argue. It could still turn out, for instance, that conscious perception largely consists in successions of very brief conscious snapshots, each representing only some feature of a given stimulus: the so-called “snapshot view”.50 In fact, as we have seen (§3.3), these results do not rule out a weakened version of retentionalism, according to which temporal experiences consist in successions of overlapping extended contents. Nevertheless, even if extensionalism isn’t vindicated by the evidence discussed here, at least it retains the advantage (together with the snapshot view) of remaining entirely compatible with such evidence, unlike retentionalism.51

Notes

- See also Broad (1923; 1925), Grush (2005; 2006; 2008), Lee (2014a), Pelczar (2010), and Strawson (2009).

- Following Dainton (2017). Lee (2014a; 2014b) prefers “atomism”. Unfortunately, that term can also be used for a view exclusively about the mereology of experience (see Lee 2014c; Chuard 2022) which needn’t entail the distinctive commitments of retentionalism.

- See Lee (2014a: 7–8) for different kinds of temporal isomorphy; Chuard (2020: §9.2.2) for some of the different theoretical roles they might play.

- See, e.g., Locke (1689/1975: II, 14, §§6-8, 12), von Helmholtz (1910/1962: 22), James (1890/1952: 627–28), and Chuard (2017), for causal accounts.

- Explanations of this sort are typically associated with forms of extensionalism, according to which temporal experiences must at the very least be temporally extended to present or represent extended events: see, e.g., Dainton (2000; 2008; 2014a; 2014b), Hoerl (2012; 2013), Phillips (2014a; 2014b), Rashbrook-Cooper (2017), Soteriou (2010; 2013).

- Presumably, such partial delays admit of varying durations when it comes to temporally extended contents which represent more than just two non-simultaneous events.

- I should note: while most of the evidence reviewed here pertains to vision, there seems to be no principled reason why the consequences drawn from such evidence cannot extend to neural processing in other sensory modalities too. I’ve conducted the discussion at that level of generality accordingly. Were it to turn out that such a generalization is unwarranted, this wouldn’t affect the main conclusions of the paper: owing to its commitment to pdp, retentionalism faces serious difficulties in its account of visual experiences with temporally extended contents.

- How such locations are specified in the content of experience may vary between different versions: see, e.g., Lee (2014a; 2014b).

- As some propose they are: see, e.g., Husserl (1991) and Grush (2005; 2006). Indeed, it’s possible to add to retentionalism the claim that experiences have future-directed content as well, though most retentionalists seem dismissive of such a suggestion.

- Nor is he saying that an extended content “bears no relation to previous experiential contents” (Phillips 2018: 291). Retentionalists can allow that successive temporally extended contents are related in a variety of ways (e.g., by similarity, co-reference or semantic overlap, causal influence, etc.). The only relation being denied here is that of conscious content-retainment through memory.

- Another factor owes to the perceived fortunes of those more traditional “memory accounts” (see, e.g., Tye 2003: 87–88); see also Dainton (2000: 125–27; 2017: §4.3), Hoerl (2009; 2013; 2017), and Phillips (2010: 191–96; 2018: 288–89) for criticism of such accounts. That is, the difficulties facing traditional “memory accounts” might naturally drive retentionalists towards an alternative approach, free of any reliance on memory. Lee (2014a: 6) offers several other reasons; see Phillips (2018: 290–91) for discussion.

- Clause (iii), note, does not rule out suggestions that an earlier experience could causally impact a subsequent one, either by (a) speeding up the processing of the later experience, or by (b) somehow affecting its content (see Phillips 2018: 291), since neither suggestion hangs on the content of the earlier experience being retained in consciousness afterwards.

- For a brief but useful survey of different kinds of memories, see Palmer (1999: ch. 12).

- See Lee (2014a: 6, n. 7; 2014b: 153). Phillips (2018) argues that any theory of temporal experiences relies in fact on memory in one sense or another. Phillips conducts his discussion in terms, not of Lee’s characterization of memory via conditions (i) to (iii), but of his own, whereby memory involves “preserving past psychological success” (2018: 291).

- See footnote 5 for references.

- See, e.g., Dainton (2000: 133–42, 166; 2017: §3), James (1890/1952: 411), Miller (1984: 107–9), Phillips (2010: 179–80), and Tye (2003: 90).

- See also Geach (1957: 104; 1969: 34), Anscombe and Geach (1961: 96), and for a useful discussion, Soteriou (2007; 2013). For the reference to Kant, see Dainton (2017: §2.4). For a contemporary echo of this point, see Lee (2014a: 14–16; 2014b: 154).

- A word about the logical connections between these four readings of thesis R3. The temporally restricted version entails the modally restricted one (as the temporally relaxed version is also meant to), but not vice versa, since the modally restricted version is compatible with the temporally relaxed version (simultaneity of presentation doesn’t imply such presentations are instantaneous). The modally relaxed claim is entailed by all three other construals, but is compatible with their respective negations.

- See also Dennett and Kinsbourne (1992) and Pelczar (2010); for discussion, see Dainton (2014b) and Phillips (2010: 181–82).

- See, e.g., Breitmeyer and Ögmen (2006), Holcombe (2009; 2015), Keetels and Vroomen (2011).

- The distinction between neural and online latencies, note, doesn’t precisely delineate which neural processes are to be included in each, and so where to draw the line precisely. A less rough characterization of the distinction would ultimately depend, I take it, on where the core neural correlates of consciousness (see, e.g., Chalmers 2000; Bayne & Hohwy 2015; Koch 2004) are to be located, on what these core neural correlates exactly do (i.e., whether they function merely to (i) produce a representation of a stimulus feature, as opposed to (ii) making that representation accessible to consciousness, or both), and whether they should be included among the processes relevant to neural or online latencies (at least the latter, I presume).

- See Allik and Kreegipuu (1998), Lee et al. (1990), Maunsell and Gibson (1992), Maunsell et al. (1999), Oram et al. (2002).