1. Introducing the Pictorial Account of Emojis

Wittgenstein is sometimes (half-)jokingly credited with the invention of emojis, on the basis of the following excerpt from his Lectures on Aesthetics:1

If I were a good draughtsman, I could convey an innumerable number of expressions by four strokes.

Such words as ‘pompous’ and ‘stately’ could be expressed by faces. Doing this, our descriptions would be much more flexible and various than they are expressed by adjectives. [⋯] I could instead use gesture or [⋯] dancing. In fact, if we want to be exact, we do use gesture or facial expression. (Wittgenstein 1966)

The suggestion is that drawing stylized faces would be a useful addition to written language, as it would provide an efficient way to express certain meanings,2 especially those kinds of meanings that are usually conveyed by gesture or facial expression, or else, less efficiently, or less precisely, by evaluative adjectives. In the context of other famous remarks like “the human body is the best picture of the human soul” (Wittgenstein 1958: 178), this could be taken as suggesting the view that such face drawings, like gestures and facial expressions, are expressives, that is, meaningful signs that do not directly contribute to truth conditions, but rather express something non-propositional, like the speaker’s emotional state.

There is no doubt that some modern emojis are used roughly as Wittgenstein envisages for his face sketches. Not surprisingly, some of the points Wittgenstein makes are echoed in recent linguistic analyses of emojis. In particular, we see suggestions that emojis are like gestures (Gawne & McCulloch 2019; Pasternak & Tieu 2022; Pierini 2021), and that face emojis in particular are expressives (Grosz, Kaiser, & Pierini 2021; Grosz, Greenberg, De Leon, & Kaiser 2023). More generally, emojis are often considered to function somewhat like words (Barach, Feldman, & Sheridan 2021; King 2018; Scheffler, Brandt, de la Fuente, & Nenchev 2022; Tang, Chen, Zhao, & Zhao 2020).

What many of the modern linguistic approaches share is that they treat emojis, like regular words, as symbols—a mode of signification loosely characterized as conventional, non-natural, arbitrary, and/or learned. The obvious alternative to this symbolic view is one that treats emojis, like pictures, as icons—a mode of signification loosely characterized as based on resemblance between form and content (Peirce 1868). Such a view is apparently taken for granted by some semioticians (Cohn, Engelen, & Schilperoord 2019; Danesi 2016), but it is never explicitly argued for or made very precise. In this paper I propose, formalize, and defend such an iconic semantics for emojis. More specifically, I argue that emojis are simply little pictures. That is, like photographs and drawings, they are used to depict ‘what the world looks like’.3

A pictorial account of emojis promises several advantages over rival symbolic accounts. First, it sees the use of emojis as continuous with other, more obvious picture–text integrations, like stickers, gifs, and memes in modern internet communication, but also like illustrated books, instruction manuals, and comics—and of course Wittgenstein’s face drawings. This allows me for instance to borrow a fully general pragmatic model of picture–text composition (originally proposed for analyzing comics) and apply it to the use of emoji in Section 3.

Second, the pictorial account can explain creative, non-canonical emoji usage, like a use of the violin emoji to illustrate a cello performance, (1-a), or the use of the ‘persevering face’ emoji to express a host of seemingly unrelated emotions (frustration, sadness) and activities involving closing the eyes (praying, pretending to be asleep), united only by the fact that someone looks like that.

| (1) | a. | thanks to @anonymous for chatting to me about reaching a global audience online during lockdown with his stunning cello performances [violin emoji] |
| | b. | Oh man⋯ that too? They stole that too? [persevering face emoji] |
| | c. | Behind every quiet person there is sad untold story [persevering face emoji] |
| | d. | I need a guy that’s ready for a serious relationship [persevering face emoji] |
| | e. | I always close my eyes and pretend to be sleeping [persevering face emoji] |

To deal with such a range of conceptually distinct uses, a symbolic account would have to assume that the lexical item [violin emoji] or [persevering face emoji] is multiply ambiguous, while the pictorial account readily predicts these creative usages from the generic pictorial meaning, that is, that ‘it looks roughly like this’. We’ll explore creative uses in Section 4.

Third, and somewhat more speculatively, the pictorial account suggests a natural explanation of the rise of gender and skin-tone modifiers: on certain specific occasions, [modified runner emoji] or [skin-toned gesture emoji] may simply more closely resemble what they depict (the runner I’m describing, or my use of the gesture) than the default [runner emoji] or [gesture emoji], respectively. A symbolic account that analyzes [gesture emoji] as a lexical item expressing the speaker’s approval would presumably assign the exact same meaning to the skin-tone variants [skin-toned gesture emoji] or [skin-toned gesture emoji] and thus have a harder time explaining their different distributions and felicity conditions. We’ll revisit this argument in Section 5.

Having made my sales pitch, I should add that there are also some limitations and caveats to the scope of my proposal. Emojis are not a wholly homogenous class, and my pictorial account will not treat all 3,521 emojis in the current Unicode standard uniformly. First, I’m assuming, with Grosz et al. (2021), a semantic distinction between emojis that depict facial expressions and hand gestures and those that depict other entities and eventualities. I propose to model that semantic distinction within my overall pictorial framework as follows: while the entity/event emojis depict what the world of evaluation looks like, the face/hand emojis tend to depict what the utterance context looks like. A face emoji thus essentially depicts what the actual speaker looks like while producing the utterance. The Wittgensteinian intuition that face emojis are expressive is then explained by the further assumption that the human facial expressions (or hand gestures) depicted are themselves meaningful signs, and that the pictorial meaning layer naturally composes with the expressive gesture, in a way to be made precise in Section 5.

I’m also leaving open the possibility that there is some subclass of emojis that are best analyzed as symbols. For instance, [recycling symbol emoji], the fifth most common emoji on Twitter according to emojitracker.com, is conventionally used to denote recycling or, more commonly, retweeting, but it’s not obviously a picture of either of these activities: it doesn’t resemble them. Similarly, the Belgian flag emoji doesn’t resemble the country it stands for, but refers to it by an arbitrary convention, much like the English word ‘Belgium’, or an actual Belgian flag. Some emojis in the Emojipedia4 category of ‘Symbols’ fall in the grey area between picture and symbol: is [heart emoji] just a conventional symbol of love, or is it perhaps in some sense a stylized (see Section 2.2) picture of a human heart, which by (fossilized) metonymic extension (see Section 4.3) is associated with love and positive emotions? For the purposes of this paper I’m happy to concede that there may be a subclass of symbolic emojis, with fuzzy borders. Still, there might be an alternative analysis that treats (some of) these symbolic emojis as pictures as well.5 Following the two-stage meaning composition account I propose for face emojis in Section 5, briefly hinted at above, we might say that flag emojis are quite literally pictures of flags, which in turn are genuine symbols of countries. This would leave us with the task of explaining the apparent transparency of the pictorial layer here. While I do offer such an explanation for the apparent transparency of face emojis (Section 5), I will not pursue this route for flag and other symbol emojis here.

In addition to pictorial and symbolic emojis, and some in the grey area in between, there are also picture–symbol hybrids. For instance, [sleepy face emoji] depicts a sleepy face with a giant snot bubble coming from the left nostril. The snot bubble here is a convention borrowed from Japanese manga and anime that symbolizes that a character is sleeping (Cohn 2013). We can analyze such mixtures by syntactically separating the symbolic elements from the pictorial elements, for instance as described for speech balloons and other picture–symbol hybrids in comics by Maier (2019). I will not discuss this matter further here.

Finally, for reasons of space I’ll restrict attention to the use of emojis as separate discourse units, that is, typically inserted after a sentence, or as a stand-alone discourse move:

| (2) | Great idea [emoji] count me in [emoji] I’m on my way |

That is, I’m disregarding ‘pro-speech emojis’ (Pierini 2021)—emojis that are syntactically integrated into a sentence and ‘replace’ a specific word or concept, like ‘love’ and ‘present’ in (3-a), ‘happy’ in (3-b), and sometimes even a specific (English) word sound or shape, in rebus-like fashion, like in (3-c).6

| (3) | a. | keep doing what you need to do, [emoji for ‘love’] u bro if I was in Detroit I’d give you a [emoji for ‘present’]. |
| | b. | Our project eventually succeeded, and I felt very [emoji for ‘happy’] (Tang et al. 2020) |
| | c. | In the [emoji for ‘palm’] of her hand. (Scheffler et al. 2022) |

With the above restrictions and caveats in place, we are left with the claim that a significant portion of emoji uses, the exact boundaries of which remain vague, but including many uses of emojis for animals, plants, objects, activities, hand gestures, people, facial expressions, are wholly or primarily pictorial. In this paper I will explicate in some detail what a pictorial semantics (and pragmatics) for emojis might look like.

The paper is structured as follows. In Section 2 I propose a formal semantic account of pictorial content in terms of geometric projection. In Section 3 I explain how the rather minimal projective semantics is enriched in the context of a discourse, building on insights from the study of linguistic discourse structure and coherence relations. In Section 4 I address what I call the pictorial overdetermination challenge: an emoji like [red car emoji] depicts a certain type of two-door red car, but can be used to denote cars of any color, make and model. The solution I propose invokes a pragmatic process of figurative interpretation, extending the basic projective contents via metaphoric and metonymic interpretation. In Section 5, finally, I turn to the second fundamental challenge facing a pictorial account: how to explain the apparent expressivity of face and hand emojis? I propose an extension of the Kaplanian account of use-conditional meaning to pictures and then show how the use-conditional pictorial content of an emoji naturally combines with the use-conditional content of the depicted facial expression or gesture to yield the observed expressive use of face and hand emojis.

2. A Pictorial Semantics for Emojis

2.1. Geometric Projection

Pictures are representations. They represent the world as being a certain way. Hence, just like utterances can be true or false with respect to a given world, we could say that a picture is true or false with respect to a certain world—more colloquially, that a picture is an accurate or inaccurate picture of (a part of) that world. If we can capture a workable notion of pictorial truth we can define the semantic content of a picture as the set of worlds that the picture is true of—in the same way that we also define the content of an utterance in possible worlds semantics—in order to get a proper investigation of the semantics and pragmatics of pictures off the ground.

Intuitively, pictorial truth, unlike linguistic truth, is a matter of resemblance: a given picture is true of a world iff it resembles part of that world. On reflection, resemblance has turned out to be too vague, and arguably neither sufficient nor necessary for pictorial truth (Goodman 1976; Greenberg 2013). The geometrical notion of a projection function has been used with some success as a replacement for resemblance in pictorial semantics (Abusch 2020; Greenberg 2021).

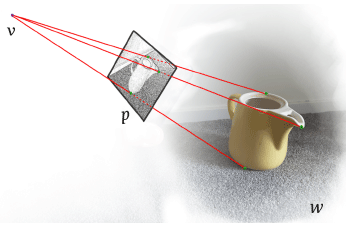

More technically, a geometric projection is a recipe for turning a 3D scene into a 2D pictorial representation of that scene. It’s a function, Π, mapping a possible world w and a viewpoint v (formally, a vector located at a certain spatiotemporal location, intuitively representing the gaze direction of some viewer/camera located somewhere in w) onto a picture p: Π(w,v) = p.

There are many different such recipes that qualify as geometric projection functions. One well-known example Π takes the world and the viewpoint, and (i) puts a white picture plane perpendicular to the viewpoint direction vector, (ii) draws all ‘projection lines’ connecting some part of an edge of an object in the world towards the viewpoint origin, and (iii) marks in black wherever the projection line intersects the picture plane. This procedure will generate a linear perspective, black and white drawing.

| (4) |  |
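The three-step recipe just described can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions that are not part of the account itself: the world is reduced to a finite list of 3D edge points, the viewpoint is a pair of an origin and a gaze direction, the picture plane sits at a hypothetical distance `focal` in front of the origin, and a picture is the set of marked plane coordinates. The names `project`, `focal`, and `up` are mine.

```python
from math import sqrt


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def unit(a):
    norm = sqrt(dot(a, a))
    return tuple(x / norm for x in a)


def project(world, viewpoint, focal=1.0, up=(0.0, 0.0, 1.0)):
    """Toy linear-perspective projection: edge points of `world` become marks."""
    origin, gaze = viewpoint
    forward = unit(gaze)
    # (i) Picture plane perpendicular to the gaze; `right` and `true_up` span it.
    # Assumption: the gaze is not parallel to `up`.
    right = unit(cross(forward, up))
    true_up = cross(right, forward)
    picture = set()
    for point in world:
        rel = sub(point, origin)
        depth = dot(rel, forward)
        if depth <= 0:
            continue  # points behind the viewer leave no mark
        # (ii) Follow the projection line from the point towards the origin
        # until it meets the plane at distance `focal`.
        scale = focal / depth
        # (iii) Mark the intersection, rounded so that marks can be compared.
        x = dot(rel, right) * scale
        y = dot(rel, true_up) * scale
        picture.add((round(x, 3), round(y, 3)))
    return frozenset(picture)
```

Two edge points lying on the same projection line, such as (1, 2, 1) and (2, 4, 2) seen from the origin looking along the y-axis, receive the same mark. This is one simple way in which different worlds can project onto one and the same picture.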

More complicated projection functions might include rules for representing colors, distinguishing edges and surfaces in the world, or some additional distortion, abstraction, and stylization transformations to create depictions that deviate more or less from ‘photorealistic’ projection in different ways.

| (5) |  |

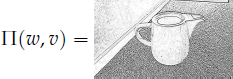

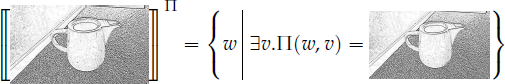

When we know how to turn some part of the world into a picture, using a Π and a v, we can define when a picture is true:

| (6) |  |

Here we’re assuming the projection function Π to be fixed, that is, provided by the context, just as in the linguistic domain we assume the language to be given pre-semantically, that is, before computing the truth value of an utterance. In other words, we can think of the projection function as the pictorial analogue of a language (Giardino & Greenberg 2015; Greenberg 2013).

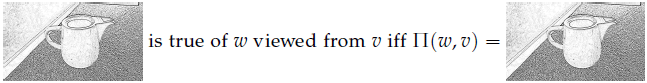

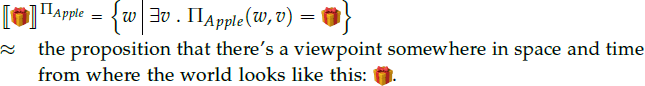

From the pictorial truth definition in (6) we can define various candidate notions or levels of pictorial content. A natural analogue of classic propositional content results from existential closure over the viewpoint: given a pictorial language Π, a picture expresses the proposition that there is a viewpoint from where the world projects onto that picture:

| (7) |  |
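In symbols, the truth definition and its existential closure as described in the prose can be sketched as follows. The double-bracket notation with a subscripted Π is my own label here and should be read as an assumption rather than as fixed notation.

```latex
% Pictorial truth, relative to a contextually fixed projection function \Pi:
p \text{ is true of } w \text{ relative to } v
  \quad\text{iff}\quad \Pi(w, v) = p

% Propositional content by existential closure over the viewpoint:
[\![ p ]\!]_{\Pi} \;=\; \{\, w \mid \exists v \,[\, \Pi(w, v) = p \,] \,\}
```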

Alternatively, for different purposes we may need other notions of content, for example, analogues of centered/diagonal propositions (sets of world–viewpoint pairs, Rooth and Abusch 2017) or horizontal contents (sets of worlds, i.e., assuming a fixed, contextually given viewpoint). I’ll eventually introduce a dynamic semantic notion of pictorial content, in terms of information states.7 To avoid potential difficulties delimiting and quantifying over the space of possible pictorial (or linguistic) languages, we will assume that a Π is pre-semantically given. How an interpreter arrives at this Π is then a matter of contextual pragmatic inference that we will only talk about informally.
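To make the contrast between these notions of content concrete, here is a small sketch over a finite toy model. Everything in it is hypothetical: worlds are pairs naming what lies to the north and to the south, a viewpoint is just a gaze direction, and `toy_projection` stands in for a real Π by returning whatever the viewer is facing.

```python
# Toy model (all names hypothetical): a world says what lies north and south.
WORLDS = [("cat", "dog"), ("dog", "cat"), ("cat", "cat"), ("dog", "dog")]
VIEWPOINTS = ["north", "south"]


def toy_projection(world, viewpoint):
    """Stand-in for a projection function: the 'picture' is what the viewer faces."""
    return world[0] if viewpoint == "north" else world[1]


def is_true(proj, picture, world, viewpoint):
    """Pictorial truth: the world projects onto the picture from this viewpoint."""
    return proj(world, viewpoint) == picture


def propositional_content(proj, picture, worlds, viewpoints):
    """Existential closure: worlds with at least one verifying viewpoint."""
    return {w for w in worlds
            if any(is_true(proj, picture, w, v) for v in viewpoints)}


def centered_content(proj, picture, worlds, viewpoints):
    """Centered content: world-viewpoint pairs that verify the picture."""
    return {(w, v) for w in worlds for v in viewpoints
            if is_true(proj, picture, w, v)}


def horizontal_content(proj, picture, worlds, fixed_viewpoint):
    """Horizontal content: worlds verifying the picture from a given viewpoint."""
    return {w for w in worlds if is_true(proj, picture, w, fixed_viewpoint)}
```

For the picture `"cat"`, the propositional content contains every world with a cat somewhere, the horizontal content for the viewpoint `"north"` contains only the worlds with a cat to the north, and the centered content keeps track of which viewpoint does the verifying.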

Finally, it is worth noting that the purely projective pictorial content defined in (7) is a rather minimal notion of semantic content, what Kulvicki (2006) calls ‘barebones content’, to be further enriched to what he calls ‘fleshed out content’ (see Greenberg 2021 for a related view involving different levels of pictorial semantic content). In this paper I side with Abusch (2020) and stick with the barebones semantics in (7). I relegate the more fleshed out content derivation to the levels of discourse processing (see Section 3) and pragmatics (see Section 4).

2.2. Stylization

If emojis are pictures, they are not very ‘realistic’, but rather ‘abstract’ or ‘stylized’. In the geometric projection framework the differences between, say, a simple line drawing and a full color photograph can be thought of as corresponding to different parameter settings inside the projection function. A line drawing projection ignores colors, shadows, and other properties of surfaces, and instead focuses only on (clear, relatively sharp) edges of objects. Qualitatively very different scenes (solid blue cube on wooden table lighted from above, transparent glass cube on metal surface lighted from the left, etc.) could thus give rise to the same abstract line drawing.

Now, apart from ignoring surface textures, opacity, colors, and shadows (let’s call this ‘abstraction’), a typical line drawing also simplifies the geometry of the edges that the basic linear projection algorithm would give us. Let’s call this ‘stylization’. By stylization, slightly crooked edges and small imperfections might be represented by perfectly straight lines on the picture plane. We could also stylize our depiction by approximating any shape projected on the plane with the closest simple polygon (with fewer than 37 sides, say).8 We’ll consider such approximative geometric transformations part of the projection function (Abusch 2012; Greenberg 2021). With a properly abstract and stylized projection function, a simple wire cube drawing like [image] would be true of not just different geometric worlds where we’re looking at an actual floating Platonic cube (of arbitrary color and size), but also of worlds like ours where we’re looking at a shape that is roughly cube-like, like a sugar cube, a Rubik’s cube, or a dented cardboard box.
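The idea that abstraction and stylization are built into the projection function can be illustrated with a toy sketch. It assumes that a basic projection has already produced a list of coloured 2D marks, and it uses grid snapping as a crude stand-in for the polygon approximation mentioned above. The function names and the `grid` parameter are hypothetical.

```python
def abstract(marks):
    """Abstraction: keep the position of each mark, discard its colour."""
    return [(x, y) for (x, y, _colour) in marks]


def stylize(points, grid=0.5):
    """Stylization: snap each point to the nearest node of a coarse grid."""
    return [(round(x / grid) * grid, round(y / grid) * grid) for x, y in points]


def stylized_projection(marks, grid=0.5):
    """Abstraction followed by stylization, applied to raw projected marks."""
    return frozenset(stylize(abstract(marks), grid))


# Corner marks of a perfect blue square and of a slightly dented brown one.
perfect = [(0, 0, "blue"), (1, 0, "blue"), (1, 1, "blue"), (0, 1, "blue")]
dented = [(0.04, -0.03, "brown"), (0.97, 0.05, "brown"),
          (1.02, 0.96, "brown"), (-0.01, 1.03, "brown")]
triangle = [(0, 0, "red"), (1, 0, "red"), (0.5, 1, "red")]
```

Under these assumptions the perfect and the dented square yield the same stylized picture, while the triangle does not, which mirrors the way a single wire cube drawing can be true of a range of roughly cube-like shapes.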

If emojis are pictures—as I maintain—they are clearly more like line drawings than like photos, with the relevant projection function involving multiple types of abstraction and approximative stylization transformations on top of a basic linear projection algorithm. Take a common object emoji, like the ‘wrapped present’ emoji. Here are a few instantiations of this emoji in different emoji sets.

| (8) |  |

Apple appears to be using a more photorealistic type of projection and HTC a more stylized one. More specifically, in our projective semantics terminology, we would say that Apple’s projection function, ΠApple, seems to involve a standard linear perspective;9 uses a range of different colors to mimic a smooth, somewhat shiny light-brown or gold surface; and marks shadows and shiny edge highlights as if light is falling on the object from the top left.

The OpenMoji projection seems to involve more abstraction and stylization. The box and the bow for instance are entirely symmetrical, suggesting that ΠOpenMoji ignores the precise shape and location of the bow in the basic projection image in favor of an approximation. There are only a few colors, again suggesting approximation, and all edges are marked uniformly in thick black lines. On the other hand, the dropdown shadow from the lid is still preserved. The type of perspective in OpenMoji’s projection remains unclear because a full frontal viewpoint seems to have been chosen.

HTC, finally, uses the same canonical viewpoint as OpenMoji and builds similar color, texture, and symmetry abstractions into its projection function, though with a slightly different edge processing, and now even ignoring all shadows.

Note that these informal (abductive) inferences about the nature of the three Π’s, drawn on the basis of just one image each, are all defeasible: Apple might in principle have intended to depict a crooked, multicolored box through a parallel method of projection, without shading; and HTC’s projection function might be sensitive to shadows and shading but we don’t notice because the scene had a light source near the viewpoint, etc. In fact, all three pictures might in principle be high resolution photographs of more or less abstract drawings of objects (Greenberg 2013; Kulvicki 2013).

As discussed also at the end of Section 2.1 we’ll ignore this general projection uncertainty and assume that context, common sense and experience with pictures of common objects pragmatically (or in any case, pre-semantically) narrow down the space of possible parameter settings to something like the assumptions laid out above.

2.3. Icon, Picture, or Diagram

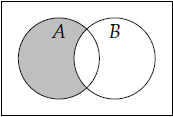

At the HTC level of stylization and abstraction we might be tempted to consider an alternative account, as suggested by an anonymous referee, viz., that emojis are diagrams. That is, [wrapped present emoji] denotes a wrapped gift box in roughly the abstract but still iconic way that overlapping, partly greyed out circles in a Venn diagram might denote that all men are mortal. So how exactly do diagrams differ from pictures, icons, and symbols?

Giardino and Greenberg (2015) define iconicity as representation by virtue of “a kind of ‘direct’ or ‘natural’ correspondence between the spatial structure of the sign and the internal structure of the thing it represents.” Pictures fall under this definition, with the relevant correspondence provided by (the inverse of) the projection function. Importantly, pictorial correspondence is essentially viewpoint-dependent: a given linear perspective photograph may be a true depiction of a world from some specific viewpoint, but false from another viewpoint. According to Giardino and Greenberg, such viewpoint-dependence is what sets pictures apart from other icons, most saliently diagrams: a Venn diagram like (9) may convey, in virtue of a natural correspondence between circle overlap and set intersection, the proposition that all humans are mortal, regardless of any specific viewpoint or perspective.

| (9) |  |

Back to emojis. In the description of the projective content of the HTC wrapped present emoji in 2.2 I’ve assumed that it’s a stylized depiction from a canonical, frontal viewpoint. One might argue that if we incorporate such a fixed full frontal viewpoint into ΠHTC we’d technically end up with a viewpoint-independent projection, which by Giardino and Greenberg’s (2015) definition might already technically put us outside the pictorial domain. However, viewpoint-independence doesn’t seem to suffice to make an icon a diagram, for then interactive VR games or 3D marble sculptures would be diagrams as well.10 Following Hagen (1986) I will extend the label ‘pictorial’ to include forms of projection that stipulate a canonical viewpoint. This leaves it an open question whether [wrapped present emoji], while clearly iconic, is best thought of as a picture or a diagram.

Perhaps the diagrammarian’s case is strongest for face emojis. In our terminology, [face emoji] and [face emoji] are iconic in the sense that they denote facial expressions and/or emotions (we return to this matter in Section 5) by virtue of a ‘natural’ correspondence between shapes in the sign (specifically the shapes of mouth and eyes) and properties of the speaker’s face and/or emotional state. Although this paper is primarily concerned with defending this iconic account against symbolic accounts, let me briefly explain my reasons for going one step further and pinning down the correspondence in question as pictorial, as cashed out in terms of the fairly well-understood geometric notion of a projection function.

First, to the extent that it makes intuitive sense to consider Apple’s colorful and detailed [emoji] a picture, it makes sense to try and extend that approach, if possible, to more abstract emojis like [emoji] and other emoji sets like HTC’s, and perhaps even more abstract representations like emoticons :). On the account presented in 2.2 above, [emoji] and [emoji] simply exemplify different pictorial dialects, characterized in terms of projection functions with different types of stylization and abstraction built in. Going in the other direction on the abstraction scale, we already noted in Section 1 that the pictorial account also provides an intuitive link between emojis and, say, Whatsapp stickers or gifs that often include drawings, photos, or videos that are uncontroversially projective and viewpoint-dependent in nature.

In Section 1, while comparing our pictorial account with the symbolic alternative, we also mentioned two other potential advantages of the pictorial view: (i) it correctly predicts the flexibility and creativity of emojis (e.g., [violin emoji] denoting a cello performance, or [persevering face emoji] denoting a wide variety of conceptually unrelated emotions and activities associated with a face that looks like that); and (ii) it can easily make sense of the rise of skin-tone and gender modifiers. It is not obvious whether a diagrammatic account would be similarly well positioned to explain these two phenomena: the explanations sketched earlier at least require a much more vision-like type of form–meaning correspondence than we find in, say, Venn diagrams (for which, indeed, creative interpretations and skin-tone/gender modifiers seem rather unlikely).

It all comes down to how exactly the diagrammarian spells out the ‘natural’ correspondence between emoji and denotation in a way that is not projective or pictorial but instead distinctly diagram-like. The deeper problem is that it’s not clear what counts as ‘diagram-like’. Beyond detailed accounts of some specific logical and mathematical diagram systems (Shin, Lemon, & Mumma 2018), there is, as far as I’m aware, no general, formally precise, positive characterization of diagrammatic representation. Hence, the view that (some) emojis are diagrams is less informative than describing geometric projection functions with stylization, abstraction, and canonical viewpoints. In this paper I’ll henceforth restrict attention to the pictorial view.

3. Emojis in Discourse

The pictorial semantics I have proposed for emojis is incredibly minimal:

| (10) |  |

As we saw in 2.2, already some defeasible presemantic reasoning about the underlying ΠApple is required to get even this much. A lot more pragmatic reasoning is needed to turn this basic pictorial content into something worth adding to an actual tweet or text. I follow Grosz et al. (2021) and Kaiser and Grosz (2021) in appealing to coherence and discourse structure as a crucial factor in the pragmatics of emojis, but my reliance on pragmatic enrichment will be somewhat more radical, in part due to my much more minimal, pictorial semantics.

3.1. Coherence in Verbal and Visual Language

Hobbs (1979) famously proposed a systematic theory of discourse interpretation where maximizing coherence is a driving force behind various pragmatic inferences in communication and textual interpretation. Consider the simple discourse in (11):

| (11) | I missed another Zoom meeting this morning. My internet was out. |

We don’t merely interpret this as a conjunction of two eventualities occurring (missing a meeting and the internet being down), but almost automatically infer some kind of causal link between the two: I missed a meeting because my internet was out. Depending on the nature of the eventualities described we can infer different relations between them. While in (11) we inferred a relation commonly known as Explanation, in (12) we’ll likely infer a different one called Result.

| (12) | I missed another Zoom meeting this morning. They fired me. |

There are a number of more or less formalized theoretical frameworks describing the inference of these so-called coherence relations (Asher & Lascarides 2003; Kehler 2002; Mann & Thompson 1988). In all of them it is assumed that there is a certain finite number of such relations, ultimately grounded in “more general principles of coherence that we apply in attempting to make sense out of the world we find ourselves in” (Hobbs 1990). Following Pagin (2014) and Cohen and Kehler (2021) I refer to coherence-driven inferences as a form of ‘pragmatic enrichment’ of the more minimal underlying semantic content.

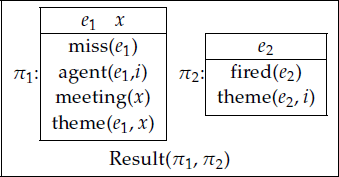

The most comprehensive coherence theory that is also immediately compatible with the formal semantic machinery we’ve introduced thus far is Segmented Discourse Representation Theory (SDRT, Asher and Lascarides 2003). In SDRT, discourse relations like Result, Explanation, Contrast, Background, and Narration are represented at a level of discourse representation that extends a given dynamic semantic framework, typically Discourse Representation Theory (DRT, Kamp 1981). The relata are elementary discourse units, typically corresponding to sentences or clauses that express propositions, typically describing the existence of certain events or states (Davidson 1967). Using special propositional discourse referents (π1, π2, ⋯) to label these elementary units and using DRT to represent their semantic contents, we get Segmented Discourse Representation Structures (SDRS) like (13):

| (13) |  |

In this traditional box notation, the outer box is an SDRS proper. It describes two discourse units, π1 and π2, as related by the Result relation: π2 is the result of π1. The smaller boxes are DRSs; they represent the contents of the individual discourse units. The first discourse unit, π1, corresponding to the first sentence of the discourse in (12), is characterized by this DRS box as (i) contributing two discourse referents, viz., an event e1 and an individual x, and (ii) ascribing a number of properties and relations to these discourse referents, viz., that e1 is an event of missing, that the agent of e1 (the person who is missing something) is i (a special indexical discourse referent picking out the actual speaker), etc.

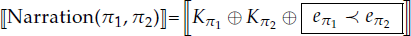

The model-theoretic interpretation of coherence conditions in SDRT can be formalized as an extension of the semantics of DRT, which is a dynamic extension of first-order logic. For instance, Narration holds between two units iff the information carried by both units is true (or, more dynamically: if both units update the common ground consecutively) and the main event described by the first unit (eπ1) immediately precedes (or ‘occasions’, notation: ≺) the main event described by the second. Note that this semantics presupposes that both units introduce a main event. In standard (S)DRT notation, Kπ is the DRS box associated with unit π, and ⊕ stands for DRS merge,11 the DRT way of dynamic information updating (spelled out at the representational level of the DRS).

| (14) |  |

In words, (14) says that interpreting two units (π1,π2) joined by Narration means roughly that we add the information of both units together (i.e., we join the sets of discourse referents they introduce, and we join the sets of conditions they impose on them), and then add a new condition that says that the main event described in the first unit immediately precedes that described in the second.
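The verbal gloss of Narration can be made concrete with a small sketch. The code below is only an illustration under simplifying assumptions: a DRS is reduced to a set of referent names plus a set of condition tuples, and the class `DRS`, its `main_event` field, and the functions `merge` and `narration` are hypothetical names introduced here, not part of any SDRT implementation. The example units loosely follow the chase-and-catch reading of a two-panel comic.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DRS:
    """Toy DRS: a set of discourse referents plus a set of conditions."""
    referents: frozenset
    conditions: frozenset
    main_event: Optional[str] = None  # hypothetical: the unit's main event referent


def merge(k1: DRS, k2: DRS) -> DRS:
    """DRS merge: join the referent sets and join the condition sets."""
    return DRS(k1.referents | k2.referents, k1.conditions | k2.conditions)


def narration(k1: DRS, k2: DRS) -> DRS:
    """Merge both units, then add that k1's main event precedes k2's."""
    if k1.main_event is None or k2.main_event is None:
        # Narration presupposes that both units introduce a main event.
        raise ValueError("both units must introduce a main event")
    merged = merge(k1, k2)
    precedence = ("precedes", k1.main_event, k2.main_event)
    return DRS(merged.referents, merged.conditions | {precedence})


# Two toy units: a chasing event followed by a catching event.
k1 = DRS(frozenset({"e1", "x", "y"}), frozenset({("chase", "e1", "x", "y")}), "e1")
k2 = DRS(frozenset({"e2", "x", "y"}), frozenset({("catch", "e2", "x", "y")}), "e2")
result = narration(k1, k2)
```

Here `result` contains the referents and conditions of both units plus one extra precedence condition. A unit without a main event makes `narration` fail, which mirrors the presupposition noted above.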

The introduction of coherence relations in the discourse interpretation process (i.e., the step by step construction of an SDRS from a sequence of utterances) is guided by a global constraint that seeks to maximize overall discourse coherence (i.e., add as many coherence relations as possible) and a number of defeasible pragmatic inference rules. For instance, if one unit π1 introduces a state and a subsequent (structurally accessible) unit π2 introduces an event, then all else being equal we can add ‘Background (π1,π2)’ to the SDRS under construction. We’ll skip over all details of context change composition in the model-theoretic semantics, accessibility, complex discourse units, etc.—see Geurts, Beaver, and Maier (2020) for a gentle introduction to DRT and SDRT, and Asher and Lascarides (2003) for details.
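As a rough illustration of what such a defeasible default amounts to, the following sketch encodes only the single state-then-event rule just mentioned. It is a toy stand-in for SDRT's nonmonotonic reasoning, not a rendering of it: the function names and the `blocked` parameter (a set of relations that the context has ruled out) are assumptions made for this example.

```python
from typing import Optional


def default_relation(first_kind: str, second_kind: str) -> Optional[str]:
    """Defeasible default: a state followed by an event suggests Background."""
    if first_kind == "state" and second_kind == "event":
        return "Background"
    return None  # this rule is silent; other (unmodeled) rules would apply


def infer_relation(first_kind: str, second_kind: str,
                   blocked: frozenset = frozenset()) -> Optional[str]:
    """Apply the default unless the context has defeated that relation."""
    candidate = default_relation(first_kind, second_kind)
    return None if candidate in blocked else candidate
```

The `blocked` argument is where "all else being equal" enters: the default goes through only when nothing in the context defeats it.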

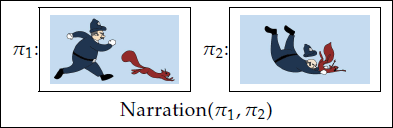

We’re interested in the application of coherence theory, and SDRT in particular, as a way of modeling pragmatic enrichment with partly or wholly pictorial discourses. First let’s rephrase Maier & Bimpikou’s (2019) DRT-style analyses of purely pictorial narratives like (15) into the SDRT framework, by viewing the panels as elementary discourse units.12

| (15) |  |

The basic assumption behind Maier & Bimpikou’s (2019) PicDRT is that pictures are like elementary discourse units, that is, they express information about what the world looks like. As outlined above, to interpret a sequence of propositional units—pictorial or linguistic—as a coherent narrative means that we infer coherence relations. In this case, and in many panel transitions in many comics, the inferred coherence relation defaults to Narration: the policeman is chasing a squirrel and then he catches it.

| (16) |  |

The first thing to note is that, unlike in competing dynamic semantic accounts of pictorial discourse (Abusch & Rooth 2017; Wildfeuer 2019), the semantic representation in (16) literally contains pictures as constituents of its DRS boxes. This is in line with the original motivation of (S)DRT as a model of human cognitive discourse processing, with the (S)DRS approximating a dynamically changing structured mental representation ‘in the hearer’s head’ (Kamp 1981). The idea behind PicDRT representations like (16) is that human mental representations can be partly symbolic (as modeled by discourse referents and first-order logical formulas like ‘agent (e1, x)’), but also partly iconic and even pictorial (as modeled by the inclusion of picture conditions).

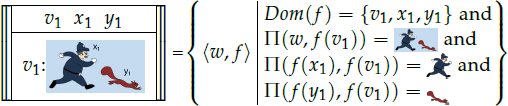

Zooming in on the pictorial DRS components in (16), Maier & Bimpikou (2019), inspired by Abusch (2012), add a ‘syntactic’ level of processing where pictures are labeled with viewpoint referents (v1, v2), and what they call salient regions of interest in the picture are labeled with individual discourse referents (x1, y2). A preliminary DRS representation of the first panel, with 2 salient regions introducing discourse referents, would be model-theoretically interpreted as in (17). Note that f is a partial assignment function, mapping discourse referents onto elements in the model’s domain (i.e., f maps x1 to an individual, v1 to a viewpoint vector, and e1 to an event). The (dynamic) semantic content of a DRS is an ‘information state’, that is, the set of world–assignment pairs that verify the DRS (Nouwen, Brasoveanu, van Eijck, & Visser 2022).

| (17) |  |

Paraphrasing informally, the DRS in (17) contributes the information that (i) there is a certain viewpoint from which the world looks like the whole picture (i.e., Π(w, f(v1)) = [image of the whole panel]); (ii) there’s an individual that, when projected from that same viewpoint, looks like the bluish region (i.e., Π(f(x1), f(v1)) = [image of the bluish region]); and (iii) there’s another individual that looks like the smaller brownish region (i.e., Π(f(y1), f(v1)) = [image of the brownish region]).
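The informal paraphrase of (17) can be sketched as a set of world–assignment pairs. This is a reconstruction from the paraphrase only, not the original display: the labels p, p_blue, and p_brown are introduced here as stand-ins for the whole panel and its two salient regions.

```latex
\[
\bigl\{ \langle w, f \rangle \;\bigm|\;
  \Pi(w, f(v_1)) = p,\;\;
  \Pi(f(x_1), f(v_1)) = p_{\mathrm{blue}},\;\;
  \Pi(f(y_1), f(v_1)) = p_{\mathrm{brown}}
\bigr\}
\]
```

Each conjunct corresponds to one clause of the paraphrase, and all three projections share the single viewpoint f(v1).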

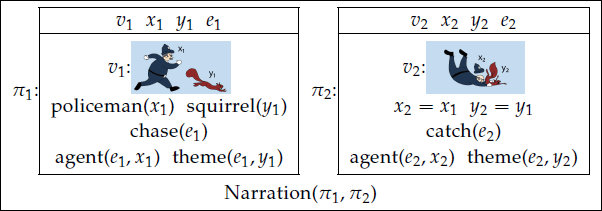

At a post-semantic level, based on general world-knowledge, genre, and background information about what things look like under common projections, properties and relations may be freely predicated of these discourse referents (e.g., ‘policeman (x1)’), as a form of ‘free pragmatic enrichment’ (Recanati 2010). Moreover, different discourse referents from different pictures can be equated (e.g., x2 = x1—a free pragmatic pictorial analogue of anaphora resolution, Abusch 2012). In addition to the discourse unit labels (π1, π2) and coherence relations (‘Narration(π1, π2)’) sketched in (17), we further add to the post-semantic enrichment stage the introduction of event discourse referents (e1,e2⋯). Note that this last enrichment is crucially driven by the semantics of Narration, which, as defined in (14), presupposes that both units introduce an event discourse referent.

| (18) |  |

The model-theoretic interpretation of (18), the post-semantically enriched version of the SDRS in (17), is a straightforward enrichment of the information state in (17) involving only standard DRT and SDRT semantics (but I will skip over the formalities here).

In sum: the semantics proper of a single picture is very minimal, basically, ‘the world looks like this at some point in space and time’. When presented with a few pictures in a seemingly deliberate sequence we go beyond mere conjunction of those minimal propositions (the world looks like this at some point and like that at some point), just like we do when presented with a series of utterances.13 The sequencing thus triggers a cognitive enrichment process that crucially involves the inference of various coherence relations in order to satisfy a global desire for maximal coherence.

Since the coherence-driven enrichment mechanism (here formalized in SDRT) thus applies uniformly to verbal and visual discourse, it should be well suited to modeling multimodal mixtures, like comics with textual elements (Wildfeuer 2019), illustrations with captions (Rooth & Abusch 2019) or tags (Greenberg 2019), and film (Cumming, Greenberg, & Kelly 2017; Wildfeuer 2014). If emojis are pictures, semantically, this same machinery should help us enrich the communicative content of emojis in relation to the surrounding text and/or other emojis.

3.2. Emojis and Coherence

We’ve assigned a minimal, pictorial content to object emojis like  that can be roughly paraphrased as ‘there is a viewpoint near where there is some object that looks like that.’ This semantic content is a proposition, or, if we follow the DRT approach sketched in the previous section, the dynamic equivalent of a proposition, viz. an information state.14 Following Lascarides and Stone’s (2009) original analysis of speech–gesture integration, but more directly following Grosz et al.’s (2021) analysis of activity emojis, these propositional emoji uses can be analyzed as discourse units in their own right, alongside the textual units.

| (19) | π1: Happy Birthday! | π2: I’m coming over this afternoon | π3: 🎁 |

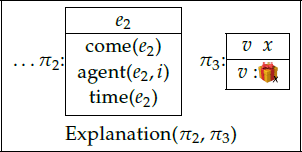

Maximizing coherence means inferring coherence relations between these discourse units. π2 contributes the existence of an event of the speaker coming over in the afternoon of the utterance day, while π3 contributes, roughly, the existence of a gold/brown box with a red bow at some point in space and time. The conjunction of those two pieces of information as such is not a coherent discourse, so we infer a relation, probably a causal relation (the box is the reason for the visit), which in SDRT would be called ‘Explanation’:

| (20) |  |

The semantics of Explanation, like that of Narration in (14), demands two events, and says that the second unit’s main event causes the first’s. Thus, the inference of an Explanation relation (to increase coherence) triggers the further inference that the picture depicts not just what the world looks like, but contributes an event. But how does a picture of a box depict an event?15

At this point I defer to what cognitive scientists call general cognitive schemas, scripts, or frames (Fillmore 2008): things that look like that, that is, nicely wrapped boxes with bows, typically contain gifts, and gifts are typically quite saliently involved in events of giving and receiving. Note that this is the same reasoning as what gave rise to the inference that the entity depicted by the mostly blue shape in the comic in (15) is (probably) a police officer and that the inferred positions of his arms and legs are (probably) snapshots of him running (rather than assuming a weird pose and floating in the air).

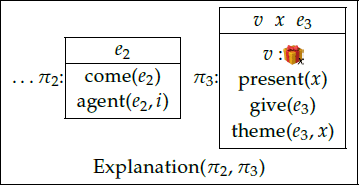

With all the defeasible pragmatic enrichments above, the coherent interpretation of the tweet in (19) now looks something like this:

| (21) |  |

Paraphrasing the interpretation of the SDRS in (21): there’s an event of the speaker coming over and an event of giving a present that looks like this, 🎁, and the latter event explains the former.

But does the gift really have to look just like that, that is, in a gold-colored box with a red bow? Of course, we’re assuming that Apple’s projection function includes some abstraction and stylization, leading to some indeterminacy about the actual size, shape, color, lighting, and background of the box that is depicted, but what if it’s a blue box with a yellow bow, or a ball wrapped in newspaper without a bow, or even just an electronic gift certificate? As it stands, the pictorial account would make the speaker a liar16 if she meant to give such a gift, which is obviously absurd. The emoji can be used for almost any kind of gift; it doesn’t really have to look like this 🎁. I consider this the fundamental challenge for a truly pictorial account of emojis, and I address it in the next section.

4. Emoji Pragmatics: Figurative Depiction

4.1. The Pictorial Overdetermination Challenge

To illustrate our discourse semantics framework once more, consider another example tweet:

| (22) | I’m coming over this afternoon 🚗 |

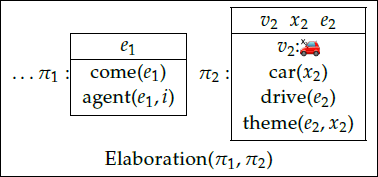

In this variation of (19) the emoji still clearly counts as a separate discourse unit, but now the connection is likely one of Elaboration (the event of my coming over involves a car) rather than Explanation.17

| (23) |  |

In words: there’s an event of the speaker coming over and that event includes the event of driving a car that looks like this. The phrase ‘looks like’ as always has to be understood in terms of projection.

Since Apple’s emojis tend to have various different colors that in many ways seem to reflect the actual colors of the depicted objects with some degree of faithfulness,18 we might reasonably assume that Apple’s projection function approximates actual color (within a finite set of fixed color codes). But then, counterintuitively, (22) would be false if the speaker drives a silver car.19

A first attempt at addressing this color mismatch problem in particular is to assume that, appearances notwithstanding, color is not in fact preserved in the relevant projections. Instead, just as we’re already abstracting away from details of shape, texture, shadow, etc., the Apple projection also ignores most colors and just maps certain real-world surfaces to a default red. It’s tricky to define exactly which colors get mapped to red, and which to black, white, and the various shades of grey and/or blue that occur in this particular car emoji, and how these color marking rules should be adjusted for different categories of objects and their emoji depictions. These may just be technicalities, in which case it’s worth noting that the resulting projection would still intuitively count as a pictorial mapping, in keeping with our starting point that the car emoji is a car picture.

However, this leaves us with a parallel problem of shape mismatches. What if the intended present is a round object wrapped in newspaper? Or the car is a big black four-door BMW that looks nothing like 🚗? Extending the color solution outlined above to shapes would mean that Apple’s projection specifies a fixed shape and then effectively maps every car to that shape. Now note that such a projection would be essentially concept-based in the sense that anything that falls under the concept of ‘car’, no matter what it looks like, gets mapped onto the car emoji. This would take us away from pictorial representation and well into symbolic territory. In fact, this projection function is literally just the inverse of the lexical semantic meaning of the English word car, so we’d end up with a symbolic rather than an iconic account.

Before I present a solution to the overdetermination challenge that avoids going symbolic like this, let’s first explore this alternative.

4.2. Creativity and Symbolic Overdetermination

As alluded to in Section 1, the apparent default view of emoji semantics treats emojis as essentially an extension of the lexicon of a certain genre of written language. In Grosz et al. (2021: 348), for instance, activity emojis are said to “serve as free-standing event descriptions, whose core argument is anaphoric”. Although they try to remain agnostic on the details of the lexical entry of an activity emoji like 🎻 (because it has to cover both playing the violin and being a violinist, among other things), their notion of an event description that contains an argument that is moreover anaphoric points in the direction of a language-like, that is, symbolic, lexicon extension, rather than a strictly pictorial semantics like the one I’m defending here.20

Interestingly, when we look at the variety of uses of our limited set of emojis, it becomes apparent that the symbolic approach also runs into an overdetermination problem. While it avoids overdetermining what the object looks like, it overdetermines its conceptual classification. In some cases, 🎁 really does denote something that looks like that without being a gift. For instance, in the tweet in (24) the advertiser is most likely creatively using the ‘wrapped present’ emoji to elaborate on the event of collecting parcels, relying on the fact that parcels are often boxes that kind of look like that.

| (24) | We deliver in South Africa via pep store for R59.95 or you can collect your parcels at Ferndale, Randburg 🎁 |

A symbolic account that treats 🎁 as a synonym of ‘gift’ or ‘wrapped present’, or that otherwise ties the meaning symbolically to the concept of a gift,21 would predict that (24) is infelicitous if the author intended to include people simply ordering stuff for themselves rather than as a gift.

In this particular case, the symbolist might object that the use in (24) is infelicitous: the author should have chosen 📦 to illustrate the general concept of parcels or delivery.22 But the same kind of creative usage of course happens when there is no better alternative emoji. For instance, on a symbolic account, 🎻 probably denotes something involving a violin. But that would exclude uses where it denotes playing a viola, or a cello (neither of which has its own dedicated emoji).

| (25) | Double-cello action in #Arensky’s beautiful quartet has inspired our two cellists to treat the audience to some bonus duos⋯ 🎻 |

In light of (25) the symbolist might propose ‘bleaching’ their lexical entry to accommodate cellos (e.g., to ‘bowed classical instrument’), but there will always be new, unforeseen use cases that don’t quite fit any proposed lexical definition (e.g., bring your ukulele! 🎻). We’ll discuss a more extreme case in the next subsection and suggest an appeal to metaphoric and other figurative meaning extensions to deal with such emoji usages. This appeal is in principle open to both symbolic and pictorial accounts, but I’ll argue that it works best with the pictorial account.

4.3. 🍆 as Image Metaphor

A good illustration of the flexibility of emoji meaning involves the well-known use of 🍆 and 🍑 to refer to somewhat taboo body parts and events involving them. On a symbolic account, we might theoretically give a lexical semantic interpretation of 🍆 that includes both eggplants and male genitalia. But probably a more intuitive approach would have eggplants as the literal meaning and derive the other use as a secondary,23 non-literal meaning somehow. But what kind of non-literal meaning is this?

Lakoff (1993) uses the term ‘image metaphor’ to describe a metaphorical interpretation based on visual resemblance between the literal meaning (‘source’) and the metaphorical interpretation (‘target’). He illustrates the phenomenon with linguistic examples like (26):

| (26) | a. | My wife⋯ whose waist is an hourglass. (André Breton, cited by Lakoff 1993) |
| | b. | His toes were like the keyboard of a spinet. (Rabelais, cited by Lakoff 1993) |
| | c. | The road snaked through the desert. (Barnden 2010) |

Note for instance that the waist in (26-a) is not in any way conceptually related to an hourglass—it doesn’t help keep time, for instance—it just looks like one.

Examples like (26) show the need for a general account of image metaphor, wholly independently of emoji or pictorial semantics. Without getting into the details here, we’ll assume such an independent account, say Lakoff’s, or, more conveniently integrated within the kind of SDRT framework we’re already using, Agerri, Barnden, Lee, and Wallington’s (2007). Now, proponents of both the pictorial and the symbolic account could appeal to that account to explain the common non-literal interpretation of the eggplant emoji. Interestingly, since the symbolist makes the eggplant emoji roughly synonymous with a linguistic utterance of ‘(there’s an) eggplant’ we would expect the eggplant–penis metaphor to occur linguistically as well—and occasionally it does:

| (27) | The Warri pikin took to his IG account this morning to flaunt his eggplant in wet white underwear.24 |

Although examples like (27) are not hard to google, they really are not that common either. In fact, it’s quite possible that many of the linguistic instantiations of this particular image metaphor are derivative on the widespread emoji usage.

On my pictorial account of 🍆, we can readily explain why the emoji instantiation is so much more prominent than its linguistic cousin. The emoji literally tells us what the world looks like, and hence, when we get to the level of pragmatic enrichment, this pictorial content is readily extended with further image-based inferences. In cognitive processing terms, the basic pictorial semantics would predict engagement of the visual system in semantic processing already,25 so we can expect image-metaphoric pragmatic processing to be a natural follow-up. To borrow more cognitive processing terminology, the image-metaphoric pragmatics is primed by the pictorial semantics.26

4.4. Varieties of Figurative Meaning in Emoji Interpretation

Generalizing beyond 🍆, the interpretation process I propose is as follows. The literal semantics of the emoji is projective: from some viewpoint, the world projects onto this, 🚗, which means it contains a red car-shaped object. With this minimal meaning in hand (e.g., (mentally) represented in the form of a basic pictorial DRS condition), we enter into the realm of pragmatics, which includes various kinds of pragmatic enrichment, including the inference of coherence relations, properties, and events (as described in Section 3), but also, typically, finding a non-literal meaning whenever that fits the context better.27,28

In the eggplant case, deriving this figurative meaning involved a rather pure image metaphor, namely mapping the depicted vegetable to a body part on the basis of a visual resemblance. In other cases, the metaphor may be partly based on conceptual similarity. This is unproblematic for both symbolic and pictorial accounts because, since Lakoff and Johnson (2003), it is commonly accepted that metaphors involve similarities or analogies at the level of semantic content (i.e., mental concepts, in their cognitive semantic framework), rather than at a strictly linguistic level. This means that even if image metaphors are the most natural complements to our proposed pictorial semantics, any other kind of figurative interpretation we find in text or speech can in principle be applied to emojis as well. After all, on the projective account, pictures and linguistic utterances express similar semantic contents (viz., possible worlds propositions, or their dynamic equivalents, information states).

Let’s apply this to the examples illustrating the pictorial overdetermination challenge in Section 4.1. In the case of 🚗 referring to a big black BMW, the interpreter may map the depicted red car-shaped object to various makes and models of cars on the basis of a mixture of resemblance and conceptual similarity (viz., all being cars). In the case of 🎁 referring to an electronic gift certificate, we have to move beyond image metaphor altogether and assume a purely conceptual mapping (from box with bow to gift card, on the basis of both being gifts).

Finally, in addition to metaphor, there are many metonymic uses of emojis that likewise require non-resemblance-based mappings. For instance, 📷 (literally) depicts an old-fashioned camera, but can be used metonymically to denote photos, and 🥑 literally depicts a half avocado, but can be used metonymically to denote avocados generally, or healthy vegan food more generally (28-a), or even the typical consumers of said food (28-b).

| (28) | a. | Forever mad at myself for taking so long to go vegan 🥑 |
| | b. | Proud to be #Hipster 🥑 |

To sum up, the pictorial account of emojis suggests a continuity between emoji semantics and image-metaphoric pragmatics, which correctly predicts the widespread use of image metaphors in emoji usage (see  ). In addition, pragmatic emoji interpretation on my pictorial account—as on any symbolic alternative—may also invoke (partly) conceptual metaphor, or various forms of metonymy. All kinds of non-literal meanings can be associated with any concept, whether it’s introduced symbolically by a word, or pictorially by a painting, animated gif, sticker, or emoji.

A proper description and formalization of ‘(vague) resemblance’ (either projectively or non-projectively), of ‘conceptual similarity’, of hybrid image-based/conceptual metaphors, of the metonymy–metaphor distinction, of the integration of metaphoric meaning extensions in the coherence-driven SDRT account of pragmatic enrichment, and of the conventional entrenchment and eventual lexicalization of stale metaphors/metonyms over time, is all well beyond the scope of the current paper. To defend these substantial omissions I can only point out that accounts of all these phenomena are already independently needed for the proper analysis of any figurative meaning in any kind of language, and are hence in no way tied to the interpretation of emojis or pictures specifically.

5. Face Emojis and Expressives

5.1. The Special Status of Face Emojis

Up until this point we have been focusing almost entirely on a specific subclass of emojis, viz., those depicting familiar, concrete objects. In actual usage, however, object emojis are decidedly less common than emojis depicting expressive parts of the body, especially faces and hands. According to emojitracker, the top 20 emojis include 14 face and 2 hand emojis, and 0 object or event emojis (the remaining four are a recycling symbol and 3 types of heart emojis, which I already put aside as potentially symbolic rather than pictorial in Section 1).

Apart from some ‘symbolic modifiers’ (like the heart-shaped eyes in the heart-eyes face emoji, for which in Section 1 I deferred to Maier 2019), on my account these face emojis are just as pictorial as the object emojis discussed above, or as animated gifs, cartoons, or manga panels. Nonetheless, they are known to interact somewhat differently with the surrounding text. According to Grosz et al. (2021; in press) and Kaiser and Grosz (2021), face emojis are expressives, meaning that they are used to express the speaker’s emotional state, roughly the same way verbal expressives do. Thus, the two utterances in (29) mean roughly the same: Kate said that Sue sent the report and I have a negative emotional attitude about that.

| (29) | a. | kate said that sue sent the report to ann [face emoji] (Grosz et al. 2021) |
| | b. | kate said that sue sent the f*cking report to ann (Grosz et al. 2021) |

Potts (2007) lists the defining characteristics of expressives: (i) their contribution is hard to paraphrase precisely in non-expressive terms; (ii) they are speaker-oriented (‘indexical’) and (hence) unaffected by embeddings (but in some special cases may be subject to pragmatic ‘perspective shift’ in the sense of Amaral, Roberts, and Smith 2007; Harris and Potts 2010); and (iii) they are infinitely gradable (e.g., by varying intonation or repetition).

Emojis satisfy Potts’s characteristics. Regarding (i), the face emoji in (29-a) indicates a negative attitude, but the linguistic paraphrase I gave above is just a rough approximation, not by any means semantically or pragmatically equivalent. Regarding (ii), Grosz et al. (2021) show that face emojis tend to express the emotional state of the producer of the utterance, while activity emojis can be anchored to any salient entity, depending on what connection creates the most coherent output.

| (30) | a. | Sue’s on her way now [happy face emoji] |
| | | ↝ ⋯ and {I’m/*she’s} happy about that |
| | b. | Sue’s on her way now [car emoji] |
| | | ↝ ⋯ and {*I’m/she’s} traveling by car |

What’s more, face emojis tend to project out of embeddings, while activity emojis can also be interpreted under negation:

| (31) | a. | If I had gone, I’d have missed Ada [happy face emoji] |
| | | ↝ I’m happy (that I didn’t go, because now I could hang out with my friend Ada) |
| | | ↝̸ If I’d gone, I’d have been happy (because then I’d have missed that annoying Ada) |
| | b. | By now, Sue hasn’t trained for months [surfing emoji] (Grosz et al. 2021) |
| | | ↝ surfing is part of the training29 |

Furthermore, Kaiser and Grosz (2021) show experimentally that face emojis are not always anchored to the actual speaker, but like linguistic expressives may indeed be subject to a perspective shift. For instance, in constructions with a salient third-person experiencer argument, the face emoji may be interpreted as conveying either the speaker’s or the experiencer’s attitude:

| (32) | Anna admired Betty [face emoji] |
| | ↝ I’m glad about that |
| | ↝ Anna has a positive attitude |

Regarding (iii), while face emojis themselves are not as flexible as Wittgenstein’s suggestion of drawing expressive faces by hand, their emotive content can be scaled indefinitely by creating sequences of similar or the same emojis (McCulloch & Gawne 2018):

| (33) | Omgggggg he’s so cute [repeated face emojis] |

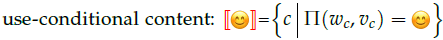

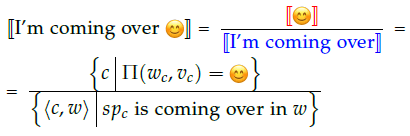

The linguistic data reviewed above strongly suggest, first, that face emojis are semantically different from the object and event emojis that we discussed in the previous section, and second, that they are expressives. In this section I reconcile these observations with my primary claim that emojis (face, object, and event emojis alike) are pictures. This requires that we first get clear on what expressives are and how to analyze them semantically (viz., in terms of use conditions). I then argue that many facial expressions and hand gestures are really expressives, and that face and hand emojis are ‘use-conditional pictures’ of such expressive gestures.

5.2. Expressivism, Expressives, and Use-Conditional Content

Expressivism is the view that some linguistic constructions can express meaningful semantic content that does not contribute to the derivation of truth-conditional content. Philosophers and linguists, more or less independently of each other, have provided expressivist accounts of ethical and esthetic vocabulary, knowledge ascriptions, mental state self-ascriptions, exclamatives, epithets, slurs, etc. What exactly is expressed by these constructions or statements containing them is a matter of debate, ranging from the emotional state of the speaker (Ayer 1936; Potts 2007; Stevenson 1944) to a more abstract semantic notion like use-conditional content (Charlow 2015; Gutzmann 2015; Kaplan 1999; Predelli 2013). While Grosz et al. (2021) opt for a more Pottsian (2007) analysis (defining emotive content in terms of real intervals signifying emotional valence), I’ll introduce and adopt the latter, more minimalistic approach to expressive content, which has the benefit of not forcing the semanticist to make any assumptions about the underlying cognitive architecture of emotions.

The use-conditional analysis of expressives can be traced back, again, to Wittgenstein:

We ask, ‘What does “I am frightened” really mean, what am I referring to when I say it?’ And, of course, we find no answer, or one that is inadequate. The question is: ‘In what sort of context does it occur?’ (Wittgenstein 1958)

In other words, expressive utterances are not amenable to a standard compositional semantic treatment in terms of reference and truth. “I am frightened” is not so much a (truth-evaluable) assertion about what the world is like, but rather an expression of the speaker’s emotional state. Instead of trying to capture the propositional content, that is, the set of worlds where the sentence is true, we should look for the ‘contexts of use’. While Wittgenstein himself takes this idea much further, turning ‘meaning is use’ into a general characterization of linguistic meaning across the board, Kaplan (1999) offers a way to isolate this insight about the meaning of expressive vocabulary and integrate it into an otherwise traditional formal semantic framework.

There are words that have a meaning, or at least for which we can give their meaning, words like ‘fortnight’ and ‘feral’. There are also words that don’t seem to have a meaning, words like [‘ouch’ and ‘oops’]. If the latter have a meaning, they’re at least hard to define. Still, they have a use, and those who know English know how to use them. (Kaplan 1999)

Gutzmann (2015) works out the details of semantic composition, adding significant extensions to Kaplan’s program. I’ll adopt some of Gutzmann’s implementation and notation below. The general idea is that in semantics we encounter two types of content: descriptive (or truth-conditional) and expressive (or use-conditional). Some expressions carry only descriptive content (‘flower’, ‘walk’, ‘every’) and combining them into a sentence will give us its truth conditions, in linguistics typically captured as a possible worlds proposition. In the following we’ll use  to denote the descriptive content of a term α.

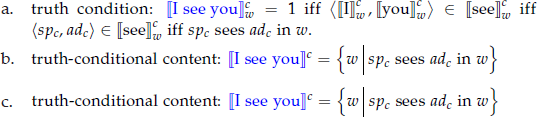

To deal with indexicals, Kaplan (1989) had already introduced a second semantic parameter, c, to the semantics. That way we can model the truth-conditional proposition expressed by an utterance of an expression in a context, (34-b), as well as the more abstract ‘character’ modelling the descriptive linguistic meaning of the sentence, (34-c). Notation: $sp_c$ and $ad_c$ denote the speaker/writer of context c and the hearer/reader/interpreter/addressee of c, respectively.

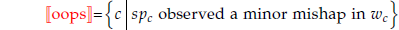

| (34) |  |
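The relation between the content of a sentence in a context and its character can be illustrated with a small Python sketch. This is only a toy model under stated assumptions: the example sentence 'I am hungry', the three-world model, and all names (`World`, `Context`, `i_am_hungry`) are hypothetical and chosen for illustration; they are not taken from (34).

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet


@dataclass(frozen=True)
class World:
    name: str
    hungry: FrozenSet[str]  # the individuals who are hungry in this world


@dataclass(frozen=True)
class Context:
    speaker: str    # sp_c
    addressee: str  # ad_c


# A proposition is a set of worlds; a character maps contexts to propositions.
Proposition = FrozenSet[World]
Character = Callable[[Context], Proposition]

# Hypothetical three-world model.
WORLDS = frozenset({
    World("w1", frozenset({"kate"})),
    World("w2", frozenset({"sue"})),
    World("w3", frozenset({"kate", "sue"})),
})


def i_am_hungry(c: Context) -> Proposition:
    """Character of the toy sentence 'I am hungry': 'I' picks out sp_c."""
    return frozenset(w for w in WORLDS if c.speaker in w.hungry)


def true_at(character: Character, c: Context, w: World) -> bool:
    """Truth of a sentence relative to a context and a world."""
    return w in character(c)


# The same character yields different propositions in different contexts.
kate_speaking = Context(speaker="kate", addressee="sue")
sue_speaking = Context(speaker="sue", addressee="kate")
print(sorted(w.name for w in i_am_hungry(kate_speaking)))
print(sorted(w.name for w in i_am_hungry(sue_speaking)))
```

The design choice worth noting is that the character is a function, not a set: the indexical 'I' is resolved by the context before any worlds are collected.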

As we saw in the quotation above, the starting assumption of Kaplan’s (1999) expressivism was that there are some expressions that do not contribute to this truth-conditional content (or character), but instead express content we can model in terms of use conditions. Take Kaplan’s central example: ‘Oops’. While it’s weird to judge ‘oops’ as either true or false, we can judge whether a particular ‘oops’ was uttered felicitously on a given occasion by a given speaker. For instance, in the context where someone just saw a car run over and kill their family’s beloved pet, an ‘oops’ would be infelicitous, because the word ‘oops’ seems reserved for what Kaplan calls ‘minor mishaps’, as captured in the use condition in (35-a). We then define use-conditional content as a set of contexts, namely those where the expression is felicitously used, (35-b).

| (35) | a. | use condition: a use of ‘oops’ is felicitously uttered in c iff the speaker just observed a minor mishap in c |
| | b. | use-conditional content: |

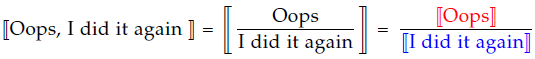

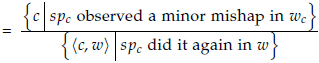
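Read as a set of contexts, the use condition in (35-a) can be written out roughly as follows. The double-bracket notation with a superscript u is assumed here for illustration, following common practice in the use-conditional literature; only the use condition itself and the abbreviation $sp_c$ come from the text.

```latex
[\![ \text{oops} ]\!]^{u} \;=\; \{\, c \mid sp_c \text{ just observed a minor mishap in } c \,\}
```

On this rendering, an utterance of ‘oops’ in a context c is felicitous just in case c is a member of this set.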

Gutzmann goes on to set up a type system to model the compositional contributions of hybrid and subsentential expressives, but first he introduces a nice fraction notation for Logical Forms (LF) that puts expressives (and their use-conditional interpretations) on top, and descriptions (and their truth-conditional interpretations) below.30

| (36) |  |

|

The full linguistic meaning of a sentence with some expressives is thus a pair consisting of a use-conditional content and a truth-conditional character.31
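The idea of a meaning as a pair can be sketched in Python as follows. This is a minimal illustration, not Gutzmann's type system: the class `Meaning`, the context feature `speaker_is_annoyed`, and the two-world model are all hypothetical. The two toy sentences are simplified from (29): a plain 'sue sent the report' and its counterpart with a verbal expressive.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet

World = str
Proposition = FrozenSet[World]


@dataclass(frozen=True)
class Context:
    speaker: str               # sp_c
    addressee: str             # ad_c
    speaker_is_annoyed: bool   # hypothetical feature of the context


@dataclass(frozen=True)
class Meaning:
    # Upper half of the fraction: use-conditional content,
    # given as a membership test on contexts.
    use_condition: Callable[[Context], bool]
    # Lower half of the fraction: truth-conditional character.
    character: Callable[[Context], Proposition]

    def felicitous(self, c: Context) -> bool:
        return self.use_condition(c)

    def true_in(self, c: Context, w: World) -> bool:
        return w in self.character(c)


# Hypothetical model: the report was sent in w1 but not in w2.
REPORT_SENT: Proposition = frozenset({"w1"})

# 'sue sent the report': no expressive, so felicitous in every context.
plain = Meaning(lambda c: True, lambda c: REPORT_SENT)

# 'sue sent the f*cking report': same truth conditions,
# but only felicitous if the speaker has a negative attitude.
expressive = Meaning(lambda c: c.speaker_is_annoyed, lambda c: REPORT_SENT)

calm = Context("kate", "ann", speaker_is_annoyed=False)
print(plain.true_in(calm, "w1"), expressive.true_in(calm, "w1"))
print(plain.felicitous(calm), expressive.felicitous(calm))
```

The two dimensions are evaluated independently: in the calm context the expressive variant is true in w1 but infelicitous.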

5.3. Expressive Gestures

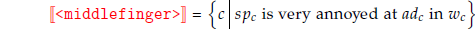

Emblematic gestures like the middle finger or waving goodbye are sometimes said to be non-verbal expressives (Ebert 2014). Indeed, we can easily verify this by checking off Potts’s (2007) criteria, the way we already did for emojis above. For instance, the meaning of the middle finger gesture concerns the speaker’s attitude (towards their addressee); it is surely negative but hard to pin down with a purely descriptive paraphrase; and it can be graded continuously by exaggerating or repeating the gesture (or combining it with facial expressions or verbal expressives).32 Since these emblematic gestures are as much conventional, intentional, symbolic, and hence as ‘language-like’ as Kaplan’s verbal examples ‘ouch’ and ‘oops’, it makes sense to analyze them semantically on a par, that is, as contributing use-conditional content.

| (37) | a. | use condition: use of middle finger gesture is felicitous iff the speaker is very annoyed at the addressee |
| | b. | use-conditional content: |

Crucially, as with verbal expressives, there are both felicitous and infelicitous uses of the middle finger. Someone who gives their neighbor the finger to greet her is doing something wrong, or at least breaking an established convention, as is someone who gives a person the finger while actually being angry at someone else. Hence, the use-conditional content provided by the definition in (37) is non-trivial and arguably approximates the gesture’s core linguistic meaning.
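The non-triviality of (37) can be made concrete with a small Python sketch that computes the gesture's use-conditional content over a handful of contexts. The `Context` fields, the `annoyed_at` feature, and the three sample contexts are assumptions made for this illustration; they mirror the greeting case and the angry-at-someone-else case, but are not part of the formal definition.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Context:
    speaker: str                # sp_c
    addressee: str              # ad_c
    annoyed_at: Optional[str]   # hypothetical: whom the speaker is very annoyed at, if anyone


def middle_finger_felicitous(c: Context) -> bool:
    """Use condition (37-a): the speaker is very annoyed at the addressee."""
    return c.annoyed_at == c.addressee


# Three toy contexts in which ann makes the gesture towards bob.
annoyed_at_addressee = Context("ann", "bob", annoyed_at="bob")
friendly_greeting = Context("ann", "bob", annoyed_at=None)
angry_at_third_party = Context("ann", "bob", annoyed_at="carl")

CONTEXTS = [annoyed_at_addressee, friendly_greeting, angry_at_third_party]

# Use-conditional content in the sense of (37-b), restricted to the toy contexts:
# the set of contexts in which the gesture is felicitously used.
use_conditional_content = {c for c in CONTEXTS if middle_finger_felicitous(c)}

for c in CONTEXTS:
    print(c.annoyed_at, c in use_conditional_content)
```

Only the first context ends up in the set, so both infelicitous uses are excluded by the use condition.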

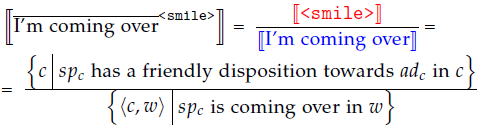

Now, the same considerations apply to (some) facial expressions. Though a smile, unlike the middle finger gesture, is to some extent more natural and perhaps even culturally universal (Darwin 1872), and not always intentional or conscious, we can still say that it is felicitous if the speaker has a friendly disposition towards the addressee, and infelicitous otherwise.33 Notation: I’m using an ‘overline’ notation borrowed from sign language studies to denote co-speech gestures.

| (38) |  |

5.4. Face Emojis as Use-Conditional Pictures

Facial expressions and face emojis both behave like expressives, as we verified by checking off Potts’s criteria in 5.3 and 5.1, respectively. But only face emojis are at the same time pictures. I’ve proposed viewing face emojis as pictures of facial expressions, which are in turn expressives. But this does not immediately explain why the emojis themselves behave like expressives.