1 Current assessment systems and their issues

This article considers how the commonly adopted mechanisms of research assessment are reducing multilingualism and bibliodiversity—publications that address national and regional audiences in their own languages (Giménez Toledo et al. 2019)—in research publishing worldwide. By considering assessment at the university, journal, and book level, we will demonstrate that the emphasis on a limited number of metrics, primarily citation counts of journals that are indexed in particular databases, is resulting in an increasing proportion of English-language publications. Beyond language, this narrowing of publication behavior affects local context and culture. There are some indications that the increased adoption of open research activity, particularly open infrastructure, offers a practical and effective counter to this global issue.

English became the lingua franca of academic discourse in the twentieth century (Gordin 2015), and recent evidence shows that, in non-English-speaking countries, the proportion of journal articles published in English rather than the national language is increasing (McIntosh and Tancock 2023; Weijen 2012). An analysis of 2020 publications registered with Crossref Digital Object Identifiers (DOIs) clearly demonstrated this, with over 85% of the works published in English. The next largest languages represented were German (2.9%), Portuguese (2.2%), Spanish (2.0%), and Indonesian (1.3%) (UNESCO 2023a). A caveat is that some countries use their own DOI registration agencies, so works registered only with those agencies are excluded from this count. Books also follow this trend: the Book Citation Index shows language biases for books similar to those Crossref shows for journals, with a 2013 study finding that 75% of the indexed book chapters originated from the United States and England. The language other than English with the greatest share of book chapters was German, with 14% (Torres-Salinas et al. 2013). The domination of English in academic publication is partly due to the emphasis institutions place on researcher assessment and on university rankings, both of which are heavily slanted towards English-language research outputs.

Given its focus on how assessment affects local culture and language, this article distinguishes between areas of the world. It uses the term Global South in the sense of a “general rubric for decolonised nations roughly south of the old colonial centres of power” (Haug 2021). The authors work in an open access (OA) scholarly book environment. However, research and publication on the assessment of journals far outweigh those on the assessment of books, and much of the discussion in the article reflects this imbalance. We begin with a discussion of assessment at the university level before looking at the differences in the way journal articles and books are assessed. As books are still the major publication form in the humanities and social sciences, it is important not to focus only on journals. We then discuss scholarly infrastructure and how it is embedded in assessment systems, exploring the bias towards English-language research outputs throughout. Next, the article introduces alternative assessment options, including the use of open infrastructure, and explores how these alternatives can address issues of linguistic and participatory equity. The second part of the article discusses two examples of open infrastructure: the OAPEN Library and the Directory of Open Access Books (DOAB).

1.1 Assessment at the university level

University rankings have long been a mechanism of assessment in the academic environment, with several rankings capturing world attention for nearly two decades. The three major global university rankings claim to measure slightly different things. For example, the 2024 Times Higher Education (THE) World University Rankings “analysed more than 134 million citations across 16.5 million research publications and included survey responses from 68,402 scholars globally” (Times Higher Education 2024). The QS World University Rankings are based on an “analysis of 17.5m academic papers and the expert opinions of over 240,000 academic faculty and employers” (Quacquarelli Symonds 2024). The Academic Ranking of World Universities “uses six objective indicators to rank world universities, including the number of alumni and staff winning Nobel Prizes and Fields Medals, number of highly cited researchers selected by Clarivate, number of articles published in journals of Nature and Science, number of articles indexed in Science Citation Index Expanded™ and Social Sciences Citation Index™ in the Web of Science™, and per capita performance of a university” (ShanghaiRanking 2022).

Despite these claimed differences, all three university rankings primarily measure a university’s reputation and its research performance, and all rely on bibliometric databases (Craddock 2022; Bridgestock 2021). Analysis has found that the variables used provide an ambiguous quantification of a university’s performance. According to Friso Selten et al. (2020), “it revealed that the rankings all primarily measure two concepts—reputation and research performance—but it is likely that these concepts are influencing each other… . Our results also show that there is uncertainty surrounding what the rankings’ variables exactly quantify.” In effect, the results are biased towards science-oriented universities and favor older institutions in the English-speaking parts of the world.

There has been a long history of commentary on and criticism of university rankings. An early call to critically examine the underlying assumptions of the league tables (Steele, Butler, and Kingsley 2006) has been followed by a considerable body of work. In 2015, the Leiden Manifesto set out 10 principles to guide research evaluation. These include the principle that quantitative evaluation should support qualitative expert assessment rather than overshadow it (Hicks et al. 2015). In the United Kingdom, the 2022 Harnessing the Metric Tide report (Curry, Gadd, and Wilsdon 2022) encouraged institutions to engage more responsibly with university rankings, including by considering signing up to the More Than Our Rank initiative (INORMS 2022). More than 400 European research-performing and research-funding organizations have signed the Agreement on Reforming Research Assessment, which commits signatories to “avoid the use of rankings of research organisations in research assessment” (CoARA 2022). This increased activity around responsible metrics has been described as a “professional reform movement” (Rushforth and Hammarfelt 2023).

University rankings show a clear bias towards institutions in the United States and the United Kingdom. Institutions from these two countries make up 41% of the top 200 universities in the Academic Ranking of World Universities (ARWU) and 44% of the top 200 in THE (Horton 2023). The bias is even starker when considering the top 20 ranked universities across THE and ARWU. In 2021, 19 of those 20 positions went to universities from the United States and United Kingdom (Nassiri-Ansari and McCoy 2023). This partly reflects the rankings' use of bibliometric sources, which are dominated by English-language journals, and the advantage this gives native English speakers (Altbach 2012; Selten et al. 2020; Vernon, Balas, and Momani 2018).

The very low proportion of universities located in Africa, Asia, Latin America, and the Caribbean represented in rankings has been described as the “silencing of the South” (Horton 2023). This reflects a “colonial hierarchy” that entrenches “the global dominance of universities from the Global North while driving universities in the Global South to focus on priorities that are externally determined and which may require them to divert significant resources away from core academic activities towards the intensive and time consuming business of data collection and international competition” (Nassiri-Ansari and McCoy 2023, 18).

According to Australia’s chief scientist, the university ranking system has “given rise to an ‘unhelpful nexus’ between universities, publishers, funders and global ranking agencies, as researchers and their institutions chased higher international rankings through publication numbers” (Cassidy 2023). There are, however, signs of a shift in this trajectory, as some universities have taken action. Multiple universities have withdrawn from the US News and World Report rankings, which are based in the United States (Allen and Takahashi 2022). Utrecht University also withdrew from the 2024 THE rankings (Utrecht University 2023). University rankings are hugely influential at many levels, but their heavy focus on the Global North and English-language publications limits participation from universities outside English-speaking countries. Indeed, Rhodes University referred to the “neocolonial nature of ranking systems” in its statement about its withdrawal from university rankings (Rhodes University 2023). Elsewhere, university rankings have been described in terms of their “coloniality” (Nassiri-Ansari and McCoy 2023).

1.2 Assessment at the journal level

The journal impact factor (JIF) purports to measure how often the average article in a journal has been cited in a specific period. It is calculated “by dividing the number of current year citations to the source items published in that journal during the previous two years” (Clarivate 2023) by the total number of citable items the journal published during those two years. Like university rankings, the JIF has come under prolonged scrutiny, with decades of articles condemning its use to measure researchers. Criticisms of the way the impact factor is calculated include:

- variation due to statistical effects;
- the short period of time it covers;
- the lack of representation in the sample of journals used;
- the effect of the research field on the impact factor.

As an example of the representation gap, journals in the Emerging Sources Citation Index, which includes many Global South publications, were only assigned JIFs in 2023 (Quaderi 2023). There is also considerable criticism of the JIF's misuse as a gauge of the relative importance of individual researchers and institutions (Amin and Mabe 2003; Hecht, Hecht, and Sanberg 1998; Opthof 1997; Seglen 1997; Walter et al. 2003). Among the many concerns raised about impact factors is the opaque nature of the decision about what counts as a “citable” article type. In 2006, the JIF was managed by Thomson Scientific (later part of Thomson Reuters). That year, this opacity prompted the comment that “science is currently rated by a process that is itself unscientific, subjective, and secretive” (PLoS Medicine Editors 2006). Today JIFs are published by Clarivate in Journal Citation Reports (JCR) (Clarivate 2023).
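Written out, the standard two-year JIF for a year Y takes the form below. The symbols are introduced here only for illustration, and the worked numbers describe a hypothetical journal, not any real title. The numerator counts citations to all items the journal published, while the denominator counts only items classed as “citable”. This asymmetry is where the opacity about article types becomes consequential.

```latex
\[
\mathrm{JIF}_{Y} = \frac{C_{Y}(Y-1) + C_{Y}(Y-2)}{N_{Y-1} + N_{Y-2}}
\]
% C_Y(t): citations received in year Y by all items the journal published in year t
% N_t:    number of citable items (e.g., research articles and reviews) published in year t

% Hypothetical journal: 100 citable items in 2021 and 120 in 2022,
% cited 330 and 330 times respectively during 2023.
\[
\mathrm{JIF}_{2023} = \frac{330 + 330}{100 + 120} = \frac{660}{220} = 3.0
\]
```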

JIFs are not calculated for all journals. One of the major criticisms of the JIF concerns the basis on which journals are selected for inclusion in the reports (Bollen et al. 2005). The original selection process aimed to provide access to the “most important and influential scholarly journals.” However, the majority of the selected journals are in English and come from North America and Europe, with many areas of the world underrepresented (Steele, Butler, and Kingsley 2006). JIFs are simply inappropriate for journals focused on local issues. Indeed, many national journals are unique in their fields, so using traditional impact factors to indicate journal use is misleading (Taşkın et al. 2020). A recent study criticized the JIF's lack of bibliodiversity, which stems from its heavy focus on publications from the Global North (Bardiau and Dony 2024).

Using the JIF as a proxy for the quality of a specific article or individual is highly problematic, with citation distributions “so skewed that up to 75% of the articles in any given journal had lower citation counts than the journal’s average number” (Bohannon 2016). This is because a small number of highly cited articles in a journal commonly drive up its overall JIF (Larivière et al. 2016). In recognition of these long-standing concerns, the San Francisco Declaration on Research Assessment (DORA) was launched in 2012 with the intent to “Put science into the assessment of research” (DORA 2014). Today its mission is “to advance practical and robust approaches to research assessment globally and across all scholarly disciplines” (DORA, n.d.).
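The Python sketch below illustrates the skew that Bohannon describes. The citation counts are invented for illustration and are not data from any study. A few heavily cited articles pull the mean, which is what a JIF-style average reports, well above the typical article.

```python
from statistics import mean, median

# Invented citation counts for the 20 articles of a hypothetical journal.
# A handful of highly cited papers sit at the end of the list.
citations = [0, 1, 1, 2, 2, 2, 3, 3, 3, 4, 4, 5, 5, 6, 7, 12, 15, 25, 40, 60]


def share_below_mean(counts):
    """Return the fraction of articles cited fewer times than the mean."""
    avg = mean(counts)
    below = sum(1 for c in counts if c < avg)
    return below / len(counts)


if __name__ == "__main__":
    print(f"mean citations:   {mean(citations):.1f}")
    print(f"median citations: {median(citations):.1f}")
    print(f"share below mean: {share_below_mean(citations):.0%}")
```

With these invented counts, the mean is 10 citations and the median is 4. Fifteen of the 20 articles (75%) fall below the mean. This is why a journal-level average says little about any individual article in that journal.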

Roughly a decade after DORA was launched, the Agreement on Reforming Research Assessment argues for the “abandon[ment] of inappropriate uses in research assessment of journal- and publication-based metrics, in particular inappropriate uses of Journal Impact Factor (JIF) and h-index” (CoARA 2022). It has become clear that a narrow focus on publications as the sole point of assessment is problematic. There have been calls for a shift to “assessing the values and impacts of science and with a focus on the people who are doing, engaging with and/or benefiting from science” (UNESCO 2023a, 10).

Nevertheless, the JIF continues to be used to assess academics “despite the numerous warnings against such use” (McKiernan et al. 2019). Because the JIF carries high stakes for academic careers, individuals and journals may try to game it, resulting in “radically new forms of academic fraud and misconduct” (Biagioli and Lippman 2020).

1.3 Assessing books

Books are not measured using the same mechanisms as journals. Books take longer to write and publish, so counting citations over a period of only a few years makes little sense, because many citing works would not yet have been published. There are indications that the “half-life” of scholarly books is well over a decade (Arao, Santos, and Guedes 2015). In addition, books can be placed in multiple categories beyond the discipline of their subject, which makes standardized measurement highly challenging. In academic book publishing, formats range from monographs and edited volumes to major reference works and textbooks. The possibility of multiple editions of the same title further complicates the landscape. A study of over 70,000 books found that the metrics for a given title varied significantly from one measurement mechanism to another. This challenges the possibility of a standardized metric (Halevi, Nicolas, and Bar-Ilan 2016).

The challenges to standardized assessment posed by books have not prevented attempts to create indexes. Clarivate offers the Book Citation Index in Web of Science, containing more than 137,000 books, and Scopus indexes over 334,000 books (Clarivate, n.d.-a; Elsevier, n.d.-a). However, recent research considering the discipline of political science indicated “the Web of Science Book Citation Index is failing to meet its stated ambitions—because it is not well structured to achieve those ambitions and not well marketed to the essential actors in the publishing industry who are critical for it to function as intended” (Hill and Hurley 2022).

The bibliometric indicators used for journals are not helpful for measuring the impact of books, so other indicators are needed. A. J. M. Linmans (2010) sought a mechanism for evaluating research performance in the humanities, particularly given that the Web of Science did not include references cited in books. Linmans proposed measuring the extent to which books by the same authors are represented in the collections of representative academic libraries in different countries. This idea gained traction and was adopted into commercial applications such as PlumX (Torres-Salinas, Robinson-Garcia, and Gorraiz 2017). Plum Analytics was subsequently acquired by Elsevier (Plum Analytics 2017). The value of measuring library holdings was further supported by a study comparing print and electronic holding counts as predictors of the impact of books. The study identified a need to distinguish between these two types of counts. It concluded that curated print holdings are a more accurate indicator of impact than the large collections of electronic books held by libraries (Maleki 2022).
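The Python sketch below gives a rough illustration of the holdings-based idea by counting how many libraries in a sample hold each title. The library names, titles, and data structure are hypothetical. This is a simplification for illustration only, not Linmans' exact procedure or the method used by PlumX. It shows only the basic logic of treating holdings as a signal of a book's reach.

```python
from collections import Counter

# Hypothetical sample of "representative" academic libraries (which could be
# drawn from different countries) and the invented titles each one holds.
holdings = {
    "Library A": {"Title 1", "Title 2"},
    "Library B": {"Title 1"},
    "Library C": {"Title 1", "Title 3"},
    "Library D": {"Title 1", "Title 2"},
}


def holdings_share(holdings):
    """Return, for each title, the share of sampled libraries that hold it."""
    counts = Counter(title for titles in holdings.values() for title in titles)
    n_libraries = len(holdings)
    return {title: count / n_libraries for title, count in counts.items()}


if __name__ == "__main__":
    for title, share in sorted(holdings_share(holdings).items()):
        print(f"{title}: held by {share:.0%} of sampled libraries")
```

Aggregating these shares across all titles by the same author would give an author-level indicator. In such a scheme, the key design decision would be which libraries count as "representative", and this sketch leaves that question open.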

Another way to measure a book’s scholarly value is based on the prestige of the publisher, an approach practiced in several European countries. Whether the quality of a publisher can be objectively quantified is doubtful. Publishers' quality assurance processes lack transparency, and experts in different countries may hold contradictory opinions on a publisher's prestige. Together, these factors lead to less than optimal results (Dagienė 2023). Scholarly books do not lend themselves to easy metrification. This could be an advantage for the medium, given the challenges bibliometric databases pose for journal publishing.

1.4 The influence of bibliometric databases

University rankings and the JIF are based on one of the two main commercial bibliometric databases: Clarivate’s InCites (based on Web of Science) and Elsevier’s SciVal (based on Scopus). Both are highly influential. The JIF is generated from Clarivate’s Web of Science. THE and QS rankings both use Elsevier’s SciVal as their publication and citation data source. The ARWU uses Clarivate’s InCites database as its publication and citation source (Szluka, Csajbók, and Győrffy 2023). The 2021 Research Excellence Framework (REF) in the United Kingdom used citation information from Clarivate Analytics (Higher Education Funding Council for England 2018). Prior to its cancellation, the Australian Research Council’s 2023 research assessment exercise, Excellence in Research for Australia, had also selected Clarivate Analytics to provide the citation information (Australian Government 2023).

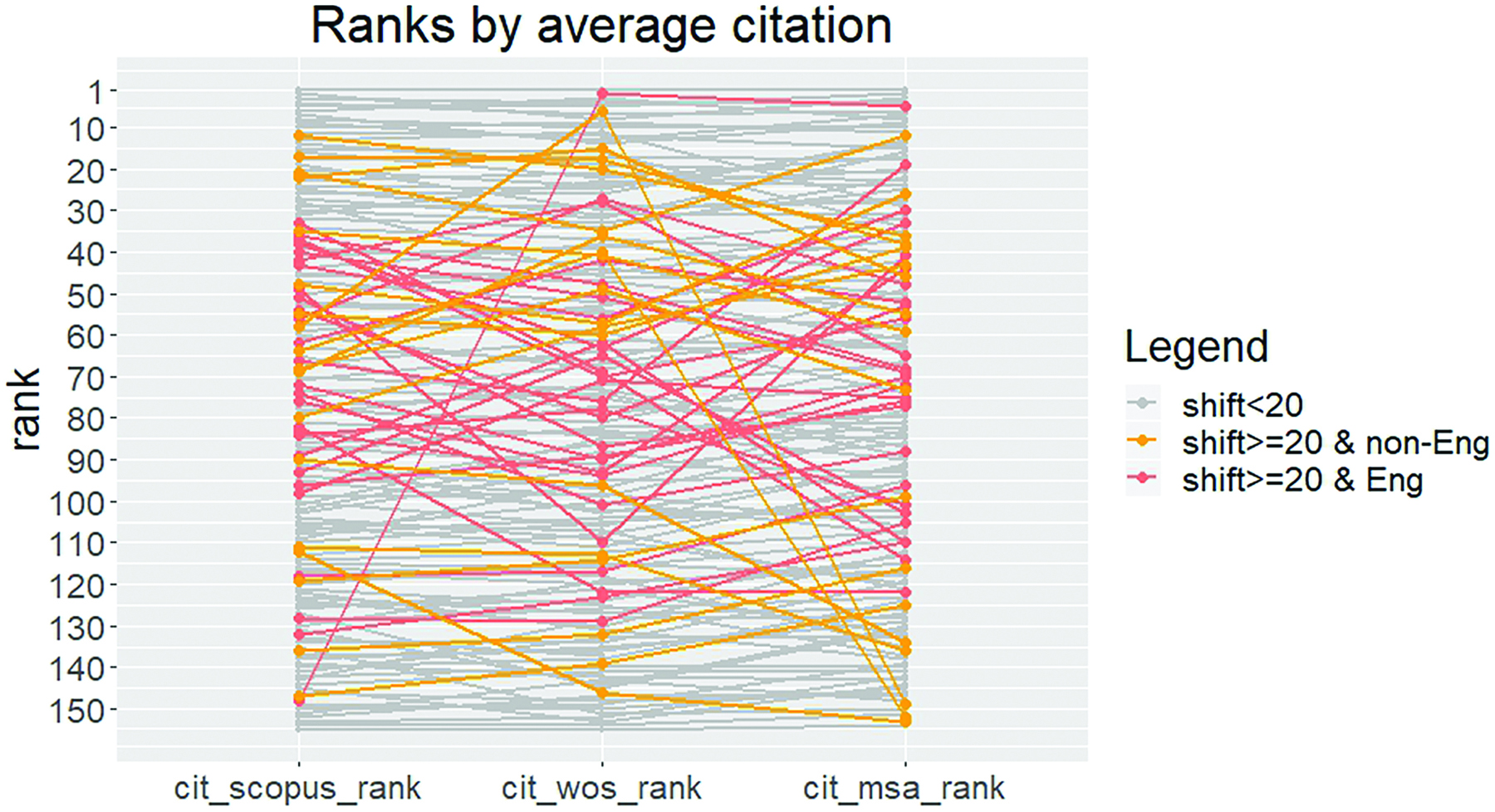

Given that these databases ostensibly use the same source information, one might expect their data to align. However, a bibliographic comparison of Web of Science, Scopus, and the now-retired Microsoft Academic found significant differences between the databases. The implications for university rankings were “drastic.” The most affected universities were non-English-speaking universities and “those outside the top positions in international university rankings” (Huang et al. 2020) (see Figure 1).

These differences take on great significance when bibliometric indicators are integrated into systems of incentives. A global study found that self-citation declined internationally between 1996 and 2019, but a subset of countries showed the reverse. The common factor among those countries was that they had introduced direct or indirect rewards for the bibliometric performance of scientists. Notably, these were not performance-based funding systems; rather, “it appears that the crucial factor influencing this anomalous self-citation behavior is the proximity of incentives based on citations to an individual researcher’s career and wage” (Baccini and Petrovich 2023). The authors of the study note, “when bibliometric indicators, and citation-based indicators in particular, are integrated into systems of incentives, they cease to be neutral measures to become active components in the research system. As such, they are able to modify the behavior of entire scientific communities. Hence, they should be handled by science policy makers with the most extreme caution” (Baccini and Petrovich 2023).

Commercial databases take a narrow view of scholarly literature, with a heavy focus on English-language journals from the Global North. For example, in 2022 more than 25,000 journals across 136 countries were published in multiple languages using the open source Open Journal Systems software. Of these, only 1.2% were indexed in the Web of Science and 5.7% in Scopus (Khanna et al. 2022). This clearly disadvantages publications in local languages. This English-language bias is reflected in the selection processes of the two main commercial databases. For journals publishing in languages other than English, Scopus requires that the journal has “content that is relevant for and readable by an international audience, meaning: have English language abstracts and titles” and that an English-language journal home page is available. This also applies to books, for which “book metadata (including title and abstract) must be in the English language” (Elsevier, n.d.-b). The Web of Science gives priority “to books presented in English; however, books presented in other languages will be considered if they are of interest to a sufficiently broad research community” (Clarivate, n.d.-b). For journal articles, “regardless of the language of the main body of published content, the journal must provide an accurate, comprehensible English language translation of all article titles. Scholarly articles must have abstracts, and those abstracts must be translated to English” (Clarivate, n.d.-b).

Some authors argue that it is critical to decentralize journal indexing by establishing independent regional-level citation databases to reduce Western bias in gauging the quality of journals (Bol et al. 2023). There are some existing examples of regional (Latin American) indexation platforms using local scientometric tools. These include Scientific Electronic Library Online (SciELO), the Redalyc database and digital library, the Latin American and Caribbean Health Sciences Literature (LILACS) database, and the Latindex directory of scholarly journals from Latin America (Salager-Meyer 2015). Lastly, a recent article uses citation patterns to describe how open access helps to close the gap between lower- and higher-income countries. It also found that usage of Web of Science was higher among authors from higher-income countries (Karlstrøm, Aksnes, and Piro 2024).

Moves have also been made towards open alternatives to the commercial databases. In recent years, OpenAlex has been gaining traction as an open source index of research from across the world (Priem, Piwowar, and Orr 2022). Launched in 2022, it currently indexes over 245 million academic publications. The Leiden Ranking Open Edition is an open alternative to institutional rankings. It was developed by the Centre for Science and Technology Studies (CWTS) at Leiden University in collaboration with Curtin University’s Open Knowledge Initiative and released on January 30, 2024 (Centre for Science and Technology Studies 2024).

1.5 Scholarly infrastructure: “Whoever controls the media, controls the mind”

Global North commercial academic publishers are increasingly in control of scholarly infrastructure.1 This has been achieved through processes described by some as “vertical integration” and “platformisation” (Ma 2023). For example, Elsevier, through its parent company RELX, has acquired and launched products that extend its influence over, and ownership of, infrastructure to all stages of the academic knowledge production process (Posada and Chen 2018). Other descriptions of the same phenomenon have used the term “platform capitalism” (Chan 2019), referring to the consolidation of scholarly publishing into end-to-end platforms (Pooley 2022; Berger 2021). The bibliometric databases discussed above form part of this platformization.

Consolidation of the management of knowledge into the control of a small number of Global North companies poses many issues including the reinforcement of the power of these platforms. The business model creates a circular “hypercompetitive environment with the purpose of increasing the volume of publications. It is because the higher the number of publications, the more data can be collected for data products and consultancy services,” which in turn are marketed back to the institutions providing the publications (Ma 2023). This monopolization and commercialization of scholarly data has led to large data companies such as RELX and Clarivate being described as “data cartels” (Lamdan 2022).

The issues extend to global copyright law, which, it is argued, “in its current design and interpretation, is essentially a product of the North.” This results in “the international commercial scholarly publishers based and enclosing scientific knowledge in the global North,” a situation that effectively closes science off to those working in the Global South (Beiter 2023). This situation has implications for all research production. It is particularly problematic for institutions in the Global South, which use the same assessment patterns as their “Northern” counterparts. Platform capitalism reduces bibliodiversity by stifling opportunities for non-profit, scholar-led, and library publishers. It also further disadvantages Southern scholars as knowledge creators by excluding journals from the Global South (Berger 2021). This exclusion has led to an increased dependency on English-language publication.

Institutions place high emphasis on assessing researchers through citation counts and on university rankings, which are themselves dependent on citation counts. This creates pressure to obtain as many citations as possible, and there is ample evidence that publishing in English, particularly in English-language journals, results in more citations. The emphasis on English-language titles in bibliographic databases makes these journals more discoverable than journals in other languages. One study demonstrated that, within a given journal, articles written in English are more likely to be cited, and receive more citations, than those published in Spanish, Portuguese, French, Japanese, or Korean (Di Bitetti and Ferreras 2017). German-language medical papers are less likely to be cited in English-language articles than in German-language articles (Winkmann, Schlutius, and Schweim 2002). China has an official policy of encouraging more international publication in English to increase the globalization of Chinese scholars (Flowerdew and Li 2009). This emphasis on English publication also extends to books. In the mid-1970s, the share of publications in French reflected the proportion of French speakers in Canada, but today that share is below parity. French-speaking researchers favor publishing in English due to “a desire for more visibility, impact or peer recognition” (Lobet and Larivière 2022, 25).

This emphasis on publishing in “international” (i.e., English-language) journals has multiple consequences for non-native English speakers. Reviewers may judge the quality of their English over the academic content of their work. Non-English speakers also have limited access to scientific research, both within the scientific community and in the community at large (Márquez and Porras 2020). The focus on English has serious consequences for those publishing in their first language. One scholar uses the phrase “cucaracha (cockroach) syndrome” to describe “the feeling of my research and perspective being routinely undervalued and underappreciated due to my local context and language abilities” (Pérez-Nebra 2023). These biases also affect how research is reported globally. Some argue that there is a strong need for multilingual means of accessing research and that “Google Translate is not enough” (McElroy and Bridges 2018, 618; Taşkın et al. 2020).

There are also implications for non-native-English-speaking students when they are expected to read English textbooks. While English-language textbooks were seen as less difficult than English-language articles, one study found that the time taken for Swedish students to read these books was considerably longer than when reading in their first language (Eriksson 2023). The amount of extra time required to work in a second language is also a significant issue for authors (Flowerdew and Li 2009; Meneghini and Packer 2007).

The English-language focus also affects the type of research undertaken in non-English-speaking countries. “In such a case, some transfer of knowledge may have taken place from South to North, but South-South transfer—transfer to where that knowledge could be most relevant and needed—is impeded” (Lor 2023, 14). This can result in an “imbalance in knowledge transfer in countries where English is not the mother tongue; much scientific knowledge that has originated there and elsewhere is available only in English and not in their local languages” (Amano, González-Varo, and Sutherland 2016). There is some evidence that the choice of language is influenced by the intended audience. Work targeted at a local scientific community tends to be published in the local language. By contrast, international discourse, particularly in the natural and technological sciences, uses English as the sole language (Acfas 2021; Chinchilla-Rodríguez, Miguel, and de Moya-Anegón 2015).

Publications in the humanities and social sciences appear more likely than those in other disciplines to be published in the local language (Flowerdew and Li 2009). For example, considerably more books are published in French in the human sciences than in the natural sciences (Acfas 2021). In social science fields such as political science, sociology, geography, history, and folklore, there are “countless contextual (social, political, economic, and cultural) reasons for publishing ‘nationally,’ using the respective languages,” which runs counter to publishing only in English-language international journals (Paasi 2005, 773).

Having to work in English when it is not the researchers’ primary language also raises cultural issues and requires adaptation by researchers (Woolston and Osório 2019). This extends to choosing collaborators, with implications for the focus of the research undertaken. For example, the choice of country with which Argentinian researchers collaborate has a direct effect on research impact. The highest impact is tied to collaboration with the United Kingdom, the United States, and Germany (Chinchilla-Rodríguez, Miguel, and de Moya-Anegón 2015).

The combination of the commercial ownership of scholarly information and the related emphasis on English-language publishing is having a profound effect on what research is undertaken. Lai Ma (2023) argues, “It is apparent that the platformisation of scholarly information is affecting … the authority as to what is knowledge or information.” The international use of English as the lingua franca is causing an “emerging tendency for homogenisation in geographical research and publication practice” (Paasi 2005, 772).

Kazumi Okamoto (2015, 72) notes, “If we have to tackle issues that hinder social science scholars from regions that are not included in the West, our first target should be the taken-for-granted universality of the Western social science knowledge generation and dissemination system and of norms and conventions in the Western social sciences.” Concerns about the anglicization of academic discourse include the loss of culture, identity, ideology, meaning, and transfer of knowledge. Anglicization not only undermines and slows the development of national cultures. The emphasis on the culture and ideology of English-speaking countries also shapes the formation of scientific discourse and defines what is considered valid research (Acfas 2021).

One of the consequences of this homogenization is that “non-traditional” forms of knowledge acquisition are marginalized. An example is the study of Native American and other Indigenous peoples “whose knowledge traditions and worldviews make few or no distinctions between or at least inextricably link the physical world and the metaphysical world” (Shipley and Williams 2019, 296). This represents an alternative worldview to that presented in traditional Global North research. The cultural differences between the originating country and the Global North perspective can be so marked that they form “separate discourse communities” in terms of the issues studied and method of approach (Flowerdew and Li 2009, 9).

1.6 Considerations of alternative assessment

While early discussions around “open” focused on open access to published literature (Chan et al. 2002), global discussions have since broadened to open science. The word “science” is used here in the sense of the Latin scientia, meaning knowledge, and encompasses all research; terms such as “open research” are also used with the same meaning. Open science is “a set of principles and practices that aim to make scientific research from all fields accessible to everyone for the benefits of scientists and society as a whole” (UNESCO 2023b). The philosophy behind open science includes equity as an outcome, so it is worth considering how some of the issues discussed above are reflected in the open environment. Open science policies are unevenly distributed globally, with Europe playing a leading role in open science policy development and implementation. Plan S was launched in 2018, when major European and American funders joined together to require that the published research they fund be openly accessible, usually via payment for publication (cOAlitionS 2018). In 2022, while the European Union accounted for 17% of total global articles, reviews, and conference papers, it accounted for 26% of the world’s gold open access publications (STM, n.d.). Furthermore, an analysis of open science policies has shown an overwhelming focus on making research outputs available, while concrete guidance on how to achieve equity, diversity, and inclusion, and public participation in research, is neglected (Chtena et al. 2023). These inequities have led to arguments that “progressive open access practices and policies need to be adopted, with an emphasis on social justice as an impetus, to enhance the sharing and recognition of African scholarship, while also bridging the ‘research-exchange’ divide that exists between the global south and north” (Raju and Badrudeen 2022).
Multilingualism can promote inclusiveness and equity among researchers, and policymakers should consider multilingualism as a mechanism for encouraging bibliodiversity and for preventing English as the lingua franca from turning into the lingua unica (Leão 2021).

1.6.1 Is “open” addressing issues of lingual and participation equity?

In parallel with the policy environment, the locations of open publication and data repositories are heavily dominated by the Global North. Although many researchers are sharing their findings in open access repositories, “Western Europe and North America account for nearly 85% of all the open access repositories while Africa and the Arab region account for less than 2% and 3%, respectively” (UNESCO 2023a, 42). The language spread across data repositories is more positive, although far from ideal: “of the 3,117 data repositories, the majority of data repositories indexed in the Registry of Research Data Repositories use English, followed by German (9%), French (9%), Spanish (4%), and Chinese (3%); other languages are used in less than 16% of the indexed data repositories” (UNESCO 2023a, 43).

Open access is, however, increasing the diversity of citation sources “by institutions, countries, subregions, regions, and fields of research, across outputs with both high and medium–low citation counts” (Huang et al. 2024). This analysis of large-scale bibliographic data from 2010 to 2019 found a stronger effect for open access through disciplinary or institutional repositories than for open access via publisher platforms.

Open access publication offers an alternative route for publishing in languages other than English. Diamond open access journals do not charge a fee to publish. Of the articles in languages other than English published between 2020 and 2022 in journals listed in the Directory of Open Access Journals, 86% appeared in diamond journals (UNESCO 2023a). While open access outputs from Northern Europe and North America benefit most from increased citations from other regions of the world, there are indications that open access outputs from traditionally underrepresented regions are increasing (Huang et al. 2024).

As with most of the evidence and examples provided in this article, the majority of research is focused on the journal publishing environment, but there is some research into monographs. A recent survey, for example, suggests that interest in publishing monographs open access is relatively low: the majority of publishers indicated that open access monographs represent less than 10% of their published works, and fewer than 10% of authors inquire about OA publication options (Shaw, Phillips, and Gutiérrez 2023). Nevertheless, OA monograph content is used substantially more than closed books, and across a wider range of countries, including by underserved populations and in low- to middle-income countries (Neylon et al. 2021).

Open access is making significant inroads into the Global North's stranglehold, providing opportunities for publication and access to a wider range of research than is available commercially. This is made possible by the infrastructure that supports publication, distribution, analysis, and preservation.

1.6.2 The open infrastructure alternative

There are alternatives to the large commercial infrastructure providers. Open infrastructure is a set of services, protocols, standards, and software built by the community for the benefit of the community to support open research practices (Invest in Open Infrastructure 2022). Community-based open infrastructures are generally values-driven, with some emphasizing their “cannot be sold” status (Casas et al. 2024). A global ecosystem of open infrastructure has been developing in parallel with the commercial providers over the past 20 years.

Many open infrastructures start as a project or idea from a few members in the scholarly communication community and are often initially reliant on volunteer labor or philanthropy. However, there have been multiple recent initiatives to formalize and consolidate open infrastructures. As an example, the Principles of Open Scholarly Infrastructure (POSI) “offers a set of guidelines by which open scholarly infrastructure organisations and initiatives that support the research community can be run and sustained” (Bilder, Lin, and Neylon 2020).

Funding for open infrastructure can be challenging, often relying on memberships, grant funding, or the largesse of a supporting institution. There have been some initiatives to formalize the financial support of open infrastructure. In 2017 the 2.5% Commitment was proposed, in which “every academic library should commit to invest 2.5% of its total budget to support the common infrastructure needed to create the open scholarly commons” (Lewis et al. 2018). The same year saw the Global Sustainability Coalition for Open Science Services (SCOSS) form as “a network of influential organisations committed to helping secure OA and OS infrastructure well into the future,” where open infrastructures are invited to apply for SCOSS coordinated funding (Global Sustainability Coalition for Open Science Services, n.d.). Invest in Open Infrastructure (IOI) arose as a concept in 2018 and secured funding in 2019, with paid staff from 2020. IOI takes a “big picture” approach, “providing actionable, evidence-based guidance and tools to institutions and funders of open infrastructure, and piloting funding mechanisms to catalyse investment and diversify funding sources for open infrastructure” (Invest in Open Infrastructure 2022). In the open monograph space, the Open Book Collective “brings together publishers, publishing service providers, and scholarly libraries to secure the diversity and financial futures of open access book production and dissemination.” The goal is to “restore custody over scholarly publishing” (Open Book Collective, n.d.).

There are calls to subsidize not-for-profit publishers to allow them to scale and survive, noting that “expecting them to offer cost savings over established commercial publishers is a sure-fire way to see them fail” (Johnson 2024). A clear example of such public support is Latin America, where the main drivers of open access “have been public universities, scientific societies, research foundations and other government organizations” (Hagemann 2023). It is important to align support for infrastructure with the way research itself is supported and to move away from reliance on volunteers and philanthropy (Thibault et al. 2023). There are some good exemplars of large organizations choosing to develop and support open infrastructure internally, CERN being a notable case (CERN Open Science, n.d.). Recent collective international work has focused on strengthening the diamond open access ecosystem, with the intention of developing a “global federation for Diamond Open Access” (Science Europe 2023). The German Research Foundation (DFG) is calling for proposals that “seek to boost the performance capacity of Diamond Open Access infrastructures operating in Germany” (German Research Foundation 2024).

However, for all of the substantial work and investment into open infrastructure, “the use of metrics in comparing and benchmarking individual achievement to university performance becomes the choke point in the further development of open research infrastructure” (Ma 2023). There is a real threat to the sustainability of open infrastructure and multilingualism if the research endeavor continues to focus solely on citations as a means of assessment. In the next section, we will discuss the collections of the OAPEN Library and DOAB and how they are addressing issues of multilingualism and bibliodiversity.

2 How has OAPEN addressed these issues?

The OAPEN Foundation is a not-for-profit organization based in the Netherlands, with its registered office at the National Library in The Hague. OAPEN promotes and supports the transition to open access for academic books by providing open infrastructure services to stakeholders in scholarly communication, such as hosting, deposit, quality assurance, dissemination, and digital preservation. The foundation works with publishers to build a quality-controlled collection of open access books (OAPEN, n.d.). The OAPEN Foundation manages two open access book platforms and may be considered one of the more prominent infrastructure providers in this space. The older of the two platforms is the OAPEN Library, officially launched in 2010 and set up to host and disseminate open access books and chapters (Ogg 2010). It aims to build a quality-controlled collection and to provide services for publishers, libraries, and research funders in the areas of dissemination, quality assurance, reporting, and digital preservation. DOAB was officially launched in 2013 as a joint service of OAPEN, OpenEdition, CNRS, and Aix-Marseille Université, provided by the DOAB Foundation (Whitford 2014). Since then it has become a global focal point for open access books and metadata and is often seen as the de facto source for open access books. The OAPEN Foundation takes part in the activities of the global infrastructure community as a signatory to POSI and a beneficiary of SCOSS funding, as described above.

Both platforms make the daily updated metadata of their complete collections freely available under a Creative Commons Zero (CC0) license. This means that the metadata, in several formats, is in the public domain without any restrictions. This availability not only allows libraries and aggregators to use the metadata in their own offerings but also enables scrutiny of the collection data presented in this article.

The collection of the OAPEN Library is also skewed towards books in English. It is therefore not surprising that the total number of downloads for English-language titles is large, and this tends to obscure the usage of the other titles. However, when usage is examined on a country-by-country basis, the picture is more nuanced. When the top 10 book downloads in many countries are analyzed, it becomes clear that books written in the national language or in a widely used language are downloaded frequently. In countries where English is a major language, many of the most downloaded books deal with regional concerns. This counters the narrative of the dominance of English (Snijder 2022).

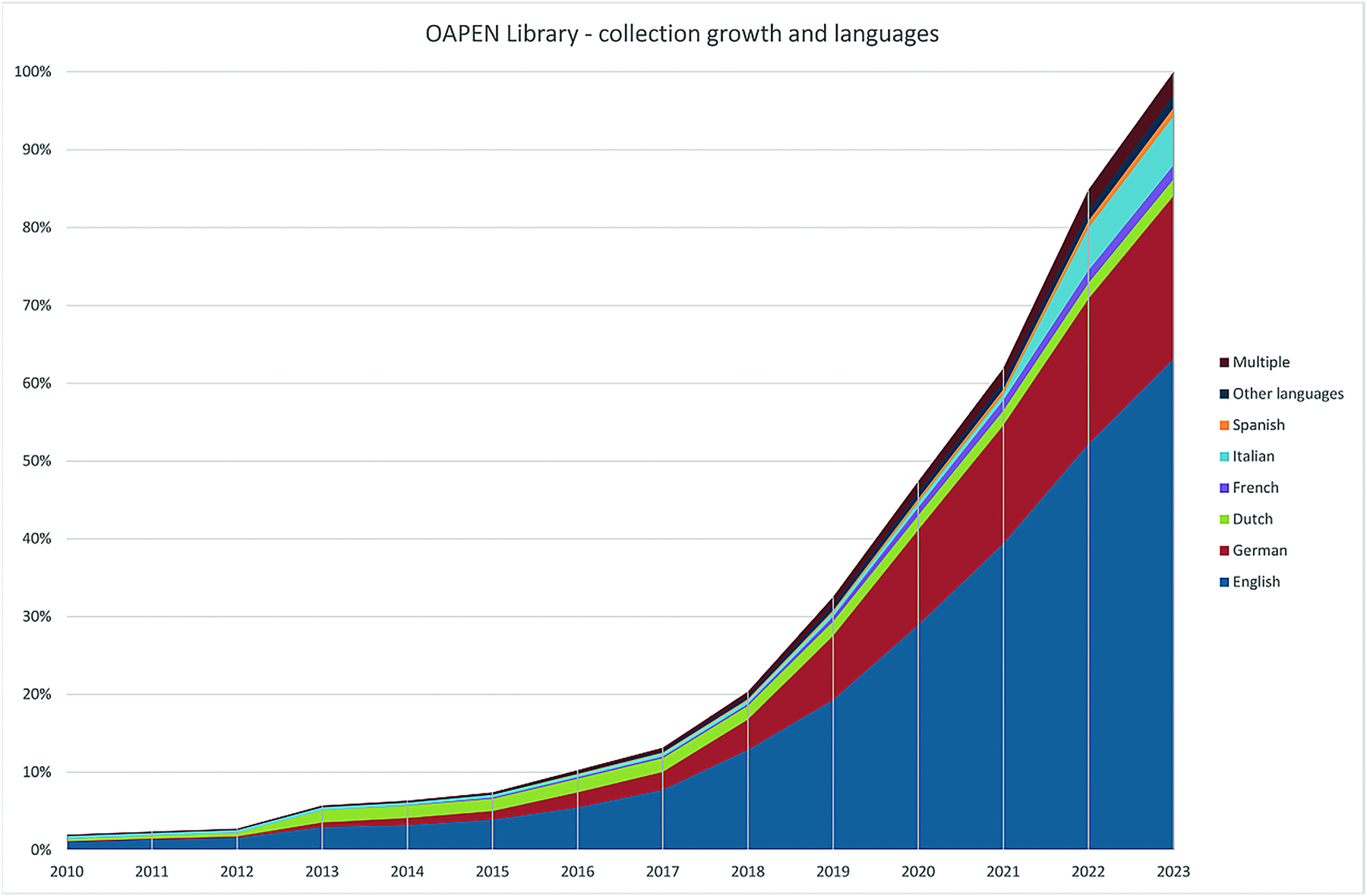

Currently, English is the dominant language in the OAPEN Library, but what have the trends been over the last decade?

2.1 OAPEN analyzed

Both the OAPEN Library and DOAB use the open-source DSpace 6 platform. As previously mentioned, the metadata of their complete collections are freely available.2 The metadata contain a range of information about the titles, including the date each title was added to the collection.3 This allows us to count the number of titles added per year. For this analysis, the metadata of the complete collections were downloaded as a comma-separated text file and imported into Excel, and the values of the column “dc.date.accessioned” were used to determine the year in which a given title was added to the OAPEN Library or DOAB, respectively. In the charts below, the number of titles in the collections in a given year is shown as a percentage of the number of titles in 2023.
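The counting step described above was done in Excel; the same calculation can be sketched in a few lines of Python. This is a minimal, non-authoritative sketch under several assumptions: the column “dc.date.accessioned” comes from the text, but the language column name (`dc.language`), the ISO 8601 date format, and the “||” separator for multi-valued fields are assumptions to check against the actual export. The denominator is taken to be the whole collection (all languages) in 2023, which is one reading of “a percentage of the number of titles in 2023”.

```python
import csv
import io
from collections import Counter, defaultdict

# "dc.date.accessioned" is the column named in the text; the language column
# name is a hypothetical placeholder and should be checked against the export.
DATE_COLUMN = "dc.date.accessioned"
LANGUAGE_COLUMN = "dc.language"


def titles_added_per_year(rows):
    """Count titles by language and by the year they were added to the collection."""
    counts = defaultdict(Counter)
    for row in rows:
        # Assumes ISO 8601 timestamps (e.g. "2012-05-21T10:11:12Z"), so the
        # first four characters are the year.
        year = int(row[DATE_COLUMN][:4])
        # Assumes multiple values are joined with "||"; only the first is kept.
        language = (row.get(LANGUAGE_COLUMN) or "").split("||")[0].strip() or "unknown"
        counts[language][year] += 1
    return counts


def cumulative_share(counts, final_year=2023):
    """Cumulative titles per language and year, as a percentage of the whole
    collection (all languages) up to and including final_year."""
    all_years = [y for per_year in counts.values() for y in per_year if y <= final_year]
    first_year = min(all_years)
    total = sum(n for per_year in counts.values() for y, n in per_year.items() if y <= final_year)
    shares = {}
    for language, per_year in counts.items():
        running = 0
        series = {}
        for year in range(first_year, final_year + 1):
            running += per_year.get(year, 0)
            series[year] = 100 * running / total
        shares[language] = series
    return shares


if __name__ == "__main__":
    # Made-up rows for illustration only; not OAPEN or DOAB data.
    sample = (
        "dc.date.accessioned,dc.language\n"
        "2011-03-01T00:00:00Z,English\n"
        "2011-06-01T00:00:00Z,German\n"
        "2012-01-15T00:00:00Z,English\n"
        "2023-02-01T00:00:00Z,English||German\n"
    )
    shares = cumulative_share(titles_added_per_year(csv.DictReader(io.StringIO(sample))))
    for language, series in sorted(shares.items()):
        print(language, round(series[2023], 1))
```

With the made-up sample, the script prints `English 75.0` and `German 25.0`; the per-language series then sum to 100% in 2023, which is how a stacked growth chart of the kind shown below can be read.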

When we look at the growth of the OAPEN Library collection over the years (see Figure 2), the continuous dominance of the English language is striking. In the early years, the percentage of titles in languages other than English was higher, but as time progressed most of the added titles were written in English. The total number of German-language titles also kept growing at a steady pace. In the last two years, an influx of Italian titles is visible; this is due to the efforts of one publisher, Firenze University Press.

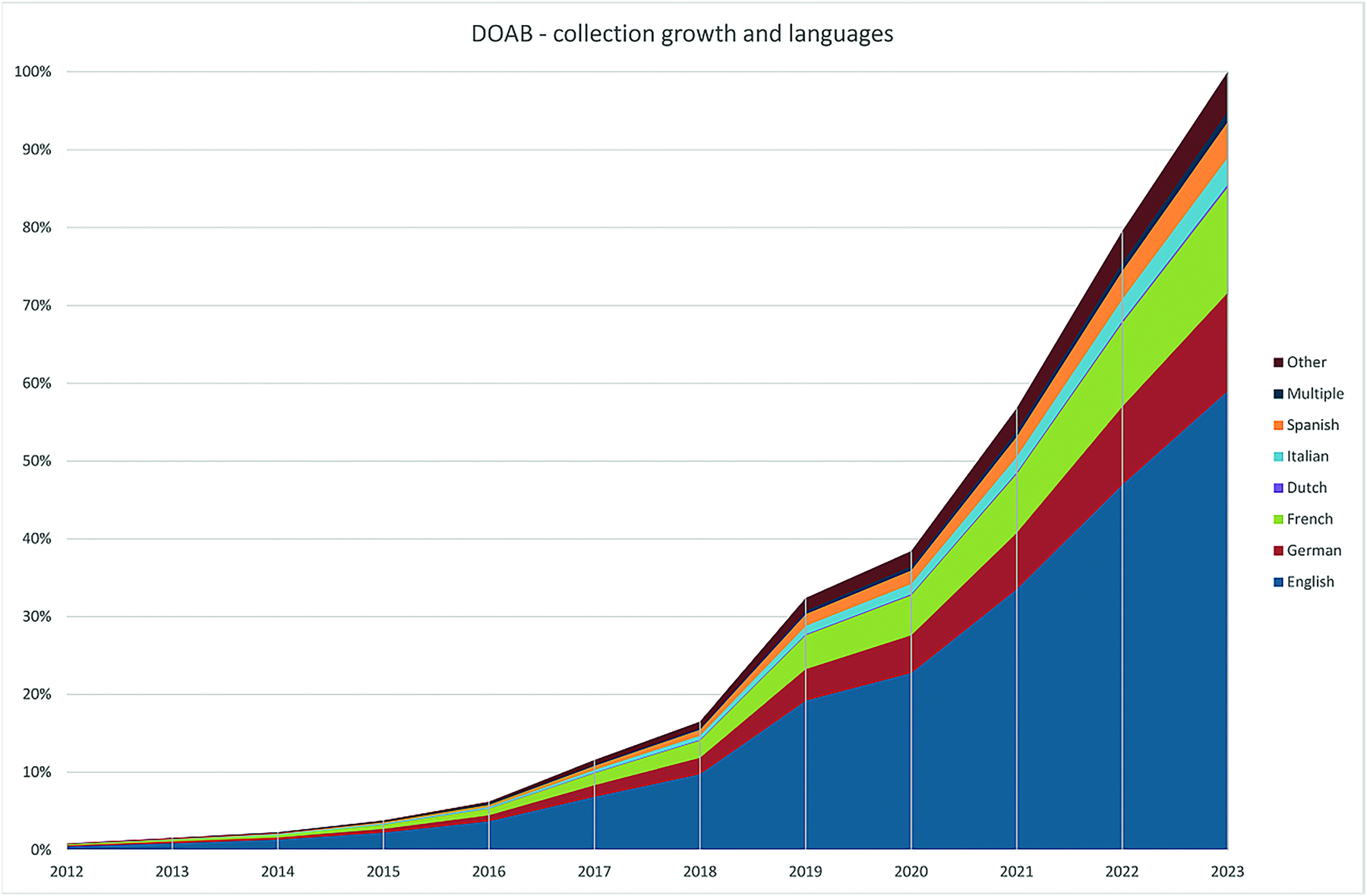

Compared to OAPEN, DOAB has a larger variety of languages. While English is also dominant in absolute numbers, DOAB contains a larger share of books in other languages (see Figure 3). Here, French titles outnumber the books in German, and there is a significant number of titles in Spanish alongside the Italian publications. The percentage of titles in languages other than English is higher in DOAB than in the OAPEN collection: 41% versus 37%.

It is worth noting that two publishers, both of which publish only in English, are responsible for 17% of the DOAB collection. If those 13,746 titles were excluded, the share of non-English titles would rise to 50%. At the very least, this example illustrates the differences in scale between book publishers and how these affect bibliodiversity.
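The effect of excluding the two publishers can be checked with simple arithmetic. Because both publishers are described as English-only, removing their titles shrinks the collection but leaves the number of non-English titles unchanged, so a non-English share p becomes p / (1 − q) when a fraction q of the collection is removed. A minimal sketch using the rounded 41% and 17% figures from the text (the function name is ours, for illustration):

```python
def non_english_share_after_exclusion(non_english_share, excluded_share):
    """Share of non-English titles after removing a block of English-only titles.

    non_english_share: fraction of the whole collection that is non-English.
    excluded_share: fraction of the whole collection removed; every removed
    title is assumed to be in English, so the non-English count is unchanged.
    """
    if not 0 <= excluded_share < 1:
        raise ValueError("excluded_share must be in [0, 1)")
    return non_english_share / (1 - excluded_share)


# Rounded inputs from the text: 41% non-English; two English-only publishers
# account for 17% of DOAB (13,746 titles).
share = non_english_share_after_exclusion(0.41, 0.17)
print(f"{share:.1%}")  # prints 49.4%
```

With these rounded inputs the result is about 49%, close to the roughly 50% reported; the small gap is plausibly due to rounding of the 17% and 41% figures themselves.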

The titles in both platforms are provided by publishers. OAPEN started as a European project (indeed, the “E” in the original acronym stood for “European”), which explains the relatively large percentage of European publishers; in later years, US-based and other publishers followed. Compared to DOAB, however, relatively few publishers from the rest of the world have added their books to the OAPEN Library. One possible barrier to engagement with OAPEN is that hosting is not free, because it incurs operational costs.

In contrast, DOAB does not host titles but acts as a directory, pointing to where open access books are hosted on other platforms, and there are no costs for publishers to be listed. DOAB has also set up cooperation with so-called trusted networks, such as the French-based OpenEdition, the Brazilian SciELO Books, and the US-based Project MUSE and JSTOR. This helps to explain the differences between the two collections.

2.2 Comparing English-language and language other than English (LOTE) books

The previous sections have demonstrated the pressure on the international research community to publish in English. The current research assessment environment contains several biases that favor English, which obviously disadvantages many scholars. In a certain respect, this issue is even more pressing in the realm of books. While articles are the norm in science, technology, and medicine, a large portion of research in the humanities and social sciences is published in books. While the sciences study events that are primarily linked to the laws of nature, humanities and social sciences work on issues that are highly specific to certain cultures and languages. A lack of bibliodiversity is even more impactful here.

If we decide to eschew the current mechanisms for assessing the value or quality of work, the question is what to replace them with. Publishing open access is a way to maximize the impact of publications by removing barriers to readership, and the OAPEN Library and DOAB were launched to support this process for books. When books are openly available, a widely used evaluation criterion is the number of downloads. Here we see the same disparity between English and other languages: on average, books in English are downloaded more than books in other languages. This can be observed in the OAPEN Library. In 2011, English-language titles received approximately 150% of the average number of downloads per title across the complete OAPEN Library. The situation had not changed significantly more than a decade later: in 2022, the median number of downloads of English-language titles was, in most instances, much higher than that of titles in other languages (Snijder 2013, 2023).
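The comparisons above rely on two different summaries: a per-language mean expressed relative to the collection-wide average downloads per title, and a per-language median. The sketch below computes both, assuming per-title download counts for one year are available as (language, downloads) pairs; the function name and the example numbers are hypothetical and are not OAPEN usage data.

```python
from collections import defaultdict
from statistics import mean, median


def download_summary(titles):
    """Summarize downloads per language for one year.

    titles: iterable of (language, downloads) pairs, one per title.
    Returns {language: (median downloads, mean downloads as a percentage of
    the mean downloads per title across the whole collection)}.
    """
    titles = list(titles)
    overall_mean = mean(downloads for _, downloads in titles)
    by_language = defaultdict(list)
    for language, downloads in titles:
        by_language[language].append(downloads)
    return {
        language: (median(values), 100 * mean(values) / overall_mean)
        for language, values in by_language.items()
    }


# Made-up download counts for illustration only; not OAPEN usage data.
example = [("English", 300), ("English", 100), ("German", 50), ("Dutch", 30)]
for language, (med, pct_of_mean) in download_summary(example).items():
    print(f"{language}: median {med}, {pct_of_mean:.0f}% of the overall mean")
```

The median is less sensitive than the mean to a handful of very heavily downloaded titles, which is one reason the two measures can tell somewhat different stories about the same collection.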

In other words, if the number of downloads is used as a yardstick, books in languages other than English are still at a disadvantage. Additionally, as mentioned earlier, download data can differ significantly depending on the tools used. An example is the difference between the usage data reported by Google Analytics and the COUNTER-compliant numbers provided by IRUS-UK (Snijder 2021). Other types of measurement also have drawbacks. We could quantify international reach by measuring how many countries have been reached; however, this runs counter to the notion of local focus. Another option is to look at qualitative aspects, but this brings its own problems. While quantitative measurement is prone to bias (including in the choice of what is measured), it can at least be “audited” if the level of openness is sufficient. How could this be done for qualitative assessments?

2.3 Is OAPEN unwittingly part of the problem?

Assessment of non-English documents—whether citations or downloads—is always more fraught than assessment of English-language titles. The OAPEN Library and DOAB do not exist in a vacuum but are functioning in an existing power structure which is obviously biased towards the Global North. Platforms such as the OAPEN Library and DOAB are part of a global internet infrastructure that is not evenly distributed: the ease of downloading books and chapters differs strongly around the world. In other words, for readers in parts of the world with a well-developed internet infrastructure, getting access to online resources is easy. If the internet infrastructure is not optimal, retrieving open access materials—which by definition are distributed online—is more challenging. This is illustrated in Figure 4, which shows the difference in internet connection speeds across countries.

Currently, the focus on English-language publications is part of the environment in which we work. However, just as there is a growing consensus that change is necessary in the way research is assessed, the percentage of open access books in non-English languages in DOAB is increasing.

The 2023 UNESCO report on global open science outlook argues that the “transformation from the conventionally ‘closed’ to open science requires a profound shift in the way science is produced, accessed, governed and used” (UNESCO 2023a, 18). The OAPEN Library and DOAB as “open science infrastructure” are represented in UNESCO’s mapping of requirements to move from “closed” conventional science to open science. The collections fit the description towards the open end of the table: “Platforms permit usership for all. Digital architectures begin to facilitate use in different languages and accessibility needs” (UNESCO 2023a, 18). That said, there is still more work to be done.

3 Conclusion

Assessment in academia takes place at several levels. For instance, global university rankings, which claim to be based on objective criteria, are biased towards institutions in the United States and the United Kingdom and include a very low proportion of universities in Africa, Asia, Latin America, and the Caribbean. The impact measurement of journals is heavily dependent on the JIF. Apart from the JIF's internal issues, the selection of journals is not balanced: the majority of journals are in English and are from North America and Europe, with many areas of the world underrepresented in terms of coverage. The underlying databases (Clarivate’s Web of Science and Elsevier’s Scopus) that provide data for both the university rankings and the JIF are biased in the same way. Impact measurement of books is less dependent on citation-based measures such as the JIF, but when citations are used, the focus lies on English-language titles. The power imbalances are reinforced by the underlying infrastructure.

All this leads to a pattern of exclusion. Universities, articles, and books that do not conform to the Global North paradigm and perspectives are at a disadvantage. This pattern is also visible in the open access environment, of which the OAPEN Library and DOAB are a part. Obviously, this is not an ideal situation. Many researchers are marginalized, which hampers the development of knowledge in large parts of the world. It also disadvantages many readers for whom English is a barrier.

This is not something that will change overnight. Despite the criticism of the status quo, there is no indication that we are already entering a new age of global equality in the creation and consumption of knowledge. Against this background, it is encouraging that DOAB, which could be seen as the focal point for open access peer-reviewed books, already lists a relatively large number of titles in languages other than English. In the coming years, DOAB will focus on adding publications from Asia and Africa. Our hope is that this will help in the creation of a truly global scholarly communication ecosystem.

Notes

- The quotation “Whoever controls the media, controls the mind” is attributed to Jim Morrison of the Doors: https://quoteinvestigator.com/2022/08/17/media-mind/.

- OAPEN Library metadata: https://oapen.org/librarians/15635975-metadata; and DOAB metadata: https://www.doabooks.org/en/librarians/metadata-harvesting-and-content-dissemination.

- All metadata fields used for describing titles can be found at https://oapen.fra1.digitaloceanspaces.com/5f9bf2c50f8c472194c4f351fe4f448f.xlsx.

References

Acfas. 2021. “Portrait et défis de la recherche en français en contexte minoritaire au Canada.” https://www.acfas.ca/sites/default/files/documents_utiles/rapport_francophonie_final.pdf.

Allen, Ryan M., and Tomoko Takahashi. 2022. “Top Law Schools’ Rejection of Ranking Should Inspire Others.” University World News, December 17, 2022. https://www.universityworldnews.com/post.php?story=20221214075821995.

Altbach, Philip G. 2012. “The Globalization of College and University Rankings.” Change: The Magazine of Higher Learning 44 (1): 26–31. https://doi.org/10.1080/00091383.2012.636001.

Amano, Tatsuya, Juan P. González-Varo, and William J. Sutherland. 2016. “Languages Are Still a Major Barrier to Global Science.” PLoS Biology 14 (12): e2000933. https://doi.org/10.1371/journal.pbio.2000933.

Amin, Mayur, and Michael A. Mabe. 2003. “Impact Factors: Use and Abuse.” MEDICINA (Buenos Aires) 63 (4): 347–54.

Arao, Luiza Hiromi, Maria José Veloso da Costa Santos, and Vânia Lisbôa Silveira Guedes. 2015. “The Half-Life and Obsolescence of the Literature Science Area: A Contribution to the Understanding the Chronology of Citations in Academic Activity.” Qualitative and Quantitative Methods in Libraries 4 (3): 603–10.

Australian Government. 2023. “Clarivate Chosen as Citation Provider for ERA 2023.” Australian Research Council. https://www.arc.gov.au/news-publications/media/network-messages/clarivate-chosen-citation-provider-era-2023-0.

Baccini, Alberto, and Eugenio Petrovich. 2023. “A Global Exploratory Comparison of Country Self-Citations 1996–2019.” PLoS ONE 18 (12): e0294669. https://doi.org/10.1371/journal.pone.0294669.

Bardiau, Marjorie, and Christophe Dony. 2024. “Measuring Back: Bibliodiversity and the Journal Impact Factor Brand, a Case Study of IF-Journals Included in the 2021 Journal Citations Report.” Insights 37. https://doi.org/10.1629/uksg.633.

Beiter, Klaus D. 2023. “Access to Scholarly Publications in the Global North and the Global South—Copyright and the Need for a Paradigm Shift under the Right to Science.” Frontiers in Sociology 8. https://www.frontiersin.org/articles/10.3389/fsoc.2023.1277292.

Berger, Monica. 2021. “Bibliodiversity at the Centre: Decolonizing Open Access.” Development and Change 52 (2): 383–404. https://doi.org/10.1111/dech.12634.

Biagioli, Mario, and Alexandra Lippman, eds. 2020. Gaming the Metrics: Misconduct and Manipulation in Academic Research. Cambridge, MA: MIT Press. https://doi.org/10.7551/mitpress/11087.001.0001.

Bilder, Geoffrey, J. Lin, and Cameron Neylon. 2020. “The Principles of Open Scholarly Infrastructure (v1.1).” The Principles of Open Scholarly Infrastructure. https://doi.org/10.24343/C34W2H.

Bohannon, John. 2016. “Hate Journal Impact Factors? New Study Gives You One More Reason.” Science, July 6, 2016. https://doi.org/10.1126/science.aag0643.

Bol, Juliana A., Ashley Sheffel, Nukhba Zia, and Ankita Meghani. 2023. “How to Address the Geographical Bias in Academic Publishing.” BMJ Global Health 8 (12): e013111. https://doi.org/10.1136/bmjgh-2023-013111.

Bollen, Johan, Herbert Van de Sompel, Joan A. Smith, and Rick Luce. 2005. “Toward Alternative Metrics of Journal Impact: A Comparison of Download and Citation Data.” Information Processing and Management 41 (6): 1419–40. https://doi.org/10.1016/j.ipm.2005.03.024.

Bridgestock, Laura. 2021. “World University Ranking Methodologies Compared.” Top Universities, updated April 19, 2021. https://www.topuniversities.com/university-rankings-articles/world-university-rankings/world-university-ranking-methodologies-compared.

Casas Niño de Rivera, Alejandra, Marco Tullney, Marc Bria, Famira Racy, and John Willinsky. 2024. “By Design, PKP Is Not for Sale.” Public Knowledge Project, March 14, 2024. https://pkp.sfu.ca/2024/03/14/pkp-not-for-sale/.

Cassidy, Caitlin. 2023. “Australia’s Research Sector Chases Rankings over Quality and Is ‘Not Fit for Purpose,’ Chief Scientist Says.” The Guardian, November 14, 2023. https://www.theguardian.com/australia-news/2023/nov/15/australia-research-sector-chases-rankings-not-fit-for-purpose-dr-cathy-foley.

Centre for Science and Technology Studies. 2024. “CWTS Leiden Ranking Open Edition.” https://open.leidenranking.com.

CERN Open Science. n.d. “Open Infrastructure.” Accessed January 14, 2024. https://openscience.cern/infrastructure.

Chan, Leslie. 2019. “Platform Capitalism and the Governance of Knowledge Infrastructure.” 2019 Digital Initiatives Symposium, University of San Diego, April 30, 2019. https://digital.sandiego.edu/symposium/2019/2019/9.

Chan, Leslie, Darius Cuplinskas, Michael Eisen, Fred Friend, Yana Genova, Jean-Claude Guédon, Melissa Hagemann, et al. 2002. “Budapest Open Access Initiative.” Budapest Open Access Initiative, February 14, 2002. https://www.budapestopenaccessinitiative.org/read/.

Chinchilla-Rodríguez, Zaida, Sandra Miguel, and Félix de Moya-Anegón. 2015. “What Factors Affect the Visibility of Argentinean Publications in Humanities and Social Sciences in Scopus? Some Evidence beyond the Geographic Realm of Research.” Scientometrics 102:789–810. https://doi.org/10.1007/s11192-014-1414-4.

Chtena, Natascha, Juan Pablo Alperin, Esteban Morales, Alice Fleerackers, Isabelle Dorsch, Stephen Pinfield, and Marc-André Simard. 2023. “The Neglect of Equity and Inclusion in Open Science Policies of Europe and the Americas.” SciELO Preprints. https://doi.org/10.1590/SciELOPreprints.7366.

Clarivate. 2023. Journal Citation Reports. https://clarivate.com/products/scientific-and-academic-research/research-analytics-evaluation-and-management-solutions/journal-citation-reports/.

Clarivate. n.d.-a. Book Citation Index. Accessed January 15, 2024. https://clarivate.com/products/scientific-and-academic-research/research-discovery-and-workflow-solutions/webofscience-platform/web-of-science-core-collection/book-citation-index/.https://clarivate.com/products/scientific-and-academic-research/research-discovery-and-workflow-solutions/webofscience-platform/web-of-science-core-collection/book-citation-index/

Clarivate. n.d.-b. “Web of Science Book Evaluation Process and Selection Criteria.” Clarivate (blog). Accessed April 26, 2024. https://clarivate.com/products/scientific-and-academic-research/research-discovery-and-workflow-solutions/webofscience-platform/web-of-science-core-collection/editorial-selection-process/book-evaluation-process-and-selection-criteria/.https://clarivate.com/products/scientific-and-academic-research/research-discovery-and-workflow-solutions/webofscience-platform/web-of-science-core-collection/editorial-selection-process/book-evaluation-process-and-selection-criteria/

Clarivate. n.d.-c. “Web of Science Journal Evaluation Process and Selection Criteria.” Clarivate (blog). Accessed May 15, 2024. https://clarivate.com/products/scientific-and-academic-research/research-discovery-and-workflow-solutions/webofscience-platform/web-of-science-core-collection/editorial-selection-process/editorial-selection-process/.https://clarivate.com/products/scientific-and-academic-research/research-discovery-and-workflow-solutions/webofscience-platform/web-of-science-core-collection/editorial-selection-process/editorial-selection-process/

cOAlitionS. 2018. “Plan S: Making Full and Immediate Open Access a Reality.” https://www.coalition-s.org.https://www.coalition-s.org

CoARA. 2022. “The Agreement on Reforming Research Assessment.” CoARA, July 20, 2022. https://coara.eu/agreement/the-agreement-full-text.https://coara.eu/agreement/the-agreement-full-text

Craddock, Alex. 2022. “What’s Actually Behind University Rankings?” Insider Guides (blog), October 24, 2022. https://insiderguides.com.au/university-rankings-explained/.https://insiderguides.com.au/university-rankings-explained/

Curry, Stephen, Elizabeth Gadd, and James Wilsdon. 2022. Harnessing the Metric Tide: Indicators, Infrastructures & Priorities for UK Responsible Research Assessment. Research on Research Institute. https://doi.org/10.6084/m9.figshare.21701624.v2.

Dagienė, Eleonora. 2023. “Prestige of Scholarly Book Publishers—an Investigation into Criteria, Processes, and Practices across Countries.” Research Evaluation 32 (2): 356–70. https://doi.org/10.1093/reseval/rvac044.

Di Bitetti, Mario S., and Julián A. Ferreras. 2017. “Publish (in English) or Perish: The Effect on Citation Rate of Using Languages Other than English in Scientific Publications.” Ambio 46 (1): 121–27. https://doi.org/10.1007/s13280-016-0820-7.

DORA. 2014. “San Francisco Declaration on Research Assessment: Putting Science into the Assessment of Research.” American Society for Cell Biology. https://www.ascb.org/files/SFDeclarationFINAL.pdf.

DORA. n.d. “About DORA.” Accessed May 15, 2024. https://sfdora.org/about-dora/.

Elsevier. n.d.-a. “Scopus Content.” Accessed January 15, 2024. https://www.elsevier.com/en-au/products/scopus/content.

Elsevier. n.d.-b. “Scopus Content Policy and Selection.” Accessed May 15, 2024. https://www.elsevier.com/en-au/products/scopus/content/content-policy-and-selection.

Eriksson, Linda. 2023. “‘Gruelling to Read’: Swedish University Students’ Perceptions of and Attitudes towards Academic Reading in English.” Journal of English for Academic Purposes 64 (July): 101265. https://doi.org/10.1016/j.jeap.2023.101265.

Flowerdew, John, and Yongyan Li. 2009. “English or Chinese? The Trade-Off between Local and International Publication among Chinese Academics in the Humanities and Social Sciences.” Journal of Second Language Writing 18 (1): 1–16. https://doi.org/10.1016/j.jslw.2008.09.005.

German Research Foundation. 2024. “Boosting Diamond Open Access.” Information for Researchers, no. 2. https://www.dfg.de/en/news/news-topics/announcements-proposals/2024/ifr-24-02.

Giménez Toledo, Elea, Emanuel Kulczycki, Janne Pölönen, and Gunnar Sivertsen. 2019. “Bibliodiversity—What It Is and Why It Is Essential to Creating Situated Knowledge.” Impact of Social Sciences (blog), December 5, 2019. https://blogs.lse.ac.uk/impactofsocialsciences/2019/12/05/bibliodiversity-what-it-is-and-why-it-is-essential-to-creating-situated-knowledge/.

Global Sustainability Coalition for Open Science Services. n.d. Accessed May 15, 2024. https://scoss.org.

Gordin, Michael D. 2015. Scientific Babel: How Science Was Done before and after Global English. Chicago: University of Chicago Press.

Hagemann, Melissa. 2023. “Latin America Exemplifies What Can Be Accomplished When Community Is Prioritized over Commercialization.” International Open Access Week (blog), October 2023. https://www.openaccessweek.org/blog/2023/latin-america-exemplifies-what-can-be-accomplished-when-community-is-prioritized-over-commercialization.

Halevi, Gali, Barnaby Nicolas, and Judit Bar-Ilan. 2016. “The Complexity of Measuring the Impact of Books.” Publishing Research Quarterly 32 (3): 187–200. https://doi.org/10.1007/s12109-016-9464-5.

Haug, Sebastian. 2021. “What or Where Is the ‘Global South’? A Social Science Perspective.” Impact of Social Sciences (blog), September 28, 2021. https://blogs.lse.ac.uk/impactofsocialsciences/2021/09/28/what-or-where-is-the-global-south-a-social-science-perspective/.

Hecht, Frederick, Barbara K. Hecht, and Avery A. Sanberg. 1998. “The Journal ‘Impact Factor’—a Misnamed, Misleading, Misused Measure.” Cancer Genetics and Cytogenetics 104 (2): 77–81. https://doi.org/10.1016/S0165-4608(97)00459-7.

Hicks, Diana, Paul Wouters, Ludo Waltman, Sarah de Rijcke, and Ismael Rafols. 2015. “Bibliometrics: The Leiden Manifesto for Research Metrics.” Nature 520 (7548): 429–31. https://doi.org/10.1038/520429a.

Higher Education Funding Council for England. 2018. “Clarivate Analytics Will Provide Citation Data during REF 2021.” https://archive.ref.ac.uk/guidance-and-criteria-on-submissions/news/clarivate-analytics-will-provide-citation-data-during-ref-2021/.

Hill, Kim Quaile, and Patricia A. Hurley. 2022. “Web of Science Book Citation Indices and the Representation of Book and Journal Article Citation in Disciplines with Notable Book Scholarship.” Journal of Electronic Publishing 25 (2). https://doi.org/10.3998/jep.3334.

Horton, Richard. 2023. “Offline: The Silencing of the South.” The Lancet 401 (10380): 889. https://doi.org/10.1016/S0140-6736(23)00561-5.

Huang, Chun-Kai (Karl), Cameron Neylon, Chloe Brookes-Kenworthy, Richard Hosking, Lucy Montgomery, Katie Wilson, and Alkim Ozaygen. 2020. “Comparison of Bibliographic Data Sources: Implications for the Robustness of University Rankings.” Quantitative Science Studies 1 (2): 445–78. https://doi.org/10.1162/qss_a_00031.

Huang, Chun-Kai, Cameron Neylon, Lucy Montgomery, Richard Hosking, James P. Diprose, Rebecca N. Handcock, and Katie Wilson. 2024. “Open Access Research Outputs Receive More Diverse Citations.” Scientometrics 129:824–45. https://doi.org/10.1007/s11192-023-04894-0.

INORMS. 2022. “More Than Our Rank.” July 12, 2022. https://inorms.net/more-than-our-rank/.

Invest in Open Infrastructure. 2022. “About.” August 5, 2022. https://investinopen.org/about/.

Johnson, Rob. 2024. “Not-for-Profit Scholarly Publishing Might Not Be Cheaper—and That’s OK.” Impact of Social Sciences (blog), January 9, 2024. https://blogs.lse.ac.uk/impactofsocialsciences/2024/01/09/not-for-profit-scholarly-publishing-might-not-be-cheaper-and-thats-ok/.

Karlstrøm, Henrik, Dag W. Aksnes, and Fredrik N. Piro. 2024. “Benefits of Open Access to Researchers from Lower-Income Countries: A Global Analysis of Reference Patterns in 1980–2020.” Journal of Information Science. Published ahead of print, April 20, 2024. https://doi.org/10.1177/01655515241245952.

Khanna, Saurabh, Jon Ball, Juan Pablo Alperin, and John Willinsky. 2022. “Recalibrating the Scope of Scholarly Publishing: A Modest Step in a Vast Decolonization Process.” Quantitative Science Studies 3 (4): 912–30. https://doi.org/10.1162/qss_a_00228.

Lamdan, Sarah. 2022. Data Cartels: The Companies That Control and Monopolize Our Information. Stanford, CA: Stanford University Press.

Larivière, Vincent, Véronique Kiermer, Catriona J. MacCallum, Marcia McNutt, Mark Patterson, Bernd Pulverer, Sowmya Swaminathan, Stuart Taylor, and Stephen Curry. 2016. “A Simple Proposal for the Publication of Journal Citation Distributions.” bioRxiv. https://doi.org/10.1101/062109.

Leão, Delfim. 2021. “Multilingualism in Scholarly Communication: Main Findings and Future Challenges.” Septentrio Conference Series, 16th Munin Conference on Scholarly Publishing, no. 4 (November). https://doi.org/10.7557/5.6212.

Lewis, David W., Lori Goetsch, Diane Graves, and Mike Roy. 2018. “Funding Community Controlled Open Infrastructure for Scholarly Communication: The 2.5% Commitment Initiative.” College & Research Libraries News 79 (3). https://doi.org/10.5860/crln.79.3.133.

Linmans, A. J. M. 2010. “Why with Bibliometrics the Humanities Does Not Need to Be the Weakest Link.” Scientometrics 83:337–54. https://doi.org/10.1007/s11192-009-0088-9.

Lobet, Delphine, and Vincent Larivière. 2022. Study on the ASPP and the Situation of Scholarly Books in Canada. Ottawa, Canada: Federation for the Humanities and Social Sciences. https://www.federationhss.ca/sites/default/files/2023-02/Study-on-the-ASPP-and-Situation-of-Scholarly-Books-in-Canada.pdf.

Lor, Peter. 2023. “Scholarly Publishing and Peer Review in the Global South: The Role of the Reviewer.” JLIS.it 14 (1): 10–29. https://doi.org/10.36253/jlis.it-512.

Ma, Lai. 2023. “The Platformisation of Scholarly Information and How to Fight It.” LIBER Quarterly: The Journal of the Association of European Research Libraries 33 (1): 1–20. https://doi.org/10.53377/lq.13561.

Maleki, Ashraf. 2022. “Why Does Library Holding Format Really Matter for Book Impact Assessment? Modelling the Relationship between Citations and Altmetrics with Print and Electronic Holdings.” Scientometrics 127:1129–60. https://doi.org/10.1007/s11192-021-04239-9.

Márquez, Melissa C., and Ana Maria Porras. 2020. “Science Communication in Multiple Languages Is Critical to Its Effectiveness.” Frontiers in Communication 5. https://www.frontiersin.org/articles/10.3389/fcomm.2020.00031.

McElroy, Kelly, and Laurie M. Bridges. 2018. “Multilingual Access: Language Hegemony and the Need for Discoverability in Multiple Languages.” College and Research Libraries News 79 (11): 617–20. https://doi.org/10.5860/crln.79.11.617.

McIntosh, Alison, and Christopher Tancock. 2023. “The Lasting Language of Publication?” Elsevier Connect, March 9, 2023. https://www.elsevier.com/connect/the-lasting-language-of-publication.

McKiernan, Erin C., Lesley A. Schimanski, Carol Muñoz Nieves, Lisa Matthias, Meredith T. Niles, and Juan P. Alperin. 2019. “Use of the Journal Impact Factor in Academic Review, Promotion, and Tenure Evaluations.” eLife 8 (July): e47338. https://doi.org/10.7554/eLife.47338.

Meneghini, Rogerio, and Abel L. Packer. 2007. “Is There Science beyond English? Initiatives to Increase the Quality and Visibility of Non-English Publications Might Help to Break Down Language Barriers in Scientific Communication.” EMBO Reports 8 (2): 112–16. https://doi.org/10.1038/sj.embor.7400906.

Nassiri-Ansari, Tiffany, and David McCoy. 2023. “World-Class Universities? Interrogating the Biases and Coloniality of Global University Rankings.” United Nations University–International Institute for Global Health briefing paper, February 2023. https://doi.org/10.37941/PB/2023/1.

Neylon, Cameron, Alkim Ozaygen, Lucy Montgomery, Chun-Kai (Karl) Huang, Ros Pyne, Mithu Lucraft, and Christina Emery. 2021. “More Readers in More Places: The Benefits of Open Access for Scholarly Books.” Insights 34. https://doi.org/10.1629/uksg.558.

OAPEN. n.d. “About Us.” Accessed March 29, 2024. https://www.oapen.org/oapen/1891940-organisation.

Ogg, Lesley. 2010. “Launch of the OAPEN Library at the Frankfurt Book Fair.” Association of Learned and Professional Society Publishers (blog), September 20, 2010. https://blog.alpsp.org/2010/09/launch-of-oapen-library-at-frankfurt.html.

Okamoto, Kazumi. 2015. “What Is Hegemonic Science? Power in Scientific Activities in Social Sciences in International Contexts.” In Theories and Strategies against Hegemonic Social Sciences, edited by Michael Kuhn and Shujiro Yazawa, 55–73. Stuttgart: ibidem-Verlag. https://core.ac.uk/display/230558208.

Open Book Collective. n.d. Accessed January 14, 2024. https://openbookcollective.org.

Opthof, Tobias. 1997. “Sense and Nonsense about the Impact Factor.” Cardiovascular Research 33 (1): 1–7. https://doi.org/10.1016/S0008-6363(96)00215-5.

Our World in Data. n.d. “Landline Internet Subscriptions per 100 People, 1998 to 2001.” Accessed November 22, 2023. https://ourworldindata.org/grapher/broadband-penetration-by-country.

Paasi, Anssi. 2005. “Globalisation, Academic Capitalism, and the Uneven Geographies of International Journal Publishing Spaces.” Environment and Planning A: Economy and Space 37 (5): 769–89. https://doi.org/10.1068/a3769.

Pérez-Nebra, Amalia Raquel. 2023. “Experiences of Scholarly Marginalization.” Upstream, August 1, 2023. https://doi.org/10.54900/p60g8-kck64.

PLoS Medicine Editors. 2006. “The Impact Factor Game.” PLoS Medicine 3 (6): e291. https://doi.org/10.1371/journal.pmed.0030291.

Plum Analytics. 2017. “Elsevier Acquires Leading ‘Altmetrics’ Provider Plum Analytics.” Press release, February 2, 2017. https://plumanalytics.com/press/elsevier-acquires-leading-altmetrics-provider-plum-analytics/.

Pooley, Jeff. 2022. “Surveillance Publishing.” Journal of Electronic Publishing 25 (1). https://doi.org/10.3998/jep.1874.

Posada, Alejandro, and George Chen. 2018. “Inequality in Knowledge Production: The Integration of Academic Infrastructure by Big Publishers.” In ELPUB 2018, edited by Leslie Chan and Pierre Mounier. Toronto, Canada: Association Francophone d’Interaction Homme-Machine (AFIHM). https://doi.org/10.4000/proceedings.elpub.2018.30.