1. Introduction

In this note we continue an old discussion of some familiar results about the asymptotics of Bayesian updating (aka conditionalization¹) using countably additive² credences. One such result (due to Doob 1953, with details reported in Section 2) asserts that, for each hypothesis of interest $H$, with the exception of a probability 0 “null” set of data sequences, the Bayesian agent’s posterior probabilities converge to the truth value of $H$. Almost surely, the posterior credences converge to the value 1 if $H$ is true, and to 0 if $H$ is false. So, with probability 1, this Bayesian agent’s asymptotic conditional credences are veridical: they track the truth of each hypothesis under investigation. This feature of Bayesian learning is often alluded to in a justification of Bayesian methodology, e.g., Lindley (2006: ch. 11) and Savage (1972: §3.6): Bayesian learning affords sound asymptotics for scientific inference.

In Section 3, we explore the asymptotic behavior of conditional probabilities when these desirable asymptotics fail and credences are not veridical. We identify and illustrate five varieties of such failures, in increasing severity. An extreme variety occurs when conditional probabilities approach certainty for a false hypothesis. We call these extreme cases episodes of deceptive credences, as the agent is not able to discriminate between becoming certain of a truth and becoming certain of a falsehood.³ Result 1 establishes a sufficient condition for credences to be deceptive. In Appendix A, we discuss the four other, less extreme varieties of non-veridical conditional probabilities.

In Section 4 we apply our findings to a recent exchange prompted by Belot’s (2013) charge that familiar results about the asymptotics of Bayesian updating display orgulity: an epistemic immodesty about the power of Bayesian reasoning. In rebuttal, Elga (2016) argues that orgulity is avoided with some merely finitely additive credences for which the conclusion of Doob’s theorem is false. Nielsen and Stewart (2019) offer a synthesis of these two perspectives in which some finitely additive credences display what they call, as a technical term, “reasonable modesty,” which avoids the specifics of Belot’s objection. Our analysis in Section 4 shows that these applications of finite additivity support deceptive credences. We argue that it is at least problematic to call deceptive credences “modest” in the ordinary sense of the word when deception has positive probability.

2. Doob’s (1953) Strong Law for Asymptotic Bayesian Certainty

For ease of exposition, we use a continuing example throughout this note. Consider a Borel space of possible events based on the set of denumerable sequences of binary outcomes from flips of a coin of unknown bias using a mechanism of unknown dynamics. The sample space consists of denumerable sequences of 0s (tails) and 1s (heads). The nested data available to the Bayesian investigator are the growing initial histories of length $n$, $h_n$ ($n = 1, 2, \ldots$), arising from one denumerable sequence of flips, which corresponds to the unknown state. The class of hypotheses of interest comprises the elements of the Borel space generated by such histories.

For example, an hypothesis of interest might be that, after some finite initial history, the observed relative frequency of 1s remains greater than 0.5, regardless of whether or not there is a well-defined limit of relative frequency for heads. Doob’s result, which we review below, asserts that for the Bayesian agent with countably additive credences $P$ over this Borel space, with the exception of a $P$-null set of possible sequences, her/his conditional probabilities, $P(H \mid h_n)$, converge to the truth value of $H$.

Consider the following strong-law (countably additive) version of the Bayesian asymptotic approach to certainty, which applies to the continuing example of denumerable sequences of 0s and 1s.⁴ The assumptions for the result that we highlight below involve the measurable space, the hypothesis of interest, and the learning rule.

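The measurable space $(\Omega, \mathcal{B})$. Let $\Omega_1, \Omega_2, \ldots$ be a denumerable sequence of sets, each equipped with an associated, atomic $\sigma$-field $\mathcal{B}_i$, where if $\omega_i \in \Omega_i$ then $\{\omega_i\} \in \mathcal{B}_i$. That is, the elements of $\Omega_i$ are the atoms of $\mathcal{B}_i$. $\Omega_i$ is the state-space and $\mathcal{B}_i$ is the set of the measurable events for the $i$th experiment. Form the infinite Cartesian product $\Omega = \Omega_1 \times \Omega_2 \times \cdots$ of all sequences $\omega = (\omega_1, \omega_2, \ldots)$, where $\omega_i \in \Omega_i$. The $\sigma$-field $\mathcal{B}$ is generated by the measurable rectangles from $\Omega$: the sets of the form $A_1 \times A_2 \times \cdots$ where $A_i \in \mathcal{B}_i$ and $A_i = \Omega_i$ for all but finitely many values of $i$. $\mathcal{B}$ is the smallest $\sigma$-field containing each of the individual $\mathcal{B}_i$ (identified with the rectangles that restrict only the $i$th coordinate). As $\mathcal{B}_i$ is atomic for each $i$, also $\mathcal{B}$ is atomic with atoms the sequences $\{\omega\}$.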

Each hypothesis of interest $H$ is an element of $\mathcal{B}$. That is, in what follows, the result about asymptotic certainty applies to an hypothesis $H$ provided that it is “identifiable” with respect to the $\sigma$-field, $\mathcal{B}$, generated by finite sequences of observations.⁵ These finite sequences constitute the observed data.

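We are concerned, in particular, with tracking the nested histories of the initial experimental outcomes:

$$h_1(\omega),\ h_2(\omega),\ \ldots,\ h_n(\omega),\ \ldots$$

That is, for $\omega = (\omega_1, \omega_2, \ldots) \in \Omega$, let $h_n(\omega) = (\omega_1, \ldots, \omega_n)$ be the first $n$ terms of $\omega$. We also write $h_n$ for the event (the cylinder set) of all sequences that begin with this history.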

The probability assumptions. Let $P$ be a countably additive probability over the measurable space $(\Omega, \mathcal{B})$, and assume there exist well-defined conditional probability distributions $P(\cdot \mid h_n)$ over hypotheses $H \in \mathcal{B}$, given the histories $h_n$, $n = 1, 2, \ldots$.

The learning rule for the Bayesian agent: Consider an agent whose initial (“prior”) joint credences are represented by the measure space $(\Omega, \mathcal{B}, P)$. Let $P_n$ be this agent’s (“posterior”) credences over $\mathcal{B}$ having learned the history $h_n$.

Bayes’ Rule for updating credences requires that $P_n(\cdot) = P(\cdot \mid h_n)$.
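Bayes’ Rule can be checked by hand in a simple case. The sketch below assumes, for illustration only, an exchangeable credence $P$ whose de Finetti mixing prior on the Bernoulli parameter $\theta$ is uniform on $[0, 1]$, and a hypothesis of the form $H = \{\theta \le x\}$ (up to a $P$-null set, the event that the limiting relative frequency of 1s is at most $x$). It computes $P(H \mid h_n) = P(H \cap h_n)/P(h_n)$ exactly with rational arithmetic; the function names are hypothetical.

```python
from fractions import Fraction
from math import comb


def integral_0_to_x(x: Fraction, k: int, m: int) -> Fraction:
    """Exact value of the integral of t**k * (1 - t)**m over [0, x].

    Expands (1 - t)**m with the binomial theorem and integrates term by term.
    """
    return sum(
        Fraction(comb(m, j) * (-1) ** j) * x ** (k + j + 1) / (k + j + 1)
        for j in range(m + 1)
    )


def posterior_theta_at_most(x: Fraction, history: list[int]) -> Fraction:
    """P(theta <= x | history) via Bayes' Rule, P(H and h_n) / P(h_n).

    Assumption (illustrative): exchangeable credence with a uniform mixing
    prior on theta, so P(h_n) is the integral of t**k (1-t)**(n-k) over [0, 1].
    """
    n, k = len(history), sum(history)
    joint = integral_0_to_x(x, k, n - k)               # P(H and h_n)
    marginal = integral_0_to_x(Fraction(1), k, n - k)  # P(h_n)
    return joint / marginal


half = Fraction(1, 2)
print(posterior_theta_at_most(half, []))      # prior: 1/2
print(posterior_theta_at_most(half, [1]))     # 1/4
print(posterior_theta_at_most(half, [1, 1]))  # 1/8
```

Under this assumed credence, each observed 1 shifts mass toward larger values of $\theta$, so the probability of $\{\theta \le 1/2\}$ falls from 1/2 to 1/4 to 1/8, each value being the ratio that Bayes’ Rule prescribes.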

The result in question, which is a substitution instance of Doob’s (1953: T.7.4.1), is as follows:

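For $H \in \mathcal{B}$, let $I_H$ be the indicator for $H$: $I_H(\omega) = 1$ if $\omega \in H$ and $I_H(\omega) = 0$ if $\omega \notin H$. The indicator function for $H$ identifies the truth value of $H$.

Asymptotic Bayesian Certainty: For each $H \in \mathcal{B}$,

$$P\Big(\big\{\omega : \lim_{n \to \infty} P(H \mid h_n(\omega)) = I_H(\omega)\big\}\Big) = 1.$$

In words, subject to the conditions above, the agent’s credences satisfy asymptotic certainty about the truth value of the hypothesis $H$. For each measurable hypothesis $H$, and with respect to a set of infinite sequences that has “prior” probability 1, for each $\omega$ in that set her/his sequence of “posterior” opinions about $H$, $P(H \mid h_n(\omega))$, converges to probability 1 or 0 according as $H$ is true or false.

To summarize: For each $\omega$ in this probability-1 set, as $n \to \infty$, the sequence of conditional probabilities, $P(H \mid h_n(\omega))$, asymptotically correctly identifies the truth of $H$ or of $H^c$ by converging to 1 for the true hypothesis in this pair. In this sense, asymptotically, the Bayesian agent learns whether $H$ or $H^c$ obtains.

Definition: Call an element $\omega$ of $\Omega$ a veridical state if $P(H \mid h_n(\omega))$ converges to $I_H(\omega)$.⁶ Write $V_H$ for the set of veridical states; Doob’s result asserts that $P(V_H) = 1$.

In other words, the non-veridical states, $V_H^c$, constitute the failure set for Doob’s result.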
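To see the convergence that Doob’s result guarantees, the following sketch tracks $P(H \mid h_n)$ along one simulated data sequence. It assumes, purely for illustration, an exchangeable credence whose de Finetti mixing prior is uniform on $[0, 1]$, and it takes $H$ to be the tail event that the limiting relative frequency of 1s exceeds 1/2; under such a credence $P(H \mid h_n) = P(\theta > 1/2 \mid h_n)$, the upper tail of a Beta$(k+1, n-k+1)$ posterior when $h_n$ contains $k$ ones. The data are drawn i.i.d. with a hypothetical bias of 0.7, so $H$ is true and the posterior should approach $I_H = 1$. The function names, seed, and bias are our own choices.

```python
import math
import random


def beta_cdf_uniform_prior(x: float, k: int, n: int) -> float:
    """P(theta <= x | k ones in n flips) under a uniform mixing prior on theta.

    The posterior is Beta(k+1, n-k+1); for integer parameters its CDF equals
    P(Binomial(n+1, x) >= k+1), which is summed here in log space.
    """
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    m = n + 1
    log_x, log_1mx = math.log(x), math.log1p(-x)
    log_m_fact = math.lgamma(m + 1)
    total = 0.0
    for j in range(k + 1, m + 1):
        log_pmf = (log_m_fact - math.lgamma(j + 1) - math.lgamma(m - j + 1)
                   + j * log_x + (m - j) * log_1mx)
        total += math.exp(log_pmf)
    return min(total, 1.0)


def posterior_path(flips: list[int], checkpoints: list[int]) -> dict[int, float]:
    """P(H | h_n) for H = {theta > 1/2} at the given history lengths n."""
    return {n: 1.0 - beta_cdf_uniform_prior(0.5, sum(flips[:n]), n) for n in checkpoints}


# Hypothetical data: i.i.d. flips with bias 0.7, so H is true and I_H = 1.
rng = random.Random(2024)
flips = [1 if rng.random() < 0.7 else 0 for _ in range(2000)]
for n, p in posterior_path(flips, [10, 100, 1000, 2000]).items():
    print(n, round(p, 6))
```

The sketch shows only what happens on a typical sequence; Doob’s result is silent about the $P$-null failure set, which is the subject of the next section.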

3. Veridical versus Deceptive States and Their Associated Credences

Next, we examine details of conditional probabilities given elements of the failure set, even when the agent’s credences are countably additive and the other assumptions in Doob’s result obtain. Specifically, consider the countably additive Bayesian agent’s conditional probabilities, $P(H \mid h_n(\omega))$, in sequences of histories that are generated by points $\omega$ in the failure set, $V_H^c$—the complement to the distinguished set $V_H$ of veridical states. It is important, we think, to distinguish different varieties of non-veridical states within the failure set.

At the opposite pole from the veridical states in $V_H$ (states whose conditional probabilities converge to the truth about $H$) are states whose histories create conditional probabilities that converge to certainty about the false hypothesis in the pair $\{H, H^c\}$.

Define $\omega$ as a deceptive state for hypothesis $H$ if $P(H \mid h_n(\omega))$ converges to $1 - I_H(\omega)$.

For deceptive states, the agent’s sequence of posterior probabilities also creates asymptotic certainty. This sense of certainty is introspectively indistinguishable to the investigator from the asymptotic certainty created by veridical states, where asymptotic certainty identifies the truth. Thus, to the extent that veridical states provide a defense of Bayesian learning (the observed histories move the agent’s subjective “prior” for $H$ towards certainty in the truth value of $H$), deceptive states undercut it: they move the agent’s subjective credences towards certainty in a falsehood. For the very reasons that states in $V_H$ underwrite a Bayesian account of learning $H$, deceptive states frustrate such a claim about $H$. Hence, Doob’s result serves a Bayesian’s need provided that the Bayesian agent is satisfied that, with probability 1, the actual state is veridical rather than deceptive with respect to the hypothesis of interest.

When the failure set for an hypothesis $H$ is deceptive, then the investigator’s credences about $H$ converge to 0 or to 1 for all possible data sequences. But this convergence is logically independent of the truth of $H$, since the investigator is unable to distinguish veridical from non-veridical data histories.

Less problematic than being deceptive, but nonetheless still challenging for a Bayesian account of objectivity, is a non-deceptive state $\omega$ where, for each $\epsilon > 0$, infinitely often

$$\big|P(H \mid h_n(\omega)) - \big(1 - I_H(\omega)\big)\big| < \epsilon. \tag{1}$$

Then, with respect to hypothesis $H$, $\omega$ infinitely often induces non-veridical conditional probabilities that mimic those from a deceptive state.

Definition: Call a state $\omega$ that satisfies Equation (1) intermittently deceptive for hypothesis $H$.

Definition: Consider a non-veridical state $\omega$ where, for each $\epsilon > 0$, infinitely often $|P(H \mid h_n(\omega)) - I_H(\omega)| < \epsilon$. Call such a state intermittently veridical for hypothesis $H$.

Within the failure set for an hypothesis, the following partition of non-veridical states appears to us increasingly problematic for a defense of Bayesian methodology, in the sense of a defense that seeks asymptotic credal certainty about the truth value of the hypothesis driven by Bayesian learning. In this list, we prioritize avoiding deception over obtaining veridicality:⁷

(A) states that are intermittently veridical but not intermittently deceptive;

(B) states that are neither intermittently veridical nor intermittently deceptive;

(C) states that are both intermittently veridical and intermittently deceptive;⁸

(D) states that are intermittently deceptive but not intermittently veridical;

(E) states that are deceptive.
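The five categories are defined by asymptotic behavior, so no finite computation can settle which one a state belongs to. Still, a finite-horizon proxy can make the definitions concrete. In the sketch below, a heuristic of our own rather than part of the analysis, “converges” is read as “stays within $\epsilon$ of the target throughout the second half of the run,” and “infinitely often” as “comes within $\epsilon$ of the target at least once in that half”; the threshold `eps` and the choice of the second half are arbitrary assumptions.

```python
from typing import Sequence


def classify_tail(posteriors: Sequence[float], truth: int, eps: float = 0.01) -> str:
    """Heuristic, finite-horizon proxy for the categories of states.

    posteriors: P(H | h_n(omega)) for n = 1..N (a finite run).
    truth:      I_H(omega), i.e. 1 if omega is in H, else 0.
    """
    tail = posteriors[len(posteriors) // 2:]
    false_value = 1 - truth
    if all(abs(p - truth) < eps for p in tail):
        return "veridical"
    if all(abs(p - false_value) < eps for p in tail):
        return "E: deceptive"
    near_truth = any(abs(p - truth) < eps for p in tail)        # proxy: intermittently veridical
    near_false = any(abs(p - false_value) < eps for p in tail)  # proxy: intermittently deceptive
    if near_truth and not near_false:
        return "A: intermittently veridical only"
    if not near_truth and not near_false:
        return "B: neither"
    if near_truth and near_false:
        return "C: both"
    return "D: intermittently deceptive only"


print(classify_tail([0.5, 0.9, 0.999, 0.9999], truth=0))  # certainty about a falsehood
print(classify_tail([0.0, 1.0] * 10, truth=0))            # swings between both extremes
```

The ordering of the checks mirrors the list above: convergence is tested first, and only then are the “infinitely often” proxies combined into categories (A) through (D).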

We find it helpful to illustrate these categories within the continuing example of sequences of binary outcomes. Consider the set of denumerable, binary sequences: $\Omega = \{0, 1\}^{\mathbb{N}}$. That is, in terms of the structural assumptions in Doob’s result, $\Omega_i = \{0, 1\}$; each $\mathcal{B}_i$ is the 4-element algebra $\{\emptyset, \{0\}, \{1\}, \{0, 1\}\}$, for $i = 1, 2, \ldots$; and the inclusive $\sigma$-field $\mathcal{B}$ is the Borel $\sigma$-algebra generated by the product of the $\mathcal{B}_i$.

First, if $H$ is defined by finitely many coordinates of $\omega$ (a finite dimensional rectangular event), then $P(H \mid h_n(\omega))$ equals the indicator function for $H$, $I_H(\omega)$, after only finitely many observations. Then $V_H = \Omega$ and all states are veridical. That is, there is no sequence $\omega$ where the conditional probabilities fail to converge to $I_H(\omega)$. Moreover, this situation obtains regardless of whether $P$ is countably or merely finitely additive, provided solely that $P(\cdot \mid \cdot)$ is a conditional probability that satisfies the following propriety condition: $P(A \mid B) = 1$ whenever $B \subseteq A$.
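For a finite dimensional $H$, the posterior settles on the indicator as soon as the history covers every coordinate that $H$ mentions. The following sketch checks this exactly for an assumed exchangeable credence with a uniform mixing prior, whose one-step predictive probabilities are given by Laplace’s rule of succession, $P(\omega_{n+1} = 1 \mid k \text{ ones in } h_n) = (k+1)/(n+2)$. The hypothesis $H = \{\omega : \omega_1 = 1 \text{ and } \omega_3 = 0\}$ and all function names are hypothetical.

```python
from fractions import Fraction
from itertools import product


def predictive_one(k: int, n: int) -> Fraction:
    """Laplace's rule: P(next flip = 1 | k ones in n flips), uniform mixing prior."""
    return Fraction(k + 1, n + 2)


def prob_continuation(history: tuple[int, ...], cont: tuple[int, ...]) -> Fraction:
    """P(cont follows history), chaining one-step predictive probabilities."""
    k, n, prob = sum(history), len(history), Fraction(1)
    for bit in cont:
        p1 = predictive_one(k, n)
        prob *= p1 if bit == 1 else 1 - p1
        k, n = k + bit, n + 1
    return prob


def posterior_finite_dim(history: tuple[int, ...], in_h, horizon: int) -> Fraction:
    """P(H | history) for an H that depends only on the first `horizon` coordinates."""
    if len(history) >= horizon:
        # The history already decides H, so propriety forces the indicator.
        return Fraction(int(in_h(history[:horizon])))
    free = horizon - len(history)
    return sum(
        (prob_continuation(history, cont)
         for cont in product((0, 1), repeat=free)
         if in_h(history + cont)),
        Fraction(0),
    )


def in_h(w: tuple[int, ...]) -> bool:
    """Hypothetical H: the first flip is 1 and the third flip is 0."""
    return w[0] == 1 and w[2] == 0


for h in [(), (1,), (1, 1), (1, 0, 0), (1, 1, 1)]:
    print(h, posterior_finite_dim(h, in_h, horizon=3))
```

Before the third flip, $P(H \mid h_n)$ takes intermediate values (1/6, 1/3, and 1/4 for the first three histories listed); from $n = 3$ on, it equals $I_H(\omega)$.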

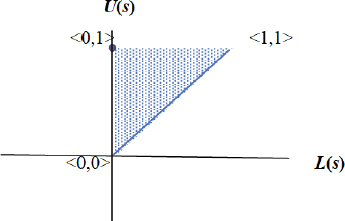

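Next, consider an hypothesis $H$ that is logically independent of each finite dimensional rectangular event, an hypothesis that is an element of the tail $\sigma$-sub-field of $\mathcal{B}$. For instance, note that each sequence $\omega$ has a well-defined lim inf and lim sup of the relative frequency $\bar{X}_n(\omega) = \frac{1}{n}\sum_{i=1}^{n} \omega_i$ of the digit 1. For $0 \le a \le b \le 1$, let $L_{a,b} = \{\omega : \liminf_n \bar{X}_n(\omega) = a \text{ and } \limsup_n \bar{X}_n(\omega) = b\}$. The collection of all such sets is a partition of $\Omega$ into $\mathcal{B}$-measurable events, each of which has the cardinality of the continuum. Figure 1 graphs these points $(a, b)$ in the isosceles right triangle with corners $(0, 0)$, $(0, 1)$, and $(1, 1)$.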
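Membership in $L_{0,1}$, the set of sequences whose relative frequency of 1s has lim inf 0 and lim sup 1, can be illustrated by building a sequence from alternating runs of 0s and 1s whose lengths grow fast enough that each run swamps everything before it. The sketch below is a hypothetical construction of our own; a finite prefix can only display the first few swings, and the values of the lim inf and lim sup follow from the pattern continuing forever.

```python
def chaotic_prefix(num_blocks: int) -> list[int]:
    """Prefix of a 0/1 sequence whose relative frequency of 1s swings toward 0 and 1.

    Block i (i = 1, 2, ...) has length i * (current length) and alternates
    between all 0s and all 1s, so after block i the relative frequency is at
    most 1/(i+1) (a block of 0s) or at least i/(i+1) (a block of 1s).
    """
    seq = [1]
    for i in range(1, num_blocks + 1):
        digit = 0 if i % 2 == 1 else 1
        seq.extend([digit] * (i * len(seq)))
    return seq


def relative_frequencies_at(seq: list[int], ns: list[int]) -> list[float]:
    """Relative frequency of 1s among the first n terms, for each n in ns."""
    return [sum(seq[:n]) / n for n in ns]


seq = chaotic_prefix(7)                   # length 8! = 40320
ends = [2, 6, 24, 120, 720, 5040, 40320]  # lengths after each block
print([round(f, 3) for f in relative_frequencies_at(seq, ends)])
```

With seven blocks the relative frequency is at least 6/7 after the sixth block ($n = 5040$) and at most 1/8 after the seventh ($n = 40320$).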

Let $L$ be the subset of $\Omega$ of sequences with a well-defined limit of relative frequency for the digit 1. In Figure 1, $L$ corresponds to the set of ordered pairs $(a, b)$ with $a = b$, the (solid blue) line of points along the main diagonal.

For a countably additive personal probability $P$ that satisfies de Finetti’s (1937) condition of exchangeability, this subset of $\Omega$ has personal “prior” probability 1: $P(L) = 1$. Also, assume for convenience that this probability is not extreme within the class of exchangeable probabilities: $P(h_n) > 0$ for each finite initial history $h_n$. Take $H = L$. Then for each sequence $\omega$ in $L$, $I_L(\omega) = 1$, and trivially, also $P(L \mid h_n(\omega)) = 1$ for every $n$. For the result on asymptotic Bayesian certainty, then, $V_L = L$. However, on the complementary set, for $\omega \in L^c$ the conditional probabilities satisfy $P(L \mid h_n(\omega)) = 1 \neq 0 = I_L(\omega)$; hence, each $\omega \in L^c$ is deceptive: category (E). Moreover, under these conditions, when a state is not veridical then it is deceptive: the posterior probability converges to $1 - I_L(\omega)$.

Definition: Call a failure set $V_H^c$ deceptive if each state in the failure set is deceptive for $H$.

Also, in this case we say that the associated credence for $H$ is deceptive.

We summarize this elementary finding as follows:

Result 1 Suppose that the credence function $P$ treats each possible initial history as not “null”: $P(h_n) > 0$ for every $n$ and every $h_n$. Then for each hypothesis $H \neq \Omega$ for which $P(H) = 1$, the failure set for $H$ is not empty and deceptive.
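The argument behind Result 1 takes only a few lines, and it uses finite additivity alone, which is why the Corollary below can extend it to merely finitely additive credences. The following derivation is our sketch of that elementary argument under the hypotheses of Result 1 ($P(H) = 1$, $H \neq \Omega$, and $P(h_n) > 0$ for every history).

```latex
\begin{align*}
P(H \cap h_n) &= P(h_n) - P(H^c \cap h_n) \;\ge\; P(h_n) - P(H^c) = P(h_n),\\
P(H \cap h_n) &\le P(h_n),\\
\text{hence}\quad P(H \mid h_n) &= \frac{P(H \cap h_n)}{P(h_n)} = 1
  \quad \text{for every } n \text{ and every } h_n .
\end{align*}
```

So each $\omega \in H$ is veridical, while each $\omega \in H^c$ (a non-empty set, since $H \neq \Omega$) has $P(H \mid h_n(\omega)) = 1 = 1 - I_H(\omega)$ and is deceptive: the failure set is exactly $H^c$.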

Moreover, if the space $\Omega$ is uncountable and admits an uncountable partition each of whose elements is an uncountable set, as depicted in Figure 1, then we have the following as well:

Corollary For each finitely additive probability $P$ on a space of denumerable sequences of (logically independent) random variables, where each initial history is not “null,” there exists an hypothesis $H$, with $P(H) = 1$, whose failure set is an uncountable set, and that failure set is deceptive.
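One way to see the Corollary, sketched here under the assumption that the cells of the partition are measurable (as the sets $L_{a,b}$ of Figure 1 are), is a counting argument followed by an appeal to Result 1. Let $\{C_t : t \in T\}$ be an uncountable partition of $\Omega$ into uncountable cells.

```latex
% If m+1 disjoint cells each had probability > 1/m, their union would have
% probability > (m+1)/m > 1, contradicting finite additivity. Hence
\[
\#\{t : P(C_t) > 1/m\} \le m
\quad\Longrightarrow\quad
\{t : P(C_t) > 0\} = \bigcup_{m \ge 1} \{t : P(C_t) > 1/m\} \ \text{is countable.}
\]
% Pick t_0 with P(C_{t_0}) = 0 and set H = C_{t_0}^c. Then P(H) = 1 and H \neq \Omega,
% so by Result 1 the failure set, C_{t_0}, is uncountable and deceptive.
```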

The non-veridical states, $V_H^c$, can populate each of the other four categories, (A)–(D). We discuss these in Appendix A.

4. Reasonably Modest but Deceptive Failure Sets

Next, we apply these findings to a recent debate about what Belot (2013) alleges is mandatory Bayesian orgulity. We understand Belot’s meaning as follows. For a Bayesian agent who satisfies, e.g., the conditions for Doob’s result, the set of samples where the desired asymptotic certainty fails for an hypothesis $H$ (the so-called “failure set” for $H$) has probability 0. Nonetheless, this failure set may be a “large” or “typical” event when considered from a topological perspective. Specifically, the failure set may be comeager with respect to a privileged product topology for the measurable space of data sequences. As we understand Belot’s criticism, such a Bayesian suffers orgulity because she/he is obliged by the mathematics of Bayesian learning to assign probability 0 to the possible evidence where the desired asymptotic result fails, even when this failure set is comeager.

In a (2016) reply to Belot’s analysis, A. Elga focuses on the premise of countable additivity in Doob’s result. Countable additivity is required in neither Savage’s (1972) nor de Finetti’s (1974) theories of Bayesian coherence. Elga gives an example of a merely finitely additive (and not countably additive) probability over denumerable binary sequences and a particular hypothesis $H$ where with positive probability (in fact, with probability 1) the investigator’s posterior probability fails to converge to the indicator function for $H$. So, not all finitely additive coherent Bayesians display orgulity.

M. Nielsen and R. Stewart (2019) extend the debate by explicating what they understand to be Belot’s rival account of reasonable modesty of Bayesian conditional probabilities. They offer a reconciliation of Elga’s rebuttal and Belot’s topological perspective. For Nielsen and Stewart, a credence function $P$ is modest for an hypothesis $H$ provided that it gives (unconditional) positive probability to the failure set for the convergence of posterior probabilities to the indicator function for $H$. By this account, each credence in the class of countably additive credences is immodest over all hypotheses that are the subject of the asymptotic convergence result but have non-empty failure sets. Since requiring modesty for all such hypotheses is too strong a condition even for (merely) finitely additive credences—as per the Corollary to Result 1, above—Nielsen and Stewart propose a standard of reasonable modesty. This condition requires modesty solely for failure sets that are typical in the topological sense, for some privileged topology.

With their Propositions 1 and 2, Nielsen and Stewart point out that there exists a class of merely finitely additive credences (with the cardinality of the continuum) such that each credence function in this class assigns unconditional positive probability (even probability 1) to each comeager set. Then, such a credence displays reasonable modesty for each failure set that is “typical.”

Below, we show that the reasonably modest credences that Nielsen and Stewart point to with their Proposition 1, nonetheless, mandate deceptive failure sets for specific hypotheses. And as we explain (in Appendix B), Nielsen and Stewart’s Proposition 2 provides reasonably modest credences in their technical sense at the price of making it impossible to learn about hypotheses that concern unobserved parameters, in all familiar statistical models.

First we argue that this sense of “modesty” is mistaken when deception is not a null event, regardless of whether the modesty is reasonable or not. When the investigator’s credences are merely finitely additive, with respect to a particular hypothesis the failure set for Doob’s result may have positive prior probability, as is well known.⁹ In such cases, the investigator’s credences are called modest according to Nielsen and Stewart. Suppose, further, that such a modest credence also has a deceptive failure set. Then, each state is either veridical or deceptive. But the investigator behaves just as though asymptotic certainty tracks the truth. That is, the fact that the set of deceptive states (for a particular hypothesis) has positive probability rather than probability 0, which is to say the fact that the investigator’s credence is “modest,” is irrelevant to the investigator’s decision making. Here is why.

Let $H$ be an hypothesis, and suppose that each state is either veridical for $H$ or deceptive for $H$. Then, for each state $\omega$, the sequence $P(H \mid h_n(\omega))$ converges to 1 if and only if either $\omega$ is veridical and in $H$, or $\omega$ is deceptive and in $H^c$. And $P(H \mid h_n(\omega))$ converges to 0 if and only if either $\omega$ is veridical and in $H^c$, or $\omega$ is deceptive and in $H$. Hence, the investigator becomes asymptotically certain about the truth value of $H$ no matter what data are observed. This analysis holds regardless of what prior probability the investigator assigns to $H$ and regardless of how probable the failure set is. The modesty of $P$ for $H$, namely that $P(V_H^c) > 0$, is irrelevant to this conclusion. And so too, it is irrelevant to this conclusion whether the modesty of $P$ for $H$ is reasonable or not: it is irrelevant whether $V_H^c$ is a comeager set or not.
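The case analysis above can be tabulated mechanically. The sketch below, with hypothetical function names, enumerates the four combinations of state type (veridical or deceptive) and truth value of $H$, assuming, as in the text, that no other type of state occurs, and groups them by the limit of $P(H \mid h_n(\omega))$.

```python
from itertools import product


def limiting_credence(kind: str, in_h: bool) -> int:
    """Limit of P(H | h_n(omega)) when omega is veridical or deceptive for H."""
    truth = 1 if in_h else 0                 # I_H(omega)
    return truth if kind == "veridical" else 1 - truth


def cases_with_limit(limit: int) -> list[tuple[str, bool]]:
    """All (kind of state, omega in H?) pairs whose posterior tends to `limit`."""
    return [
        (kind, in_h)
        for kind, in_h in product(("veridical", "deceptive"), (True, False))
        if limiting_credence(kind, in_h) == limit
    ]


for limit in (1, 0):
    print(f"P(H | h_n) -> {limit}:", cases_with_limit(limit))
```

Each limiting value arises both when $H$ is true and when $H$ is false, so the limiting certainty by itself cannot reveal to the investigator whether it is veridical.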

To put this analysis in behavioral terms, suppose the Bayesian investigator faces a sequence of decisions. These decisions might be practical, with cardinal utilities that reflect economic, legal, or ethical consequences. Or, these decisions might be cognitive with epistemically motivated utilities, e.g., for desiring true hypotheses over false ones, or for desiring more informative over less informative hypotheses. Or, these might form a mixed sequence of decisions, with some practical and some cognitive. Suppose each decision in this sequence rides on the probability for one specific hypothesis $H$ and, regarding the corresponding sequence of Bayesian conditional probabilities for $H$ that parallel these decisions, the investigator’s credence is deceptive for $H$. Then, asymptotically, the investigator’s sequence of decisions will be determined by the asymptotic certainty (the conditional credence for $H$ of 0 or 1) that surely results, no matter which sequence of observations obtains. But if also the investigator has a positive unconditional probability for deception, this “modesty” plays no role in her/his sequence of decisions. The “modesty” reported by her/his unconditional probability of deception, $P(V_H^c)$, be it a large or a small positive probability, is irrelevant to the sequence of decisions that she/he makes. When a failure set is both deceptive and non-null, the Bayesian investigator ignores this in her/his decision making, treating all certainties alike, just as if $P(V_H^c) = 0$. We do not agree, then, that the investigator’s credences are modest for hypothesis $H$ when the failure set is deceptive and $P(V_H^c) > 0$.

One example in which the conditions of this analysis hold was given by Elga (2016) and is an instance of our continuing example about binary sequences. In Elga’s example, $H$ is the hypothesis that the binary sequence satisfies . In his example the failure set is deceptive with probability 1, i.e., $P(V_H^c) = 1$.¹⁰

A large class of examples of this kind arises by using Proposition 1 of Nielsen and Stewart. Here is how Proposition 1 applies to the continuing example of the Borel space, $(\Omega, \mathcal{B})$, of binary sequences on $\Omega = \{0, 1\}^{\mathbb{N}}$. Let $P_1$ be a non-extreme, exchangeable countably additive probability. That is, in addition to being an exchangeable probability, $P_1(h_n) > 0$ for each $n$ and for each of the $2^n$ possible finite initial histories $h_n$. By Doob’s result, $P_1$ is not modest (in Nielsen and Stewart’s sense) because, for each hypothesis $H$, its failure set is $P_1$-null: $P_1(V_H^c) = 0$. Let $P_2$ be a finitely additive, 0–1 (“ultrafilter”) probability with the property that if $E$ is a comeager set in $\mathcal{B}$, then $P_2(E) = 1$.¹¹ Fix $0 < \alpha < 1$ and define $P = \alpha P_1 + (1 - \alpha) P_2$, the mixture of these two probabilities.

Nielsen and Stewart’s Proposition 1 establishes that $P$ is reasonably modest, since for each hypothesis $H$, if the failure set $V_H^c$ is comeager, then $P(V_H^c) \ge 1 - \alpha > 0$. However, as we show next, Proposition 1 creates reasonably modest credences that, in the continuing example, have failure sets for specific hypotheses that have positive probability, are comeager, and are deceptive.
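The reasonable modesty of the mixture is a one-line consequence of the definition of $P$; the display below spells it out for an arbitrary comeager event $E$, in particular for a comeager failure set $E = V_H^c$.

```latex
\[
E \text{ comeager}
\;\Longrightarrow\; P_2(E) = 1
\;\Longrightarrow\; P(E) = \alpha P_1(E) + (1 - \alpha) \;\ge\; 1 - \alpha \;>\; 0 .
\]
```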

Result 2 In the continuing example, let $H = L_{0,1}$ be the hypothesis that the binary sequence belongs to the set of maximally chaotic relative frequencies, corresponding to the (red) point $(0, 1)$ in Figure 1. This is the set of sequences with $\liminf_n \bar{X}_n = 0$ and $\limsup_n \bar{X}_n = 1$. Then the failure set for $H$ under $P$, $V_H^c$, has positive probability, $P(V_H^c) \ge 1 - \alpha > 0$, is comeager, and is deceptive.

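Proof: Because both $P_1(H) = 0$ (as $H \subseteq L^c$ and $P_1(L) = 1$) and $P_1(h_n) > 0$ for each history $h_n$, $n = 1, 2, \ldots$, then $P_1(H \mid h_n) = 0$.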

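Under $P_2$ there is a distinguished binary sequence $\omega^*$ in the following sense. The finite initial histories form a binary branching tree: for each $n$ there are $2^n$ distinct histories $h_n$. Because $P_2$ is an “ultrafilter” distribution, then for each $n$ and for each possible finite initial history $h_n$ of length $n$, $P_2(h_n) = 0$ or $P_2(h_n) = 1$. So, there is one and only one sequence $\omega^*$ where, for each $n$, $P_2(h_n(\omega^*)) = 1$.¹²

That is, for each sequence $\omega \neq \omega^*$ there exists an $N$ such that for all $n > N$, $P_2(h_n(\omega)) = 0$. Thus, for each $\omega \neq \omega^*$ there exists an $N$ such that for all $n > N$, $P(H \mid h_n(\omega)) = 0$, since $P(H \cap h_n(\omega)) \le \alpha P_1(H) + (1 - \alpha) P_2(h_n(\omega)) = 0$ while $P(h_n(\omega)) \ge \alpha P_1(h_n(\omega)) > 0$.¹³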

Specifically, the failure set is either the set $H \setminus \{\omega^*\}$ (if the sequence $\omega^*$ belongs to $H$), or it is the set $H \cup \{\omega^*\}$ (if the sequence $\omega^*$ belongs to $H^c$). In either case, the failure set is deceptive for $H$. According to Cisewski et al. (2018), $H$ is a comeager set. Evidently then, $V_H^c$ is a comeager set where $P(V_H^c) \ge 1 - \alpha > 0$.¹⁴
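The proof can be made fully explicit by writing out the posterior under the mixture. Using $P_1(H) = 0$ and $P_2(H) = 1$ (the latter because $H$ is comeager), so that $P_1(H \cap h_n) = 0$ and $P_2(H \cap h_n) = P_2(h_n)$, the display below computes $P(H \mid h_n)$ off and on the distinguished sequence. The closing comment spells out a condition used along $\omega^*$.

```latex
\[
P(H \mid h_n)
= \frac{(1-\alpha)\,P_2(h_n)}{\alpha P_1(h_n) + (1-\alpha)\,P_2(h_n)}
= \begin{cases}
0, & h_n \neq h_n(\omega^*),\\[4pt]
\dfrac{1-\alpha}{\alpha P_1(h_n(\omega^*)) + 1 - \alpha}, & h_n = h_n(\omega^*).
\end{cases}
\]
% Along omega*, countable additivity of P_1 gives P_1(h_n(omega*)) -> P_1({omega*}),
% so the posterior tends to 1 whenever P_1({omega*}) = 0. This is automatic when
% omega* is in H (since P_1(H) = 0), and holds in general, e.g., whenever the
% mixing prior of P_1 puts no mass on the endpoints 0 and 1.
```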

We emphasize that certainty with deception is indistinguishable from certainty that is veridical. In the context of Result 2, the investigator can tell when the observed history differs from the history that would be observed in the one distinguished sequence, $\omega^*$. But that recognition provides no basis for altering the certainty, $P(H \mid h_n(\omega)) = 0$, that results once the observed history departs from the distinguished one, that is, once $h_n(\omega) \neq h_n(\omega^*)$. Regardless of the magnitude of the (unconditional) probability of deception, $P(V_H^c)$, the investigator cannot identify whether her/his certainty is deceptive or veridical. Her/his conditional credence function, $P(\cdot \mid h_n(\omega))$, already takes into account the total evidence available. Certainty is certainty, full stop.
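Because $P_2$ is non-constructive, no program can compute the distinguished sequence $\omega^*$. The sketch below therefore takes a hypothetical stand-in for an initial segment of $\omega^*$ and assumes, for illustration, that $P_1$ is exchangeable with a uniform mixing prior, so that $P_1(h_n) = k!\,(n-k)!/(n+1)!$ when $h_n$ contains $k$ ones. It evaluates $P(H \mid h_n)$ for the mixture $P = \alpha P_1 + (1-\alpha) P_2$, using only the fact that $P_2(h_n)$ is 1 exactly when $h_n$ is an initial segment of $\omega^*$. Once the history leaves $\omega^*$, the posterior is exactly 0, and nothing in the computation depends on whether the actual state lies in $H$.

```python
import math


def log_p1_history(history: list[int]) -> float:
    """log P1(h_n) = log[k! (n-k)! / (n+1)!] for an exchangeable P1 with a uniform mixing prior."""
    n, k = len(history), sum(history)
    return math.lgamma(k + 1) + math.lgamma(n - k + 1) - math.lgamma(n + 2)


def mixture_posterior_H(history: list[int], omega_star_prefix: list[int], alpha: float) -> float:
    """P(H | h_n) for P = alpha*P1 + (1-alpha)*P2, with P1(H) = 0 and P2(H) = 1.

    P2(h_n) is 1 exactly when h_n is an initial segment of omega*, and 0 otherwise.
    """
    n = len(history)
    if len(omega_star_prefix) < n:
        raise ValueError("need at least n terms of the stand-in for omega*")
    p2 = 1.0 if history == omega_star_prefix[:n] else 0.0
    p1 = math.exp(log_p1_history(history))
    return (1 - alpha) * p2 / (alpha * p1 + (1 - alpha) * p2)


omega_star = [0, 1] * 50   # hypothetical stand-in for the first 100 terms of omega*
alpha = 0.5
print(mixture_posterior_H([], omega_star, alpha))               # prior: 1 - alpha
print(mixture_posterior_H(omega_star[:40], omega_star, alpha))  # very close to 1
print(mixture_posterior_H([0, 1, 1], omega_star, alpha))        # history has left omega*
```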

We have argued above that a credence is not epistemically modest when there is an hypothesis that has a deceptive failure set that is not $P$-null.

In summary, it is our view that having a positive probability over non-veridical states is not sufficient for creating an epistemically modest credence, because categories (D) or (E) may have positive prior probability as well. Indeed, in the continuing example, each probability created according to Proposition 1 fails this test of epistemic modesty.

5. We Summarize the Principal Conclusion of this Note:

When the failure set for an hypothesis is deceptive and not null, this conflicts with an attitude of epistemic modesty about learning that hypothesis.

Regarding the asymptotics of Bayesian certainties, e.g., Doob’s result, neither Nielsen and Stewart’s concept of modesty nor their concept of reasonable modesty distinguishes deceptive from other varieties of failure sets. According to Result 2, in the continuing example each credence that satisfies Nielsen and Stewart’s Proposition 1 admits an hypothesis whose failure set is $P$-non-null, comeager, and deceptive.

Acknowledgements

We thank two anonymous referees for their constructive feedback. Research for this paper was supported by NSF grant DMS-1916002.

Notes

1. To model changes in personal probability when learning evidence $E$, Bayesian conditionalization requires using the current conditional probability function $P(\cdot \mid E)$ as the updated probability function upon learning evidence $E$.

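2. We use the language of events to express these conditions. Let $P$ be a probability function. Let $E_1, \ldots, E_n$ be $n$-many pairwise disjoint events and $E$ their union: $E_i \cap E_j = \emptyset$ if $i \neq j$, and $E = \bigcup_{i=1}^{n} E_i$. Finite additivity requires: $P(E) = \sum_{i=1}^{n} P(E_i)$. Let $E_1, E_2, \ldots$ be countably many pairwise disjoint events and $E$ their union: $E_i \cap E_j = \emptyset$ if $i \neq j$, and $E = \bigcup_{i=1}^{\infty} E_i$. Countable additivity requires: $P(E) = \sum_{i=1}^{\infty} P(E_i)$.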

3. Deceptive credence is a worse situation for empiricists than what James (1896: §10) notes, where he famously writes, “But if we are empiricists [pragmatists], if we believe that no bell in us tolls to let us know for certain when truth is in our grasp, then it seems a piece of idle fantasticality to preach so solemnly our duty of waiting for the bell.” It is not merely that the investigator fails to know when, e.g., her/his future credences for an hypothesis remain forever within $\epsilon$ of the value 1. With deceptive credences, the agent conflates asymptotic certainty of true statements with asymptotic certainty of false statements. The two cases become indistinguishable!

4. See also Theorem 2, Section IV, of Schervish and Seidenfeld (1990).

5. See Schervish and Seidenfeld (1990), Examples 4a and 4b, for illustrations where $H$ is not an element of $\mathcal{B}$ and where the asymptotic certainty result fails.

6. For ease of exposition, where the context makes evident the hypothesis in question, we refer to states as veridical or deceptive simpliciter.

7. We note in passing that the categories may be further refined by considering sojourn times for events that are required to occur infinitely often. Also, the categories may be expanded to include $\epsilon$-veridical and $\epsilon$-deceptive states, where, for some $\epsilon > 0$, conditional probabilities, $P(H \mid h_n(\omega))$, accumulate (respectively) to within $\epsilon$ of $I_H(\omega)$ and to within $\epsilon$ of $1 - I_H(\omega)$. We do not consider these variations here.

8. Our understanding is that case (C) satisfies the conditions for what Belot (2013) calls a “flummoxed” credence. Weatherson (2015) discusses varieties of “open minded” credences, including those that are “flummoxed,” in connection with Imprecise Probabilities. Here, we focus on failures of veridicality for coherent, precise credences.

9. Moreover, when credences are merely finitely additive, the investigator may design an experiment to ensure deceptive Bayesian reasoning. For discussion see Kadane, Schervish, and Seidenfeld (1996).

10. By contrast, in Cisewski, Kadane, Schervish, Seidenfeld, and Stern’s (2018) version of Elga’s example, for the same hypothesis $H$, the failure set, $V_H^c$, is the whole space; whereas, for each and for each , . Then the failure set generates solely indecisive conditional credences: each state is neither intermittently veridical nor intermittently deceptive—category (B).

11. Existence of such 0–1 finitely additive probabilities is a non-constructive consequence (using the Axiom of Choice) of the fact that the comeager sets form a filter: they have the finite intersection property and are closed under supersets.

12. Note well that $P_2$ is merely finitely additive: $P_2(h_n(\omega^*)) = 1$ for every $n$, yet $P_2(\{\omega^*\}) = 0$, since each unit set $\{\omega\}$, each denumerable sequence $\omega$, is a meager set.

13. More generally, if $\omega \neq \omega^*$, the agent’s conditional probabilities become and stay immodest, as they become the sequence of countably additive conditional probability functions, $P_1(\cdot \mid h_n(\omega))$. So, though $P$ is modest, with $P$-probability 1 its conditional credences become and stay immodest.

14. Similarly, Result 2 applies to each hypothesis $H$ that is a comeager set whose complement includes the support of the countably additive, immodest probability $P_1$.

15. When either and , or and , or and , then the behavior of $P(H \mid h_n(\omega))$ is not determined. This issue is relevant to the illustration of case (A), with clause (ii), below.

16. The Corollary to Result 1 establishes that the same phenomenon occurs when Nielsen and Stewart’s Prop. 2 is generalized to include finitely additive credences that assign positive probability to each finite initial history and a positive (but not necessarily unit) credence to each comeager set of sequences.

References

Belot, Gordon (2013). Bayesian Orgulity. Philosophy of Science, 80(4), 483–503.

Cisewski, Jessica, Kadane, J. B., Schervish, M. J., Seidenfeld, T., and Stern, R. B. (2018). Standards for Modest Bayesian Credences. Philosophy of Science, 85(1), 53–78.

de Finetti, Bruno (1937). Foresight: Its Logical Laws, Its Subjective Sources. In Kyburg, Henry E. Jr. and Smokler, Howard E. (Eds.), Studies in Subjective Probability (1964). John Wiley.

de Finetti, Bruno (1974). Theory of Probability (Vol. 1). John Wiley.

Doob, Joseph L. (1953). Stochastic Processes. John Wiley.

Elga, Adam (2016). Bayesian Humility. Philosophy of Science, 83(3), 305–23.

James, William (1896). The Will to Believe. The New World, 5, 327–47. Reprinted in his (1962) Essays on Faith and Morals. The World.

Kadane, Joseph B., Schervish, M. J., and Seidenfeld, T. (1996). Reasoning to a Foregone Conclusion. Journal of the American Statistical Association, 91(435), 1228–36.

Lindley, Dennis V. (2006). Understanding Uncertainty. John Wiley.

Nielsen, Michael, and Stewart, R. (2019). Obligation, Permission, and Bayesian Orgulity. Ergo, 6(3), 58–70.

Savage, Leonard J. (1972). The Foundations of Statistics (2nd rev. ed.). Dover.

Schervish, Mark J. and Seidenfeld, T. (1990). An Approach to Certainty and Consensus with Increasing Evidence. Journal of Statistical Planning and Inference, 25(3), 401–14.

Weatherson, Brian (2015). For Bayesians, Rational Modesty Requires Imprecision. Ergo, 2(20), 529–45.

Appendix A

Here, we discuss and illustrate categories (A)–(D) of failure sets using the continuing example. Restrict the exchangeable “prior” probability $P$ so that, in terms of de Finetti’s Representation Theorem, the “mixing prior” for the Bernoulli parameter $\theta$ is smooth, e.g., let it be the uniform distribution on $[0, 1]$. Choose $0 < a < b < 1$ and consider the hypothesis $H = \{\omega : a \le \liminf_n \bar{X}_n(\omega) \le \limsup_n \bar{X}_n(\omega) \le b\}$. So, with the “uniform” prior, $P(H) = b - a$; so, $0 < P(H) < 1$.

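The set of veridical states for this credence and hypothesis includes each sequence $\omega$ where,

either $a < \liminf_n \bar{X}_n(\omega) \le \limsup_n \bar{X}_n(\omega) < b$—in which case $H$ obtains and $P(H \mid h_n(\omega)) \to 1$;

or, either $\limsup_n \bar{X}_n(\omega) < a$ or $\liminf_n \bar{X}_n(\omega) > b$—in which case $H^c$ obtains and $P(H \mid h_n(\omega)) \to 0$.¹⁵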

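The non-veridical states (the failure set) $V_H^c$, the set of sequences where $P(H \mid h_n(\omega))$ does not converge to the indicator $I_H(\omega)$, include states $\omega$ such that $\liminf_n \bar{X}_n(\omega) < a < \limsup_n \bar{X}_n(\omega)$ or $\liminf_n \bar{X}_n(\omega) < b < \limsup_n \bar{X}_n(\omega)$. For such a state $\omega$, $P(H \mid h_n(\omega))$ fails to converge and

$$\liminf_n P(H \mid h_n(\omega)) = 0 \quad \text{and} \quad \limsup_n P(H \mid h_n(\omega)) = 1.$$

Then $\omega$ is both intermittently veridical and intermittently deceptive for $H$—category (C).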
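The behavior claimed for category (C) can be seen numerically. The sketch below uses the uniform mixing prior, under which $P(H \mid h_n) = P(a \le \theta \le b \mid h_n)$ is a difference of two Beta$(k+1, n-k+1)$ CDF values, with the hypothetical choice $a = 0.3$, $b = 0.7$. It follows a sequence, also hypothetical, whose relative frequency of 1s is pushed alternately up to 0.5 and down to 0.1, so that $\liminf_n \bar{X}_n = 0.1 < a < 0.5 = \limsup_n \bar{X}_n$ and $\omega \notin H$. Only the counts at the turning points are tracked.

```python
import math


def beta_cdf_uniform_prior(x: float, k: int, n: int) -> float:
    """P(theta <= x | k ones in n flips) under a uniform mixing prior on theta.

    Uses P(Beta(k+1, n-k+1) <= x) = P(Binomial(n+1, x) >= k+1), summed in log space.
    """
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    m = n + 1
    log_x, log_1mx = math.log(x), math.log1p(-x)
    log_m_fact = math.lgamma(m + 1)
    total = 0.0
    for j in range(k + 1, m + 1):
        total += math.exp(log_m_fact - math.lgamma(j + 1) - math.lgamma(m - j + 1)
                          + j * log_x + (m - j) * log_1mx)
    return min(total, 1.0)


def posterior_in_band(n: int, k: int, a: float, b: float) -> float:
    """P(a <= theta <= b | k ones in n flips) under the uniform mixing prior."""
    return max(0.0, beta_cdf_uniform_prior(b, k, n) - beta_cdf_uniform_prior(a, k, n))


def oscillating_turning_points(low: float, high: float, n_max: int) -> list[tuple[int, int]]:
    """(n, k) at each turning point of a hypothetical 0/1 sequence.

    Starting from 1 one in 10 flips (frequency 0.1), runs of 1s push the
    relative frequency k/n up to `high`, then runs of 0s push it down to `low`.
    """
    n, k = 10, 1
    points, rising = [], True
    while n < n_max:
        if rising:
            while k / n < high:
                n, k = n + 1, k + 1
        else:
            while k / n > low:
                n += 1
        points.append((n, k))
        rising = not rising
    return points


a, b = 0.3, 0.7  # hypothetical band for H; the state below is not in H
for n, k in oscillating_turning_points(0.1, 0.5, 60_000):
    print(f"n={n:6d}  freq={k / n:.2f}  P(H | h_n)={posterior_in_band(n, k, a, b):.4f}")
```

At the turning points where the relative frequency is 0.5, the posterior for the false hypothesis $H$ is nearly 1; where it is 0.1, the posterior is nearly 0. The run thus exhibits both intermittent deception and intermittent veridicality.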

In order to illustrate the other three categories of non-veridical states, (A), (B), and (D), the following adaptation of the previous construction suffices. Depending upon which category is to be displayed, consider a state $\omega$ such that the likelihood ratio $P(h_n(\omega) \mid H)/P(h_n(\omega) \mid H^c)$ oscillates with suitably chosen bounds, in order to have the sequence of posterior odds,

$$\frac{P(H \mid h_n(\omega))}{P(H^c \mid h_n(\omega))},$$

oscillate to fit the category. This method succeeds because, as is familiar, the posterior odds equal the likelihood ratio times the prior odds:

$$\frac{P(H \mid h_n)}{P(H^c \mid h_n)} = \frac{P(h_n \mid H)}{P(h_n \mid H^c)} \cdot \frac{P(H)}{P(H^c)}.$$

We illustrate category (A) using the same hypothesis and credence as above. For a non-veridical state $\omega$ in category (A), consider a sequence $\omega$ such that both:

. Then is intermittently veridical as, infinitely often, the relative frequency of ‘1’ falls strictly between and , and

-

but there exists , where for only finitely many values of ,

—so that is not intermittently deceptive;

and infinitely often —so that is not veridical.
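The identity between posterior odds and likelihood ratio times prior odds, on which the construction for categories (A), (B), and (D) relies, can be checked in exact arithmetic. The sketch below assumes the uniform mixing prior and the band hypothesis $H = \{a \le \theta \le b\}$ of this appendix with the hypothetical values $a = 3/10$, $b = 7/10$; the history and the function names are likewise illustrative.

```python
from fractions import Fraction
from math import comb


def integral(lo: Fraction, hi: Fraction, k: int, m: int) -> Fraction:
    """Exact integral of t**k * (1 - t)**m over [lo, hi] (binomial expansion)."""
    def antiderivative(x: Fraction) -> Fraction:
        return sum(
            (Fraction(comb(m, j) * (-1) ** j, k + j + 1) * x ** (k + j + 1)
             for j in range(m + 1)),
            Fraction(0),
        )
    return antiderivative(hi) - antiderivative(lo)


def odds_both_ways(history: list[int], a: Fraction, b: Fraction) -> tuple[Fraction, Fraction]:
    """Posterior odds for H = {a <= theta <= b}: directly, and as likelihood ratio x prior odds.

    Assumption (illustrative): exchangeable credence with a uniform mixing prior on theta.
    """
    n, k = len(history), sum(history)
    p_h = integral(Fraction(0), Fraction(1), k, n - k)  # P(h_n)
    p_h_and_H = integral(a, b, k, n - k)                 # P(H and h_n)
    p_H = b - a                                          # P(H) under the uniform prior
    direct = p_h_and_H / (p_h - p_h_and_H)               # P(H | h_n) / P(H^c | h_n)
    likelihood_ratio = (p_h_and_H / p_H) / ((p_h - p_h_and_H) / (1 - p_H))
    prior_odds = p_H / (1 - p_H)
    return direct, likelihood_ratio * prior_odds


direct, via_bayes = odds_both_ways([1, 0, 1, 1, 0, 1], Fraction(3, 10), Fraction(7, 10))
print(direct, via_bayes, direct == via_bayes)
```

Because the arithmetic is exact, the two routes agree exactly, so oscillations imposed on the likelihood ratio transfer one-for-one to the posterior odds.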

Appendix B

In this appendix we consider Nielsen and Stewart’s Proposition 2 and related approaches for creating a reasonably modest credence, . We adapt Proposition 2 to the continuing example of the Borel space of denumerable binary sequences. Consider a finitely additive probability on the space of binary sequences in accord with Nielsen and Stewart’s Proposition 2, where

(i) for each possible finite initial history;

and (ii) , whenever comeager.

Nielsen and Stewart’s Proposition 2 asserts that, however is defined on the field of finite initial histories (a field we denote by ), may be extended to a finitely additive probability that is extreme with respect to the field of comeager and meager sets in . For example, if is a countably additive probability on , then might agree with on , while if is a comeager set. Then is reasonably modest in the technical sense used by Nielsen and Stewart, since, whenever a failure set is comeager, .

We do not know whether the conclusion of Result 2 also extends to the reasonably modest credences created by the technique of Proposition 2. For instance, we do not know, for a general , when a hypothesis has a deceptive failure set with . Evidently, we are unwilling to grant that a credence satisfying Proposition 2 is epistemically modest about learning a hypothesis merely because whenever is a comeager set.

There is, however, a second issue that tells against the technique of Proposition 2 for creating reasonable modesty. In Proposition 1, the probability values that the immodest countably additive credence assigns to events in the tail field of are relevant to the values that the reasonably modest credence gives these events. And, as is countably additive, its probability values for tail events are approximated by its values in . In short, under the method used in Proposition 1, probability values for events in constrain the reasonably modest values of . In Proposition 2, by contrast, the P1 values in are not relevant to the -values for events in the tail field: the probability values are stipulated to be extreme for comeager sets, regardless of how the -credences are assigned to the elements of the observable . The upshot is that, with credences, the investigator is incapable of learning about comeager sets by Bayesian updating on finite initial histories.
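The approximation of tail events by observable events, on which Proposition 1 relies, can be made concrete in the continuing example. A minimal sketch, assuming (our choice, for illustration only) that the countably additive credence is the uniform-mixing exchangeable credence of Appendix A, with the tail event T: “the lim sup of the relative frequency of 1s is at most 1/2” and the observable event A_n: “at most n/2 of the first n outcomes are 1s”. Under this credence the probability of the symmetric difference of A_n and T reduces to binomial sums, which the hypothetical function below evaluates exactly with rational arithmetic.

```python
from fractions import Fraction


def sym_diff_prob(n: int) -> Fraction:
    """Exact P1(A_n symmetric-difference T) under a uniform mixing prior.

    T   : tail event 'lim sup of the relative frequency of 1s is <= 1/2',
          which under this P1 coincides almost surely with {theta <= 1/2}.
    A_n : observable event 'at most n/2 of the first n outcomes are 1s'.

    Uses C(n, j) * B(j + 1, n - j + 1) = 1 / (n + 1) and
    I_{1/2}(j + 1, n - j + 1) = P(Binomial(n + 1, 1/2) >= j + 1), so
      P1(k = j, theta >  1/2) = P(Bin(n + 1, 1/2) <= j) / (n + 1)
      P1(k = j, theta <= 1/2) = P(Bin(n + 1, 1/2) >= j + 1) / (n + 1).
    """
    m = n + 1
    # prefix[j] = sum_{i <= j} C(m, i), built with exact integer arithmetic
    prefix, running, c = [], 0, 1
    for i in range(m + 1):
        running += c
        prefix.append(running)
        c = c * (m - i) // (i + 1)  # C(m, i) -> C(m, i + 1)
    total = 2 ** m
    half = n // 2
    in_a_not_t = sum(prefix[j] for j in range(half + 1))
    in_t_not_a = sum(total - prefix[j] for j in range(half + 1, n + 1))
    return Fraction(in_a_not_t + in_t_not_a, (n + 1) * total)


if __name__ == "__main__":
    for n in (0, 1, 10, 100, 1000):
        print(n, float(sym_diff_prob(n)))
```

The values are 1/2 at n = 0 and 1/4 at n = 1, and they fall as n grows, so the tail event T is approximated ever more closely by events in the field of finite initial histories. A Proposition 2 credence, being extreme on comeager sets, is not tied to such approximations.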

In the continuing example, Cisewski et al. (2018) establish that the set of sequences corresponding to the single point in Figure 1 is comeager. Thus, in order to assign prior probability 1 to each comeager set, this agent is required to hold an extreme credence that the sequence has maximally chaotic relative frequencies: .
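To make this comeager set concrete, here is a sketch of one maximally chaotic sequence. We read “maximally chaotic relative frequencies” as lim inf 0 and lim sup 1 for the relative frequency of 1s (our gloss of the elided formula), and the construction, including the function name, is a hypothetical example of ours rather than one taken from Cisewski et al. (2018). Only the running counts are tracked, because the run lengths grow factorially.

```python
def chaotic_run_ends(num_runs: int) -> list[tuple[int, int, float]]:
    """(n, k, k/n) at the end of each run of a hypothetical binary sequence.

    Run i (1-indexed) has length i * n, where n is the length so far (the
    first run has length 1); odd-numbered runs are 1s, even-numbered runs
    are 0s. After an odd run the relative frequency of 1s is at least
    i / (i + 1); after an even run it is at most 1 / (i + 1). Hence its
    lim sup is 1 and its lim inf is 0.
    """
    n = k = 0
    ends = []
    for i in range(1, num_runs + 1):
        run = i * max(n, 1)
        if i % 2 == 1:  # odd-numbered run: all 1s
            k += run
        n += run
        ends.append((n, k, k / n))
    return ends


if __name__ == "__main__":
    for i, (n, k, freq) in enumerate(chaotic_run_ends(12), start=1):
        print(f"run {i:2d}: length so far {n}, relative frequency of 1s {freq:.4f}")
```

Under an exchangeable countably additive credence, such as the uniform-mixing one of Appendix A, sequences like this form a null set, because relative frequencies then converge almost surely; a credence satisfying Proposition 2 instead gives the set of all of them probability 1.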

As above, let the hypothesis of interest be : the hypothesis that the sequence has maximally chaotic relative frequencies. Then Result 1 applies, as and for each . No matter what the agent observes, her/his posterior credence about remains extreme. With credence , the failure set for is the meager (hence -null) set of continuum many states corresponding to each point in Figure 1 other than the corner . Each state in the failure set for is deceptive: the failure set is deceptive!16 On what basis do Nielsen and Stewart dismiss the deceptiveness of as irrelevant to the question whether is an appropriate credence for investigating statistical properties of binary sequences? We speculate that their sole answer is that the failure set is meager.
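Why the posterior stays extreme needs only finite additivity. A short derivation in our own notation: $Q$ for the credence of Proposition 2, $H$ for the hypothesis of maximal chaos with $Q(H) = 1$, and $h$ for any finite initial history, assuming $Q(h) > 0$ so that $Q(\cdot \mid h)$ is the ratio of unconditional probabilities.

```latex
Q(H^c \cap h) \le Q(H^c) = 0
\;\Longrightarrow\;
Q(H \cap h) = Q(h) - Q(H^c \cap h) = Q(h)
\;\Longrightarrow\;
Q(H \mid h) = \frac{Q(H \cap h)}{Q(h)} = 1 .
```

The argument uses no property of $Q$ beyond $Q(H) = 1$ and $Q(h) > 0$, so no observation can move this credence.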

Propositions 1 and 2 do not exhaust the varieties of finitely additive probabilities that assign positive probability to each comeager set in . For instance, one may recombine the techniques from these two Propositions as follows.

Let be an (immodest) countably additive probability on that assigns positive probability to each finite initial history. Let be a finitely additive probability defined on and obtained by the technique of Proposition 2, such that and agree on . So, , for the hypothesis that the sequence is maximally chaotic. Then, in the spirit of Proposition 1, define as a (non-trivial) convex combination of and : let and define . Then avoids the difficulty displayed by the probability of Proposition 2, discussed above, namely . There is no prior certainty under that the sequence is maximally chaotic.

But has its own difficulties. Here are two. First, the Corollary applies to with the hypothesis : that the sequence is either maximally chaotic or has a well-defined limiting relative frequency. In Figure 1, corresponds to the sequences either in the set corresponding to the point or in the set corresponding to the points with a well-defined limiting relative frequency, where . The failure set for is uncountable and deceptive, though meager. Second, makes all observations irrelevant for learning about the hypothesis : the sequence is maximally chaotic. This follows because

So the posterior credence that the sequence is maximally chaotic takes the same value after every initial history: it is independent of the history observed.
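The history-independence can be checked directly. A sketch under assumptions of ours: write $P = \alpha P_1 + (1-\alpha) P_2$ with $0 < \alpha < 1$; let $h$ be any finite initial history, with $P_1(h) = P_2(h) > 0$; let $H$ be the hypothesis of maximal chaos, with $P_2(H) = 1$; and suppose $P_1(H) = 0$, as holds, for instance, when $P_1$ is exchangeable, since relative frequencies then converge $P_1$-almost surely. Because $P_2(H) = 1$ gives $P_2(H \cap h) = P_2(h)$ by finite additivity,

```latex
P(H \mid h)
  = \frac{\alpha P_1(H \cap h) + (1-\alpha) P_2(H \cap h)}{\alpha P_1(h) + (1-\alpha) P_2(h)}
  = \frac{\alpha P_1(H \cap h) + (1-\alpha) P_1(h)}{P_1(h)}
  = \alpha P_1(H \mid h) + (1-\alpha)
  = 1 - \alpha ,
```

the last step using $P_1(H \cap h) \le P_1(H) = 0$. Since the prior is also $P(H) = \alpha \cdot 0 + (1-\alpha) \cdot 1 = 1-\alpha$, conditioning on any history leaves the credence in maximal chaos exactly where it started.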