Introduction

To introduce students to the library research process, we designed a library research guide applying the Universal Design for Learning Guidelines (CAST, 2018) and accessible design principles. Before embedding this guide in our learning management system, where it would reach over 40,000 students, including over 5,500 students with registered disabilities, we recognized our responsibility to ensure that it was accessible to all of them. Librarians can use accessibility standards, such as the Web Content Accessibility Guidelines (https://www.w3.org/WAI/standards-guidelines/wcag/), to inform and evaluate accessible design, but conformance to these standards does not guarantee accessibility or usability for all users. How, then, can we ensure library research guides are both accessible and usable for students with disabilities? In this paper, we describe our experience conducting an accessible user experience (UX) study of a library research guide to ensure its technical and functional accessibility for all students, specifically centering students with disabilities.

Literature Review

According to the W3C Web Accessibility Initiative (WAI), “Web accessibility means that people with disabilities can equally perceive, understand, navigate, and interact with websites and tools” (2021). The Web Content Accessibility Guidelines define four principles of web accessibility (perceivable, operable, understandable, and robust) and include testable success criteria. Web accessibility evaluation typically centers on testing for conformance to these criteria. However, the guidelines themselves acknowledge that conformance does not ensure accessibility for all types, degrees, and combinations of disabilities. According to WAI (2021), “Combining accessibility standards and usability processes with real people ensures that web design is technically and functionally usable by people with disabilities. This is referred to as usable accessibility or accessible user experience.” Involving users with disabilities not only helps identify accessibility and general usability issues but also highlights usability issues that affect all users (Henry, 2007).

In searching the literature, we found that accessibility studies of library research guides focused on conformance to accessibility standards through manual testing (Chee & Weaver, 2021; Stitz & Blundell, 2018), automated testing (Greene, 2020; Pionke & Manson, 2018), or a combination of the two (Rayl, 2021). Although these studies investigated and informed conformance, they did not include the experiences of students with disabilities.

We found several usability studies of library research guides, but they neither focused on accessibility nor specifically included users with disabilities (Almeida & Tidal, 2017; Conrad & Stevens, 2019; Sonsteby & DeJonghe, 2013; Thorngate & Hoden, 2017). One exception is Rearick et al. (2021), who built accessibility into their study design by conducting usability testing to analyze the Universal Design for Learning elements used in their most-viewed online course guide. They worked with campus organizations to recruit students who might have experienced barriers when using this guide. During their study, at least three participants were not able to perceive content, suggesting that some participants may have had disabilities, although the authors did not state this explicitly.

More broadly, there were several examples of involving users with disabilities in library UX research. Pontoriero and Zippo-Mazur (2019) conducted a survey and focus group on student awareness of library supports and highlighted the importance of including the perspectives of students with disabilities when researching library user experiences. Mulliken (2019) interviewed eighteen blind academic library users about their experiences with libraries and their online resources. The study described participants' challenges in using library websites to search for resources with screen readers, but it focused on only one type of disability. Brunskill (2020) involved students with various disabilities in evaluating a web page that described accessibility services; however, the participants reviewed only the content of the web page, not its accessibility.

Our study builds upon this existing research on library research guides, accessibility evaluation, and usability testing, and it adds to the critical conversations on involving users with disabilities in library UX. Our accessible UX study uniquely centers students with diverse disabilities in the evaluation of a library research guide.

Background

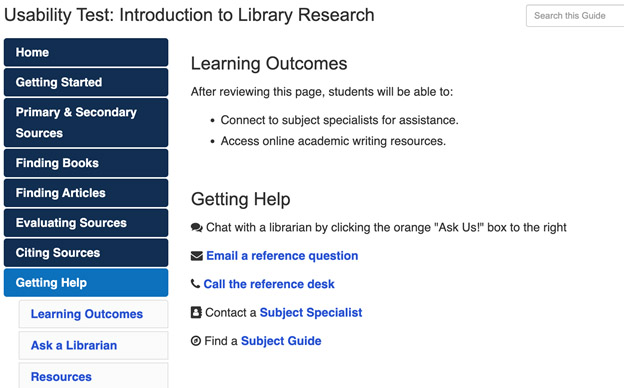

We created the Introduction to Library Research guide using Springshare’s LibGuides platform in February 2018, two years before our study. We designed the guide to orient students to the library research process. In addition to the home page, it includes pages on how to get started, locate and use primary and secondary sources, find a book, find an article, evaluate sources, cite sources, and get help. For this study, we created a duplicate of the guide.

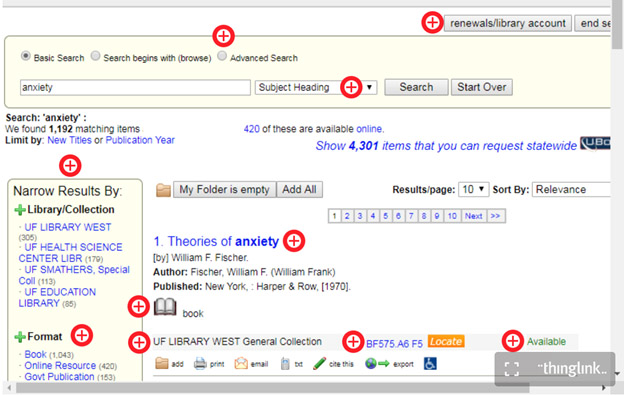

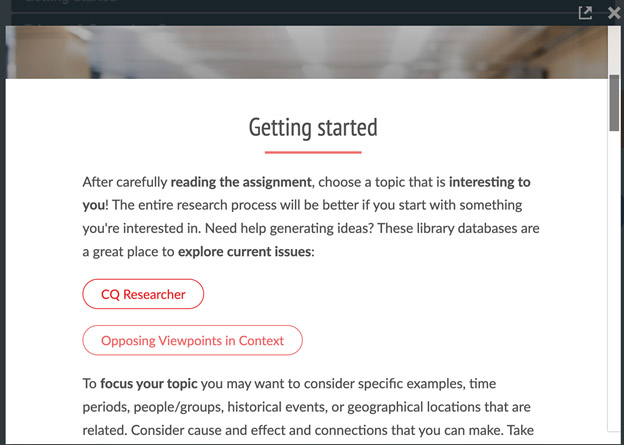

The guide is multimodal, meaning that it represents information through multiple media. According to Universal Design for Learning Checkpoint 2.5 (CAST, 2018), “there is extensive research to support the representation of information through a variety of formats: video, diagram, image, music, animation, and more.” Our guide includes text, embedded videos, and embedded learning objects. For example, to present the information in multiple formats, we embedded an Adobe Spark page on the home page as a dynamic and engaging alternative presentation of the guide’s content. We also included a plain, accessible PDF version that can be printed or read by a screen reader. On the “Finding Books” page, we included a ThingLink, an “education technology platform that makes it easy to augment images, videos, and virtual tours with additional information and links” (ThingLink, n.d.). We used this tool to define and annotate parts of the library catalog interface, allowing users to click on hotspots for additional information about key areas.

Research Questions

While we designed the library research guide applying the Universal Design for Learning Guidelines (CAST, 2018) and accessible design principles, we wanted to ensure the guide was accessible and usable for all students. The following questions guided the study design:

Do students with disabilities perceive the library research guide to be accessible?

Do students with disabilities perceive the library research guide to be usable?

Methodology

Recruitment

The research team collaborated with the Disability Resource Center (DRC) to recruit participants for this study. Eligible participants were undergraduate students with any disability. We did not limit the types of disabilities included, because an inclusive approach would best represent the diverse needs of students. However, we recognized that the study could not represent all disabilities or identify all barriers.

We created a recruitment flyer and placed it in locations frequented by students with disabilities, including the library’s Accessibility Studio and the DRC main office, as well as in the DRC Newsletter. Our collaborator at the DRC also sent the flyer directly to four affiliated student groups. Each participant received a ten-dollar gift card.

The final group of participants included six undergraduate students with disabilities; we included every student who responded to the call for participation. Once recruitment was complete, we shared the participants’ names with the DRC to request a list of the disabilities they represented. To protect privacy and anonymity, the DRC shared only a group-level list of disabilities. Participants’ names were not linked to specific disabilities, interview responses, or any of the audio and screen capture recordings.

Participants

Six undergraduate students with disabilities participated in the study, representing a range of sensory, physical, and cognitive disabilities. Each participant had disclosed at least one of the following disabilities to the DRC: attention deficit hyperactivity disorder, autism spectrum disorder, cerebral palsy, generalized anxiety disorder, major depressive disorder, and visual impairment. Although some disabilities were apparent during the study, we did not associate individual disabilities with participants or their data.

Although six participants represent a small percentage of the over 5,500 students registered with the DRC, this number is in line with usability testing practice: Nielsen (2012) recommends testing with five users to gain insights in a usability study. We recognized that our study alone could not represent the diverse disabilities of all students or address all accessibility issues.

Measures and Materials

Our accessible UX study included three task scenarios and an interview with ten questions (see appendix). The DRC representative reviewed the test script, including tasks and questions, to better ensure our neurodiverse participants would clearly understand the directions. We recorded the tasks and interviews using screen and audio recording tools (Morae software, Yeti USB microphone) for qualitative analysis.

Procedure

In preparation for the study, we asked participants to indicate whether they would need any additional accommodations. Assistive technologies, such as MAGic (computer magnification program), JAWS (screen reader program), and Kurzweil (text-to-speech software), were available to participants through university-wide subscriptions. We also provided an adjustable-height desk, a large-key keyboard, noise-canceling headphones, and a trackball mouse from existing library equipment. In addition, we offered a printed version of the tasks and questions and gave participants the option to provide written responses during the interview.

Throughout the recruitment process, we told all participants that the purpose of our study was to improve the accessibility and usability of a library research guide, that they would interact with the guide to complete tasks (using assistive technologies if needed), and that we would interview them about their experience. We repeated this information when participants arrived and at the beginning of the session to ensure they felt comfortable with the process. All participants provided informed consent before testing began. To limit potential test anxiety, we emphasized to participants that this was not a test and that some barriers could prevent them from completing the tasks.

We asked participants to think aloud while completing the scenarios, a standard usability testing method. Their comments occasionally prompted both the interviewer and the observer to ask follow-up questions, which helped identify issues with the research guide. Participants had 20 minutes to complete the task scenarios, including a flexible, self-directed exploration task that was not rated as a success or failure. Each participant explored the guide in their own way, and all participants indicated they were ready to move on to the interview by the end of the allotted time.

We limited the number of tasks in consideration of assistive technology use and participant energy levels. Participants completed the most important tasks first, followed by an exploratory task for the remaining time, which allowed us to observe how they preferred to interact with the guide. After they completed the tasks, we interviewed participants and encouraged them to refer back to the guide itself to clarify their points.

Once UX testing was complete, we began data analysis by transcribing the six interviews and recording task successes and failures. We analyzed the interview transcripts using deductive thematic analysis (Kuckartz, 2019; Tracy, 2019), drawing our thematic categories from the accessibility principles of WAI (2018) and the usability measures of the International Organization for Standardization (2018). See Table 1 for definitions and examples. Once we had defined the categories, we reviewed and manually coded the transcripts together, frequently checking our codes against the category definitions. We then compiled the coded passages into a list under each thematic category to identify patterns and summarize the results.

Table 1. Thematic categories, definitions, and examples

| Category | Definitions and Examples |
|---|---|
| Perceivable | “Information and user interface components must be presentable to users in ways they can perceive” (WAI, 2018). Examples of perceivable web content include text alternatives, video captions, and sufficient color contrast. |
| Operable | “User interface components and navigation must be operable” (WAI, 2018). Examples of operable web content include keyboard shortcuts, sequential navigation, and descriptive headings and links. |
| Understandable | “Information and the operation of the user interface must be understandable” (WAI, 2018). Examples of understandable web content include predictability, readable text, and consistent navigation. |
| Robust | “Content must be robust enough that it can be interpreted by a wide variety of user agents, including assistive technologies” (WAI, 2018). An example of robust web content is content that is compatible with screen readers. |
| Effectiveness | “Accuracy and completeness with which users achieve specified goals” (ISO, 2018). Relates to completing tasks successfully without errors or assistance. Examples in web content include providing multiple paths to completion and clear, meaningful directions. |
| Efficiency | “Resources expended in relation to the accuracy and completeness with which users achieve goals” (ISO, 2018). Relates to the speed and time needed to complete tasks. Examples in web content include reducing the number of steps and providing clear labels and meaningful shortcuts. |
| Satisfaction | “Freedom from discomfort, and positive attitudes towards the use of the product” (ISO, 2018). Examples of satisfaction include web content that meets user needs and expectations. |
| Other | Insights that do not fit within the existing categories. Examples include specific suggestions for improvement. |
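The bookkeeping behind deductive coding, where every passage must land in a category fixed before analysis, can be sketched in a few lines of Python. This is a hypothetical illustration rather than the tooling used in the study (the transcripts were coded manually); it assumes each coded passage is recorded as a (category, passage) pair, and the function name `group_coded_passages` is invented for this sketch. The sample passages are short excerpts of participant quotes reported in the Findings.

```python
# Deductive categories fixed in advance (Table 1): WAI principles, ISO 9241-11 measures, Other.
CATEGORIES = (
    "Perceivable", "Operable", "Understandable", "Robust",
    "Effectiveness", "Efficiency", "Satisfaction", "Other",
)


def group_coded_passages(coded_passages):
    """Hypothetical helper: group (category, passage) pairs under each predefined category.

    Raises ValueError for a category outside the deductive scheme; anything that
    fits no predefined category is meant to be coded as "Other" instead.
    """
    grouped = {category: [] for category in CATEGORIES}
    for category, passage in coded_passages:
        if category not in grouped:
            raise ValueError(f"Unknown category: {category!r}")
        grouped[category].append(passage)
    return grouped


if __name__ == "__main__":
    # Illustrative pairs only, using excerpts of quotes from the Findings.
    coded = [
        ("Operable", "I thought it was pretty easy to navigate through."),
        ("Satisfaction", "it's a really good base"),
    ]
    for category, passages in group_coded_passages(coded).items():
        print(f"{category}: {len(passages)} passage(s)")
```

Rejecting unknown labels, rather than silently creating new ones, mirrors the deductive design: the category set comes from the standards, and only "Other" absorbs what does not fit.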

Findings

We present our findings in the categories of accessibility and usability identified above.

Perceivable Information and User Interface

Participants experienced notable barriers perceiving the guide’s user interface. One participant had trouble seeing the color blue and could not see the links in either the navigation menu or the body of the guide. This participant also noted that they often rely on a text resizing widget and suggested adding one to the guide. A second participant suggested increasing the font size of the headings. Based on these findings, we increased the font size of the navigation menu, headings, and body text. Although we did not change the color of links, we increased their font weight to make them more visible.
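Link visibility can be partly checked with the contrast formula defined in WCAG 2.1. The Python sketch below implements the WCAG 2.1 definitions of relative luminance (with the 0.03928 threshold used in that version) and contrast ratio; it was not part of our study, and the guide's actual colors are not reported here, so any colors passed to it are hypothetical. The thresholds assume WCAG 2.1 Success Criterion 1.4.3 (at least 4.5:1 between normal text and its background) and WCAG Technique G183 (at least 3:1 between link text and surrounding body text when color alone identifies links). The helper `check_link_colors` is a name invented for this illustration.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel value to linear light (WCAG 2.1 definition)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a '#RRGGBB' color, from 0.0 (black) to 1.0 (white)."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(color_a: str, color_b: str) -> float:
    """WCAG contrast ratio (L1 + 0.05) / (L2 + 0.05), where L1 is the lighter color."""
    lighter, darker = sorted(
        (relative_luminance(color_a), relative_luminance(color_b)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def check_link_colors(link: str, body_text: str, background: str) -> dict:
    """Hypothetical helper: compare a link color against two WCAG 2.1 thresholds."""
    return {
        # SC 1.4.3: normal-size text needs at least 4.5:1 against its background.
        "link_vs_background_ok": contrast_ratio(link, background) >= 4.5,
        # Technique G183: color-only links need at least 3:1 against body text.
        "link_vs_body_text_ok": contrast_ratio(link, body_text) >= 3.0,
    }


if __name__ == "__main__":
    # Hypothetical colors, not the guide's actual palette.
    print(round(contrast_ratio("#767676", "#FFFFFF"), 2))
    print(check_link_colors("#0066CC", "#333333", "#FFFFFF"))
```

A link can pass against the background yet fail against body text; in that case a non-color cue, such as heavier font weight or an underline, carries the distinction. Contrast ratios do not model every difference in color perception, so a check like this complements rather than replaces testing with users.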

Operable User Interface and Navigation

Participants found the guide easy to navigate, mentioning the side navigation menu, clear and relevant headings, and the ability to find the information they needed without getting lost. One participant stated:

I thought it was pretty easy to navigate through. I thought the tabs on the side were clear enough. When you clicked on one, it would take you to a certain part of the page, so you didn’t have to dig through the whole page to find what you wanted, so that was nice.

However, one participant noticed that some sub-menu links jumped to the middle of a guide page where there was no heading, which made it unclear where the needed information was located. In response, we made sure every section had a heading and that each sub-menu link pointed to its corresponding heading. We also added next and previous buttons to help users navigate the guide sequentially.

Understandable Information and User Interface

Overall, participants easily understood both how to use the guide and its content. One participant shared, “The guide was very easy to use and read. Everything worked just like I thought it would, and I’m sure if I was looking for some help with research, this would be a great guide to get started.” However, one participant found both the embedded Adobe Spark page and the ThingLink learning object confusing. We used Adobe Spark and ThingLink, two interactive media tools, to add dynamic content to the library research guide. See Figure 1 for an example of the ThingLink and Figure 2 for an example of the Adobe Spark page. The participant believed that knowing how to read the Adobe Spark page would have helped them better understand the guide.

We did not change the Adobe Spark page, since it offers an alternative format for content already in the guide, but we plan to review it further and possibly integrate its content into the guide itself so that users better understand how to use it. We updated the ThingLink to feature our new discovery tool and added emphasis to its hotspots.

Robust Content and Interpretation

One participant tested the guide’s compatibility with Kurzweil, the text-to-speech software available to students. They found that most of the guide’s content was compatible with the software, but the embedded ThingLink learning object was not. When we created the learning object, we recognized that identifying parts of a user interface in a way that works for users with disabilities is challenging. Although Kurzweil could not read the ThingLink’s text, we kept the embedded object because it integrates Microsoft Immersive Reader. We also highlighted its audio description feature in the directions above the ThingLink on the guide page.

Effectiveness in Achieving Goals

Regarding the usability of the guide, all participants completed the first task scenario, “Navigate through the guide to find the option to ‘ask a librarian’ for help.” However, one participant required guidance, and one participant found the answer on the Adobe Spark page instead of within the guide (see Figure 3). Participants struggled more with task two: “Navigate through the guide to find information on how to search for a book in the library catalog. Find how to check if a book is available in the library catalog.” Three of the six participants required assistance locating the embedded ThingLink learning object, and two others needed guidance using it. We believe that some of this confusion stemmed from the wording of task two itself and did not reflect the guide’s effectiveness. The task asked participants to locate availability information for the book depicted in the embedded ThingLink learning object; it did not, as some participants understood it, require them to search the library catalog.

Despite needing assistance with the first two tasks, participants said in their interviews that the guide was well organized and effective for their learning. One participant stated, “The guide has gone beyond what I had expected. I actually received a lot of extra knowledge from this guide, aiding in my potential research and just having all of these resources available for topic explorations.”

Efficiency in Achieving Goals

Because of our focus on accessibility and participants’ potential use of assistive technologies, we decided not to collect time-on-task data. However, participants were able to review the entire guide within the time provided for exploration (task three), and they noted that what they learned from the guide would help them find information faster. One participant stated, “Links to databases were especially good because then I could get to the scholarly research more quickly than if you just said try looking for some databases. These links are very valuable.”

Satisfaction of User Experience

The participants expressed overall satisfaction with the guide, using statements such as “it’s a really good base” and “it is definitely awesome,” and specifically mentioned that its organization and content met their research needs. One participant stated, “I really liked how getting help was really easy… I looked at everything, and [the guide] has pretty much everything you need to write a research paper, so that’s great.”

Suggestions for Improvement

When invited to share ideas for improvement, participants suggested adding instructional videos, incorporating visual design elements, modifying specific examples, and expanding content. Participants said additional content would be helpful in the sections on evaluating sources, primary and secondary sources, and citation management. One participant also suggested adding an orientation video that explains the guide itself. Based on these suggestions, we added content to several pages, changed examples, and linked to additional resources. We plan to add fully accessible videos in the future.

Limitations

Our study had several limitations, primarily the small number of participants and disabilities represented. For example, our study did not include a participant who relies on a screen reader to access content or one who relies on captions to perceive embedded multimedia. One participant did test the screen reader compatibility of the guide, but because they do not rely on a screen reader, they could still perceive the content without it. As mentioned earlier, we recognize that our study alone does not represent the diverse disabilities of all students. We also recognize that disability can intersect with other marginalized identities in ways that influence how students experience disability, an aspect we did not explore in this study.

Another limitation was the use of a testing lab, which does not represent the authentic experience of users who rely on their own devices and technologies to navigate online. A final limitation was the small number of tasks: although this was an intentional design choice, it limited our evaluation of the guide’s usability.

Conclusion

The study revealed that not all content on the library research guide was fully accessible. Participants identified accessibility barriers, including links that were hard to see and embedded learning objects that confused some participants, one of which could not be read by the assistive technology provided. In terms of usability, all participants found the guide easy to use and effective for learning, and they enjoyed interacting with it.

At a large university with thousands of students with disabilities, online learning content must be accessible and usable. By conducting an accessible UX study, we gained a deeper understanding of web accessibility issues and a greater sense of how our students with disabilities navigate and use the web. This study informed improvements to the guide to make it more accessible and usable for all students.

Not only did this study inform our understanding of accessible design, but it also informed our understanding of accessible research practice. Considerations such as assistive technologies, multiple options for engagement, and flexibility within the test itself can allow for a better testing experience for students with disabilities. In fact, one participant found meaning in the test itself and shared the following in gratitude:

I would just like to say thank you so much, and I mean, it really means a lot that you’re working to improve the accessibility for students with disabilities. I think it’s really noble of you guys because it’s difficult, like I had difficulty, in the beginning, doing the tasks. It’s just that I think that is a great direction to take, and that is reflected upon the care that you guys have for students with disabilities and just accessibility in general.

This statement aligns with the finding that involving students with disabilities in user testing benefits the individual student and the broader campus community (Brown et al., 2020). We hope that practitioners consider involving students with disabilities in their work to ensure accessibility and usability for all learners.

Key Takeaways

The following points are suggestions for conducting your own accessible UX study:

Design the research study, and all related materials, with accessibility in mind, building in flexibility and providing options when possible (e.g., include all study details on recruitment flyers).

Partner with the Disability Resource Center or other accessibility experts on campus to assist with research design and recruitment (e.g., communication with student organizations).

Communicate all details of the study and provide opportunities for participants to ask questions and share their accessibility needs throughout the study (e.g., pause for questions during interviews).

Remove any known barriers and provide assistive technologies in the test environment (e.g., provide an adjustable-height desk).

Acknowledgements

We would like to acknowledge the significant contributions of our research partners Muhammad Rehman, Beth Roland, and Swapna Kumar. We’d also like to thank the students who participated for helping make our work more accessible. Finally, we’d like to thank the editors for making this research open and accessible to practitioners in our field.

References

Almeida, N., & Tidal, J. (2017). Mixed methods not mixed messages: Improving LibGuides with student usability data. Evidence Based Library and Information Practice, 12(4), 62–77. https://doi.org/10.18438/B8CD4T

Brown, K., de Bie, A., Aggarwal, A., Joslin, R., Williams-Habibi, S., & Sivanesanathan, V. (2020). Students with disabilities as partners: A case study on user testing an accessibility website. International Journal of Students as Partners, 4(2), 97–109. https://doi.org/10.15173/ijsap.v4i2.4051

Brunskill, A. (2020). “Without that detail, I’m not coming”: The perspectives of students with disabilities on accessibility information provided on academic library websites. College & Research Libraries, 81(5), 768–788. https://doi.org/10.5860/crl.81.5.768

CAST. (2018). Universal design for learning guidelines version 2.2. https://udlguidelines.cast.org/

Chee, M., & Weaver, K. D. (2021). Meeting a higher standard: A case study of accessibility compliance in LibGuides upon the adoption of WCAG 2.0 guidelines. Journal of Web Librarianship, 15(2), 69–89. https://doi.org/10.1080/19322909.2021.1907267

Conrad, S., & Stevens, C. (2019). “Am I on the library website?”: A LibGuides usability study. Information Technology and Libraries, 38(3), 49–81. https://doi.org/10.6017/ital.v38i3.10977

Greene, K. (2020). Accessibility nuts and bolts: A case study of how a health sciences library boosted its LibGuide accessibility score from 60% to 96%. Serials Review, 46(2), 125–136. https://doi.org/10.1080/00987913.2020.1782628

Henry, S. L. (2007). Just ask: Integrating accessibility throughout design. http://www.uiaccess.com/accessucd/

International Organization for Standardization. (2018). Ergonomics of human-system interaction—Part 11: Usability: Definitions and concepts (ISO Standard No. 9241-11:2018). https://www.iso.org/standard/63500.html

Kuckartz, U. (2019). Qualitative text analysis: A systematic approach. In G. Kaiser & N. Presmeg (Eds.), Compendium for early career researchers in mathematics education (pp. 181–197). Springer. https://doi.org/10.1007/978-3-030-15636-7_8

Mulliken, A. (2019). Eighteen blind library users’ experiences with library websites and search tools in U.S. academic libraries: A qualitative study. College & Research Libraries, 80(2), 152–168. https://doi.org/10.5860/crl.80.2.152

Nielsen, J. (2012). How many test users in a usability study? Nielsen Norman Group. https://www.nngroup.com/articles/how-many-test-users/

Pionke, J. J., & Manson, J. (2018). Creating disability LibGuides with accessibility in mind. Journal of Web Librarianship, 12(1), 63–79. https://doi.org/10.1080/19322909.2017.1396277

Pontoriero, C., & Zippo-Mazur, G. (2019). Evaluating the user experience of patrons with disabilities at a community college library. Library Trends, 67(3), 497–515. https://doi.org/10.1353/lib.2019.0009

Rayl, R. (2021). How to audit your library website for WCAG 2.1 compliance. Weave: Journal of Library User Experience, 4(1). https://doi.org/10.3998/weaveux.218

Rearick, B., England, E., Lange, J. S., & Johnson, C. (2021). Implementing universal design for learning elements in the online learning materials of a first-year required course. Weave: Journal of Library User Experience, 4(1). https://doi.org/10.3998/weaveux.217

Sonsteby, A., & DeJonghe, J. (2013). Usability testing, user-centered design, and LibGuides subject guides: A case study. Journal of Web Librarianship, 7(1), 83–94. https://doi.org/10.1080/19322909.2013.747366

Stitz, T., & Blundell, S. (2018). Evaluating the accessibility of online library guides at an academic library. Journal of Accessibility and Design for All, 8(1), 33–79. https://doi.org/10.17411/jacces.v8i1.145

ThingLink. (n.d.). ThingLink for teachers and schools. https://www.thinglink.com/en-us/edu

Thorngate, S., & Hoden, A. (2017). Exploratory usability testing of user interface options in LibGuides 2. College & Research Libraries, 78(6), 844–861. https://doi.org/10.5860/crl.78.6.844

Tracy, S. (2019). Qualitative research methods: Collecting evidence, crafting analysis, communicating impact (2nd ed.). Wiley-Blackwell.

W3C Web Accessibility Initiative (WAI). (2018). Web Content Accessibility Guidelines (WCAG) 2.1. https://www.w3.org/TR/2018/REC-WCAG21-20180605/

W3C Web Accessibility Initiative (WAI). (2021). Accessibility, usability, and inclusion. https://www.w3.org/WAI/fundamentals/accessibility-usability-inclusion/

Appendix

User Experience Tasks

Task 1: Navigate through the guide to find the option to “ask a librarian” for help.

Task 2: Navigate through the guide to find information on how to search for a book in the library catalog. Find how to check if a book is available in the library catalog.

Task 3: Review the content of the guide for the remaining minutes. You will be asked to share your experience using the guide, but you will not be tested on the content itself.

Interview Questions

How was your experience using the library research guide?

What did you like about the research guide? What worked well for you?

How well were you able to navigate through the research guide?

Do you think that the research guide was organized effectively?

Did you face any difficulties while using the research guide? If yes, please share them in detail.

Did you use assistive technology to access the content of the research guide?

Was there anything that was not accessible to you?

Was any of the content confusing to you?

Do you think the guide is effective in learning about library research?

What do you think can be improved?

Is there anything else you’d like to share with us about the guide?