Context

A large student population, combined with increasing demands on library personnel workload, made it difficult for our university library to reach all of its library users with a traditional, in-person library orientation. In response, the library created a gamified experience called LibGO, short for library game orientation. Long before we wrote any game code, however, LibGO began with design thinking.

IDEO’s design-thinking process (Kelley & Littman, 2001) and Norman’s (2013) user-centered design approach have been the bases for many recent innovative practices within a range of disparate industries, including libraries. According to IDEO, design thinking is both an approach and a mindset, “a human-centered approach to innovation that draws from the designer’s toolkit to integrate the needs of people, the possibilities of technology, and the requirements of business success” (IDEO, n.d., para. 1). With the goal of improving the user experience (UX), the five phases of design thinking can aid in the creation of successful designs, even in difficult projects such as gamifying a library orientation.

This case study addresses the critical role of design thinking in the production of LibGO. After reviewing literature on broadly applicable gamification mechanics, and narrowly looking at existing UX and gamification in libraries, we discuss the importance of the five phases of design thinking. This discussion is followed by the methodologies we used to create a gamified library orientation experience through an iterative design process involving the phases of empathize, define, ideate, prototype, and test. We emphasize the fourth and fifth phases, in which we used a minimal amount of coding as well as iterative usability testing prior to launch. We describe a sequence of issues we encountered during playtests and discuss remedies we took to balance storyline, content, educational objectives, and gameplay.

We completed the design and development of LibGO with limited UX and coding expertise, and so we hope to inspire other novices to make similar attempts at improving the user experience.

Literature Review

Gamification Mechanics

The definition of games or gaming is dependent upon the industry (Pagulayan et al., 2008), and can set the tone of the product. Commercial games for the entertainment industry do not have to balance entertainment with learning objectives, as do educational games. “Games are usually more colorful, wacky, and escapist than productivity applications, with the inclusion of interesting storylines or animated characters” (Pagulayan et al., 2008, p. 742).

Sailer et al. (2017) defined gamification as the implementation of game design elements for non-gaming purposes. Their case study identified seven key elements that are visible to players: points, badges, leaderboards, performance graphs, meaningful stories, avatars, and teammates. Usage of such game design elements “modifies that environment, thereby potentially affecting motivational and psychological user experiences” (p. 374). These elements suggest that gamification may affect motivational outcomes and can be a solution for learning contexts if well-designed.

Elements of interaction, engagement, and immersion are key to a gamified experience (Gunter et al., 2008). The field of human-computer interaction is both user-centered and iterative, using common practices of ethnographic research, prototyping, and testing. In general, a prototype is a representation of an idea, and prototypes can take many forms, from a simple sketch on paper to a fully functioning artifact. Prototypes are quick and inexpensive ways to uncover design issues before significant time and money are put into the development of the final product. Prototype design and playtesting support human-computer interaction in game design by providing users with an early look at the final product, as well as allowing designers the opportunity to identify functional requirements and performance issues early on (Beaudouin-Lafon & Mackay, 2008).

Compared with usability tests or online survey research, playtests are advantageous to game designers because they provide actionable feedback derived from users’ hands-on play experience (Pagulayan et al., 2008). Their primary focus is on player perceptions, which supports the iterative process that is key to human-computer interaction and UX design.

Most successful games have a storyline, allowing players to have a sense of engagement. There are two main perspectives: the designer’s story and the player’s story (Rouse, 2001). The designer’s story is the storytelling built into the game, cueing the player as to purpose and content: “Some games have stronger designer’s stories, such as adventure and role-playing games, while others have little to no designer’s story, such as classic arcade games like Pacman and puzzle games like Tetris” (Winn & Heeter, 2007, para. 12). The player’s story is present in every game, and the storyline drives it. Players find a storyline to be most meaningful when it engages their social groups; games can accomplish this objective through ethnographic research-based persona creation. A persona is based on a fictional character and is derived from a profile of social attributes of the target audience; game developers then use these personas to drive storylines and player goals.

Other successful characteristics of game design are replayability (Prensky, 2001) and scoring; these are immersive activities whereby players feel connected to the game. Developers boost replayability through multiple avenues to completing the game’s goals as well as the insertion of random elements into the game, stimulating player interest (Winn & Heeter, 2007). Points or a scoring system can replace the simple count of right versus wrong choices and heighten the feeling of competitive play.

Commercial gaming relies on an iterative process of prototyping and playtesting (Salen & Zimmerman, 2006), which is even more necessary for designing great learning games that present complexity in their pedagogy (Winn & Heeter, 2007). Regardless of game purpose, playtesting helps designers refine their game’s mechanics by moving from the theoretical to actualized successes or failures. Although game-based learning is frequently associated with video games, libraries often prefer to focus on a more general application of both UX methods and digital games (Long, 2017).

UX and Design Thinking in Libraries

UX and design thinking have increasingly entered the library literature in the past ten years, and can help libraries address usability concerns and adapt to changes in user behaviors and preferences. For example, the University of Technology Sydney adapted the design thinking process from Stanford’s design school to create a five-step process for improving wayfinding in academic libraries (Luca & Narayan, 2016). Porat and Zinger (2018) discussed their success in improving the library’s discovery tool through usability studies. Library literature also discusses the use of personas in understanding the library user’s experience (Sundt, 2017; Zaugg & Ziegenfuss, 2018) and how the psychology of UX can help us question assumptions about typical library users (Fox, 2017). These are just some of many examples of applying UX methods, including wayfinding, navigation and discovery, to enhance the experiences of library users.

User-centered design, UX, and library games often appear together in the literature. Somerville and Brar (2009) discussed the successful outcome of a user-centered approach to digital library projects, one that emphasized focus groups, ethnographic studies, and participant observation in addition to iterative design and prototyping. The design experience in this study served to acquaint its librarians with systems design and usability testing principles, new roles for these information professionals. Addressing the user perspective can therefore not only help library users but aid in the further professional development of the library staff.

Gamified Library Experiences

Narrowing in on library games yields examples of gamified learning activities focused on the research process (Long, 2017), library instruction (Battles et al., 2011), plagiarism, library anxiety (Reed & Caudle, 2016), information literacy (Guo & Goh, 2016), and library orientation or tours (Smith & Baker, 2011). The literature shows a high use of prototyping in university libraries (Long, 2017; Guo & Goh, 2006; Ward, 2006); however, these were primarily for website design or website usability projects and not for gamification of library activities.

Long (2017) described how librarians at Miami University aspired to create an engaging and interactive tutorial that was easy to build and maintain with little learning curve. In order to address the challenge of teaching the research process in an online tutorial format, the library ran pilot tests for two text-based tutorials—with Google Sites and Twine—and a video-based tutorial with Adventur. Although gamifying the research process through these pilots proved fruitful for promoting fun and learning support, Long pointed out the need for future adaptations to address evolving technologies and accessibility concerns.

Librarians at Utah Valley University (UVU) created two self-paced library orientation games (Smith & Baker, 2011). Students played the game LibraryCraft online; it guided them around the library’s website in a type of medieval quest based on World of Warcraft. The authors solicited feedback from library student employees on many factors: artwork, storylines, wording of text, and overall UX. Of the fifty-two survey respondents, 84.5 percent indicated that the game taught them a new library skill, while 56 percent reported difficulty using the game. Interestingly, LibraryCraft was not as popular as the library’s physical game, Get a Clue; the UVU authors attributed this difference to the complexities of digital development and launching an online game during a busy time of the year. Perhaps most impressive about the game development at UVU was their communication plan and small focus groups with students (Baker & Smith, 2012). Additionally, this online game did not require a download in order to play, making it more accessible to library users.

Guo and Goh (2016) employed a user-centered design process to create an information literacy game to counteract the increasing numbers of students unwilling to approach librarians when encountering difficulty searching. While designing Library Escape, the authors facilitated design workshops, created prototypes, and solicited feedback. The design workshops had participants familiarize themselves with two existing game-based learning activities at different institutions; participants then discussed what they liked and disliked about these games, which informed the design of Library Escape. Once the game was completed, user testing feedback on Library Escape indicated the need to change presentation formats for this role-playing game, avoiding time-consuming introductory videos so that players could start playing more quickly. The game itself could take an hour or more to play, a large time commitment for players. It focused on library instruction rather than library orientation.

University of Kansas students designed a digital game for the university libraries to help address library anxiety among undergraduates; it was implemented in the first-year experience curriculum (Reed & Caudle, 2016). The student developer team used Twine software, wrote the game storyline, and created animated GIFs. Impressively, the game is still active and accessible to users not affiliated with the university. Another noteworthy feature is that in addition to the HTML file used to play the game in a browser, the authors also made a README file available which explains the game in more detail.

The University of Alabama Libraries created the web-based alternate reality game, Project Velius, as an interactive form of traditional library instruction (Battles et al., 2011). The authors cited several resourcing challenges in producing the game, such as librarian lack of experience with online game development, as well as librarian workload—developers had to produce the game in addition to their regular duties.

Although there are several citations in the literature on the use of gamified experiences in libraries, those studies tend to focus on the context of the game—for example, research instruction—and not on the development process. Libraries tend to describe the results once they launch a product or service, and not the process it took to get there. Most significantly, there is a lack of discussion of UX design methods, particularly of iterative prototypes and playtesting prior to final product launch. We seek to fill this gap by documenting the development approach, including the iterative prototyping and testing phases of LibGO.

Methodology and Development Approach

Taking a user-centered design approach with the five phases of design thinking, as well as emphasizing iterative development and testing, yielded an achievable development framework for gamifying the library orientation experience. This is one method to look at the broader concept of UX, which covers a user’s feelings, attitude, and behavior while using a product, service, system, or space. The combination of UX and design thinking ensures that the process of collecting research on user needs and applying that synthesized knowledge will effectively yield enhanced user satisfaction.

According to the Stanford d.school, the full design cycle includes five phases: empathize, define, ideate, prototype, and test (see fig. 1). Suggested resources are available in appendix A.

Although we describe the five design thinking phases linearly, the process itself is circular and iterative. During any phase, new discoveries can influence the decision to reconsider work done in previous phases. Below, we briefly describe each phase, including general project applications as well as its specific application to LibGO.

Empathize

The first phase involves empathizing with users. For libraries this means learning, observing, and understanding how library users feel about a product or service. Literature demonstrates that the “not knowing” feeling is common, especially among users who have never participated in library instruction classes that may provide a brief orientation and tour of the library. As applied to LibGO, we found overwhelming evidence of users not knowing which library resources were available to them. We collected these frustrations over the years through librarian observation and student survey feedback. Library orientations can counteract the library anxiety—including the struggle of how to locate buildings, items, and services—affecting new and returning student and faculty library users (Luca & Narayan, 2016).

Define

The second phase involves defining the problem. With the knowledge gained by empathizing with the library users in the first phase, we reflected on user behaviors, needs, and desires. We defined the problem as: reaching all types of library users with a traditional, in-person library orientation is not possible. How do we reach users beyond first-year students, who are the main recipients of in-person orientation, and how do we make the orientation information engaging and relevant?

In this phase, we also focused on the project broadly with game objectives, logistics, event launch, budget, staffing, and documentation of processes and workflows (see appendix B). At this point, there was no game development, but rather a step of defining the processes, workflows, and division of responsibilities needed to achieve the goals of helping the library users. For example, we defined launching and marketing of the product in the workflows. We had to decide whether to help users with the defined problem through an event, an online environment, or even both. These event and online experiences required preparations to target potential users and included tasks such as recruitment emails and marketing drafts, institutional review board approval, and event design.

We also addressed potential staff needed throughout the process in this phase. Getting student workers to help run aspects of the event, as well as to encourage other students to participate in-person, can be a very effective means of delegating the workload. Recruiting participants can be time-consuming as it concerns marketing, dealing with no-shows, offering incentives, and providing physical and web space for testing (Mitchell & West, 2016); thus, it is important to reflect on a project timeline and include potential partners when available.

At this point, the formal and informal student surveys demonstrated that the user population wanted online access to resource knowledge and that they were more likely to use such a product if it were engaging. We discussed possible solutions including trivia games, tutorials, and virtual reality.

Ideate

The third phase, ideate, involves brainstorming solutions to the defined problem. We considered many solutions, including user engagement, grounded in an empathic approach to increasing library user interest and exploration of library resources. This approach sparked the initial concept of a university trivia game as a way to pilot the use of Twine, an open-source tool for creating non-linear stories. We selected Twine for its ease of access, affordability, and low learning curve; it uses HTML, CSS, and JavaScript. We used the default Twine theme even though it presented color contrast challenges for text-based activities.

We designed a small pilot with five questions, each with multiple choice answers and related images for graphical appeal. Three colleagues played the game and gave feedback on the potential use of this software for a library-based engagement activity. The feedback came from people with varying academic backgrounds and roles. Although informal and anecdotal, all feedback demonstrated that the pilot showed promise but that several areas needed improvement: refinement of the storyline and greater visual appeal were necessary for a more enjoyable game experience. This quick and generalized feedback during the ideation phase led to further research and collaborative efforts that informed the game-development process.

Next, the focus transitioned from a trivia game to a more game-like experience, similar to a “choose-your-own-adventure” theme. We drafted a generic storyline and developed personas for the characters needed to create a personalized adventure. We, the game developers, are not programmers, but rather two librarians whose strengths lie in research design and digital libraries. Combining these skills with UX methodologies enabled us to design working prototypes based on our internal workflows (as detailed in appendix B).

We considered a variety of potential users for the game experience. Our institution is experiencing increasing numbers of transfer, graduate, and online students—groups who often have difficulty attending a traditional in-person library orientation tour. Similarly, faculty, staff, and community members are users of the library who do not get the opportunity to join classroom-based library instruction or new-student tours. Therefore, we expanded the intended audience for this game design to include individuals who are not part of the groups typically served in library orientation tours.

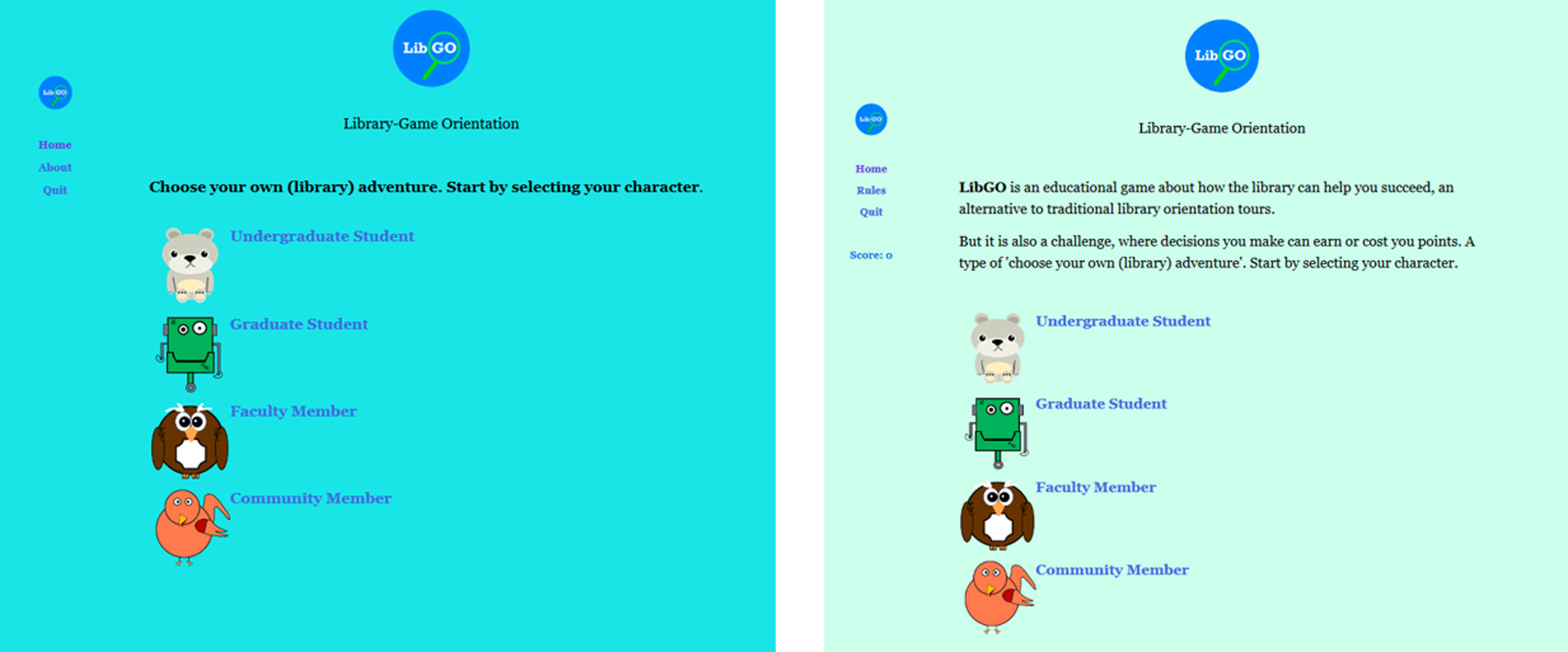

With the general storyline, target population of users, and goals of the game in place, the actual game design began by looking at university publications that gave a sense of the student, faculty, and community population. From the data in the Middle Tennessee State University Fact Book (2017), an annual publication available online, we created personas based on the four major groups identified as the intended audience: undergraduate students, graduate students, faculty, and community members (see fig. 2). We identified point-of-interest (POI) destinations, which were locations, services, or resources located throughout the library. We analyzed these POIs for the different information needs of the four personas, resulting in shared and specific POIs in response to the character path the game user selected.

Prototype

The fourth phase involves creating prototypes of the possible solutions formed during the ideate phase. Rather than making one prototype, this phase included both low-fidelity and high-fidelity prototypes to facilitate the early evaluation of designs.

A prototype can have varying levels of fidelity. Low-fidelity prototypes are often quick sketches on paper, a low or no-cost hypothesis that can easily be tested and modified. High-fidelity prototypes often cost more to produce—both time to create and cost of materials—and reflect artifacts that more closely resemble the final look and/or function of the final prototype or product. Regardless of fidelity level, developers are meant to iteratively test and modify prototypes in order to weed out design issues and evaluate the effectiveness of the product.

By pushing together large tables, we created a surface large enough to hold twenty-four pieces of 8.5 x 11 inch paper in seven rows. As a low-fidelity prototype, each paper represented a screen of a POI in the LibGO journey, with rectangular paper strips cut into varying sizes to show directional pathway options for each prototype screen. The prototype also included printouts of image possibilities for each page, allowing for easy viewing and swapping out of images for testing the look and flow of the paper prototype. Our interactions with this level of prototyping in the early stages of game design led to discussions on the POIs common among the user groups and on how to simplify the design.

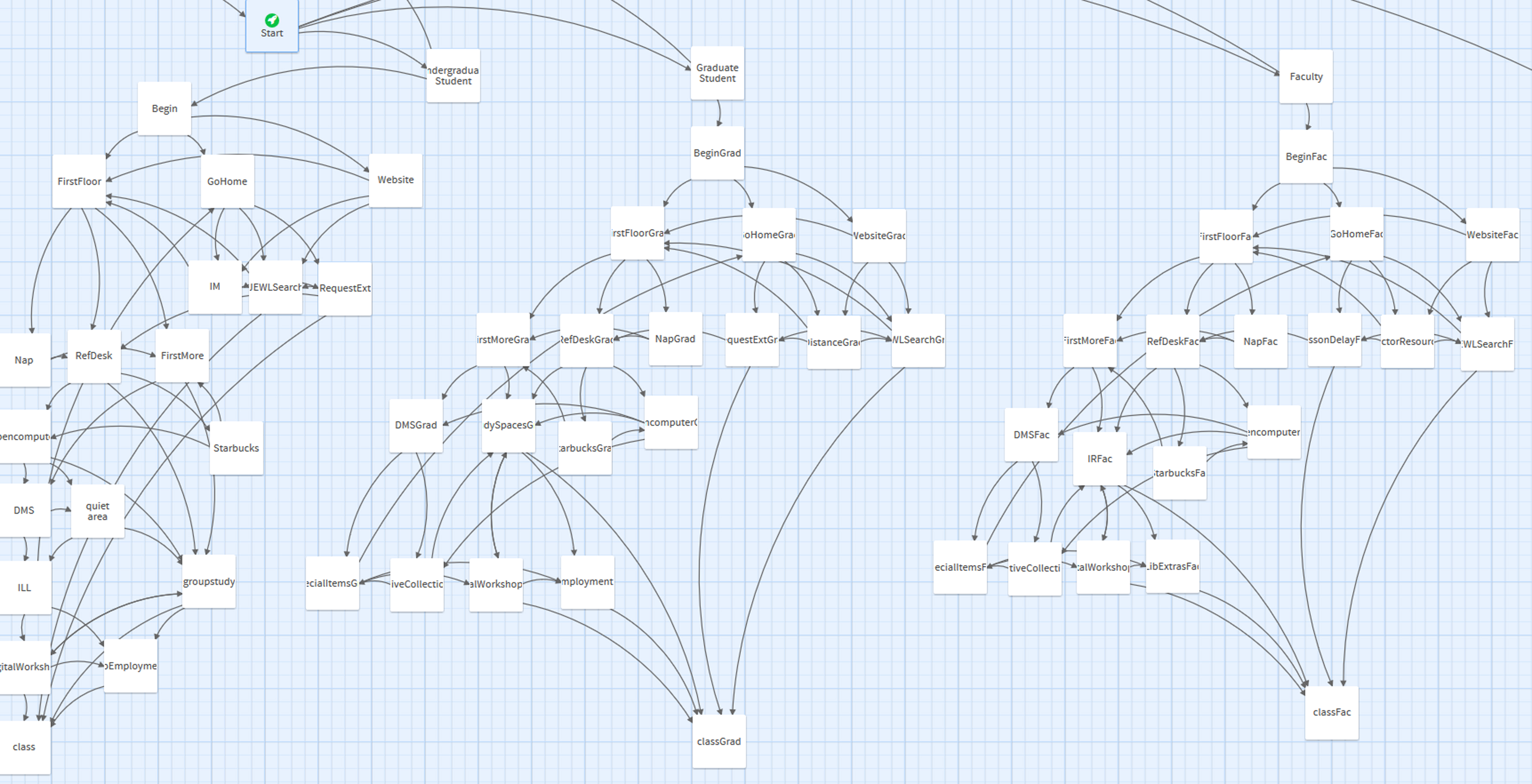

The resulting solution set the common POIs as fixed locations—regardless of character selected—and restricted the data flow to only four levels of character advancement opportunities; these decisions required fewer changes to the code. This evolved into a smaller representation of prototypes that were more manageable and are represented in the data flow diagram in Figure 3.

Game developers have many options for creating data flow diagrams:

LibreOffice Draw (https://www.libreoffice.org), free downloadable presentation software;

Microsoft PowerPoint, commercial presentation software;

Lucidchart (https://www.lucidchart.com/pages/), free, in-browser draw software;

proto.io (https://proto.io), free trial or subscription, in-browser draw software;

diagrams.net (https://app.diagrams.net/), free, in-browser draw software.

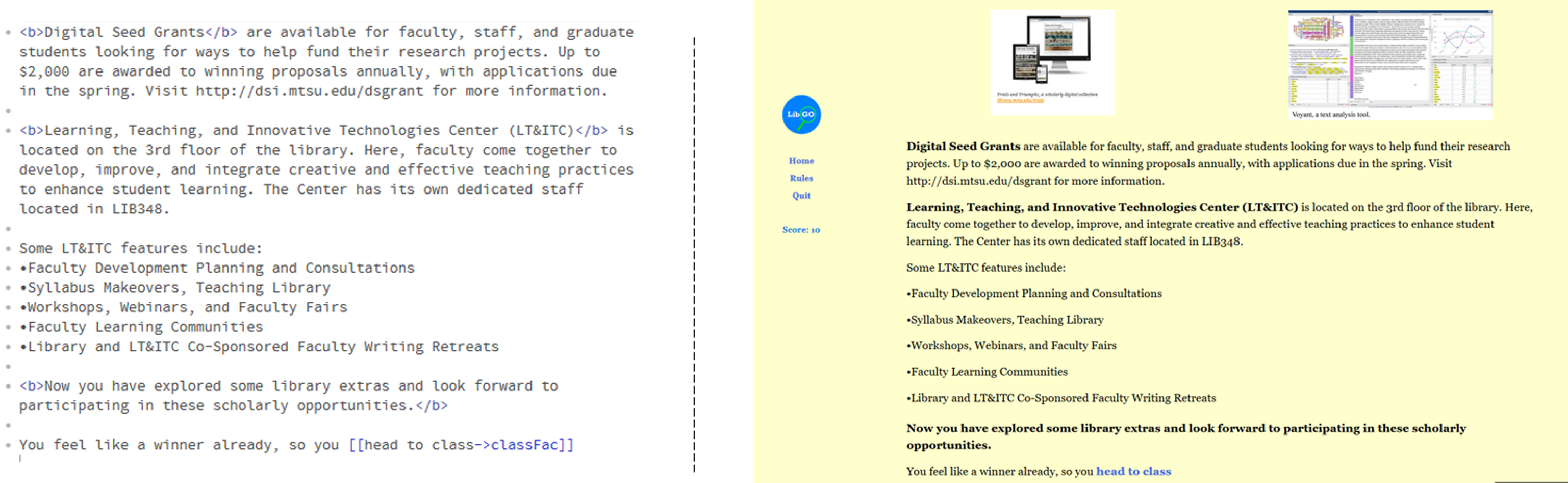

Sample single screen data.

Example of a single screen of the data flow diagram for the Graduate character. Image on the left is one screen of the diagram workflow with script and semi-code notes in low-fidelity form. Image in the upper right is a screenshot of the corresponding high-fidelity prototype.

We replicated the data flow diagram and low-fidelity prototyping for each character. The arrows in the data flow diagram show the possible directional pathways, color-coded to each page. The format and layout of the data flow was the same for each character; however, the content of the pages may have had differences. The semi-uniformity we describe here enabled simplification of coding, keeping the game development manageable for novice developers.
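The semi-uniform structure described above can be illustrated with a minimal Python sketch. It is not the LibGO code, which was built in Twine. The screen names, page text, and the reading of "four levels" as a depth limit on the flow are all assumptions made for illustration. The sketch keeps one shared layout of screens and overrides only the page text per character.

```python
from collections import deque

# Hypothetical layout shared by every character: each screen maps to the
# screens a player can move to next. Screen names are placeholders.
SHARED_FLOW = {
    "character_select": ["shared_poi_1", "shared_poi_2"],
    "shared_poi_1": ["character_poi"],
    "shared_poi_2": ["character_poi"],
    "character_poi": ["finish"],
    "finish": [],
}

# Hypothetical page text. Shared POIs read the same for every character;
# only the character-specific screen is overridden per persona.
SHARED_TEXT = {
    "character_select": "Choose your character.",
    "shared_poi_1": "Text for a POI that every character visits.",
    "shared_poi_2": "Text for another common POI.",
    "character_poi": "Placeholder text.",
    "finish": "Final score screen.",
}
CHARACTER_TEXT = {
    "Undergraduate": {"character_poi": "Text for an undergraduate POI."},
    "Graduate": {"character_poi": "Text for a graduate POI."},
    "Faculty": {"character_poi": "Text for a faculty POI."},
    "Community": {"character_poi": "Text for a community POI."},
}


def screen_levels(flow, start):
    """Breadth-first walk returning each reachable screen's level (start = 1)."""
    levels = {start: 1}
    queue = deque([start])
    while queue:
        screen = queue.popleft()
        for nxt in flow[screen]:
            if nxt not in levels:
                levels[nxt] = levels[screen] + 1
                queue.append(nxt)
    return levels


def build_story(character):
    """Combine the shared layout with one character's page text."""
    text = {**SHARED_TEXT, **CHARACTER_TEXT[character]}
    return {
        screen: {"text": text[screen], "links": SHARED_FLOW[screen]}
        for screen in SHARED_FLOW
    }
```

Calling `build_story("Graduate")` and `build_story("Faculty")` yields the same links on every screen and the same text on shared POIs, which is why a change to the layout only has to be made once.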

We applied the changes discussed during the exploration of the paper prototype to the high-fidelity prototype, which had its own data flow diagram within the Twine software (see fig. 5). Prototyping for each character continued at both low- and high-fidelity, as new iterations of construction and testing revealed areas of incremental improvement. To see an example of the high-fidelity prototype from the developer’s view and the user’s view (the user interface), see Figure 6.

In the left image of Figure 6, notice the `<b>Digital Seed Grants</b>`. This uses HTML to bold the words between the tags `<b>` and `</b>`. HTML has a lower learning curve than programming languages, and creators can use it to aid in piloting gamified experiences in Twine, as we did with LibGO.

Test

The fifth phase involves an iterative cycle of testing the prototype and making modifications. This testing happens before implementation of the final product, service, or space. Once we had a functional high-fidelity prototype, two rounds of playtesting commenced. Usability testing is ideally done in multiple rounds with a small number of participants, with three to five users as a target number, in order to have time and a budget to test more design iterations (Nielsen, 2012). Accordingly, four to five playtesters participated in each round of LibGO testing. We selected participants with varying backgrounds, skills, and roles from each department in the library—public services, collection development, technology, and administration—and we included student workers representing a range of academic disciplines. Both rounds of playtesting used a pre- and post-playtest survey that asked for participant consent. The playtest, the surveys, and a final post-launch survey received institutional review board approval.

The pre-playtest survey first asked playtesters to list the computer model, browser, and character they would be testing. The survey then asked the participant to identify the average number of hours per week they played games—of any kind—and the start time of their playtest so we could later determine a completion time range. The post-playtest survey asked the playtesters to record the time they finished playing LibGO and had a set of fifteen questions that gathered initial perceptions, detailed accounts, and final reactions, along with an optional open-response question. Given the feedback and observational reactions during round 1 of playtesting, we added one more question to the post-test survey used in round 2.
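As a rough illustration of how a completion time range could be derived from the recorded start and finish times, the following Python sketch subtracts one from the other and reports the shortest and longest sessions. The time format and the sample values are hypothetical and are not data from the LibGO playtests.

```python
from datetime import datetime

# Hypothetical survey entries: (start time, finish time) as HH:MM strings.
# These are illustrative values, not data from the LibGO playtests.
SAMPLE_TIMES = [("10:05", "10:21"), ("10:07", "10:19"), ("13:30", "13:52")]


def minutes_played(start, finish, fmt="%H:%M"):
    """Return whole minutes between two same-day clock times."""
    elapsed = datetime.strptime(finish, fmt) - datetime.strptime(start, fmt)
    return int(elapsed.total_seconds() // 60)


def completion_range(entries):
    """Return the (shortest, longest) playtime in minutes."""
    durations = [minutes_played(start, finish) for start, finish in entries]
    return min(durations), max(durations)
```

The sketch assumes each session starts and ends on the same day, which fits a short in-library playtest.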

We adapted playtest questions from Patton (2017), and we wrote them to address the goals of our original research study on gamifying library orientation. A copy of the pre- and post-playtest survey is available in appendix C. To summarize, the sixteen post-playtest questions included:

five open-response answers to questions about initial perceptions of LibGO;

six questions on detailed accounts of play, five yes/no and one free-text to document the playtester’s final score;

four questions on final reactions, two no/maybe/yes options and two Likert-scale;

one open response field for the playtester to provide additional feedback.

Results of Iterative Prototyping and Testing

To review, discovering user needs and empathizing with the users’ access to and anxiety over the library orientation led to the definition of the storyline. User research confirmed the need for multiple viewpoints, so we applied multiple personas of library users. The low-fidelity prototypes facilitated improvement of the game’s storyline and the pathway of each persona, and these turned into high-fidelity prototypes. These prototypes underwent iterative testing before launch to the public.

This section describes the playtesting. In usability studies, this phase seeks to analyze data and observations, so preliminary analysis after each test can identify hotspots—the worst problems—allowing designers to immediately address these issues (Rubin & Chisnell, 2008).

Playtest Analysis

Both rounds of playtesting used surveys to understand the prototypical experiences of the intended LibGO users. Prior to starting the usability and playtesting for each round, we administered a pre-playtest survey which gathered demographic information including the device, browser, and character selected for playtesting by the user, as well as the average number of hours per week the user spent playing games of any kind—board, video, and computer. After completion of this survey, users began playing LibGO, during which we observed their reactions and behaviors. After completion of LibGO, the playtesters took the post-playtest survey. The post-playtest data collection included both quantitative and qualitative measurements, which we report on below.

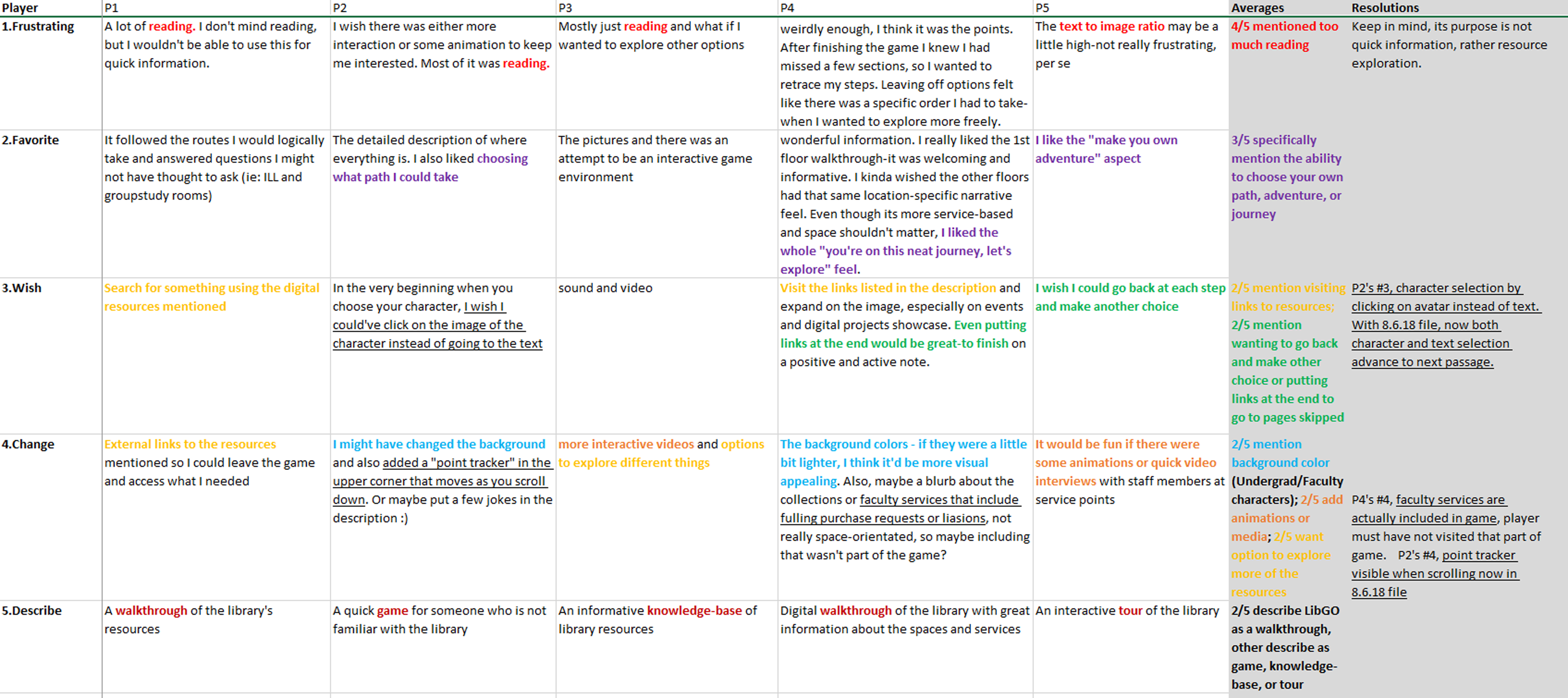

We used a form of affinity diagramming for both rounds of playtesting in order to formulate common themes from the same prompt and analyze the playtesters’ responses to each qualitative question. See Figure 7 for a screenshot of a sample coding of themes. This type of coding, not to be confused with computer programming, is the process of generating a word or short phrases—the codes—and then assigning them to sections of transcripts derived from interviews or surveys; it is a method of interpreting the meaning of textual content. Codes can be pre-defined or interpretive. Examples of interpretive codes include codes for attitudes toward something or consequences that result from acting on something (Asher & Miller, 2011).

Iterative analysis and open coding of these themes from round 1 and round 2 informed the design changes to the data flow and final Twine coding of LibGO. Color-coding for similar themes is a useful strategy in affinity diagramming and is most typically accomplished with different colored sticky notes. We found that moving this analysis to spreadsheet form not only allowed for similar trends to emerge as they would on paper, but it also enabled open coding practices with helpful Find and Replace abilities to search for related words within the playtesters’ responses. The purpose of both methods was to discover themes and trends in the data (Asher & Miller, 2011).
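The spreadsheet search described above can be approximated in a few lines of Python. This sketch is only an illustration of keyword-assisted coding, not the procedure we followed. The responses are the Round 1 answers to the first post-playtest question, quoted in the findings below. The code labels and keywords are hypothetical examples rather than the themes from our analysis.

```python
# Round 1 answers to "What is the most frustrating aspect of what you just played?"
RESPONSES = {
    "Player 1": "A lot of reading. I don't mind reading, but I wouldn't be able to use this for quick information.",
    "Player 2": "I wish there was either more interaction or some animation to keep me interested. Most of it was reading.",
    "Player 3": "Mostly just reading and what if I wanted to explore other options.",
    "Player 4": "Weirdly enough, I think it was the points. After finishing the game I knew I had missed a few sections, so I wanted to retrace my steps. Leaving off options felt like there was a specific order I had to take-when I wanted to explore more freely.",
    "Player 5": "The text to image ratio may be a little high-not really frustrating, per se.",
}

# Hypothetical codebook: code label -> keywords that suggest it.
# These labels are illustrative, not the themes from the study.
CODEBOOK = {
    "too much text": ["reading", "text"],
    "wants interaction": ["interaction", "animation"],
    "wants free exploration": ["explore"],
}


def assign_codes(response, codebook):
    """Return every code whose keywords appear in the response (case-insensitive)."""
    lowered = response.lower()
    return [
        code
        for code, keywords in codebook.items()
        if any(keyword in lowered for keyword in keywords)
    ]


def code_counts(responses, codebook):
    """Count how many responses received each code."""
    counts = {code: 0 for code in codebook}
    for response in responses.values():
        for code in assign_codes(response, codebook):
            counts[code] += 1
    return counts
```

Substring matching like this only surfaces candidate passages, much as Find and Replace does. A person still has to read each response and decide whether the code applies.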

Round 1

Round 1 playtesting included five playtesters selected from the library, all of whom tested LibGO on a Windows computer in the library. Pre-playtest survey results in Table 1 indicate the browser playtesters used to play LibGO, the average number of hours per week they spent gaming, and their selected character. Note that the character selection does not necessarily represent the role of the individual playtester, as this group consisted of faculty, staff, and student workers from the library.

Playtesting round 1: pre-playtest.

| Round 1 Playtester | Player 1 | Player 2 | Player 3 | Player 4 | Player 5 |
|---|---|---|---|---|---|
| Browser | Chrome | Mozilla | Chrome | Mozilla | Chrome |
| Weekly Game Hours | 0 | 5–7 | 1–2 | 1–2 | 0 |
| Character Selected | Graduate | Undergraduate | Faculty | Faculty | Graduate |

Findings from Round 1 Playtesting Feedback

Below are responses to the first post-playtesting question, “What is the most frustrating aspect of what you just played?”

Player 1: A lot of reading. I don’t mind reading, but I wouldn’t be able to use this for quick information.

Player 2: I wish there was either more interaction or some animation to keep me interested. Most of it was reading.

Player 3: Mostly just reading and what if I wanted to explore other options.

Player 4: Weirdly enough, I think it was the points. After finishing the game I knew I had missed a few sections, so I wanted to retrace my steps. Leaving off options felt like there was a specific order I had to take-when I wanted to explore more freely.

Player 5: The text to image ratio may be a little high-not really frustrating, per se.

Below are responses to the second question of the post-playtest survey, “What was your favorite part/aspect?”

Player 1: It followed the routes I would logically take and answered questions I might not have thought to ask (ie: ILL and group study rooms.)

Player 2: The detailed description of where everything is. I also liked choosing what path I could take.

Player 3: The pictures and there was an attempt to be an interactive game environment

Player 4: wonderful information. I really liked the 1st floor walkthrough-it was welcoming and informative. I kinda wished the other floors had that same location-specific narrative feel. Even though its more service-based and space shouldn’t matter, I liked the whole “you’re on this neat journey, let’s explore” feel.

Player 5: I like the “make you own adventure” aspect.

The remaining three open-response questions on initial perceptions covered wishes, changes, and description. As a result of this user feedback, we:

Updated character selection to advance via both text and image selection.

Made the back and forward buttons more visible in the user interface.

Created a webpage listing links to resources—POI locations—so users could revisit information and learn more about the library.

Made background colors for the Undergraduate and Faculty characters lighter.

Added a point tracker in the sidebar menu area, so users could see their points add up in a consistent location, even while scrolling.

Confirmed the faculty services content was already a part of the POIs; the playtester must not have visited that part of the game. This issue reinforced the need to create a resources page, so users could visit all POIs and links in LibGO after game play.

The detailed accounts section asked five questions regarding specific details of the playtester’s experience. The results of this section indicated that all five playtesters finished the game, did not want to quit the game early, and received an award during gameplay. Asked if the playtester learned anything new about the library while playing LibGO, three out of five said yes. This is interesting because all five playtesters worked in the library at some level—faculty, staff, or student worker—yet three out of five learned something new.

Noteworthy findings of the final reactions section pertained to user enjoyment and engagement. Four of the five playtesters responded to these questions. Asked to rate their enjoyment of the play experience on a scale of 1 (boring) to 5 (enjoyable), playtesters gave ratings yielding an average of 3.5 for enjoyment. We also asked the playtesters to rate how engaged they felt while playing, and they gave an average rating of 3.75 for engagement. These findings suggested that the playtesters were likely to play again and had moderate enjoyment and feelings of engagement.

The final question of the post-playtest survey was an open response area, and four out of five playtesters gave responses. Among the compliments and suggestions for additions or edits, common themes emerged: less text, more media, more options to explore, and links to resources. One playtester found a spelling error in the game’s text, which we corrected.

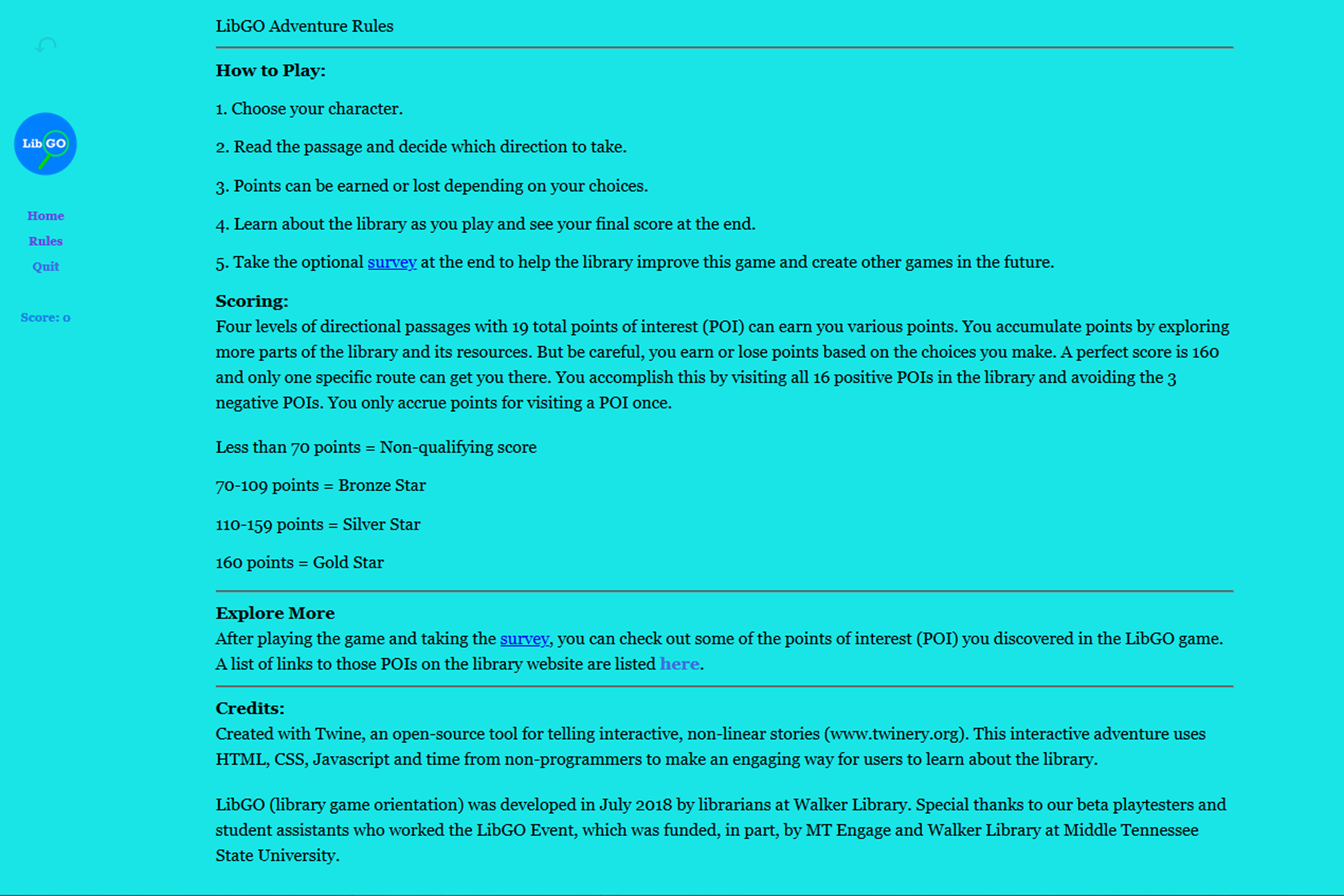

Round 1 Observational Notes

Although the playtesting was an individual activity, we conducted it in a group setting. We were in proximity to hear and observe playtesting behavior. At times playtesters made verbal comments while playing, such as, “These characters are so cute!” Either during or after the game, more than one playtester asked for clarification of the highest score as well as tips on how to achieve that score. This information regarding user questions, preferences, and expectations was exactly the type of feedback we wanted. As a result, the idea of an About page—later renamed Rules—emerged. This section provided users an overview on how to play the game, its scoring system, and some basic information regarding the development of LibGO. See Figure 8.

Round 2

Round 2 of playtesting included four playtesters selected from the library, all of whom tested LibGO on a Windows computer in the library. The pre-playtest survey results in Table 4 indicate the browser playtesters used to play LibGO, their average number of weekly gaming hours, and their selected character. As was the case with round 1, the character selection does not necessarily represent the role of the individual playtester, as this group comprised faculty, staff, and student workers from the library.

Playtesting round 2: pre-playtest.

| Round 2 Playtester | Player 1 | Player 2 | Player 3 | Player 4 |
|---|---|---|---|---|
| Browser | Chrome | Mozilla | Chrome | Chrome |
| Weekly Game Hours | 1–2 | 1–2 | 0 | 1–2 |
| Character Selected | Faculty | Community | Faculty | Undergraduate |

After taking the pre-playtest survey and playing LibGO, the round 2 playtesters took a post-playtest survey. This survey was identical to the post-playtest survey administered to the round 1 playtesters, with the exception of one added question, as noted in Appendix C. This yes/no question asked whether the participant visited the About page in the sidebar.

Findings from Round 2 Playtesting Feedback

Initial perceptions in round 2 revealed different experiences for the new round of playtesters. Three out of four playtesters in round 2 mentioned a concern about scoring. They stated a desire to attain a high score, for example asking if choosing “silly answers” would cost them points, and stating that they “really wanted that gold star.” Relatedly, two out of four playtesters’ favorite aspect of the game was the “tricky” or “gotcha” choices (for example, taking a nap instead of pursuing research). The other two listed the freedom to explore or learn on their own as their favorite aspect. One playtester found the possibility of losing points for funny decisions to be both their most frustrating and favorite aspect of LibGO.

The remaining three open-response questions regarding initial perceptions covered wishes, changes, and description. In terms of something a user wished their character could do but could not, one user wished for the ability to go backwards in gameplay. Although this ability existed, the playtester either did not attempt to go back or did not recognize how to do so. As a result of this feedback, we reaffirmed the decision to keep the functionality giving users the ability to go back or undo previous choices in the game.

There were mixed answers to the question, “If you could change, add, or remove anything from the experience, what would it be?” But because of user feedback, we:

Created and provided a list of all POIs at the end of the game.

Added the list of POIs to the About page—later renamed Rules—so the resources were accessible at any point of gameplay.

Enabled a trial and error method of choices in the game by giving users the option to start over or try again for a new score; this included adding Undo/Back arrows.

Updated instructions and rules, so users knew there was only one route to hit all POIs in game, which is required to obtain a perfect score.

The detailed accounts section of the round 2 post-playtest survey asked six questions regarding the playtester’s experience. The results indicated that all four playtesters finished the game, did not want to quit the game early, and received an award during gameplay. Half of participants said they learned something new about the library while playing LibGO, and half said they visited the About page in the sidebar menu. Based on user feedback, we:

Renamed About to Rules, in order to help users identify where to go if they needed help before or during play.

Added details on scoring to Rules.

The final question of the post-playtest survey was an open response area asking for any feedback, and the responses provided helpful information. One playtester admitted to playing again, under a different character, in an attempt to maximize their score. This desire to try for the maximum score, which we noted earlier, is important. A second playtester found LibGO to be “an excellent way for users to fill in the knowledge gaps privately and on their own time.” Some playtesters gave specific suggestions to add content that was pertinent to specific users of the library, for example, how to purchase library materials. One playtester admitted being confused at first, “but as I went on, I picked it up and [understood].” This type of feedback was important for understanding the UX and the on-boarding process of a new website experience. Playtesters provided suggestions on improving the perceived boxy storyline as well as affirmation of LibGO’s purpose, with one playtester stating, “Wish I had this as freshman, would have been extremely useful.”

Round 2 Observational Notes

As with round 1, the playtesting was an individual activity done in a group setting. We were in proximity to hear and observe playtesting behavior. Two of the four playtesters in round 2 made verbal comments while playing, including, “I wanted a high score, how do I get a high score?!” and, “I can’t get it, I want to keep trying. Ugh, how do you get a high score?” Although these comments were not directed at anyone, we were interested to see how this gamified aspect of orientation motivated the users to keep exploring.

Discussion of Iterative Testing Results

To begin analyzing the playtests, we put the collected data into a form that would allow patterns to emerge visually. We used spreadsheets for this project, but analysts can use whatever works best for them, including outlining programs or mind-mapping software (Rubin & Chisnell, 2008). Next, we tried to identify the tasks with which users had the most difficulty. Tools that can help determine this include lists, tallies, matrices, structure models, and flow diagrams, among others (Rubin & Chisnell, 2008). In the case of LibGO, we kept a running list of errors, issues, and suggestions based on playtest results as well as our own usability tests, which included debugging (see Figure 9). From this list, we tried to identify whether each user error stemmed from a player’s misunderstanding or from a game attribute that caused the misunderstanding. Finally, we prioritized the problems on this list by criticality. This allowed us to “structure and prioritize the work that is required to improve the product” (Rubin & Chisnell, 2008, p. 262).
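One lightweight way to produce a tally of this kind is to count how many playtesters touched on each theme. The Python sketch below is illustrative only: it uses shortened excerpts of the round 1 answers to the "most frustrating aspect" question, and the theme names and keyword lists are our own assumptions, not the coding scheme used in the study.

```python
from collections import Counter

# Shortened excerpts of round 1 answers to "most frustrating aspect".
RESPONSES = {
    "Player 1": "A lot of reading.",
    "Player 2": "Most of it was reading.",
    "Player 3": "Mostly just reading and what if I wanted to explore other options.",
    "Player 4": "I think it was the points. [...] I wanted to explore more freely.",
    "Player 5": "The text to image ratio may be a little high",
}

# Hypothetical keyword lists; a real analysis would refine these by hand.
THEMES = {
    "text-heavy": ("reading", "text"),
    "exploration": ("explore",),
    "scoring": ("points", "score"),
}


def tally_themes(responses: dict[str, str]) -> Counter:
    """Count how many playtesters mention each theme at least once."""
    counts: Counter = Counter()
    for answer in responses.values():
        lowered = answer.lower()
        for theme, keywords in THEMES.items():
            if any(word in lowered for word in keywords):
                counts[theme] += 1
    return counts


theme_counts = tally_themes(RESPONSES)
for theme, count in theme_counts.most_common():
    print(f"{theme}: {count} of {len(RESPONSES)} playtesters")
```

Keyword matching is crude and can miss paraphrases, so a tally like this is best treated as a starting point for manual review of the responses rather than a replacement for it.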

Descriptive statistics gleaned from the data collection also helped the playtest analysis. For example, play times ranged from 4 to 15 minutes, with an average of 7 minutes and 45 seconds. It was interesting that the average time played in round 1 was 8 minutes and 28 seconds whereas the average time played in round 2 decreased to 7 minutes and 15 seconds (see Table 5). Possible factors for this change, such as LibGO improvements from the iterative design, could be grounds for further analysis in future versions of LibGO.

Play duration and scores.

Player 8 did not list a numeric final score; rather, they listed the silver star award. This means player 8 had a numeric value between 110 and 159. For demonstrative purposes, we represent this as the rounded midpoint of that range, 135*.

| Player | Duration (minutes) Round 1 | Duration (minutes) Round 2 | Overall Duration Both Rounds | Final Score out of 160 |
|---|---|---|---|---|
| 1 | 6 | | | 100 |
| 2 | 8 | | | 80 |
| 3 | 5 | | | 90 |
| 4 | 13 | | | 80 |
| 5 | 10 | | | 75 |
| 6 | | 5 | | 100 |
| 7 | | 5 | | 100 |
| 8 | | 15 | | 135* |
| 9 | | 4 | | 90 |
| Average | 8 minutes and 28 seconds | 7 minutes and 15 seconds | 7 minutes and 45 seconds | 94.44 (score) |
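For readers who want to repeat this kind of summary, the following minimal Python sketch recomputes two of the averages from the per-player values. It assumes that players 1 through 5 belong to round 1 and players 6 through 9 to round 2, and it uses the whole-minute durations as listed; because those durations are rounded to whole minutes, averages recomputed this way will not necessarily match every reported figure to the second. The helper name `format_minutes` is hypothetical.

```python
from statistics import mean

# Whole-minute play durations as listed in the table (assumed split:
# players 1-5 in round 1, players 6-9 in round 2).
ROUND_1_MINUTES = [6, 8, 5, 13, 10]
ROUND_2_MINUTES = [5, 5, 15, 4]

# Player 8 reported only a silver star (110-159), so the rounded
# midpoint of that range stands in for the missing numeric score.
SILVER_MIN, SILVER_MAX = 110, 159
player_8_score = (SILVER_MIN + SILVER_MAX + 1) // 2  # 134.5 rounded up

# Final scores out of 160, in player order.
SCORES = [100, 80, 90, 80, 75, 100, 100, player_8_score, 90]


def format_minutes(minutes: float) -> str:
    """Render fractional minutes as 'M minutes and S seconds'."""
    total_seconds = round(minutes * 60)
    whole, seconds = divmod(total_seconds, 60)
    return f"{whole} minutes and {seconds} seconds"


round_2_average = format_minutes(mean(ROUND_2_MINUTES))
score_average = round(mean(SCORES), 2)
print(round_2_average)
print(score_average)
```

Keeping the placeholder for player 8 as a named value makes it easy to rerun the summary with a different assumption, such as excluding that player from the score average.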

Initial perceptions data demonstrated several differences between round 1 and round 2 playtesters. Round 1 playtesters were most frustrated by the use of a text-heavy game format. By contrast, round 2 playtesters were most frustrated by not knowing how to achieve a high score. It was very interesting that no one in round 2 mentioned LibGO’s heavy reading load. There are many possible explanations for this difference: for example, the iterative design process took round 1’s usability feedback forward into improvements made before round 2’s playtesting. It is also possible that participants in round 2 were game enthusiasts and did not mind the text load. In future prototype testing, follow-up interviews with the playtesters may shed light on this difference.

When asked how to describe LibGO, there were a variety of answers in both rounds of playtesting. The terms game, walkthrough, and tour each had two uses while knowledge-base and overview each had one use. Despite the differences in their description of LibGO, all four participants in round 2 said they would play again, while only one of five participants in round 1 said they would play again and three said they might play again. Just over half of the total participants—five out of nine—said they learned something new about the library while playing LibGO, which is interesting because all nine playtesters work at the library. Therefore, these results indicated that this was a learning tool even for those who work in the library, giving us a sense of being on the right track for using gamification as an alternative form of learning.

The iterative design process was critical to improving many structural issues of the game discovered during playtesting; specifically, several factors within LibGO discouraged replayability. If a player chose to replay LibGO, the score continued from the previous game—the points did not reset. Similarly, if players used the in-game Undo/Back arrows, the score did not recalculate. Understanding the critical value of replayability, we chose to fix this scoring aspect in the later iteration of the game.
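One plausible way to avoid both scoring problems is to derive the score from the player's recorded choices instead of keeping a separate running total. The sketch below is written in Python for illustration and is not LibGO's actual Twine code; the class name, method names, choice labels, and point values are all hypothetical.

```python
class GameSession:
    """Tracks a player's choices and derives the score from them."""

    def __init__(self) -> None:
        # Each entry is (choice label, points awarded or deducted).
        self._choices: list[tuple[str, int]] = []

    def choose(self, label: str, points: int) -> None:
        """Record a choice and the points it is worth (may be negative)."""
        self._choices.append((label, points))

    def undo(self) -> None:
        """Remove the most recent choice, if there is one."""
        if self._choices:
            self._choices.pop()

    def restart(self) -> None:
        """Begin a new game with an empty history."""
        self._choices.clear()

    @property
    def score(self) -> int:
        """Recompute the score from the current history on every read."""
        return sum(points for _, points in self._choices)


session = GameSession()
session.choose("Visit the service desk", 10)  # hypothetical POI and value
session.choose("Take a nap", -5)              # hypothetical "gotcha" choice
score_before_undo = session.score
session.undo()
score_after_undo = session.score
session.restart()
score_after_restart = session.score
```

Because the score is never stored on its own, undoing a choice or restarting the game cannot leave a stale total behind.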

Round 2 playtesters mentioned wanting links to the About screen during game play. We had purposely avoided in-game links in order to keep users playing the game—thereby not potentially losing them before the game’s completion. This design strategy, however, was not helpful to users who wanted more information about a resource they discovered during the game. Therefore, we decided to provide a list of linkable resources in the About page, so users could connect to content post-game.

The playtesting, meant to be a silent activity, also allowed for an impromptu think-aloud test as playtesters vocalized concerns while interacting with or navigating through LibGO. Whether or not playtesters were aware of making them, the remarks ranged from processing or questioning oneself, to congratulatory remarks when advancing through play, to frustration when the user did not get a high score. The latter left some shouting, “How do I get a high score?” or, “I want a high score, can I play again?” This think-aloud process was not planned, but it ultimately spurred improvements to iterations of the prototype.

Another finding from the first round of playtesting was that the audio content was not ideal for an event in a computer classroom with restricted sound capabilities on the computers. Therefore, although the playtesters suggested additional multimedia content, we decided to limit content to one video as one intended audience would be playing the game in an environment without sound. A second intended audience, online players, would be able to control the audio of their personal computing device.

Although gameplay involved text-heavy content at times and required players to answer questions, the playtesting proved that the pedagogy surrounding game mechanics—such as achieving a top score—was more complicated than a trivia game or walking tour. This issue of engagement was an early concern for us. We wondered whether the gamified library experience would have enough game mechanics to leave players feeling the experience was interactive, engaging, and immersive.

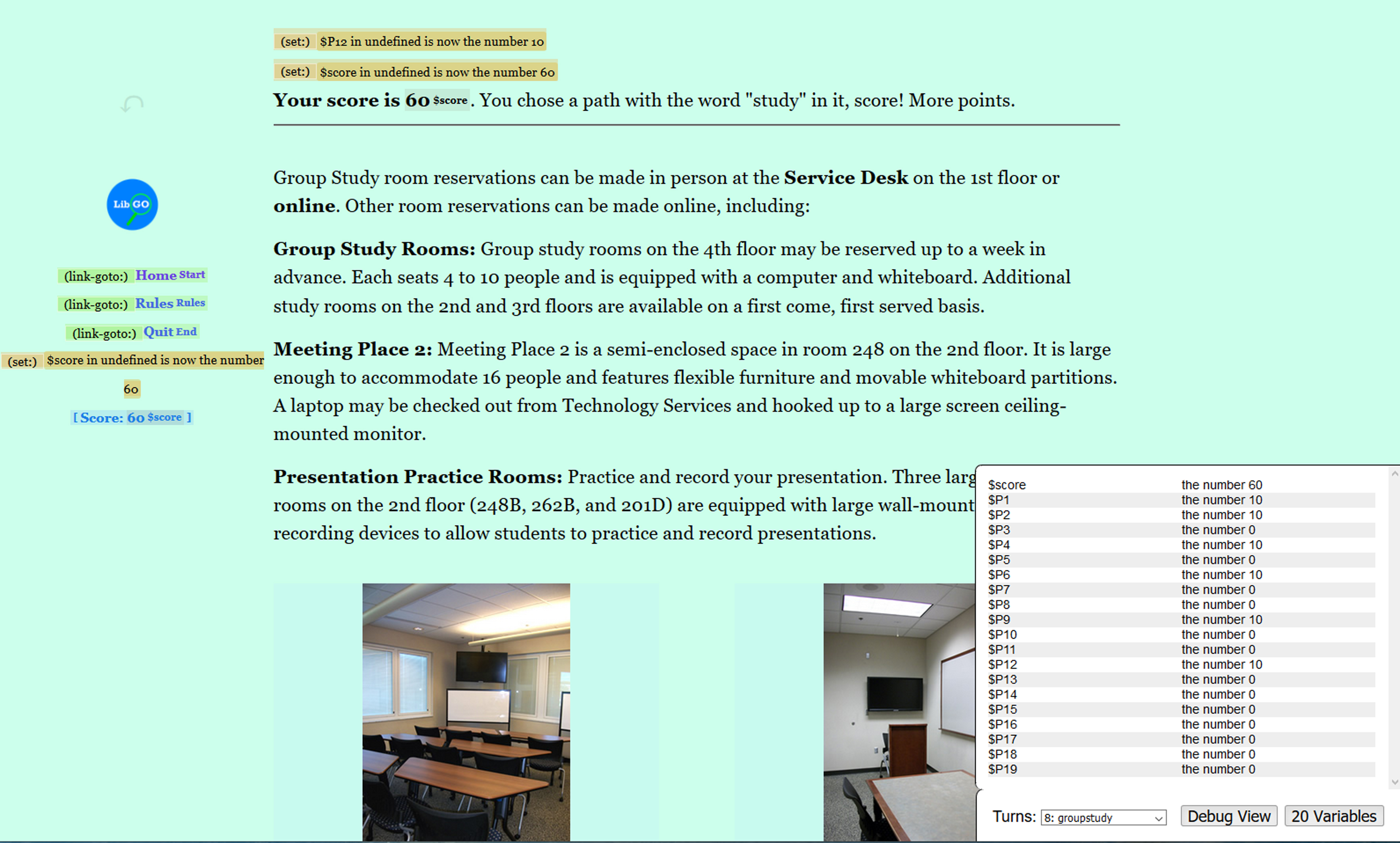

The playtesting also showed that coding these themes and trends iteratively from round 1 to round 2, and also collectively for a larger sample, informed the design changes to the data flow and final game coding of LibGO. One example of this is the changes made to the start screen as seen in Figure 10, which shows how LibGO’s start screen looked at the beginning of round 1 playtesting (left) and after round 2 playtesting (right). It is important to note that triangulating think-aloud data and survey data from the playtests was highly effective in resolving user errors and design issues. The use of both qualitative and quantitative data can help evaluate the efficiency or usability of a user interface and learning objectives for the content illustrated in the user interface.

Implementation Challenges

Despite working on this project in addition to performing our regular job duties, we experienced a brisk pace of progress. After roughly three months of game design and testing, LibGO was ready to be released to the public. The use of design-thinking methods was critical to this rapid development. This method not only helped us produce a gamified experience within a reasonable timeframe, but it also afforded us a measure of success in meeting the needs of the intended audience.

Human-computer interaction is not a process of designing for oneself, but for the user. Although challenging at times, we knew we had to distance ourselves from our own design preferences and focus on the needs and interests of the users. The use of personas, a tactic grounded in the empathy phase, was an extremely helpful technique to keep the real users in mind while designing gamified experiences. We extended this mindset to the playtesting by empathizing with the playtesters while ideating new solutions to their frustrations or desires.

Balancing player entertainment and learning was difficult, as highlighted by the playtesters’ answers to the post-playtest questions regarding the most frustrating and favorite aspects of LibGO. This is a normal challenge for any type of educational game; for example, most playtesters in round 1 thought there was too much reading. Although we made some effort to reduce the reading content for round 2 playtesting, we had to remember that the purpose of LibGO was not quick information, but rather resource exploration.

Thinking through the logic for the scoring algorithm also proved to be challenging. However, consulting with an expert and conducting trial-and-error testing to find the right algorithm were worth the effort, as accurate scoring is a highly desired feature and game mechanism. Another task was figuring out how to override the default settings of the sidebar menu for the Twine software, which affected the game’s menu location. Reviewing software documentation and support forums proved useful in resolving this issue. It is important to note that Twine’s default settings work well for general game development, and that we suggest editing the code only for those comfortable with CSS, HTML, and JavaScript.

Multimedia elements were yet another area for improvement. Although there was never a goal for LibGO to reach industry gaming standards, we, as novice developers, could look at adding features in future iterations, such as animations and the ability to zoom in on images. Likewise, a best practice is to gather all images, audio, and video to be used in the game on the same server or other location that the developers control. LibGO relied on some images and videos the library created and posted to social media platforms. In theory, this requires less coding because the developer can copy the link from the image and place it in the game code, but if the library alters its social feeds, the image is lost and no longer present in the game.
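A quick audit can show which media files a game depends on outside the developers' control. The Python sketch below assumes a hypothetical list of media URLs and a hypothetical library-controlled host name; none of these values are drawn from LibGO's code.

```python
from urllib.parse import urlparse

# Hypothetical host that the developers control.
TRUSTED_HOST = "library.example.edu"


def externally_hosted(urls: list[str], trusted_host: str = TRUSTED_HOST) -> list[str]:
    """Return the URLs whose host differs from the trusted host.

    Relative URLs have no host and are therefore listed as well;
    handle them separately if the game uses them.
    """
    return [url for url in urls if urlparse(url).hostname != trusted_host]


# Hypothetical media references collected from the game's passages.
media_urls = [
    "https://library.example.edu/libgo/img/first-floor.png",
    "https://social.example.com/photos/12345.jpg",
]
at_risk = externally_hosted(media_urls)
print(at_risk)
```

Any URL this check reports is a candidate for copying to a location the developers control before the next release.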

In future iterations, we would also like to modify the storyline so it feels, as one participant described, “less boxy.” Thus, in addition to playtesting, future work on LibGO should include interviews to ask users to elaborate on such comments. Another example of a participant comment worth following up on was, “Wish I had this as a freshman.” It is an intended goal of LibGO to exhibit empathy toward our users and their needs, and following up on how and why the user feels this way may prove fruitful to design considerations.

Beyond the technical aspects, even simple marketing had its challenging aspects. One example included what to call the product without disappointing users—game, tour, story, adventure, trivia—because the reality is that LibGO is not a popular entertainment game like Minecraft, Clue, or Fruit Ninja. Another concern included how to get people to play it at all, a problem later addressed with a launch event offering prize bags for in-person game players. This one-day event, post playtesting, generated fifty-eight of the original study’s 167 participants; it is uncertain whether the expense of this event justified the participation rate.

Conclusion

In this paper we sought to demonstrate how librarians can use design thinking to guide the development and design of an innovative library resource intended to enhance the experiences of library users. The quantitative surveys, gameplay observations, and qualitative feedback of the nine playtesters demonstrated that stakeholders appreciated this form of UX, but it required fine-tuning for large-scale deployment. Game mechanics, storyline conflicts, and marketing the game to users would have stagnated without the use of design concepts such as empathy, ideation, iterative prototyping, minimal coding, and playtesting.

Circular and iterative phases of design and development made the difference. Functional games are great but are limited in their appeal and success if the designers do not understand the intended users. From the earliest design stages, and even throughout the iterative cycles of prototyping and testing, we never lost sight of empathizing with the playtesters and ideating improvements based on the user reactions, desires, and frustrations.

Common pitfalls of playtesting and usability testing are twofold: designers not only assume that they are the average user, but can also mistake the use of personas for user testing. One does not equal the other. Both pitfalls underscore the need for playtesting and usability testing prior to the launch of a gamified experience.

Additionally, the success of pilot projects and usability tests can provide proof of concept when trying to get buy-in from stakeholders. To establish value for UX and design thinking, it is important to conduct small research and usability tests, then steadily increase studies and recruit collaborators while showing growth and progress. This will educate an organization about the benefits of the work and, in time, help these methods become normalized practices. A second attempt can use pilots and usability tests, whether built in-house or outsourced. If the decision is to build in-house, it may be possible to use students to build the product either through a job or competition; direct hire of a vendor could be another option.

As a result of the process outlined in this case study, we know design thinking and gamification are mutually beneficial practices. The next step is to better understand how to engage with more users, to assess what makes people interested in learning, and to identify how to motivate such participation. We learned a valuable lesson from this project: it is not enough to have technical skills or a mechanically functioning game. Without empathy as part of the design foundation, people will not care to play the game because of a lack of interest or relevance to user preferences. Additionally, this case study supports the fact that libraries are capable of using UX and design thinking to improve programs, services, and resources for their communities. Minimal coding, iterative prototyping, and playtesting are design thinking approaches that can lead to high-impact improvements to the library user experience.

Appendix A - Suggested Resources

For those who are thinking of potential game design or UX opportunities but lack skills or experience, consider free resources such as the d.school Starter Kit (https://dschool.stanford.edu/resources/dschool-starter-kit). Librarians in particular may prefer Web Junction’s “Creating Holistic User Experiences” webinar (https://learn.webjunction.org/course/index.php?categoryid=43). Other great resources are available on the Nielsen Norman Group website (https://www.nngroup.com) and within IDEO’s Design Thinking for Libraries toolkit (http://designthinkingforlibraries.com).

Appendix B - LibGO Workflow Documentation

Division of Responsibilities & Timeline

Institutional Review Board Approval

Survey

Playtest (Round 1: 8/6/18; Round 2: 9/14/18)

Character Development

Brainstorm: purpose, objectives

Points of Interest (POIs); personas

Game Development

LibGO code: sequence, scoring system, levels

LibGO Data Flow Diagrams; Passage Wireframes

Prototypes: Low-Fidelity; High-Fidelity

Playtesting (beta users with game feedback)

Review code, random testing, screen/paper proofing copy

MT Engage grant

Human Resources

Playtesters: Invited: 15 Actual: 9

Event workers

Marketing

Grab Bag, prizes, snacks

Signage

LibGO Event

Worker assignments and schedules

Ticketing system

Papers 1 and 2

1-Research study

2-Case study

Appendix C - LibGO: The Playtest Protocol

Step 1: Pre-Playtest

- Select Device: Computer [PC/Mac] / Tablet model: ___________________ / Phone [Android/Apple/other]

- Browser Selection: Chrome / Mozilla / Edge / Opera / Safari / Other

- On average, how many hours a week do you play games? (includes board, video, and computer games): 0 / 1–2 / 3–4 / 5–7 / 8+

- Character: Undergraduate / Graduate / Faculty Member / Community Member

Record Start Time: __________

Please start playing LibGO now (do not start Step 2 questions until after you have completed the game)

Step 2: Post-Playtest

Record End Time: ____________

Initial Perceptions

1. What is the most frustrating aspect of what you just played?

2. What was your favorite part/aspect?

3. Was there anything you wished your character could do but couldn’t?

4. If you could change, add or remove anything from the experience, what would it be?

5. How would you describe this to someone else?

Detailed Accounts

6. Did you learn anything new about the library? No / Yes

7. Did you finish the game? No / Yes

8. Did you quit the game or want to quit the game? No / Yes

9. Were you awarded anything? No / Yes

10. What was your final score? ___________

Final Reactions

11. Would you play it again/would you recommend others to play it? No / Maybe / Yes

12. Did you feel like your choices mattered and affected your play? No / Somewhat / Yes

13. On a scale of 1–5 (1=boring; 5=enjoyable): how would you rate the enjoyment of your play experience? 1 / 2 / 3 / 4 / 5

14. On a scale of 1–5 (1=not engaged; 5=very engaged): how engaged did you feel while playing? 1 / 2 / 3 / 4 / 5

15. Open Response (any feedback, negative and positive comments; perceptions of story, functionality, anything)

End of survey

Note about Playtest Survey

Playtest questions were adapted from Patton (2017), and written to help address the goals of the developers’ mixed-methods, original research study (which had its own survey instrument) on gamifying library orientation. The reasoning behind the playtest was to validate the usability of LibGO before releasing it for a larger study, and to increase its effectiveness in helping library users.

Round 1 playtest had a total of 15 questions. One question on whether the user visited the About page was later added, putting the total at 16 questions for Round 2.

References

Asher, A. & Miller, S. (2011). So you want to do anthropology in your library? Or a practical guide to ethnographic research in academic libraries. http://www.erialproject.org/wp-content/uploads/2011/03/Toolkit-3.22.11.pdf

Baker, L. & Smith, A. (2012). We got game! Using games for library orientation. LOEX 2012, Columbus, OH. 63–66. https://commons.emich.edu/loexconf2012/12/

Battles, J., Glenn, V., & Shedd, L. (2011). Rethinking the library game: Creating an alternate reality with social media. Journal of Web Librarianship, 5(2), 114–131. https://doi.org/10.1080/19322909.2011.569922

Beaudouin-Lafon, M. & Mackay, W. E. (2008). Prototyping tools and techniques. In A. Sears & J. A. Jacko (Eds.), The Human-Computer Interaction Handbook: Fundamentals, Evolving Technologies, and Emerging Applications (pp. 1017–1039). Lawrence Erlbaum Associates.

Fox, R. (2017). The etymology of user experience. Digital Library Perspectives, 33(2), 82–87. https://doi.org/10.1108/DLP-02-2017-0006

Gunter, G., Kenny, R. F., & Vick, E. H. (2008). Taking educational games seriously: Using the RETAIN model to design endogenous fantasy into standalone educational games. Educational Technology Research and Development, 56(5–6), 511–537.

Guo, Y. R. & Goh, D. H. (2016). Library escape: User-centered design of an information literacy game. The Library Quarterly, 86(3), 330–355. https://doi.org/10.1086/686683

IDEO. (n.d.). Design thinking defined. https://designthinking.ideo.com

Kelley, T. & Littman, J. (2001). The art of innovation: Lessons in creativity from IDEO, America’s leading design firm. Currency/Doubleday.

Long, J. (2017). Gaming library instruction: Using interactive play to promote research as a process. In T. Maddison & M. Kumaran (Eds.), Distributed Learning (pp. 385–401). Elsevier.

Luca, E. & Narayan, B. (2016). Signage by design: A design-thinking approach to library user experience. Weave: Journal of Library User Experience, 1(5). https://doi.org/10.3998/weave.12535642.0001.501

Middle Tennessee State University Office of Institutional Effectiveness, Planning and Research. (2017). Fact Book. https://www.mtsu.edu/iepr/factbook/factbook_2017.pdf

Mitchell, E. & West, B. (2016). DIY usability: Low-barrier solutions for the busy librarian. Weave: Journal of Library User Experience, 1(5). https://doi.org/10.3998/weave.12535642.0001.504

Nielsen, J. (2012, January 3). Usability 101: Introduction to usability. Nielsen Norman Group. https://www.nngroup.com/articles/usability-101-introduction-to-usability/

Norman, D. (2013). The design of everyday things. Basic Books.

Pagulayan, R. J., Keeker, K., Fuller, T., Wixon, D., & Romero, R. L. (2008). User-centered design in games. In A. Sears & J. A. Jacko (Eds.), The Human-Computer Interaction Handbook: Fundamentals, Evolving Technologies, and Emerging Applications (pp. 741–759). Lawrence Erlbaum Associates.

Patton, S. (2017, April 27). The definitive guide to playtest questions. Schell Games. https://www.schellgames.com/blog/the-definitive-guide-to-playtest-questions

Porat, L. & Zinger, N. (2018). Primo user interface—not just for undergrads: A usability study. Weave: Journal of Library User Experience, 1(9). https://doi.org/10.3998/weave.12535642.0001.904

Prensky, M. (2001). Digital game-based learning. McGraw-Hill.

Reed, M. & Caudle, A. (2016). Digital storytelling project on library anxiety. KU ScholarWorks. https://hdl.handle.net/1808/21508

Rouse, R. (2001). Game design theory and practice. Wordware Publishing.

Rubin, J. & Chisnell, D. (2008). Handbook of usability testing: How to plan, design, and conduct effective tests (2nd ed.). Wiley.

Sailer, M., Hense, J. U., Mayr, S. K., & Mandl, H. (2017). How gamification motivates: An experimental study of the effects of specific game design elements on psychological need satisfaction. Computers in Human Behavior, 69, 371–380. https://doi.org/10.1016/j.chb.2016.12.033

Salen, K. & Zimmerman, E. (2006). The game design reader. MIT Press.

Smith, A. & Baker, L. (2011). Getting a clue: Creating student detectives and dragon slayers in your library. Reference Services Review, 39(4), 628–642. https://doi.org/10.1108/00907321111186659

Somerville, M. M. & Brar, N. (2009). A user-centered and evidence-based approach for digital library projects. The Electronic Library, 27(3), 409–425. https://doi.org/10.1108/02640470910966862

Sundt, A. (2017). User personas as a shared lens for library UX. Weave: Journal of Library User Experience, 1(6). https://doi.org/10.3998/weave.12535642.0001.601

Tassi, R. (2009). Tool personas. Service Design Tools. Retrieved July 31, 2018. https://servicedesigntools.org/tools/personas

Ward, J. L. (2006). Web site redesign: The University of Washington Libraries’ experience. OCLC Systems & Services: International Digital Library Perspectives, 22(4), 207–216. https://doi.org/10.1108/10650750610686252

Winn, B. & Heeter, C. (2007). Resolving conflicts in educational game design through playtesting. Innovate: Journal of Online Education, 3(2), Article 6.

Zaugg, H. & Ziegenfuss, D. H. (2018). Comparison of personas between two academic libraries. Performance Measurement and Metrics, 19(3), 142–152. https://doi.org/10.1108/PMM-04-2018-0013