Introduction

Since long before the rise of chatbots and the COVID-19 pandemic, libraries have provided virtual reference services through live chat or instant messaging with a librarian. Professionals have debated the proper widget placement (Frantz & Westra, 2010) and whether to embed a chat box on the page or float a button (Fruehan & Hellyar, 2021), and they have explored proactive pop-up features, which initiate a chat for the user after a predefined amount of time on the webpage (Imler et al., 2016). Together, these studies have enriched our collective understanding of how users perceive and interact with chat widgets. However, web technologies are always changing, and patron expectations for how chat should work on the web are shaped by their experience with comparable digital services outside of libraries. It is therefore worthwhile for libraries to evaluate their front-end systems regularly and determine whether their setup meets the needs of their audience.

In our own case, having selected a new chat service in 2019, we wanted to explore chat widget configurations and solicit feedback from the community to determine the placement and label of the widget. To do this, we devised two usability studies following simple industry-standard “thinking aloud” methodologies (Nielsen, 2012b). This paper presents a selection of findings from these studies as well as a discussion of our approach in examining the placement, labeling, and functionality of chat services on our website.

To center this discussion, it is important to define the term “chat services” and explain how it relates to “virtual reference services.” Virtual reference services are a set of interconnected services that facilitate reference provision through several modes; depending on the context, librarians may use email, video conferencing, direct messages, SMS/texting, instant messaging, and social media. Chat services are the subset of virtual reference services that pertain to instant messaging on library websites. This article focuses specifically on instant messaging with librarians via chat widget buttons embedded on individual web pages.

Chat widgets vary in form and functionality: they may open a proactive pop-up window that prompts users to chat after a period of idleness on the page, be embedded in the page itself, or open a chat in a separate browser window. Although chat services are present in all kinds of libraries, this article focuses on chat widgets in academic libraries.

Literature Review

Librarians have studied chat services for as long as they have been available. Wells (2003) declared it “generally acknowledged that academic chat reference services do not receive heavy use” (p. 133). In agreement, Tennant (2003) mused that same year that perhaps the technology was too new for us to tell whether low engagement stemmed from a lack of interest or a lack of awareness. To boost engagement at their library, Wells (2003) increased the size of the chat widget, added it to more pages, and reviewed the resulting usage data. They found that chat widgets that stand out more tend to get more use, and that widgets on item-level catalog and database pages got more use because they reached users at their point of need.

In the late 2000s and early 2010s, new trends appeared, such as dedicated “Ask a Librarian” pages with fully embedded chat boxes (Bell & DeVoe, 2008). The call-to-action labels used for buttons and embedded chat windows varied, but clear patterns emerged: “Ask a Librarian” was a prevalent label for buttons and links, as was “Live Help” (Bedwell, 2009). One major finding in the early literature was that consolidating chat services on one platform and placing the widget on as many pages as possible led to significant increases in usage. At the University of Oregon Libraries, adding the chat widget to popular and potentially complicated search tool pages led to a 500 percent increase in engagement (Frantz & Westra, 2010).

The mid-2010s saw new trends such as proactive chat widgets, which dynamically open a chat window after a specified period of user idleness on the page or when other conditions are met. A wave of usability studies on chat widgets also appeared during this time. The University of North Carolina conducted a usability evaluation of its virtual reference services and found that patrons considered chat the most effective and usable service (Chow & Croxton, 2014). In 2016, librarians at Pennsylvania State University conducted usability studies to explore patron reactions to proactive pop-up features and found the response overwhelmingly positive: only one of thirty participants reported annoyance at the pop-up (Imler et al., 2016). At the University of Texas at San Antonio, implementing “a proactive, context-sensitive chat system” increased engagement by 340 percent (Kemp et al., 2015, p. 764).

Having seen proactive features in action, some libraries were concerned that automatic pop-ups would be intrusive or frustrating for users. Bowling Green State University implemented the features only on select pages of its website, which led to a 132 percent increase in engagement (Rich & Lux, 2018). Librarians at the University of Texas at Arlington employed proactive chat in their implementation of ProQuest’s Summon discovery layer, databases, and research guides. Engagement exceeded expectations, averaging nine chats per hour during peak hours, so the library had to increase its staff presence on chat during those times. Overall, chat questions doubled, from 4,020 in 2015–16 to 8,120 in 2016–17 (Pyburn, 2019).

Recent literature on library chat services has continued to highlight the importance of user interface design, chat widget placement, and enhancements or special features. At the same time, the COVID-19 pandemic has increased levels of chat engagement and forced libraries to rely more heavily on remote learning and online services. In 2021, librarians at McGill University analyzed pre- and post-pandemic chat transactions and confirmed that the “average length of interactions increased from Fall 2019 to Fall 2020, and librarians spent a greater amount of time chatting during the pandemic” (Hervieux, 2021, p. 277). Perhaps now more than ever, it is important that we get library chat services right, because chat is such a critical channel of communication with our patrons.

Background

Pratt Institute is a school located in Brooklyn, NY, with a full-time enrollment of 5,137 undergraduate and graduate students. The Libraries have a full-time staff of twenty-seven, including seven faculty librarians. In addition to other virtual reference services, we offer real-time online chat on a regular basis, staffed by faculty librarians and trained graduate assistants. In 2018, our website used a LibraryH3lp chat box embedded in the right sidebar of our top-level navigation pages. The chat box was also embedded on a number of our research guides, but not on all of them (see fig. 1).

We also did not have the chat widget on our discovery layer or catalog. After redesigning the website in collaboration with Pratt’s School of Information, we moved to a new chat platform, LibChat, in July 2019 and implemented the best practices outlined in the literature review above. We used plain language for the button label and embedded the widget on all pages of our discovery layer and catalog, as well as on the core pages of our website and research guides. Our first implementation used a right-side “slide-out” widget labeled “Ask a Librarian.” This floating button sat at the vertical middle of the viewport’s right edge and, when clicked, slid out a chat window. We did not enable proactive notification features.

Like other libraries before us, we saw a dramatic increase in engagement immediately after making these changes. Our total yearly chat transactions nearly doubled, rising from 324 in 2018 to 629 in 2019, an increase of roughly 94 percent. With this increased engagement, however, came a new and persistent problem: users would occasionally open a conversation with our librarians via LibChat and write the equivalent of an email message before closing the tab, not realizing that the chat was a live instant messaging service.
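The roughly 94 percent figure follows directly from the two yearly totals. As a quick check of the arithmetic, here is a minimal Python sketch; `percent_change` is a hypothetical helper introduced only for illustration and is not part of LibChat or any other chat platform's reporting tools.

```python
def percent_change(before: int, after: int) -> float:
    """Relative change from `before` to `after`, expressed as a percentage."""
    if before == 0:
        raise ValueError("percent change is undefined when the baseline is zero")
    return (after - before) / before * 100


# Yearly chat transactions reported above: 324 in 2018, 629 in 2019.
increase = percent_change(324, 629)
print(f"{increase:.1f}% increase")  # prints "94.1% increase"
```

An increase of about 94 percent means the 2019 total is about 1.94 times the 2018 total, which is why it reads as "nearly doubled."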

Although the increase in engagement was dramatic, we wanted to understand it fully and determine whether further adjustments to the button label could provide a user experience more in line with patron expectations. This also prompted internal discussions about whether the slide-out placement was best for user engagement. Lastly, we were curious about enabling proactive features, but, like Rich and Lux (2018), we suspected that the pop-up effect might be off-putting or annoying.

Methodology

To understand user behavior and find the appropriate configuration, we conducted live usability testing on our website with several sections of Pratt’s graduate-level usability course, INFO 644: Usability Theory & Practice. In each of the two studies detailed below, a representative from the Libraries met with a group of three to four INFO 644 student researchers to lay out usability testing goals, develop a screener survey to send to the Pratt community, and establish one to three tasks on the Libraries’ website to test live with selected participants, either in person or via screen share on Zoom. For the screener survey, Study 1 used Jotform and Study 2 used Google Forms. From the survey results, student researchers selected five to eight candidates for testing, a number that Nielsen (2012a) notes is adequate for identifying usability issues.

Individual student researchers in INFO 644 administered each test. They recorded the tests for later analysis using Zoom’s screen-recording feature, and they used Excel and Google Sheets to mark the success or failure of each task and to record notes. They administered pre- and post-task surveys to gauge participants’ baseline understanding and to record their success and thoughts on each task. Tests used the thinking-aloud method, in which participants narrate their thought process to evaluators while navigating the site and completing the tasks. We selected this approach because it is an established, flexible, and robust method with a track record of success (Nielsen, 2012b). After each study, student researchers reviewed the recordings, noted which tasks were successes or failures, and made recommendations to the Libraries in an end-of-course usability report.

Usability Testing & Findings

Below are detailed descriptions of two studies that focused on chat services in the Libraries.

Study 1: Determining the Ideal Location of the Chat Widget and Proactive Features

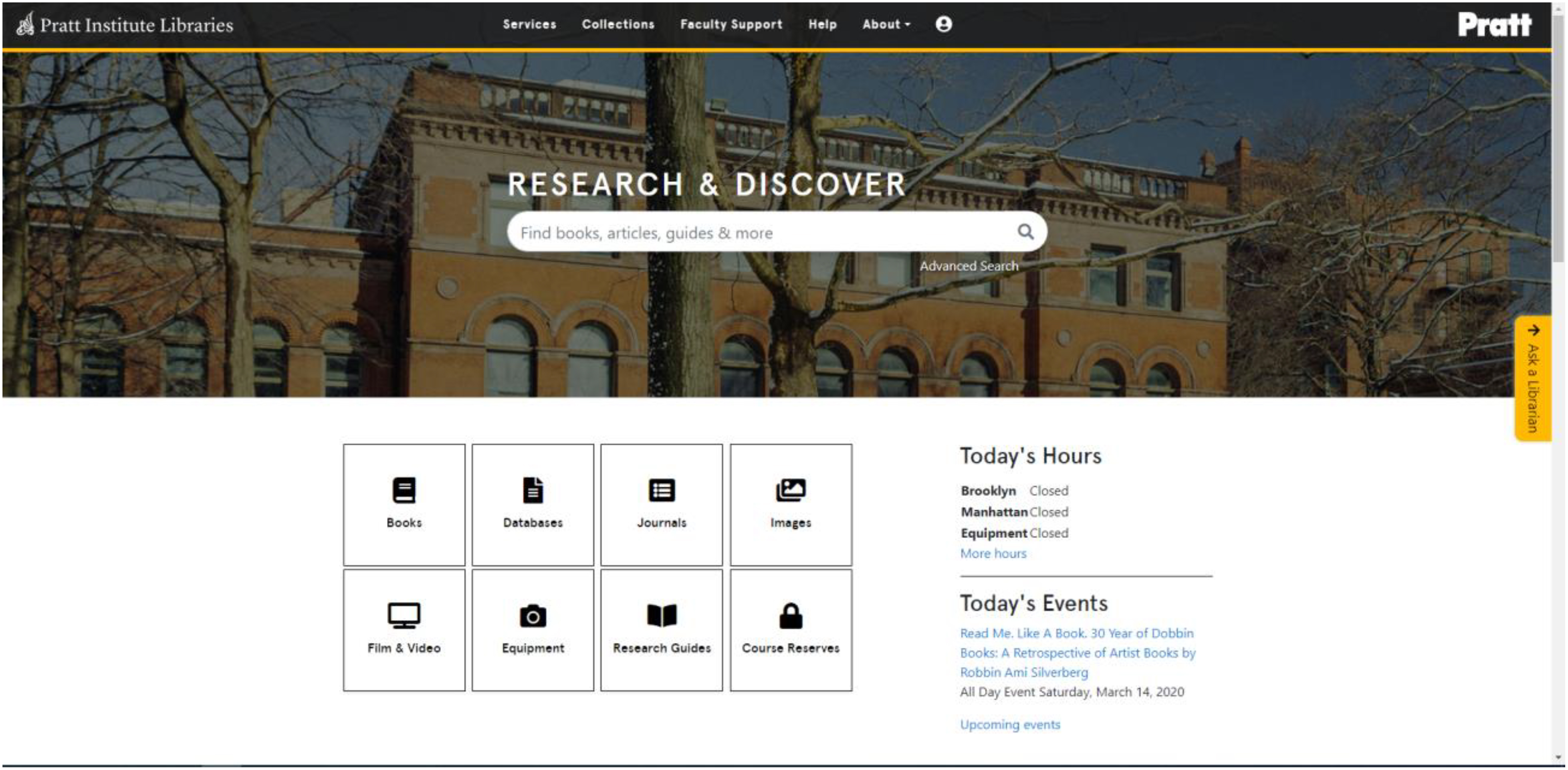

Our first study examined user interaction on our discovery layer, EBSCO Discovery Service. The study had three user tasks, but only one of them focused on the Ask a Librarian chat widget. At the time of the study, in Fall 2020, our chat widget was a yellow slide-out button anchored to the right edge of the screen at the vertical middle of the viewport. It was labeled “Ask a Librarian” and included an arrow to indicate its slide-out functionality (see fig. 2).

Six participants from the Pratt community were selected via a screening questionnaire. The target demographic was faculty and students with little to no experience with our website. We gave participants a prompt to find information on a nonexistent poet, a search that would yield no results. After running the search, only one of the six participants opted to use the Ask a Librarian feature. When prompted to find out how to contact a librarian, two of the six had difficulty locating the widget. Two participants noted that the rotated text on the slide-out button was difficult to read. Five of the six said they would prefer the chat button at the bottom or bottom right of the viewport. Lastly, five of the six thought the button would initiate a chat with a real person rather than a chatbot.

Although proactive chat features were not enabled on our website at the time, we also wanted to gather community input about potentially implementing automatic chat prompts. To that end, we showed the six participants another academic library’s website that used the same chat widget, LibChat, with the feature enabled. When asked, “What do you think of this automatic chat prompt?” four participants responded positively and two responded neutrally. One participant mentioned that it would be “one more thing for me to close.” However, when comparing the proactive features to our own implementation, five of the six preferred proactive prompts to a chat system that did not prompt patrons automatically. Following the study, we decided to enable proactive features and move the chat widget to the bottom-right corner of the viewport.

Study 2: Determining the Appropriate Label for the Chat Button

Like many libraries before us, we launched our new chat system with “Ask a Librarian” as the button label. To find out whether that label was related to the recurring asynchronous, email-like chat messages we received from patrons, we explored it through surveys and further usability testing. In 2022, we sent a screener survey to all Institute students and faculty.

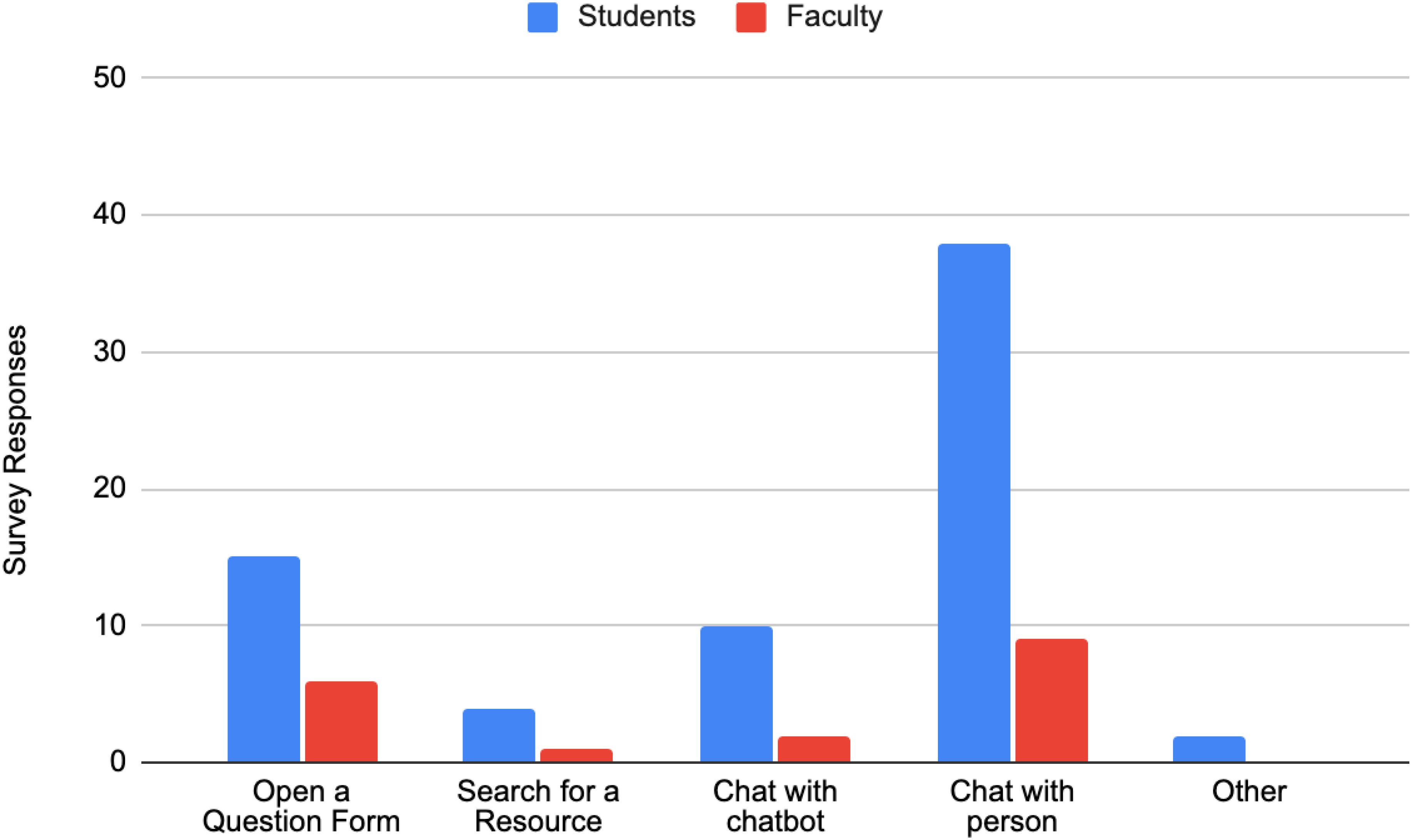

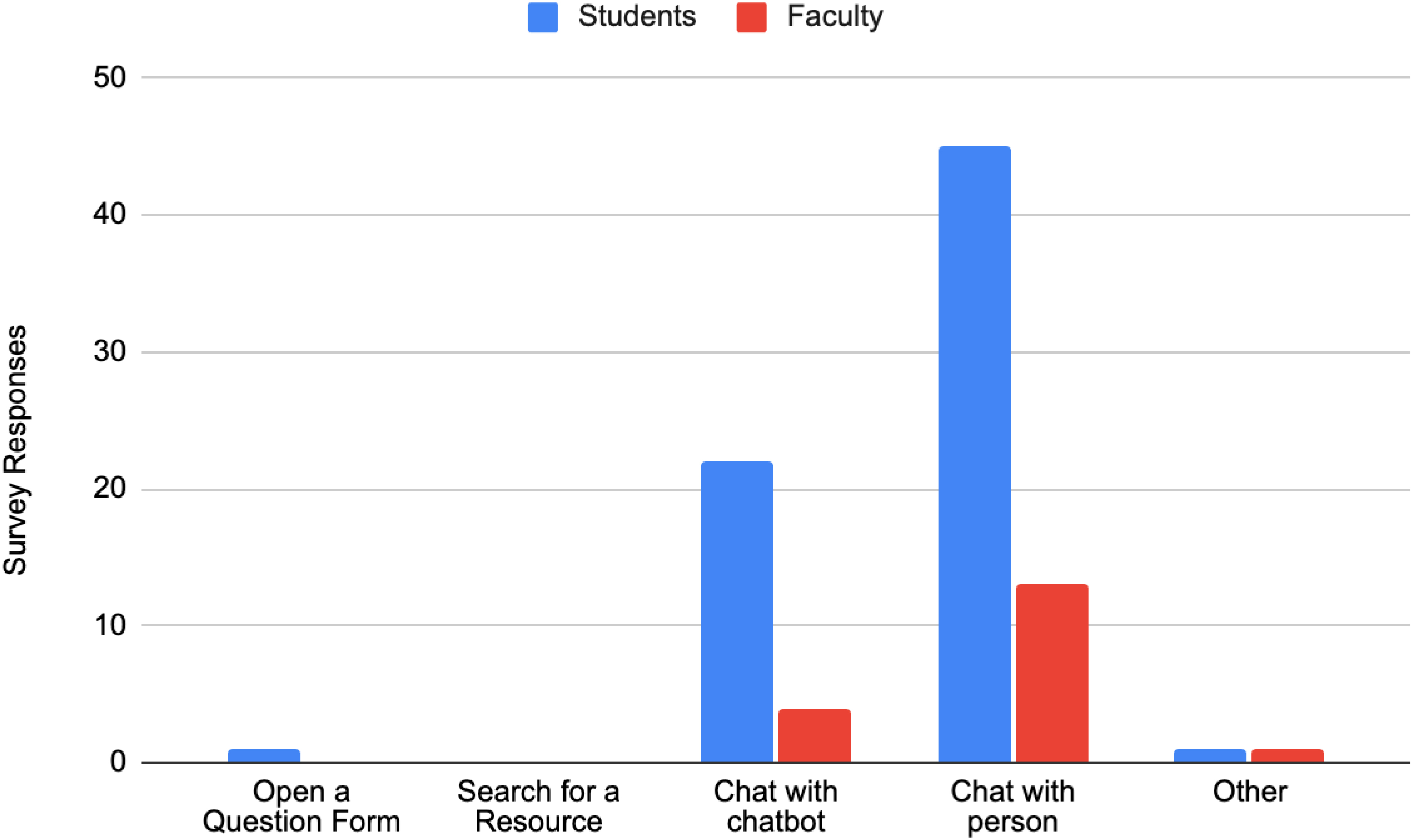

Alongside the standard screener questions, we presented two image variations of the yellow chat widget button, one labeled “Ask a Librarian” and the other labeled “Live Chat.” Beneath each image, we asked: “Imagine that you see this button on the homepage of the Libraries’ website. What do you think it does when you click on it?”

Of the sixty-nine students who responded to the survey, fifteen thought “Ask a Librarian” would open a question form, whereas only one thought “Live Chat” would. Similarly, of the eighteen faculty who responded, six thought “Ask a Librarian” would open a question form and none thought “Live Chat” would. One faculty member selected “other” and entered that they were “not sure” what clicking on “Live Chat” would do (see figs. 3 and 4).
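Because the student and faculty groups differ in size, the counts above are easier to compare as percentages. The following minimal Python sketch does that conversion; it assumes every respondent answered both label questions, so each group's total serves as the denominator. The dictionary and the `question_form_share` helper are hypothetical names introduced for illustration.

```python
# Screener-survey counts reported above: respondents in each group who said the
# button would open a question form, by label. Assumes every respondent answered
# both label questions, so the group total is the denominator for each label.
QUESTION_FORM_COUNTS = {
    "students": {"total": 69, "Ask a Librarian": 15, "Live Chat": 1},
    "faculty": {"total": 18, "Ask a Librarian": 6, "Live Chat": 0},
}


def question_form_share(group: str, label: str) -> float:
    """Percentage of `group` that expected `label` to open a question form."""
    counts = QUESTION_FORM_COUNTS[group]
    return counts[label] / counts["total"] * 100


# Print one row per group and label, e.g. "students Ask a Librarian  21.7%".
for group in QUESTION_FORM_COUNTS:
    for label in ("Ask a Librarian", "Live Chat"):
        print(f"{group:<9}{label:<17}{question_form_share(group, label):5.1f}%")
```

Under that assumption, roughly 22 percent of students and 33 percent of faculty associated “Ask a Librarian” with a question form, compared with about 1 percent of students and no faculty for “Live Chat.”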

From the eighty-seven respondents, nine participants were selected for testing: two undergraduate students, four graduate students, and three faculty members. Of the three tasks in this study, one was dedicated to interacting with the chat widget, which was labeled “Live Chat” for testing. Starting from the homepage, we asked participants where they would go to receive a librarian’s help with a complex task they did not know much about. Six of the nine engaged with the chat widget. Five of the nine did not interact with it immediately: two engaged in chat only after spending time scrolling or navigating the site, and three did not interact with the widget at all, settling instead for alternative ways of contacting a librarian, such as booking a Zoom appointment. Of the six who engaged with the chat widget, two opened the chat when prompted by the proactive pop-up, and the other four opened it from the “Live Chat” button in the bottom-right corner of the screen.

Discussion

Following the first study, we enabled proactive chat features, and our chat engagement has grown substantially. In 2020, before proactive features were enabled, we had a total of 1,753 chats. In 2021, after enabling them, this rose to 2,171 chats, an increase of 418. However, we gathered these statistics during a global pandemic, throughout which we necessarily provided the vast majority of our services and resources online.
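Expressed as a relative change, the growth from 2020 to 2021 works out as follows. This is simple arithmetic on the reported totals and does not, by itself, separate the effect of proactive features from pandemic-era demand for online services.

```latex
\frac{2171 - 1753}{1753} = \frac{418}{1753} \approx 0.238
\qquad (\text{an increase of about } 23.8\%)
```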

A further complication is that, based on the study, we moved the widget to the bottom right at the same time that we enabled proactive features. As a result, the increase in numbers alone cannot be attributed to either change specifically. As research has shown, many variables motivate users to engage with online chat (McLean & Osei-Frimpong, 2019). In any case, Budiu (2019) recommends the bottom-right corner as the conventional placement for floating customer-service chat buttons, and we were glad to see that our study participants supported this recommendation.

Our participants were also largely amenable to the proactive features. Our results join a growing body of research indicating that proactive chat is not as off-putting or annoying as librarians may be inclined to think.

Regarding the second study, the screener survey results show a marked difference in patron expectations between the “Ask a Librarian” and “Live Chat” button labels. This may seem obvious, but it highlights the importance of plain, clear language for call-to-action buttons. Budiu (2019) specifically advises, “Do Not Hide Chat Under Vague Labels such as Ask a Question”; such labels can confuse patrons and lead to misunderstandings. On the one hand, more students and faculty associated “Ask a Librarian” with question forms. On the other hand, more students and faculty associated “Live Chat” with chatbot interactions, which perhaps reflects their prior experiences with chat services on other websites in recent years. Additional research is needed to determine whether a more accurate label exists.

The screener data gave us useful information, but the test results of the second study are less conclusive. We were pleased that most participants opted to use the chat button, and that some were directly prompted by the proactive pop-up, which supports our decision to enable that feature. However, it concerns us that three participants did not interact with the widget at all. More research is needed to fully understand this segment of our users.

As of this writing, we have not made the switch from “Ask a Librarian” to the “Live Chat” label, but we will explore this possibility for future updates. In addition, we aim to further examine how to make our chat widget more noticeable and understand why some may miss it entirely.

Conclusion

Ultimately, our two studies suggest that our patrons prefer a library chat widget placed at the bottom right of the website viewport with proactive pop-up features enabled. Determining the ideal button label, however, requires more research. “Ask a Librarian” is a popular label for library chat widgets, but our screener survey suggests that patrons can mistake it for an asynchronous question form.

Acknowledgements

I would like to thank all of the students who conducted usability testing in support of improving our website.

Study 1: Ben Gross, Viola Li, Mingqi Rui, and Gabrielle Belli.

Study 2: Isik Erturk, Kyle Oden, and Priyanka Gangwal.

Special thanks also to the professors who have taught INFO 644 and collaborated with us over the years, Craig MacDonald and Elena Villaespesa.

Appendix

Study 1 Pre-task Questions

Are you located in the NYC/metro area, or out of state?

Where do you normally access the library website from (physical location)?

What device do you most often use to access online library services?

How would you rate your digital literacy skills?

What do you typically use the library for? (e.g., read magazines, personal books, academic research, your job)

How many minutes/hours do you typically spend using library resources (online and in-person) a week?

Study 1 Post-task Questions

Path A: Did Not Interact with Chat Widget

Do you have any other ideas on how you might find information from Pratt on this topic?

Did you consider seeking help from a librarian?

(IF NO) Do you think this study environment influenced you not to seek help?

Did you notice the “Ask a Librarian” button on the right side of the window?

(IF YES) Did the button’s text help you understand what it does? (labeling issue?)

(IF YES) Did you consider trying this service to find information about Augustus Cardinham?

(IF NO) What would make the button more noticeable for you? (position, text label, etc.)

Path B: Interacted with Chat Widget

What do you think the colored dot on the “Ask a Librarian” button means?

What do you expect clicking on the button will do? (If the participant already clicked it: What did you expect it would do?)

Did you expect a separate browser window like this to open?

Is there another place you’d prefer a chat box like this to appear? Why?

Would you rather enter your email, or leave that field blank? Why?

If you knew that providing your email was so that the librarian could reconnect to you in case the chat got disconnected, would you leave your email, or leave the field blank?

Do you think you’re going to chat with a real person or a chatbot?

Viewing Competitor Library Chat Widget

What do you think of this automatic chat prompt?

Do you prefer getting a prompt like this vs. the Pratt Library chat method?

How would you feel if this automatic chat prompt only appeared once, or very infrequently?

Study 2 Screener Questions

Faculty

Which department do you work in?

How often do you visit/use Pratt Library’s website?

Which devices do you use to access Pratt Libraries?

What do you use the website mostly for?

Imagine that you see this button on the homepage of the Libraries’ website. What do you think it does when you click on it? (Ask a Librarian)

Imagine that you see this button on the homepage of the Libraries’ website. What do you think it does when you click on it? (Live Chat)

Students

What year do you graduate?

How often do you visit/use Pratt Library’s website?

Which devices do you use to access Pratt Libraries?

What do you use the website mostly for? Check all that apply.

Imagine that you see this button on the homepage of the Libraries’ website. What do you think it does when you click on it? (Ask a Librarian)

Imagine that you see this button on the homepage of the Libraries’ website. What do you think it does when you click on it? (Live Chat)

Study 2 Pre-task Questions

Please sign the form by choosing one of the options.

Please share your full name and pronouns.

Which profile suits you the best?

Please share your highest level of education along with the name of the degree.

Which of the following best describes your comfort with general technology changes on interfaces you work with often?

Lastly, how would you rate your experience on the current Pratt website from 0 to 5? (0 is very bad, 1 is quite bad, 2 is somewhat bad, 3 is somewhat good, 4 is quite good, and 5 is very good.)

Study 2 Post-task Questions

Task 1:

Were you able to complete the task successfully?

How long did you take to work on this task?

How would you rate the experience of completing this task on a scale of 0 to 5? (0 to 5, where 0 is very bad, 1 is quite bad, 2 is somewhat bad, 3 is somewhat good, 4 is quite good, and 5 is very good.)

Task 2:

Were you able to complete the task successfully?

How long did you take to work on this task?

How would you rate the experience of completing this task on a scale of 0 to 5? (0 to 5, where 0 is very bad, 1 is quite bad, 2 is somewhat bad, 3 is somewhat good, 4 is quite good, and 5 is very good.)

Task 3:

Were you able to complete the task successfully?

How long did you take to work on this task?

How would you rate the experience of completing this task on a scale of 0 to 5? (0 to 5, where 0 is very bad, 1 is quite bad, 2 is somewhat bad, 3 is somewhat good, 4 is quite good, and 5 is very good.)

References

Bedwell, L. (2009). Making chat widgets work for online reference. Online, 33(3), 20–23. https://dalspace.library.dal.ca/handle/10222/45253

Bell, S. J., & DeVoe, K. M. (2008). Chat widgets: Placing your virtual reference services at your user’s point(s) of need. The Reference Librarian, 49(1), 99–101. https://doi.org/10.1080/02763870802103936

Budiu, R. (2019, January 13). The user experience of customer-service chat: 20 guidelines. Nielsen Norman Group. https://www.nngroup.com/articles/chat-ux/

Chow, A. S., & Croxton, R. A. (2014). A usability evaluation of academic virtual reference services. College & Research Libraries, 75(3), 309–361. https://doi.org/10.5860/crl13-408

Frantz, P., & Westra, B. (2010). Chat widget placement makes all the difference! OLA Quarterly, 16(2), 16–21. https://doi.org/10.7710/1093-7374.1282

Fruehan, P., & Hellyar, D. (2021). Expanding and improving our library’s virtual chat service: Discovering best practices when demand increases. Information Technology and Libraries, 40(3). https://doi.org/10.6017/ital.v40i3.13117

Hervieux, S. (2021). Is the library open? How the pandemic has changed the provision of virtual reference services. Reference Services Review, 49(3/4), 267–280. https://doi.org/10.1108/RSR-04-2021-0014

Imler, B. B., Garcia, K. R., & Clements, N. (2016). Are reference pop-up widgets welcome or annoying? A usability study. Reference Services Review, 44(3), 282–291. https://doi.org/10.1108/RSR-11-2015-0049

Kemp, J. H., Ellis, C. L., & Maloney, K. (2015). Standing by to help: Transforming online reference with a proactive chat system. The Journal of Academic Librarianship, 41(6), 764–770. https://doi.org/10.1016/j.acalib.2015.08.018

McLean, G., & Osei-Frimpong, K. (2019). Chat now… Examining the variables influencing the use of online live chat. Technological Forecasting and Social Change, 146, 55–67. https://doi.org/10.1016/j.techfore.2019.05.017

Nielsen, J. (2012a, June 3). How many test users in a usability study? Nielsen Norman Group. https://www.nngroup.com/articles/how-many-test-users/

Nielsen, J. (2012b, January 15). Thinking aloud: The #1 usability tool. Nielsen Norman Group. https://www.nngroup.com/articles/thinking-aloud-the-1-usability-tool/

Pyburn, L. L. (2019). Implementing a proactive chat widget in an academic library. Journal of Library & Information Services in Distance Learning, 13(1–2), 115–128. https://doi.org/10.1080/1533290X.2018.1499245

Rich, L., & Lux, V. (2018). Reaching additional users with proactive chat. The Reference Librarian, 59(1), 23–34. https://doi.org/10.1080/02763877.2017.1352556

Tennant, R. (2003, January). Revisiting digital reference. Library Journal, 128(1), 38–40.

Wells, C. A. (2003). Location, location, location: The importance of placement of the chat request button. Reference & User Services Quarterly, 43(2), 133–137.