Among the growing suite of initiatives that many centers for teaching and learning (CTLs) offer are course design institutes (CDIs). Unlike a single or episodic workshop, and unlike a faculty learning community, the CDI is an intensive, multiday initiative that guides instructors through the process of (re)designing a course. Central to most CDIs are research-informed perspectives reflecting backward and integrated course design principles (e.g., Fink, 2013; Hansen, 2011; Wiggins & McTighe, 2005). In an environment supported by peer leaders, faculty participants work through the recursive, interwoven processes of articulating course goals and student learning outcomes, crafting assessments that evidence student learning, and creating high-impact learning activities. Consistent with evaluation and assessment frameworks such as that of Kreber and Brook (2001), educational developers may anticipate CDI impact at six possible levels: participants’ perceptions, participants’ beliefs about teaching and learning, participants’ teaching performance, students’ perceptions of teaching, students’ learning, and institutional culture.

In an academic milieu in which national organizations and institutions of higher education tout the benefits of high-impact practices (HIPs) for student learning (Kilgo et al., 2015; Kuh, 2008), educational developers may reasonably ask whether comparable HIPs also exist for faculty, and whether facets of CDI impact parallel those experienced by undergraduates immersed in HIPs. To date, few studies in educational development have examined this possibility; as Carpenter et al. (2017) asserted, “The relationship between Kuh’s HIPs and faculty development is not represented extensively or in much detail in the scholarly literature” (p. 8). Carpenter et al. further argued for a deeper link between educational development and HIPs, pointing to opportunities to craft programs for faculty, such as learning communities, that mimic those that work so well for students.

Eight characteristics of HIPs, advanced by Kuh et al. (2013), may be “easily applied to or used as a framework for faculty development” (Carpenter et al., 2017, p. 9): high performance expectations, investment of time and effort, substantive peer and faculty interactions, experiences with diversity, constructive and timely feedback, opportunities to reflect upon and integrate learning, relevance and real-world application, and public demonstration of competence. Given the similarities between these tenets and those guiding immersive educational development experiences, such as many CDIs, it is reasonable to conjecture that such initiatives may culminate in positive impacts on faculty. Nonetheless, scant evidence affirms an enduring, beneficial effect of CDIs on participants, and no studies acknowledge potential costs and limitations. Thus, our study meets an important need, offering insight into the multifaceted gains and limiting factors influencing faculty beliefs about teaching and learning as well as their perceptions of teaching performance.

Literature Review

We begin our review of the literature with an introduction to the CDI as an instructional intervention for faculty, focusing on studies that sensitized and informed our specific inquiry into the impact of CDIs on faculty learning, growth, and development. From there, we delve into the literature on motivation, which provides theoretical insight into both benefits and costs (i.e., time, effort) of engagement in multiday interventions such as CDIs.

CDI Impact

Gravett and Broscheid (2018) detailed models and genres of educational development, noting the versatile expanse of programming available. Drawing from the work of Hurney et al. (2016), Gravett and Broscheid contended that the specific type, format, or approach utilized (e.g., consultation, workshop) must be nested in faculty learning outcomes that also support student learning outcomes and, plausibly, other institutional or community goals. Should an instructor wish to develop a new service-learning course for undergraduates that necessitates a long-term community partnership, for instance, a short, one-time workshop may be ineffective (Gravett & Broscheid, 2018). Instead, “if the desired outcomes of faculty development … revolve around lasting changes, then more extended, immersive programs may be needed” (p. 100). Institutes provide such depth, typically committing participants to a multiday structure in which they work both individually and with teams on a common aim (e.g., course design).

With support from educational developers, peer mentors, and sometimes student consultants, CDIs specifically “aim to help instructors create rich, active, supportive classroom environments grounded in evidence-based practices; expand their pedagogical content knowledge; become reflective practitioners; and foster teaching community and personal growth” (Palmer et al., 2016, p. 1). Research on the extent to which CDIs promote development along these lines, however, is underrepresented in the literature. In a meta-analysis of 138 studies on the impact of educational development, Chism et al. (2012) reviewed 49 studies on institute efficacy. These studies largely report participant satisfaction and perceptions; for institutes of one day or more, findings pointed to “positive effects on teaching attitudes and changes in teaching practices” (p. 135). Chism et al. also located 20 studies on formal courses in teaching. With some exceptions, this body of research documents the impact on participants’ thinking about teaching but offers little insight into other levels of impact.

More recently, Palmer et al. (2016) published the first systematic study of CDIs—one that richly informs our awareness of short- and long-term impact. Employing a multifaceted, mixed methods approach, they analyzed post-institute satisfaction and perception data, pre- and post-institute surveys on pedagogical confidence, pre- and post-institute self-report tools probing ability to craft learning-focused courses, and pre- and post-institute syllabi. Situating their work in Kreber and Brook’s (2001) impact model, the researchers sought to ascertain multiple points of impact beyond that of participant satisfaction alone. Salient takeaways included the following: participants learned the basic principles of course design, pedagogical confidence (i.e., participant beliefs about teaching and learning) was bolstered, and instructors’ syllabi (post-CDI) favored learning-focused components over content-centric elements (Palmer et al., 2016).

Research into participant perception and satisfaction, beliefs and attitudes, and post-institute applications adds significant value to our understanding of the impact of CDIs on educational development. Absent from the nascent literature on CDIs, however, is a focus on how the benefits and costs of institute engagement intersect to shape faculty outcomes. As Gravett and Broscheid (2018) incisively noted, “Crafting high-level, applied, authentic learning experiences may be a daunting task for faculty” (p. 87). Designing and implementing a new course does not happen in a vacuum; consider the array of other activities vying for a faculty member’s attention (e.g., research development and grant procurement, promotion and tenure, service and outreach expectations). It is therefore worth asking whether the positive impacts of CDIs are sustainable and what limitations, if any, accrue for participants.

Motivation Theory

Motivation theory provides a lens through which we can begin to make meaning of this quandary. Although theories of motivation abound, the work that has been done in educational development does not offer a theoretical framework for understanding faculty motivation to participate in a CDI (Lowenthal et al., 2013). Of the range of available options, expectancy-value (EV) models of motivation stand out “for their ability to synthesize multiple theoretical perspectives, capture the key components of what motivates an individual, and explain a wide range of achievement-related behaviors” (Barron & Hulleman, 2015, p. 1). Furthermore, Eccles et al.’s (1983, as cited in Flake et al., 2015) EV model of motivation has been widely used to understand learner experiences (e.g., academic choices, performance behaviors, student attitudes) in an educational context. In the case of CDIs, faculty participants are the learners of interest, rendering EV a suitable lens through which we may understand facets of the faculty experience of CDIs.

A recent amendment to EV theory is Hulleman and Barron’s (2015) expectancy-value-cost (EVC) theory of motivation. EVC theory focuses on three factors central to a comprehensive understanding of overall motivation: expecting that one can succeed at a task (i.e., expectancy), placing value on that task (i.e., value), and recognizing the costs associated with that task (i.e., cost). Although the original EV theory treated cost as a subcomponent of value, Hulleman and Barron theorize that cost is a third central factor necessary to understanding motivation. To date, however, cost remains undertheorized, and little empirical measurement has been done in this area (Flake et al., 2015).

Overview of the Model

There are four important features of the EVC model of motivation. First, the model is psychological, grounded in participants’ perceptions and interpretations. How much actual skill a participant has for performing a particular task matters far less than how that individual answers the question, “Can I do the task?” In other words, a participant’s beliefs are more relevant to understanding motivation than their actual ability. Second, the model is developmental; it asserts that the factors intersecting to create motivation can be shaped over time by personal characteristics, experiences, and context. Third, the model treats motivation as additive; per Hulleman and Barron (2015), Motivation = E + V − C. Lastly, the model synthesizes multiple perspectives to explain a wide range of participant achievements and attitudes comprehensively.
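Written as a display equation, the additive EVC model and the change in motivation it implies between two time points (for example, a pretest and a posttest) can be sketched as follows. The difference form is our own illustration, not part of Hulleman and Barron’s (2015) presentation; the subscripts are our notation, and the step assumes E, V, and C are expressed on a common scale so that they can be added at all.

```latex
M = E + V - C
\quad\Longrightarrow\quad
\Delta M = M_{\text{post}} - M_{\text{pre}} = \Delta E + \Delta V - \Delta C
```

Read this way, a rise in value and a rise in cost push overall motivation in opposite directions, and the net change depends on their relative sizes.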

Expectancy (E) suggests that a learner who believes they can do something is more likely to be motivated to engage in a related behavior or task. Thus, a faculty member who expects to succeed in redesigning a course may be motivated to take part in an intensive institute. The second concept, value (V), means that those who value a task are more motivated to do what it takes to achieve it. Faculty who do not value pedagogical innovation, or who see no reason to alter the status quo, are unlikely to register for a CDI. However, participants who receive compensation for engaging in a CDI may be motivated, chiefly or peripherally, by a financial incentive.

The last factor is cost (C): the higher the costs associated with a task are, the lower the participant’s motivation will be. Four types of cost are theorized (Hulleman & Barron, 2015). The first, task difficulty, is the amount of effort required. Take, for example, efforts associated with designing transparent, performance-based assignments that engage students. Effort-unrelated costs reduce motivation due to the amount of time, energy, or resources that a faculty member has to expend on other tasks, such as publishing research or caring for children. The third, opportunity cost, is the loss of engaging in valued alternatives due to participating in the targeted task (e.g., missing an exercise class due to time spent on course prep). Lastly, there are negative psychological consequences, such as fatigue experienced after completing a CDI and realizing the time-consuming need to redesign other courses.

Assessing each factor within the model individually is necessary to understand which one is driving a participant’s motivation. For example, a faculty member experiencing high cost and low motivation because of competing demands, such as completing a tenure dossier, may need different support than one who holds a fixed, deterministic mindset about teaching. Although the three factors are intended to be used together within the comprehensive framework of gauging motivation, it is important to distinguish the contribution that each makes to shaping participant motivation.

Methodology

Approved by our institutional review board (IRB), this study employed a pre-post time series design across multiple summer offerings of a CDI that transpired at a public, regional institution classified by Carnegie as a master’s comprehensive. The purpose of this study was to investigate the impact of the CDI on faculty members’ development—with particular attention to benefits and limiting factors associated with the construct of motivation as contextualized by expectancy, value, and cost (Hulleman & Barron, 2015). This intent is consistent with the second facet of Kreber and Brook’s (2001) model: exploration of participants’ beliefs about teaching and learning.

Intervention

Each summer our CTL offers an intensive CDI. As is common with most of our CTL programs, participants generally find out about the CDI through our weekly all-faculty email digest, which provides information on upcoming initiatives and faculty resources. Through a regional network, we also invite instructors from nearby and regional institutions (e.g., those without CTLs) to participate free of charge. Participants apply to the CDI, stating their rationale for attending the institute and documenting their hoped-for outcomes. Nearly all applicants are accepted into the institute; those who fail to complete the application (or who are unable to commit to the full week) may be denied a spot. Faculty do not receive stipends to attend.

The curriculum focuses on investigating the situational factors influencing student learning; attending to student motivation, engagement, and development; developing course goals and student learning outcomes; creating assessments that align with outcomes; and creating engaged pedagogies and learning activities. The institute meets for five full (i.e., 7- to 8-hour) consecutive days. Approximately 20 participants are divided into groups of four to six, each guided by a small-group faculty facilitator. Lead facilitators teach key elements using an array of evidence-based pedagogies. Readings and activities assigned as homework (e.g., Fink, 2013; Hansen, 2011) enrich participants’ progress and model learner-centered design.

Between sessions, participants complete a daily deliverable; these assessments culminate in a course portfolio that is presented in a gallery format on the final day of the institute. For most participants, the portfolio represents a full unit, module, or week of instructional content (i.e., outcomes, in-class and out-of-class assessments, and activities). In rare cases, participants are able to showcase pedagogy, activities, and assessments for an entire course. Notably, our CTL has developed and published a manualized curriculum for the institute, which allows for consistency across institutes and provides participants with review materials after the institute. All facets of the institute curriculum, ranging from what transpires daily to the associated rationale and research bases, are made transparent to participants. This transparency allows participants to continue working on their courses after the institute and to apply what they have learned to future course development.

Instrument

To understand the full impact of our CDI, we developed a web-based instrument that included a battery of measures composed of existing or adapted published instruments with sound psychometric properties (see Table 1). We chose this method in lieu of developing unique measures, which entails a lengthy process of content and construct validation and pilot testing. Once our battery was compiled, we invited a cadre of faculty developers, general faculty, and doctoral-level assessment and measurement experts to pilot the instrument. This allowed us to ascertain the amount of time one might expect to spend taking the survey (i.e., 15–25 minutes) as well as to ensure questions were both clear and answerable.

Measures Utilized in CDI Assessment Instrument

| Construct | Original measure | Citation | Number of questions |
|---|---|---|---|
| Metacognition | Metacognitive Awareness Inventory | Schraw & Dennison, 1994 | 25 |
| Motivation | Attitudes Toward Teaching Scale | Hulleman & Barron, 2012; Hulleman & Schiefele, 2010; Hulleman & Springer, 2010 | 30 |
| Reflection | Rumination-Reflection Questionnaire | Trapnell & Campbell, 1999 | 7 |
| Relationship with students | Academic Setting Evaluation Questionnaire | Fernández et al., 1995 | 6 |
| Sense of belonging | Sense of Belonging Scale | Bollen & Hoyle, 1990 | 9 |

For the purposes of this article, analyses and interpretations center on findings related to faculty motivation as measured by the Faculty Attitudes Toward Teaching Scale (Hulleman & Barron, 2012; Hulleman & Schiefele, 2010), which was adapted from the Teacher Interest Survey (see Schiefele, 2009; Schiefele et al., 1993, 2013). The 30-item adaptation comprises six subscales: expectancy (e.g., I am confident that I can get students to learn), value (e.g., Teaching is a worthwhile career), costs (e.g., Teaching requires me to make sacrifices in other areas of my life), pedagogical interest (e.g., I like learning about new teaching methods), student interest (e.g., Working with students is one of the most enjoyable aspects of teaching), and subject-matter interest (e.g., The topics I get to teach are personally important to me). A complete list of items can be found in the appendix. Responses were recorded on an 8-point Likert scale (ranging from completely disagree to completely agree) and converted to numeric scores (Table 2). Because all costs items are negatively worded, their scores were reversed in sign, so that a lower costs score indicates higher perceived cost.

Likert Scale Response Anchors

| Numeric score | Response* |
|---|---|
| 4 | Completely agree |
| 3 | Strongly agree |
| 2 | Agree |
| 1 | Slightly agree |
| −1 | Slightly disagree |
| −2 | Disagree |
| −3 | Strongly disagree |
| −4 | Completely disagree |

* For “costs” subscale these are reversed.
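The conversion in Table 2, including the sign reversal for the costs subscale, amounts to a small lookup. The following is a minimal sketch: the response strings and the function names `score_item` and `subscale_mean` are hypothetical, and averaging item scores within a subscale is our assumption about how subscale scores were formed.

```python
from __future__ import annotations

# Numeric anchors from Table 2; the 8-point scale has no neutral midpoint,
# so no response maps to 0. The label strings are hypothetical.
ANCHORS = {
    "completely agree": 4,
    "strongly agree": 3,
    "agree": 2,
    "slightly agree": 1,
    "slightly disagree": -1,
    "disagree": -2,
    "strongly disagree": -3,
    "completely disagree": -4,
}


def score_item(response: str, subscale: str) -> int:
    """Convert one Likert response to its numeric score.

    Costs items are negatively worded, so their sign is flipped: agreeing
    that teaching is costly produces a negative score.
    """
    score = ANCHORS[response.strip().lower()]
    return -score if subscale == "costs" else score


def subscale_mean(responses: list[str], subscale: str) -> float:
    """Average one participant's item scores on one subscale (assumed method)."""
    scores = [score_item(r, subscale) for r in responses]
    return sum(scores) / len(scores)


print(subscale_mean(["Agree", "Strongly agree"], "value"))  # 2.5
print(subscale_mean(["Agree", "Strongly agree"], "costs"))  # -2.5
```

Because of the sign flip, identical agreement produces opposite scores on the value and costs subscales, which is why a decrease in costs scores reads as an increase in perceived cost.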

Participants were invited to complete the instrument immediately before the program (pretest) and during implementation of their (re)designed courses (posttest). The pretest provided baseline data, whereas the posttest captured participants’ longitudinal development. Specifically, the posttest was deployed three-quarters of the way into the (re)designed course, allowing each instructor to judge accurately the ease or difficulty of implementing it. We also expected more candid responses at this point than at the end of the course, a period that is especially taxing for faculty members. Comparing pretest and posttest scores thus allowed us to measure change across the full span of the intervention, from institute to implementation.

Participants

Across five separate iterations of our CDI, 112 instructional faculty took part. All but 13 of these individuals worked for our university, which enrolls approximately 20,800 undergraduates and 1,900 graduate students. At the time of the study, the average class size was 25, with 88% of classes having fewer than 50 students, and only 2% of courses were taught by graduate students or staff. The CDI participants not employed by our institution came from regional, teaching-focused colleges and universities.

Other than college or disciplinary affiliation, we did not collect data on participant demographics (e.g., gender, race/ethnicity, rank). The largest share of participants (28%) came from the humanities and social sciences (a group that is already proportionately large at our university), followed by the behavioral and health studies disciplines (16%), computer science and engineering (14%), mathematics and sciences (14%), and business (11%). Less represented were faculty members from education (6%) and the visual and performing arts (1%). Participants affiliated with other branches of the university (e.g., Student Affairs, Professional and Continuing Education) made up 9% of the participant pool.

Ninety-five individuals (84.8%) took the pretest, and 49 (43.8%) took the posttest. To preserve participant anonymity, we used unique identifiers to match cases across the time series; however, only 32 participants (28.6%) completed both surveys using the same unique identifier. One likely reason for the strong pretest response rate is that individuals could complete the pretest either online (i.e., before the institute) or at a computer provided during check-in on the institute’s first day. The posttest, by contrast, was distributed as an online link by email. Although the interventions were distinct with respect to the year in which they were offered and included some variability across annual facilitation teams, the manualized curriculum (i.e., CDI learning outcomes, content, pedagogies and activities, assessments) remained largely intact. A doctoral research assistant conducted an implementation fidelity analysis (Fisher et al., 2014; Smith et al., 2019), with affirmative results. This technique checks that what actually transpires within an instructional intervention is consistent with what was planned, strengthening the validity of our findings.
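Matching cases across the time series amounts to keeping only the identifiers that appear in both surveys. The sketch below assumes each survey export can be reduced to a mapping from identifier to subscale score; the function names `normalize_id` and `match_pairs`, and the case and whitespace normalization, are hypothetical choices rather than a description of the procedure used in this study.

```python
from __future__ import annotations


def normalize_id(raw: str) -> str:
    """Hypothetical cleanup: ignore case and surrounding whitespace."""
    return raw.strip().upper()


def match_pairs(pre: dict[str, float],
                post: dict[str, float]) -> list[tuple[str, float, float]]:
    """Return (identifier, pre_score, post_score) for identifiers in both surveys.

    If two raw identifiers normalize to the same key within one survey,
    the later entry overwrites the earlier one.
    """
    pre_n = {normalize_id(k): v for k, v in pre.items()}
    post_n = {normalize_id(k): v for k, v in post.items()}
    shared = sorted(pre_n.keys() & post_n.keys())  # set intersection of ids
    return [(k, pre_n[k], post_n[k]) for k in shared]


pre = {"ab12": 1.0, "CD34": 2.0, "ef56": 0.5}
post = {" AB12 ": 1.5, "gh78": 3.0, "cd34": 2.5}
print(match_pairs(pre, post))  # [('AB12', 1.0, 1.5), ('CD34', 2.0, 2.5)]
```

Normalizing identifiers is one way to recover pairs lost to trivial typing differences; it cannot recover participants who entered a genuinely different identifier at posttest.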

Analysis

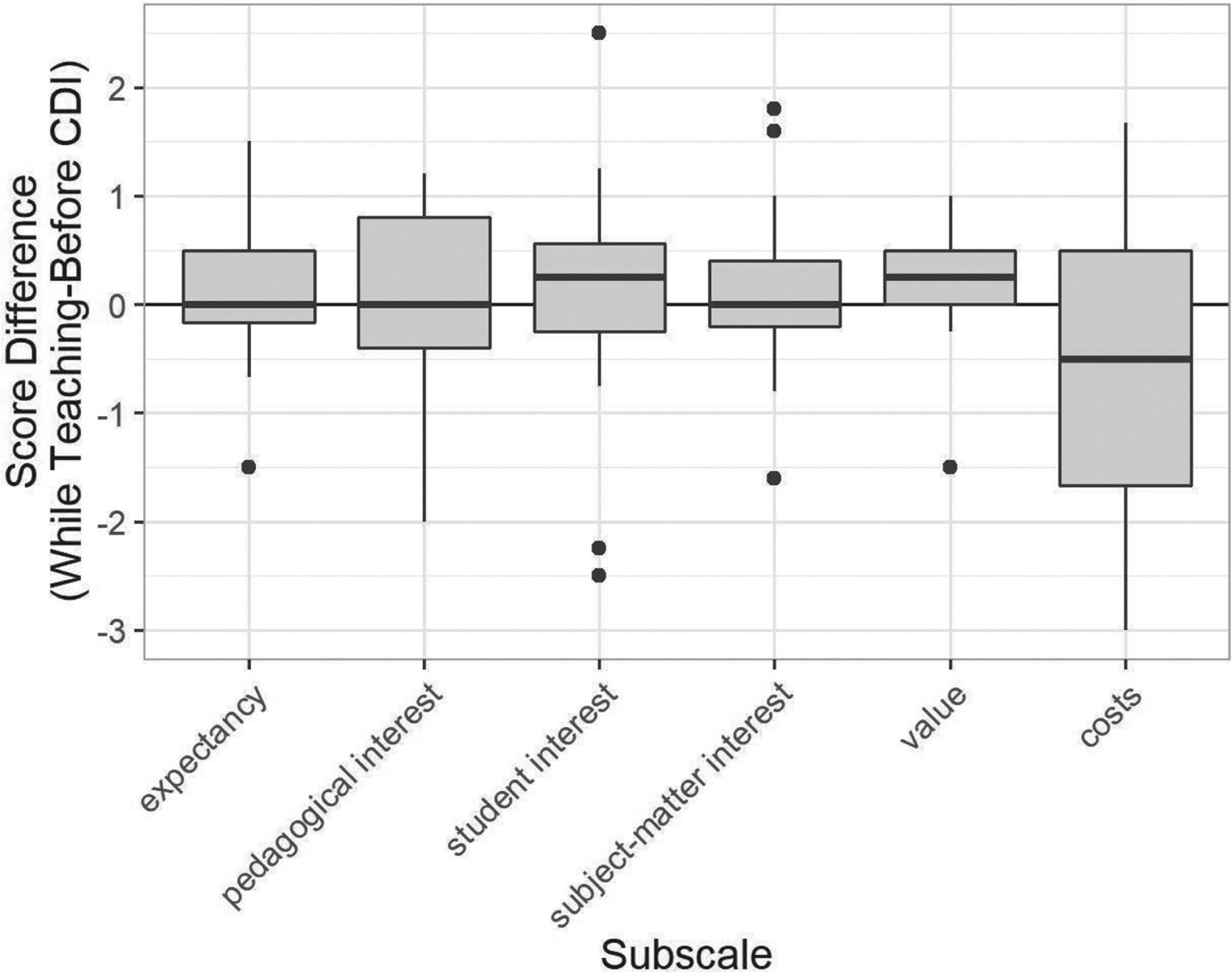

Quantitative data from the Faculty Attitudes Toward Teaching Scale were analyzed using R, a programming language and environment for statistical computing. Cronbach’s alpha was calculated for each subscale and was consistently high (.82–.87), suggesting good internal consistency of scores. Because diagnostic plots indicated that the data were non-normal, we employed the Wilcoxon signed-rank test, a nonparametric alternative to the dependent samples t test. For participants with complete data at both time points (n = 32), we carried out a matched-pairs, one-sided Wilcoxon signed-rank test on the difference between pretest and posttest scores. Applying this test to all available data (n = 95 for the pretest, n = 49 for the posttest) would violate its assumption of related samples or repeated measurements, because unmatched responses cannot be paired. Thus, results are reported only for the matched-pairs, one-sided test. Figure 1 and Table 3 summarize the findings.
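For readers who want to see what these two computations involve, they can be sketched in Python. This is not the analysis code used in the study: the function names are hypothetical, and the p-value below comes from the exact conditional (permutation) distribution of the signed-rank statistic, whereas R’s `wilcox.test` falls back to a normal approximation when ties or zero differences are present, so results may differ slightly. The `alternative` argument selects the direction of the one-sided test; the text does not state which direction was specified for each subscale.

```python
from __future__ import annotations

from statistics import variance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha for one subscale.

    `items` holds one list per item, each with one score per respondent:
    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores)).
    """
    k = len(items)
    totals = [sum(row) for row in zip(*items)]  # each respondent's total
    item_var = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_var / variance(totals))


def wilcoxon_signed_rank(pre: list[float], post: list[float],
                         alternative: str = "greater") -> tuple[float, float]:
    """One-sided Wilcoxon signed-rank test on the paired differences post - pre.

    Zero differences are dropped and tied |differences| get average ranks.
    The p-value is exact, conditional on those ranks: every one of the 2**n
    sign assignments is equally likely under the null hypothesis.
    """
    # Round to absorb floating-point noise from averaged subscale scores.
    diffs = [d for d in (round(b - a, 10) for a, b in zip(pre, post)) if d != 0]
    n = len(diffs)
    order = sorted(range(n), key=lambda idx: abs(diffs[idx]))
    ranks = [0.0] * n
    i = 0
    while i < n:  # find each run of tied |d| and give it the average rank
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for m in range(i, j + 1):
            ranks[order[m]] = (i + j) / 2 + 1
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)

    # Average ranks are whole or half numbers, so doubled ranks are integers
    # and the null distribution of 2 * W+ can be built by subset-sum counting.
    counts = {0: 1}
    for r in ranks:
        step = round(2 * r)
        updated = dict(counts)
        for total, ways in counts.items():
            updated[total + step] = updated.get(total + step, 0) + ways
        counts = updated
    target = round(2 * w_plus)
    if alternative == "greater":  # H1: scores increased from pre to post
        hits = sum(w for t, w in counts.items() if t >= target)
    elif alternative == "less":  # H1: scores decreased from pre to post
        hits = sum(w for t, w in counts.items() if t <= target)
    else:
        raise ValueError("alternative must be 'greater' or 'less'")
    return w_plus, hits / 2 ** n


print(wilcoxon_signed_rank([0, 0, 0, 0, 0], [1, 2, 3, 4, 5]))  # (15.0, 0.03125)
```

With five all-positive differences, only one of the 32 equally likely sign patterns reaches the observed W+, which gives the p-value of 1/32 in the usage line.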

Matched Data Comparison: Difference in Post-participation and Pre-participation Scores

| Scale/subscale | Mean difference (standard deviation) | Wilcoxon p-value |
|---|---|---|
| Teaching attitudes | −0.02 (0.48) | 0.495 |
| expectancy | 0.13 (0.61) | 0.124 |
| pedagogical interest | 0.08 (0.76) | 0.237 |
| student interest | 0.12 (0.95) | 0.081 |
| subject-matter interest | 0.08 (0.68) | 0.29 |
| value | 0.17 (0.46) | 0.007* |
| costs | −0.57 (1.22) | 0.017* |

Results

For the paired data, findings were significant for the value subscale (M = 0.17, SD = 0.46, p = 0.007), indicating a significant increase in a core facet of motivation that influences faculty attitudes toward teaching. That is, participants’ self-reported value of teaching increased from baseline (i.e., before the CDI) to posttest (i.e., during the actual teaching of the course designed during the CDI). For the costs subscale, scores decreased significantly (M = −0.57, SD = 1.22, p = 0.017); because the items were reverse-scored, this decrease indicates that the perceived cost of teaching (e.g., workload, time, stress, sacrifice, expectations, demand) increased significantly between the pre- and post-institute data points. The expectancy and interest subscales did not yield significant findings, although the descriptive data indicate small positive changes (expectancy: M = 0.13, SD = 0.61; pedagogical interest: M = 0.08, SD = 0.76; student interest: M = 0.12, SD = 0.95; subject-matter interest: M = 0.08, SD = 0.68).

Limitations

Plausibly, participants represented a self-selecting group of faculty who were already more motivated (i.e., higher expectancy, higher value, lower cost) than their peers; this may also suggest a response bias among those completing the pre- and posttests. Although we employed a rigorous time series design using a psychometrically robust set of measures, this exploratory study lacked a control group against which outcome data could be compared. It is likely that all faculty experience changes in expectancy, value, and cost over time, perhaps at levels similar to or different from those of faculty in our CDI. Furthermore, our choice to focus the analysis on a smaller set of matched pairs means that those who responded to both surveys might have stronger feelings, be more engaged, or be more motivated than those who did not respond. In addition, because of anonymity, we were unable to describe responses by demographic characteristics (e.g., rank, gender). While the results may not be generalizable to all teaching faculty at similar institutions, the findings may be transferable to CTLs with established or developing CDIs (or similar teaching interventions) whose faculty participants have similar profiles.

Findings and Implications

This study is the first of its kind to examine the complex intersections between faculty motivation and the impact of CDIs. The results bear important implications not only for faculty but also for CTLs and educational developers. Furthermore, our findings present opportunities for additional investigation, particularly qualitative research. The data suggest that for faculty participants, expectancy (E) does not change significantly. Notably, the perceived value (V) of teaching, a positive factor, increases, yet so does cost (C), a negative factor. This creates an intriguing complication for faculty motivation to teach courses designed through a CDI experience: if expectancy stays the same while value and cost both increase, pulling motivation in opposite directions, what happens to faculty motivation overall? Empirically, what else do we need to know, through qualitative inquiry and advanced statistical methods (e.g., structural equation modeling), to learn more about the interactions among motivational facets? Practically, what unique interventions should educational developers consider before, during, and after the CDI?

In this study’s context, expectancy refers to the belief that one can capably teach a (re)designed course. Stated succinctly, faculty expect to do well teaching the courses they designed during the CDI, and that facet of motivation does not appear to falter three-quarters of the way through teaching the (re)designed course. That expectancy shows no significant change (and is, of note, already high at the pretest) is unsurprising given the self-selecting nature of faculty who choose to participate in CDIs. Like most CTLs, ours does not offer compulsory or externally mandated programs. Most participants attend because they are interested, intrigued, and motivated to craft or revise a course. The data suggest that they enter the institute with strong self-perceived expectancy and that this persists, even amid the cost barriers detailed below.

Value, which increased between the pretest and posttest, refers to the desire or “want” to teach. Those who value a teaching-related task (e.g., crafting lesson plans, creating assessments, delivering materials) are more motivated to do what may be necessary to achieve that task (Hulleman & Barron, 2015). As with expectancy, enhanced value bolsters overall faculty motivation for teaching. According to Barron (2018), there are a number of ways to increase the value of an academic course for students; these include appealing to personal, intrinsic, or situational interests; ensuring content is relevant to students’ lived experiences; expressing faculty enthusiasm for students and the material; engaging students through variety and novelty; and fostering positive relationships and a sense of belonging in the classroom. Our CDI curriculum explicitly introduces and discusses Barron’s ideas and encourages faculty to adopt them. We conjecture that the extent to which faculty develop learner-centered courses with these and other “value-boosting” interventions may also affect the value they place on the courses they design and facilitate. Fostering positive relationships and a sense of belonging in the classroom community, for instance, is not a one-way street; the effect on faculty may be multiplicative.

Regarding value, it is also worth noting that we know of few extrinsic motivators that would increase faculty self-reported scores over time. Faculty are not compensated for participating in our CDI, nor are they paid extra or offered release time for teaching new or redesigned courses. Occasionally, attendees receive incentives from their academic departments or colleges (e.g., recognition, awards, or professional development funds) for having engaged in the CDI, but this is rare. Further research, preferably mixed methods, is needed to assess which factors and lived experiences, intrinsic and extrinsic, contribute to value.

Teaching newly designed courses is significantly “costly” for faculty, potentially leading to burnout and fatigue. Cost is also problematic if we, as educational developers, want our faculty to remain motivated to teach using the principles espoused in the CDI, to transfer what they have learned to other courses, and to model this process for faculty colleagues. Recall that cost is theorized as four-dimensional (Hulleman & Barron, 2015). Faculty may navigate task difficulty, manifest in the effort required to design and implement a course in a new way. Effort-unrelated costs refer to the additional time, energy, or resources instructors expend on tasks other than teaching. For many faculty, opportunity costs may be the important things, personal and professional, that they sacrifice to keep the course on track. Psychological factors, such as anxiety, fear, or fatigue, also influence cost (Hulleman & Barron, 2015).

What Educational Developers Can Do

Among the many issues we have contemplated in studying faculty motivation are the temporal layers within which the phenomenon manifests. There are three phases in which a CDI (and, perhaps, similar teaching interventions such as learning communities) creates effort for faculty members. First, motivation shapes how faculty learn to design a new course; expectancy, value, and cost considerations are relevant before and throughout the CDI experience. Faculty members have to learn the principles of backward design, for instance, and build the skills necessary to apply and integrate pedagogical ideals. Although this phase was not the focus of our study, educational developers might attend to these considerations when designing CDI curricula, including the pre-institute experience (e.g., use of pre-institute surveys, methods of conveying expectations).

A second tier in which motivation challenges may accrue is course preparation, during which faculty, post-CDI, continue to apply principles learned in the CDI to tasks such as syllabus construction, assessment design, and pedagogical decision-making. Here, the faculty member is a practitioner, sometimes working alone, and no longer a learner within the supported, guided CDI context. A final layer, closely tied to the second, involves the effort of course delivery itself (i.e., the semester or quarter during which faculty members facilitate their courses amid an array of situational factors). Some factors may be anticipated, such as student demographics, and others less so, such as the unplanned shift of courses to online delivery in the wake of the COVID-19 pandemic.

That the faculty in our study expect to teach well, and continue to self-report prowess in this area over time, is not a finding to take for granted. Nor is this finding necessarily transferable to all centers, particularly at institutions classified or structured differently from ours, a master’s comprehensive university with a strong teaching focus. With this in mind, we have considered ways to adapt Barron’s (2018) strategies for bolstering student expectancy to the faculty experience. Expectancy is tied to ability and skill, which points to providing faculty with opportunities to practice and to receive specific (rather than general) feedback as they develop new courses. Here, encouragement from facilitators (i.e., educational developers and peers) is integral. As learners experience growth, development, and improvement, expectancy increases (Barron, 2018). An appropriate balance of challenge and support is also vital; difficulty should be matched to the learner’s skill level and accompanied by appropriate support (Barron, 2018). Pedagogically, such tenets, if not already present, can be woven thoughtfully into CDI curricula.

The finding that value toward teaching increased from pretest to posttest is one we recommend exploring qualitatively. Unlike some centers that offer post-institute learning communities to help faculty continue their work after the CDI (i.e., the kinds of interventions that strengthen value), ours does not provide this kind of structured support. It would seem that faculty benefit, just as students do, from applying and engaging with the pedagogical practices they have learned through the CDI. A variety of benefits of innovative teaching practices to faculty are documented in the literature, both refereed (e.g., Meixner, 2010, 2013) and gray (e.g., listservs, blogs), and they come from multiple disciplinary perspectives and methodologies. We imagine that offering faculty ongoing opportunities to reflect on their post-CDI teaching experiences with a community of peers (face-to-face or virtual, synchronous or asynchronous, informal or formal) will serve to enhance value.

Nevertheless, the initiatives that boost faculty expectancy and value (e.g., post-CDI workshops and learning communities) may themselves come at a cost to faculty, a concern that emerged from this study. Reflecting on the various pedagogical strategies introduced during our CDI, we recognize that many evidence-based practices, though strongly supported by the literature, are extremely time intensive for faculty to enact, particularly when an instructor is deploying a newly designed course for the first time. We recommend, for ourselves and others, being more strategic about teaching faculty efficiencies in course design: presenting “jewels” or “hacks” that facilitate student learning and the assessment of learning in novel ways. Transparent assignment design (see Palmer et al., 2018; Winkelmes et al., 2019) is among these gems, as are opportunities for faculty to embrace individual, peer, and community assessment (Fink, 2013). If course redesign does not attend to grading efficiency, the result may be idealized courses that cannot realistically be implemented, an outcome that could demoralize faculty and run contrary to the goals of course design.

The literature on threshold concepts applied to students, faculty, and educational developers (Bunnell & Bernstein, 2012; Kiely & Sexsmith, 2018; King & Felten, 2012; Meyer & Land, 2006) may provide an additional conceptual pathway to understanding and exploring faculty expectancy, value, and cost. Kiely and Sexsmith (2018) deftly wrote, “Learning of new threshold concepts is an important area for faculty development; because these moments are rarer for faculty, they imply greater dissonance and resultant metacognitive shifts” (p. 288). Like our students, faculty traverse the unknown as they learn, grow, and develop as teachers; at times, they encounter states of bewilderment, confusion, and difficulty (“stuck places”) that compromise their motivation to persevere. Educational developers are advised to use an array of tools to help faculty address and navigate such dissonance, reflecting critically on their assumptions and paving new pathways forward (Kiely & Sexsmith, 2018).

Departing from teaching itself, but no less crucial, is a reminder that emanates from the cost data: faculty are whole people, navigating costs to teaching on a daily, sometimes hourly, basis. Such costs (e.g., the need to finalize a manuscript for publication, the responsibility to serve on committees, the challenge of applying for promotion amid a hefty teaching load, and the disproportionate service loads shouldered by faculty of color) are also facets of the “whole” faculty experience to which CTLs may pay greater attention. It is possible that course design is more appropriate for faculty during certain phases of their careers and less so at other times. Furthermore, teaching with innovative methods, particularly time-intensive ones, may compromise faculty members’ ability to balance other tasks central to their professional work. For these reasons, centers are also called upon to help faculty manage expectations about course design efforts relative to their other priorities. To this end, we are inspired by the nascent movement of centers like our own toward career planning programming that helps faculty make wise choices about their time, portfolios, and associations. A host of centers have already begun to offer initiatives supporting faculty research, scholarship, and creative activities; career planning and professional development; and even leadership. Such sensitivity to faculty well-being is likewise central.

Biographies

Cara Meixner is former Executive Director of the Center for Faculty Innovation and Professor of Graduate Psychology at James Madison University (USA). Cara applies inclusive inquiry methods to studies of disability, mental health, and educational development. She is a co-editor of Reconceptualizing Faculty Development in Service-Learning/Community Engagement (Stylus, 2018).

Melissa Altman is a Doctoral Candidate in Strategic Leadership Studies and Instructor of Statistics at James Madison University (USA). Her research centers on power, privilege, and difference in the postsecondary context, with a focus on the contributions and experiences of non-tenure track faculty members.

Megan Good is an Assistant Professor in Graduate Psychology and Associate Assessment Specialist in the Center for Assessment and Research Studies at James Madison University (USA). She earned her PhD in Assessment and Measurement from James Madison University, and her research focuses on the intersection of assessment and educational development, particularly in the context of learning improvement.

Elizabeth Ben Ward is an Assistant Professor of Statistics at James Madison University (USA). Her areas of research expertise include Bayesian statistical analysis, statistical graphics, and statistics education.

Acknowledgments

We wish to acknowledge the contributions of motivation researchers Dr. Kenn Barron and Dr. Chris Hulleman, whose extraordinary theoretical and empirical work—and guidance with instrument development—is appreciated.

References

Barron, K. E. (2018, June). Student motivation [Paper presentation]. Annual meeting of jmUDESIGN, Harrisonburg, VA, United States.

Barron, K. E., & Hulleman, C. S. (2015). Expectancy-value-cost model of motivation. Psychology, 84, 261–271.

Bollen, K. A., & Hoyle, R. H. (1990). Perceived cohesion: A conceptual and empirical examination. Social Forces, 69(2), 479–504. https://doi.org/10.2307/2579670

Bunnell, S. L., & Bernstein, D. J. (2012). Overcoming some threshold concepts in scholarly teaching. Journal of Faculty Development, 26(3), 14–18.

Carpenter, R., Morin, C., Sweet, C., & Blythe, H. (2017). The role of faculty development in teaching and learning through high-impact educational practices. Journal of Faculty Development, 31(1), 7–12.

Chism, N. V. N., Holley, M., & Harris, C. J. (2012). Researching the impact of educational development: Basis for informed practice. To Improve the Academy, 31(1), 385–400.

Eccles, J. S., Adler, T. F., Futterman, R., Goff, S. B., Kaczala, C. M., Meece, J. L., & Midgley, C. (1983). Expectancies, values, and academic behaviors. In J. T. Spence (Ed.), Achievement and achievement motives (pp. 75–146). Freeman.

Fernández, J., Mateo, M. A., & Muñiz, J. (1995). Evaluation of the academic setting in Spain. European Journal of Psychological Assessment, 11(2), 133–137. https://doi.org/10.1027/1015-5759.11.2.133

Fink, L. D. (2013). Creating significant learning experiences: An integrated approach to designing college courses (2nd ed.). Jossey-Bass.

Fisher, R., Smith, K., Finney, S., & Pinder, K. (2014). The importance of implementation fidelity data for evaluating program effectiveness. About Campus, 19(5), 28–32.

Flake, J. K., Barron, K. E., Hulleman, C. S., McCoach, D. B., & Welsh, M. E. (2015). Measuring cost: The forgotten component of expectancy-value theory. Contemporary Educational Psychology, 41, 232–244. https://doi.org/10.1016/j.cedpsych.2015.03.002

Gravett, E. O., & Broscheid, A. (2018). Models and genres of faculty development. In B. Berkey, C. Meixner, P. M. Green, & E. A. Eddins (Eds.), Reconceptualizing faculty development in service-learning/community engagement: Exploring intersections, frameworks, and models of practice (pp. 85–106). Stylus.

Hansen, E. J. (2011). Idea-based learning: A course design process to promote conceptual understanding. Stylus.

Hulleman, C. S., & Barron, K. E. (2012). Measures of teaching expectancy, value, and cost [Unpublished instrument]. James Madison University.

Hulleman, C. S., & Barron, K. E. (2015). Motivation interventions in education: Bridging theory, research, and practice. In L. Corno & E. M. Anderman (Eds.), Handbook of educational psychology (3rd ed., pp. 160–171). Routledge.

Hulleman, C. S., & Schiefele, U. (2010). Measures of teaching interest and values [Unpublished instrument]. James Madison University.

Hulleman, C. S., & Springer, M. (2010). Measures of teacher motivation [Unpublished instrument]. James Madison University.

Hurney, C. A., Brantmeier, E. J., Good, M. R., Harrison, D., & Meixner, C. (2016). The faculty learning outcome assessment framework. Journal of Faculty Development, 30(2), 69–77.

Kiely, R., & Sexsmith, R. (2018). Innovative considerations in faculty development and service-learning and community engagement: New perspectives for the future. In B. Berkey, C. Meixner, P. M. Green, & E. A. Eddins (Eds.), Reconceptualizing faculty development in service-learning/community engagement: Exploring intersections, frameworks, and models of practice (pp. 283–314). Stylus.

Kilgo, C. A., Ezell Sheets, J. K., & Pascarella, E. T. (2015). The link between high-impact practices and student learning: Some longitudinal evidence. Higher Education, 69(4), 509–525. https://doi.org/10.1007/s10734-014-9788-z

King, C., & Felten, P. (2012). Threshold concepts in educational development: An introduction. Journal of Faculty Development, 26(3), 5–7.

Kreber, C., & Brook, P. (2001). Impact evaluation of educational development programmes. International Journal for Academic Development, 6(2), 96–108. https://doi.org/10.1080/13601440110090749

Kuh, G. D. (2008). High-impact educational practices: What they are, who has access to them, and why they matter. Association of American Colleges and Universities.

Kuh, G. D., O’Donnell, K., & Reed, S. (2013). Ensuring quality and taking high-impact practices to scale. Association of American Colleges and Universities.

Lowenthal, P. R., Wray, M. L., Bates, B., Switzer, T., & Stevens, E. (2013). Examining faculty motivation to participate in faculty development. International Journal of University Teaching and Faculty Development, 3(3), 149–164.

Meixner, C. (2010). Reconciling self, servant leadership, and learning: The Journey to the East as locus for reflection and transformation. Journal of Leadership Studies, 3(4), 81–85. https://doi.org/10.1002/jls.20142

Meixner, C. (2013). Locating self by serving others: A journey to inner wisdom. In J. Lin, R. L. Oxford, & E. J. Brantmeier (Eds.), Re-envisioning higher education: Embodied pathways to wisdom and social transformation. Information Age Publishing.

Meyer, J., & Land, R. (Eds.). (2006). Overcoming barriers to student understanding: Threshold concepts and troublesome knowledge. Routledge.

Palmer, M. S., Gravett, E. O., & LaFleur, J. (2018). Measuring transparency: A learning-focused assignment rubric. To Improve the Academy, 37(2), 173–187. https://doi.org/10.1002/tia2.20083

Palmer, M. S., Streifer, A. C., & Duncan, S. W. (2016). Systematic assessment of a high impact course design institute. To Improve the Academy, 35(2). https://doi.org/10.3998/tia.17063888.0035.203

Schiefele, U. (2009). Situational and individual interest. In K. R. Wentzel & A. Wigfield (Eds.), Handbook of motivation at school (pp. 197–222). Routledge.

Schiefele, U., Krapp, A., Wild, K.-P., & Winteler, A. (1993). Der “Fragebogen zum Studieninteresse.” Diagnostica, 39(4), 335–351.

Schiefele, U., Streblow, L., & Retelsdorf, J. (2013). Dimensions of teacher interest and their relations to occupational well-being and instructional practices. Journal for Educational Research Online, 5(1), 7–37.

Schraw, G., & Dennison, R. S. (1994). Assessing metacognitive awareness. Contemporary Educational Psychology, 19(4), 460–475. https://doi.org/10.1006/ceps.1994.1033

Smith, K., Finney, S., & Fulcher, K. (2019). Connecting assessment practices with curricula and pedagogy via implementation fidelity data. Assessment & Evaluation in Higher Education, 44(2), 263–282. https://doi.org/10.1080/02602938.2018.1496321

Trapnell, P. D., & Campbell, J. D. (1999). Rumination-Reflection Questionnaire [Database record]. PsycTESTS. https://doi.org/10.1037/t07094-000

Wigfield, A., Eccles, J. S., Schiefele, U., Roeser, R. W., & Davis-Kean, P. (2007). Development of achievement motivation. In N. Eisenberg (Ed.), Handbook of child psychology (Vol. 3). John Wiley & Sons. https://doi.org/10.1002/9780470147658.chpsy0315

Wiggins, G., & McTighe, J. (2005). Understanding by design (2nd ed.). Association for Supervision and Curriculum Development.

Winkelmes, M.-A., Boye, A., & Tapp, S. (2019). Transparent design in higher education teaching and leadership: A guide to implementing the transparency framework institution-wide to improve learning and retention. Stylus.

Appendix

You will be asked to read a series of statements about teaching and then to indicate your level of agreement with each statement using the 8-point response scale below. After reading each item, select the number corresponding to your level of agreement. For example, if a statement completely represents how you feel about teaching, select “Completely Agree.” If you agree strongly but not completely, select “Strongly Agree.” On the other hand, if you only agree slightly with a statement, select “Slightly Agree.” There are no right or wrong answers; just answer each item honestly based on how you currently feel about teaching.

| Subscale | Statement |
|---|---|
| Costs | The current expectations and demands of being a teacher are too high |
| Costs | Teaching requires me to make sacrifices in other areas of my life |
| Costs | I find teaching to be too stressful |
| Costs | My job requires too much time to do it well |
| Costs | Teaching requires too much work |
| Costs | I am unable to put in the time needed to do my job well |
| Expectancy | I am confident that I can get students to learn |
| Expectancy | I am confident that I can get my students to perform well |
| Expectancy | I am confident that I can teach my subject matter well |
| Expectancy | I am confident that I am an effective teacher |
| Expectancy | I believe that I do my job well |
| Expectancy | I believe my teaching makes a difference on students’ growth and development |
| Pedagogical Interest | I really enjoy thinking about ways to become a better teacher |
| Pedagogical Interest | I am always interested in learning new ways on how to become a better teacher |
| Pedagogical Interest | I like learning about new teaching methods |
| Pedagogical Interest | I like thinking about how to make my teaching more effective |
| Pedagogical Interest | It’s important to me to ensure that my teaching methods are up to date |
| Student Interest | I am interested in teaching because I get to help students grow into successful adults |
| Student Interest | The most interesting aspect of being a teacher is seeing students develop over time |
| Student Interest | I am particularly interested in helping my students develop as people |
| Student Interest | Working with students is one of the most enjoyable aspects of teaching |
| Subject-Matter Interest | I really enjoy the topics that I get to teach |
| Subject-Matter Interest | The topics I get to teach are personally important to me |
| Subject-Matter Interest | I like learning about the topics that I teach |
| Subject-Matter Interest | The topics I teach usually put me in a good mood |
| Subject-Matter Interest | I find the topics that I get to teach interesting |
| Value | I am glad I chose teaching as my profession |
| Value | I love being a teacher |
| Value | I really value my job as a teacher |
| Value | Teaching is a worthwhile career |